AZ - 400 DevOps Engineer 17

The broad range of topic 17

Scenario 17

Chap -1: Developing an Actionable Alerting Strategy 18

SRE - Site Reliability Engineering 18

What is reliability: 18

Measuring reliability 18

What is SRE 18

Why do we need SRE 19

Key concepts 19

SRE vs. DevOps 20

Summary 20

Exploring metrics charts and dashboards 21

Intro to Azure monitoring 21

Azure Monitor metrics overview 21

Demo 21

1. Create a chart to view metrics 22

2. Add additional metrics and resources 22

3. Add charts to dashboards 22

Summary 22

Implementing application Health checks 22

Scenario 22

What is Application Insight 23

Availability 23

Demo 23

1. Configure URL ping test 24

2. Configure health alert 24

Summary 24

Discovering service and resource health alerts 24

Azure status 24

Service health 24

Resource health 25

Demo 25

1. Azure status page - https://status.azure.com/en-ca/status 26

2. View service health pages and create alerts 26

3. View resource health and create alerts 26

Summary 27

Self-healing alerts 27

Scenario 27

Vertical vs horizontal scaling 27

App Service vs. VMSS(Vitual machine scale set) 28

Autoscaling process 28

Demo 28

1. Configure autoscale notifications for App service and VMSS 28

Summary 29

Chap - 2: Designing Failure Prediction Strategy 29

Introduction 29

Exploring System load and failure conditions 29

Everything fail sometimes 29

What is failure mode analysis? 30

How to plan for failure 30

How can we reduce failure? 30

Performance Testing 31

Summary 31

Understanding failure prediction 31

You cannot prepare for everything 31

What is predictive maintenance PdM 31

How Microsoft used to do PdM 32

How does Microsoft do PdM now 33

Summary 33

Understanding baseline metrics 34

Scenario 34

Why create baseline 34

How to create baseline 34

Demo 35

1. Explore Azure monitor insight 36

Summary 36

Discovering Application Insight smart detection and dynamic threshold 36

Scenario 36

Dynamic threshold advantages 36

Application Insights smart detection 36

Smart detection categories 36

Demo 37

1. Create an alert which dynamic thresholds 37

2. Create smart detection alerts 37

Summary 38

Summary 38

Chap - 3: Designing and Implementing Health Check 38

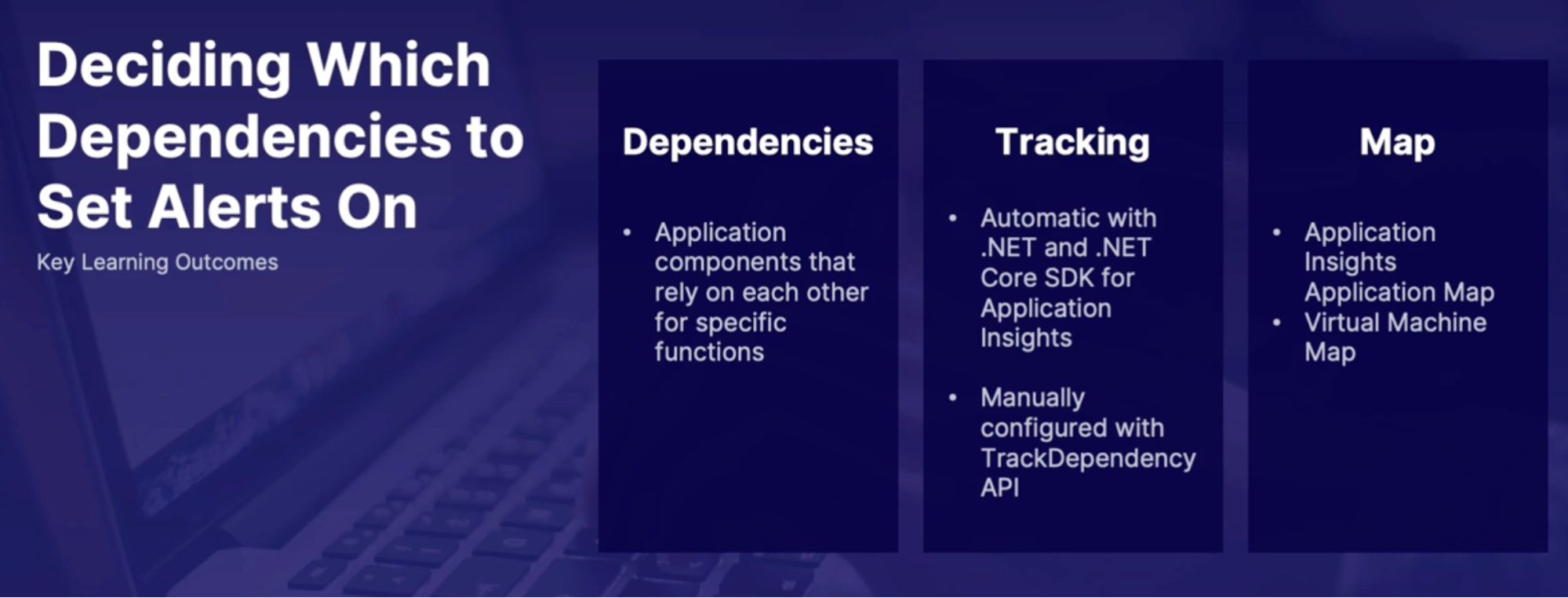

Deciding which dependencies to set alerts on 38

What is a dependency? 38

Application Insights dependency tracking 39

Which dependencies are tracked in Application Insights 39

Where can I find dependency data 40

Application dependencies on virtual machines 40

Demo exploring dependencies 40

Summary 41

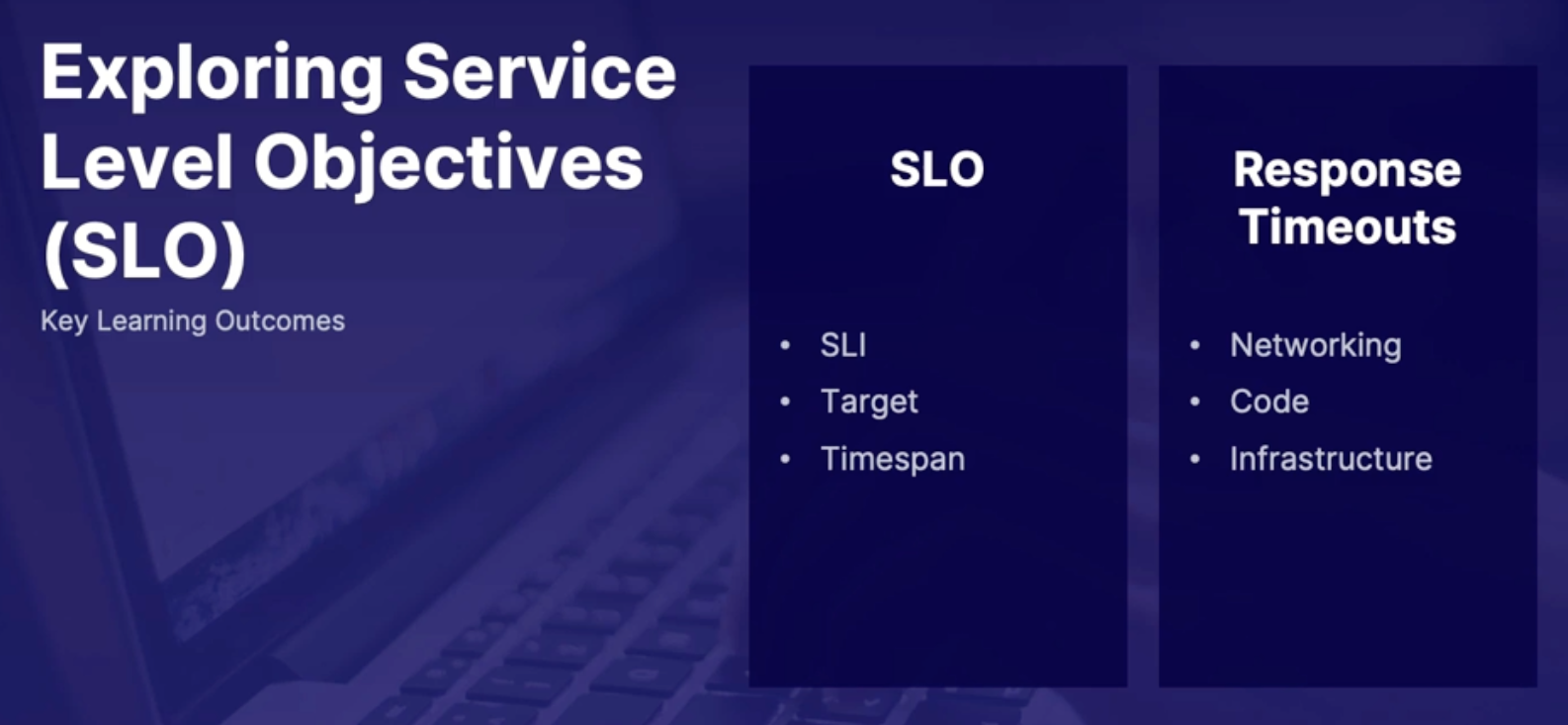

Exploring service level objectives SLO 42

What makes an SLO? 42

How is an SLO helpful? 42

SLO’s and response time-outs 42

Demo: Configure Azure SQL to meet SLO‘s 43

Call outs 44

Summary 44

Understanding partial health situation 44

Health monitoring 44

Health monitoring data 44

Telemetry correlation 45

Application logs best practices 45

Endpoint health monitoring 46

Summary 46

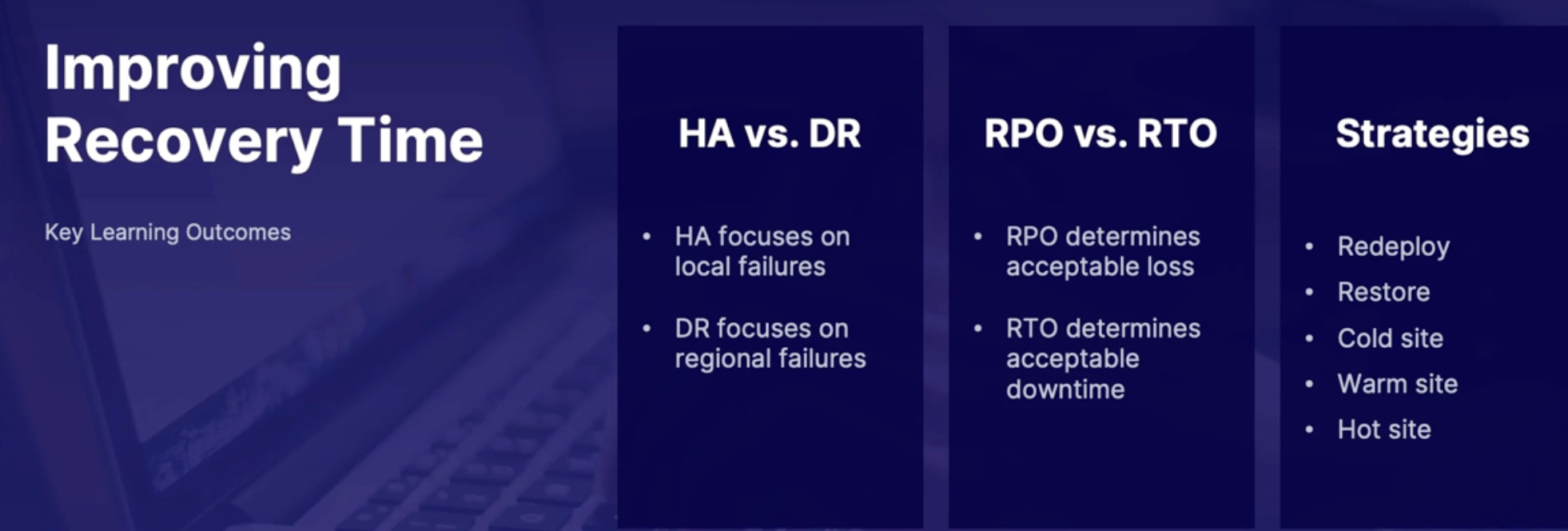

Improving recovery time 46

Why do we need a recovery plan 46

High availability(HA) Vs disaster recovery(DR) 47

Recovery Point Objective(RPO) Vs Recovery Time Objective(RTO) 47

Business continuity strategies 47

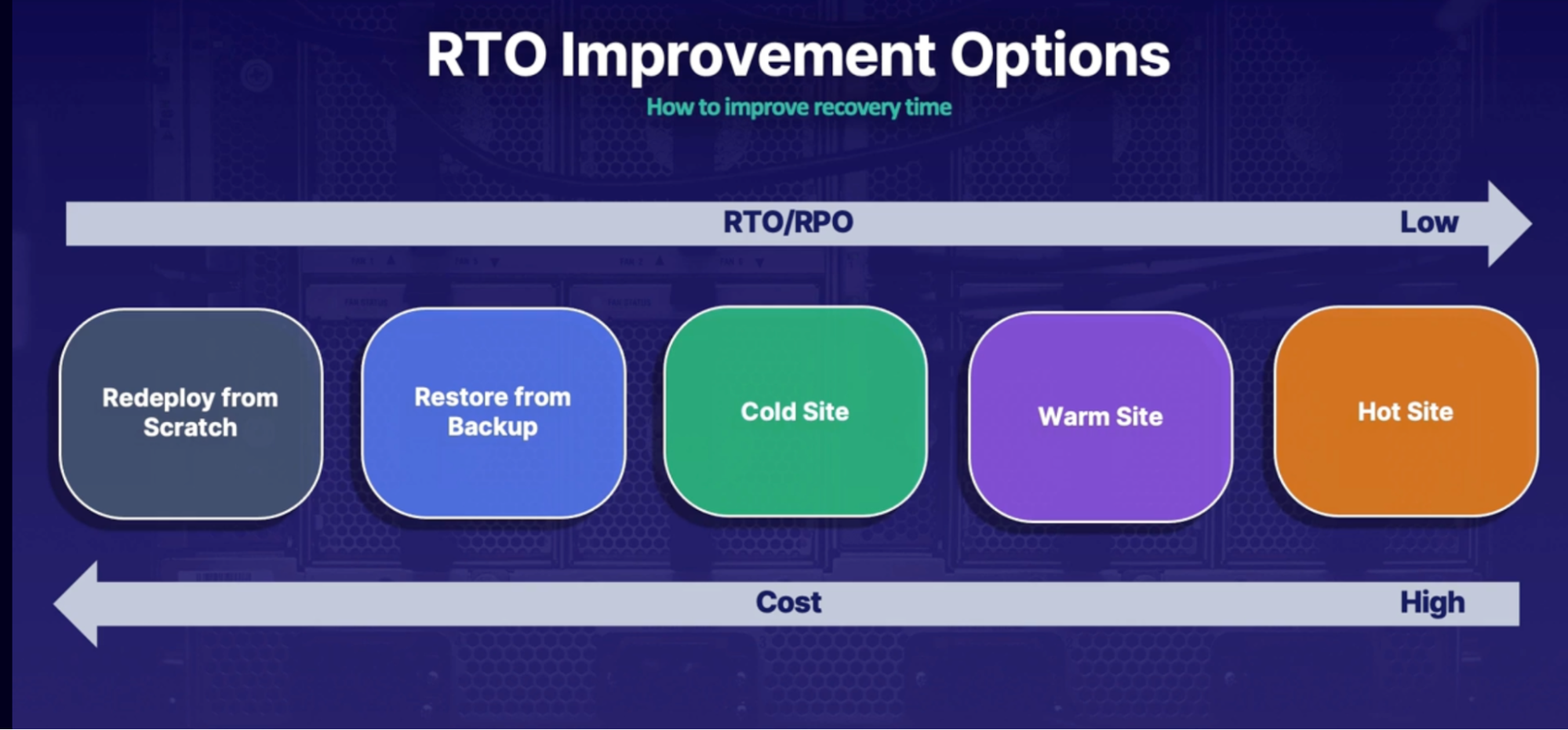

RTO improvement options 48

Azure services that help reduce RTO 50

Summary 50

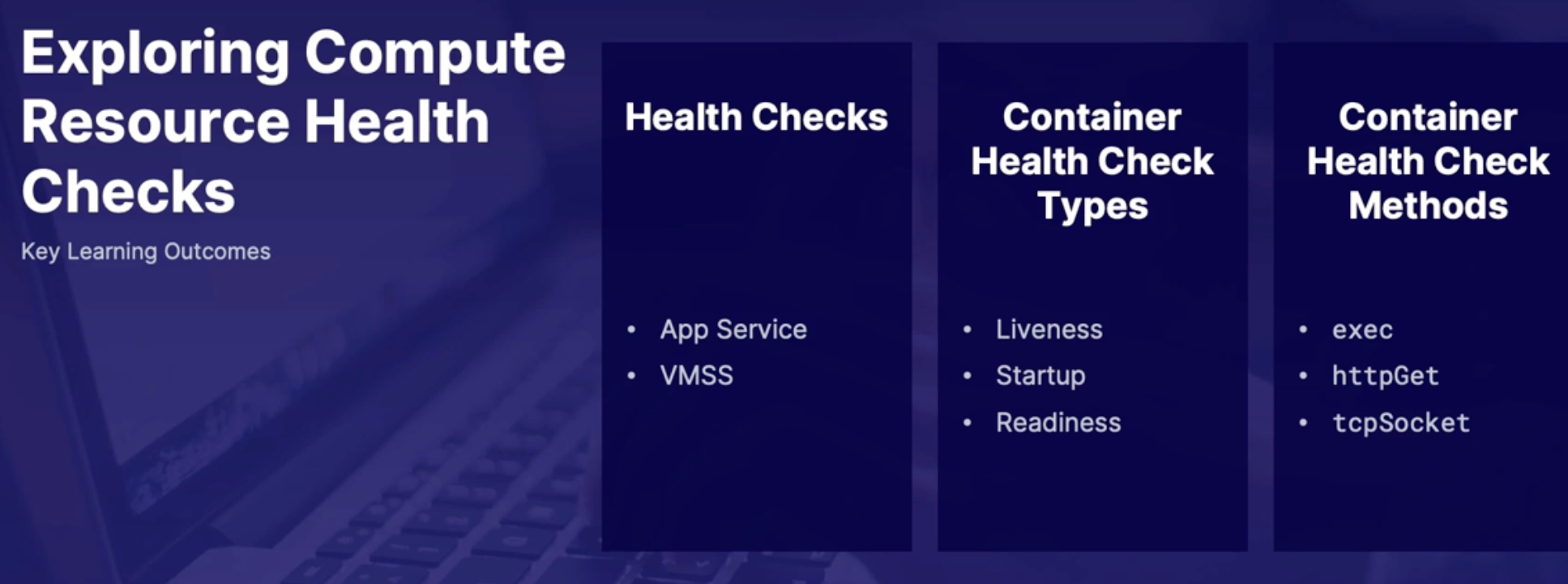

Exploring computer resource health checks 51

App service Health checks 51

Customize Web App health checks 51

VMSS Health extension 51

Container health check types 52

Liveness Check Example 52

Startup Check Example 53

Readiness Check example 53

Summary 54

Summary 54

Chap - 4: Developing a modern Source Control Strategy 56

Introduction to source control 56

What is source control 56

Source control types 56

Which one to use? 57

Summary 57

Exploring Azure repos 57

Azure repos at a glance 57

Setting up Azure Repos 57

Import options 57

Supported GIT features 57

Summary 58

Azure Repos demo and Git workflow 58

Repository sharing with submodule 58

What are submodules 58

How submodule works 59

Submodules in Azure DevOps 59

Demo: adding submodule to repo 59

Summary 60

Summary 60

lab: authenticate to Azure repose using an authentication token 61

Chap - 5: Planning and implementing branching strategies for the source code 62

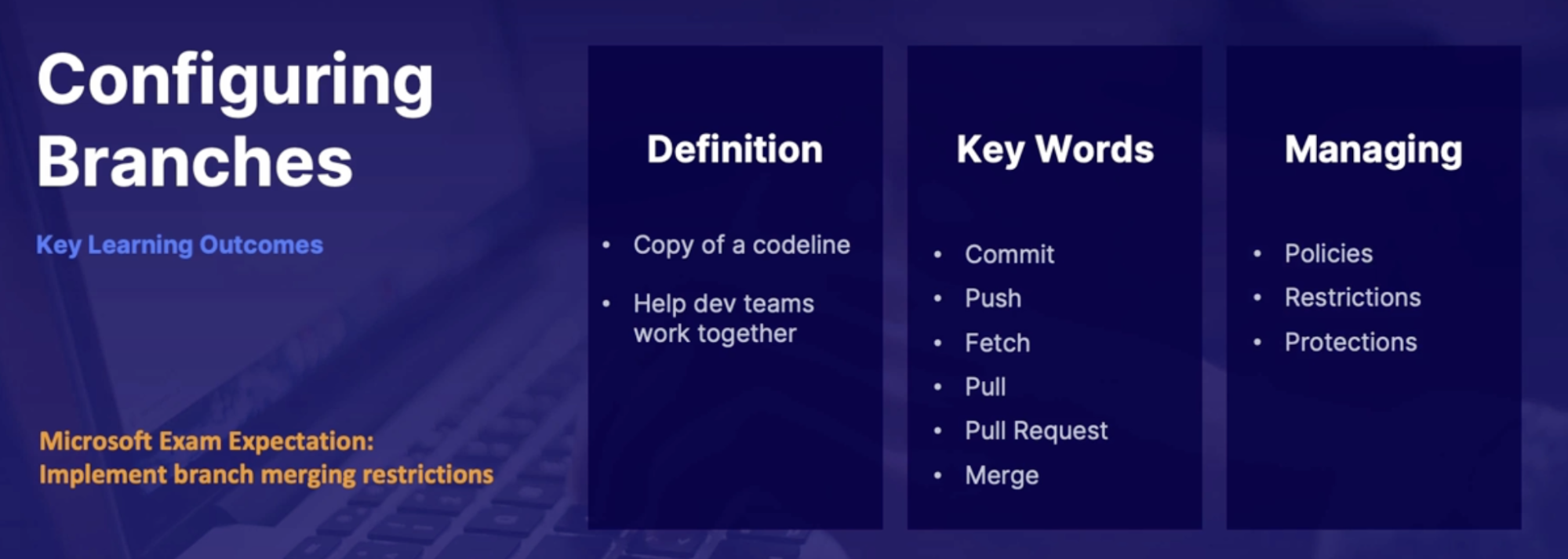

Configure branches 62

What is a branch 62

Branch management 62

Summary 63

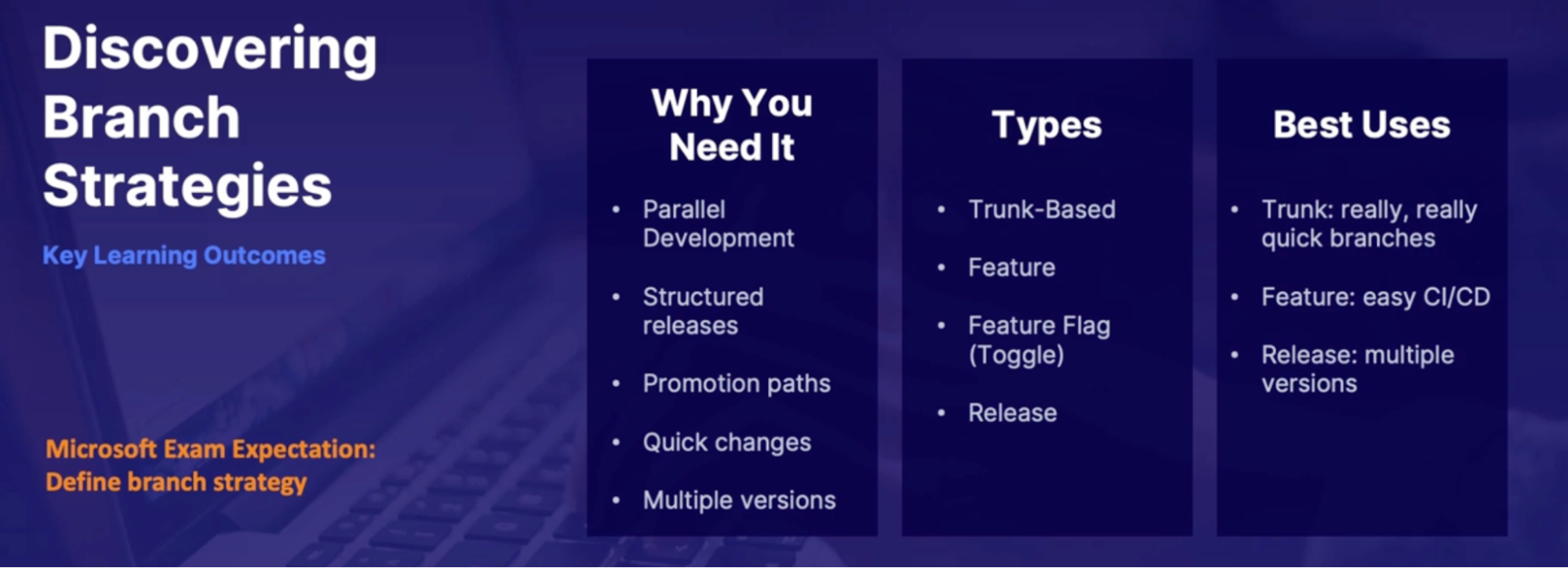

Discovering Branch Strategies 63

Branch strategies 63

● Trunk-based branching 63

● Feature(task) branching 64

● Feature flag branching 64

● Release branching 64

Summary 64

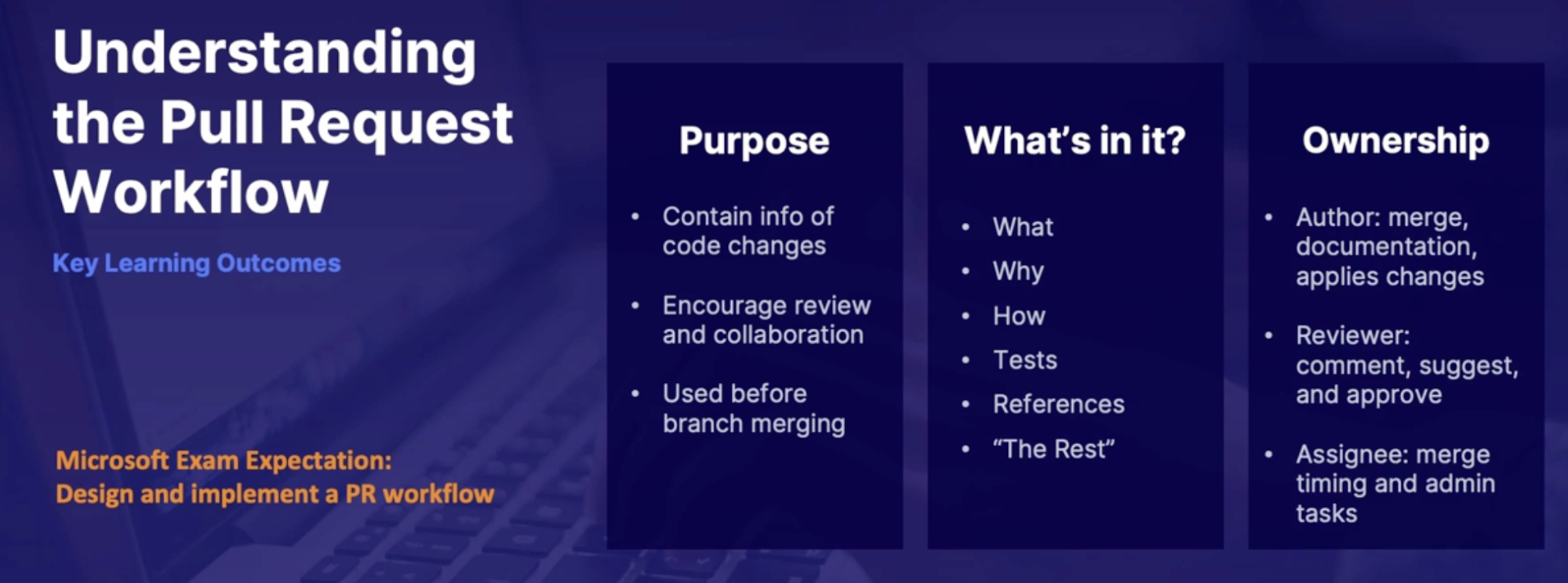

Pull request workflow 64

What is pull request 65

Goals 65

What’s in the pull request 65

Pull request workflow 65

Summary 65

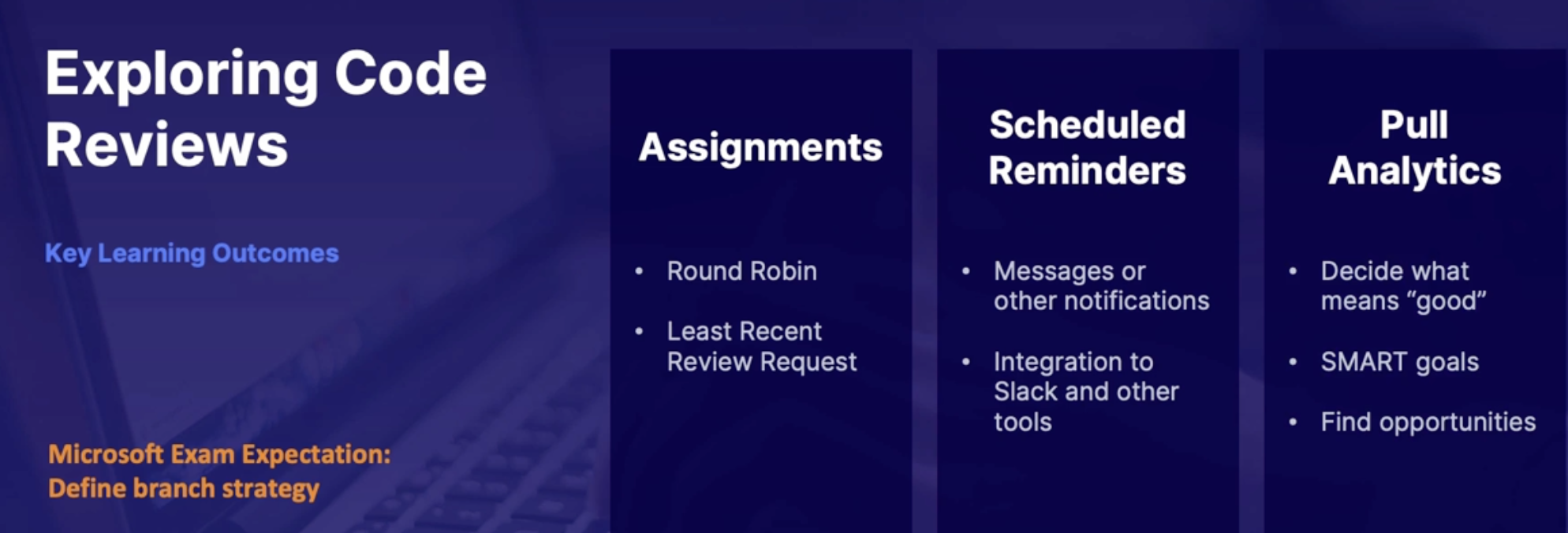

Code reviews 65

How can you make code reviews efficient 66

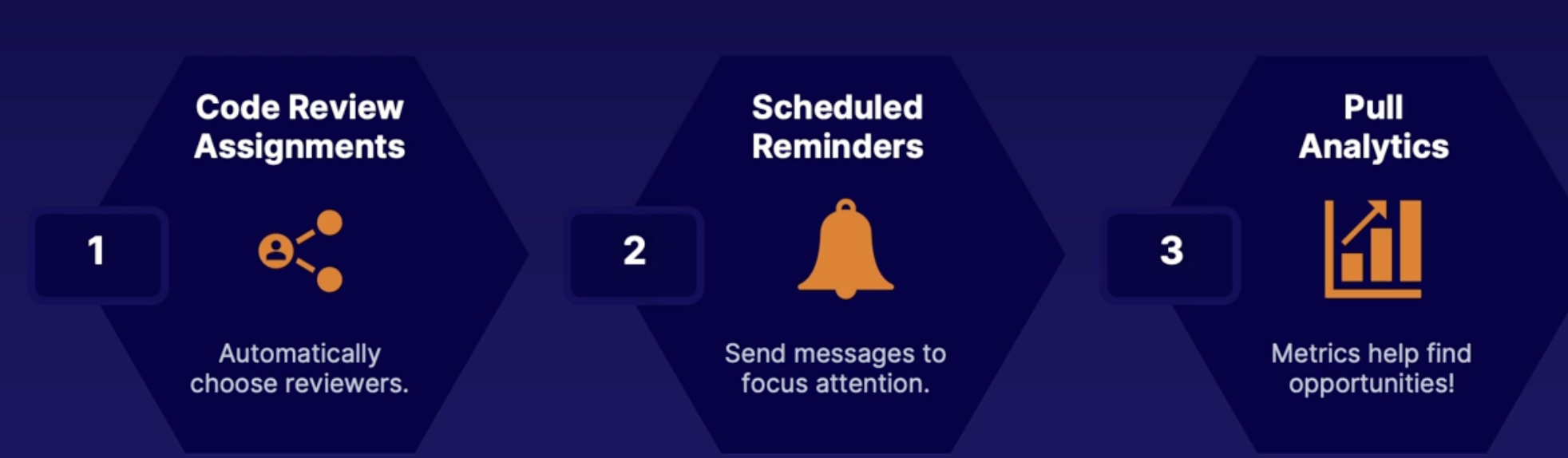

1. Code review assignments 66

2. Schedule reminders 66

3. Pull analytics 66

Demo 66

Summary 66

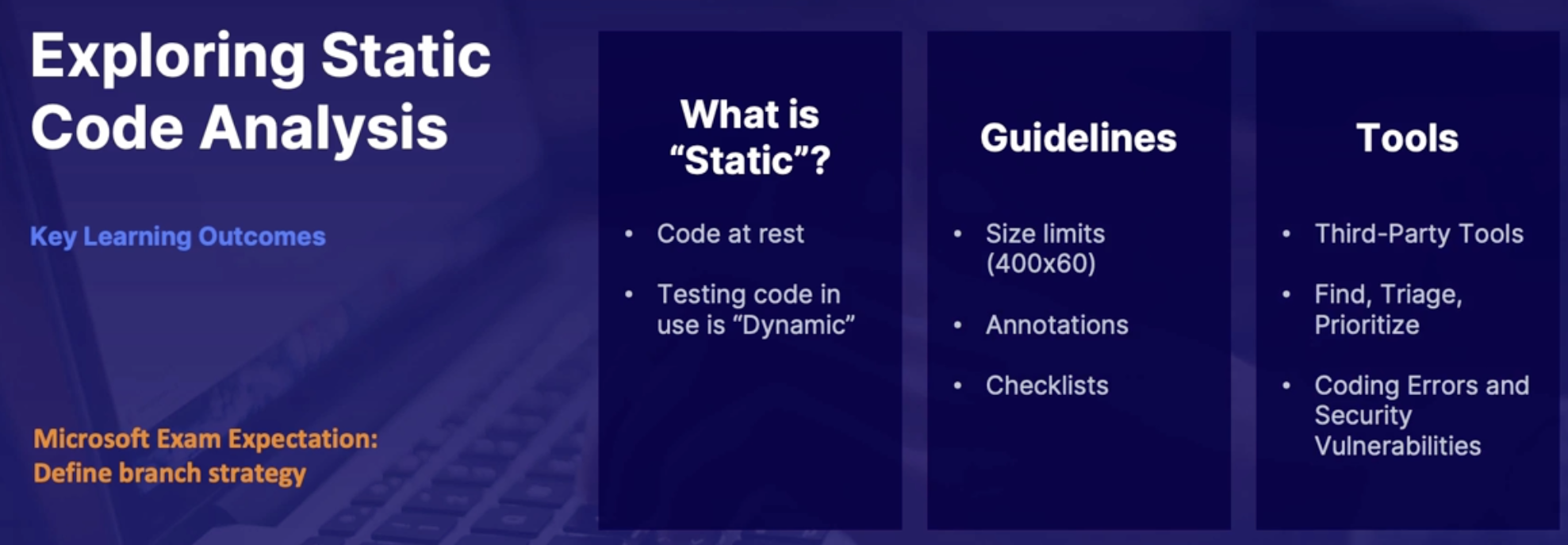

Static code analysis 66

Guidelines for effective code review 66

Code quality tools 67

Demo 67

Summary 67

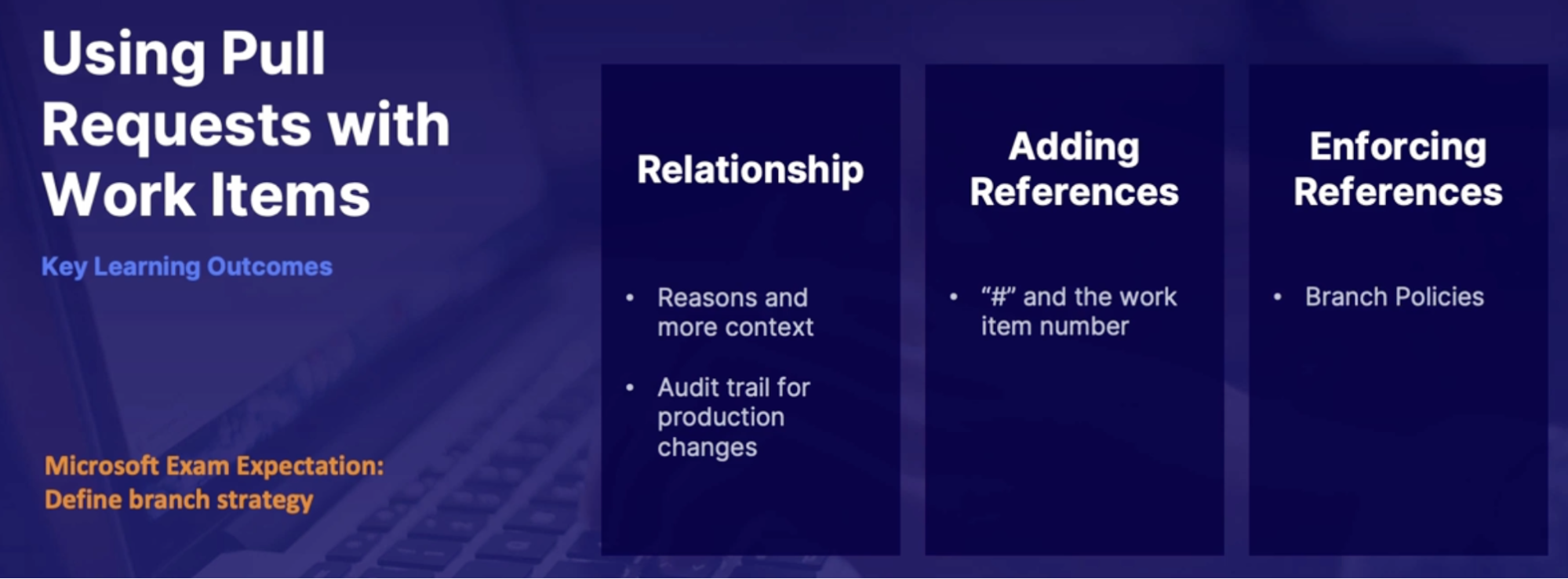

Useful requests with work items 67

The importance of relating work items 67

Demo 67

Summary 67

Lab: Configure branch policies in Azure Repos 67

Summary 68

Chap - 6: Configuring Repositories 70

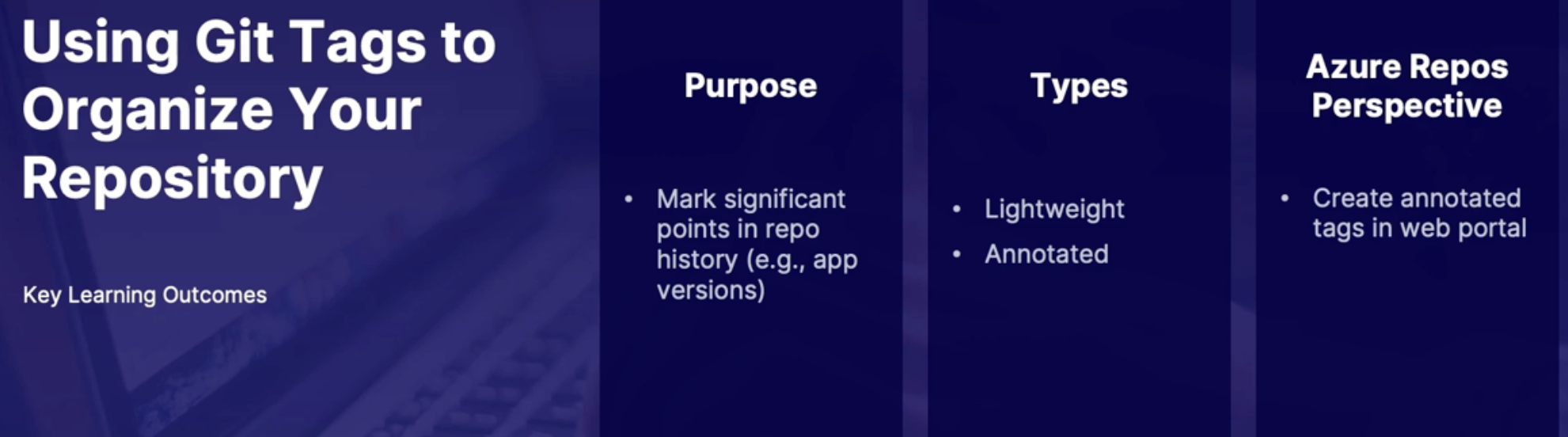

Using Git tags to organize your repository 70

What are Git tags, and why do we care? 70

Tag types and how they work 70

Demo + Tags in Azure repos 71

Summary 71

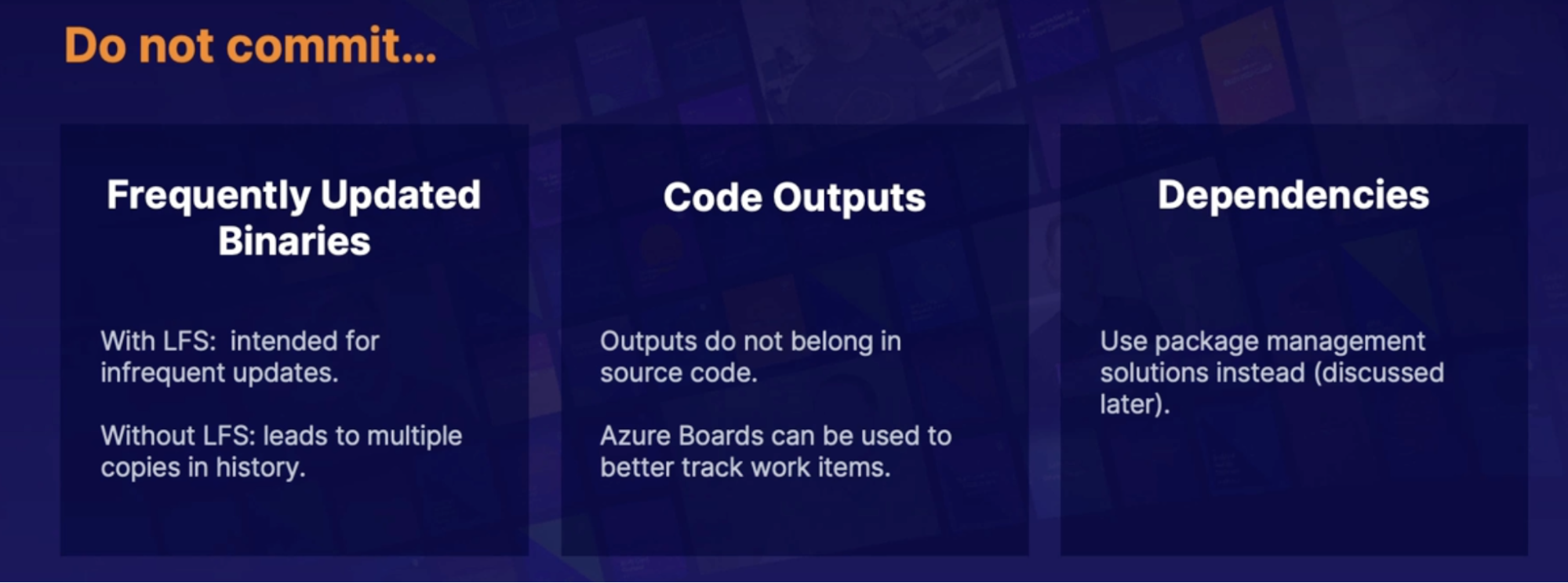

Handling large repositories 71

Challenges of Large repos 71

Working with Git large file storage LFS 71

Best practices for working with large files 72

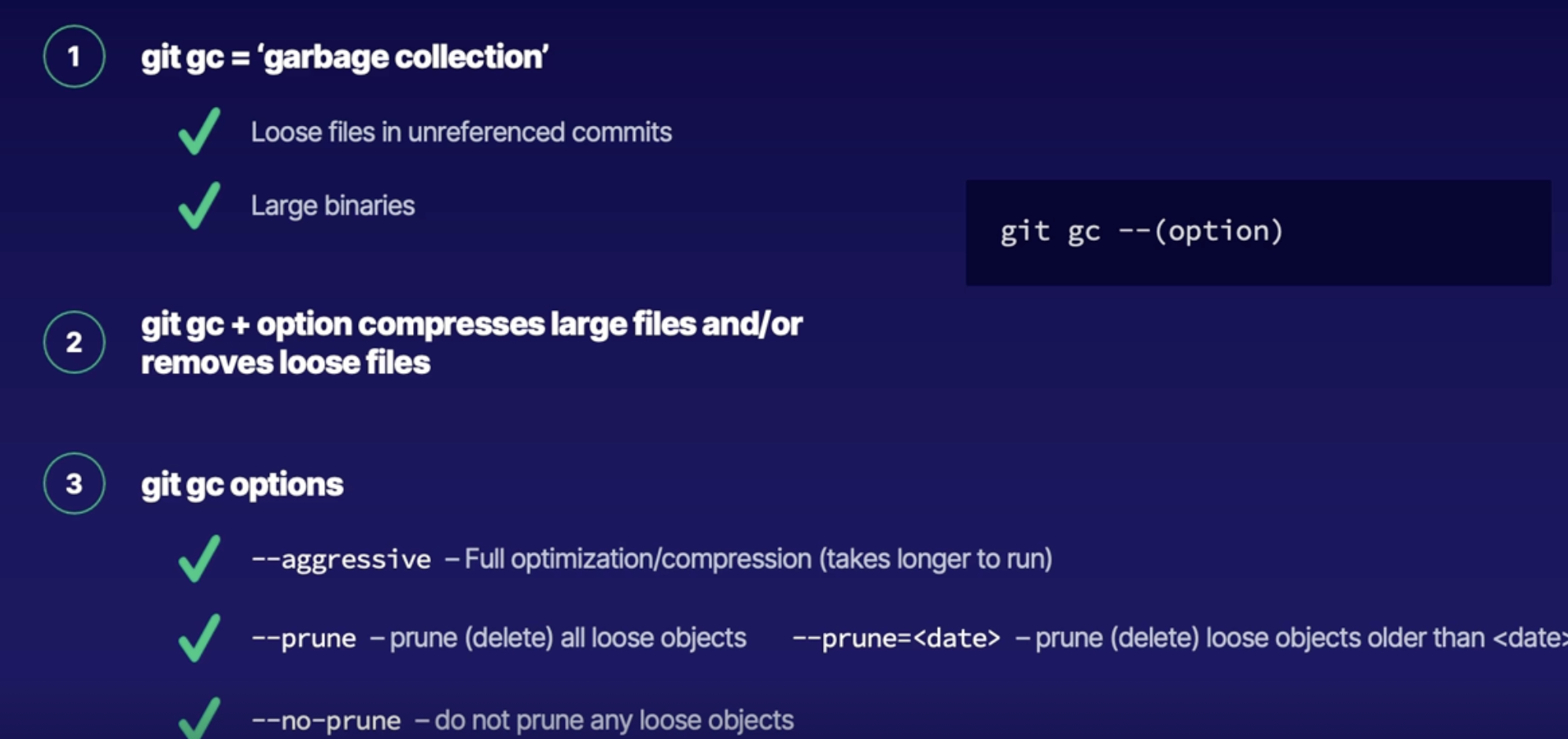

Clean up with git gc 72

Summary 72

Exploring repository permissions 73

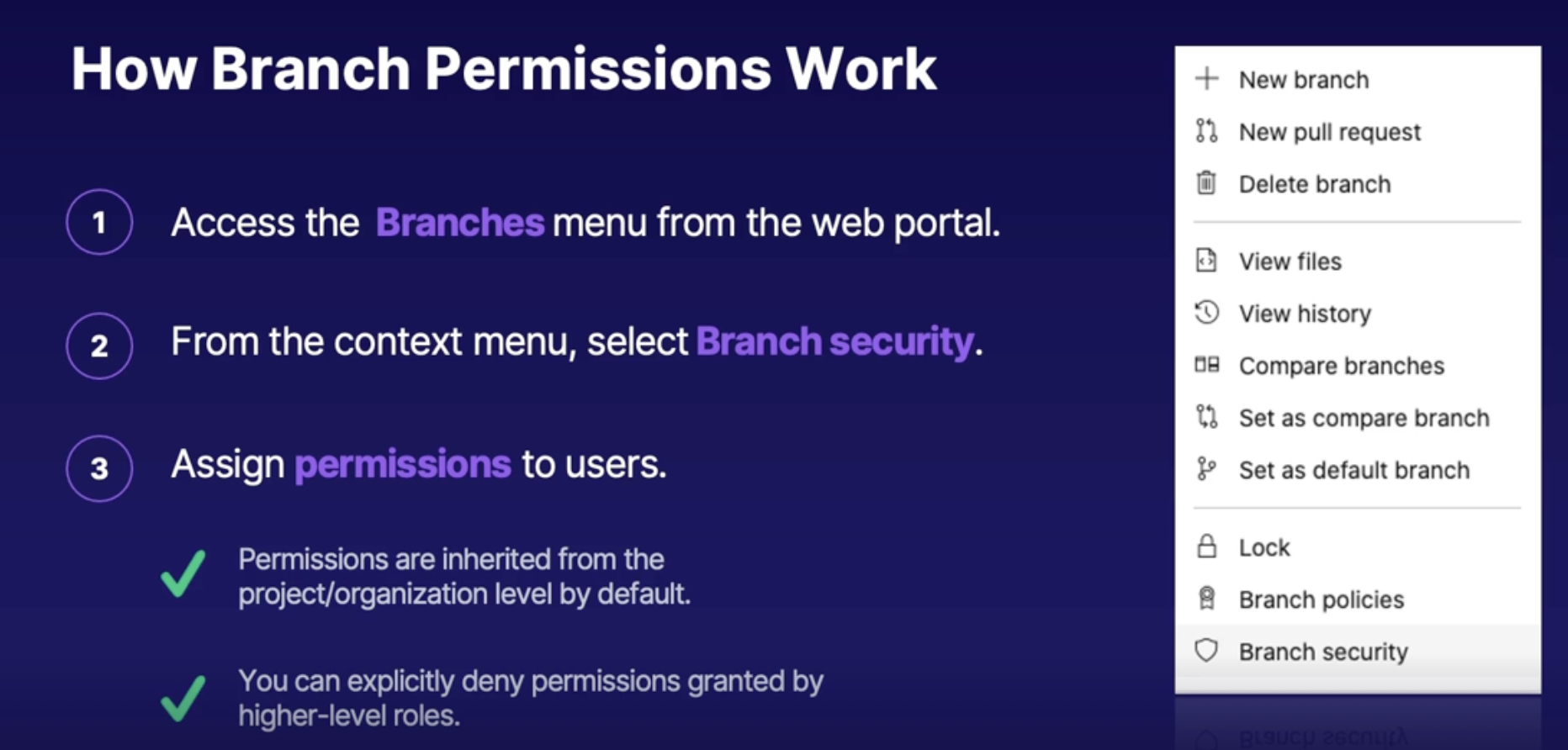

Branch permissions in Azure repos 73

How branch permissions work 73

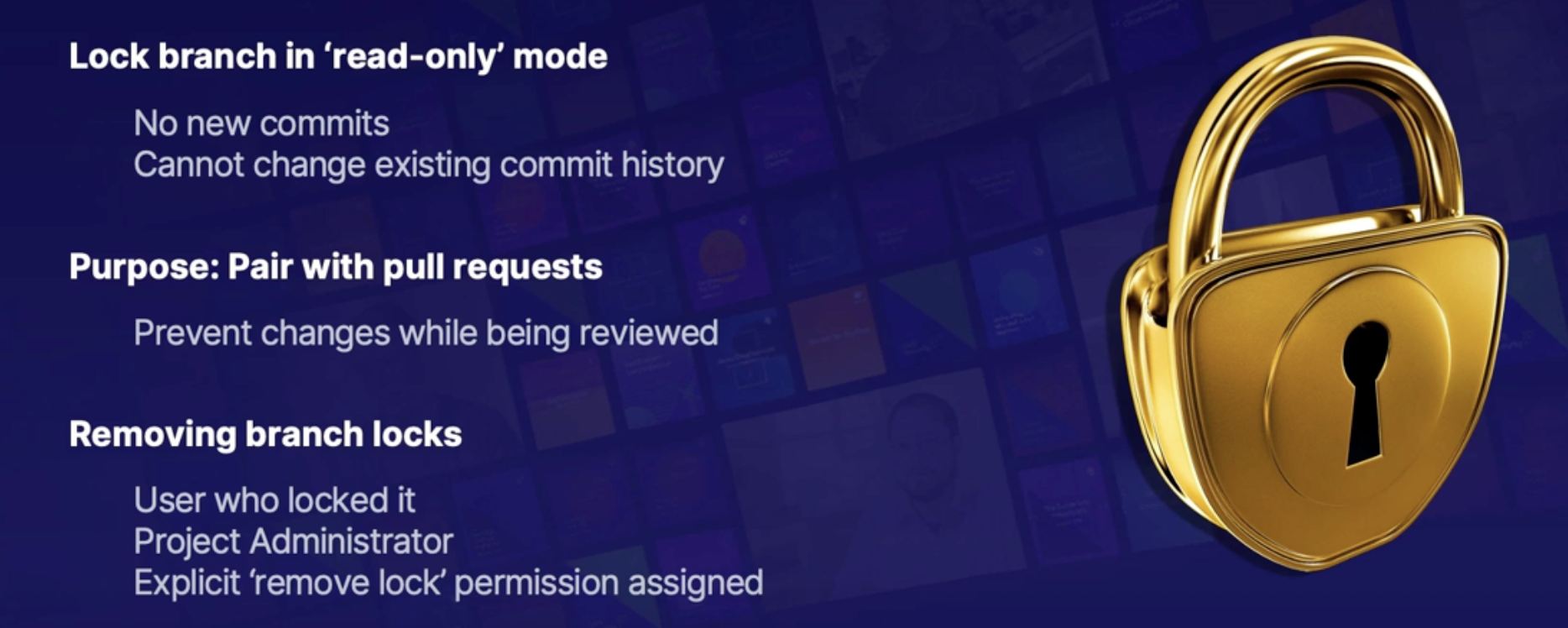

Branch locks 73

Demo: working with branch permissions/locks 74

Summary 74

Removing repository data 74

Challenges of removing Git Data 74

Unwanted file states 74

File removal scenario 74

Demo: Removing Unwanted files from Azure repo 75

Summary 75

Recovering repository data 75

Recovery scenarios 75

Revert to previous commit 76

Restore deleted branch from Azure repos 76

Restore deleted Azure repository 76

Demo: recover deleted branch 76

Summary 76

Summary 76

Chap - 7: Integrating source control with tools 78

Connecting to GitHub using Azure active directory 78

Advantage of AAD integration 78

Requirements for connecting to AAD 78

Azure AD SSO configuration 78

GitHub enterprise configuration 79

Summary 79

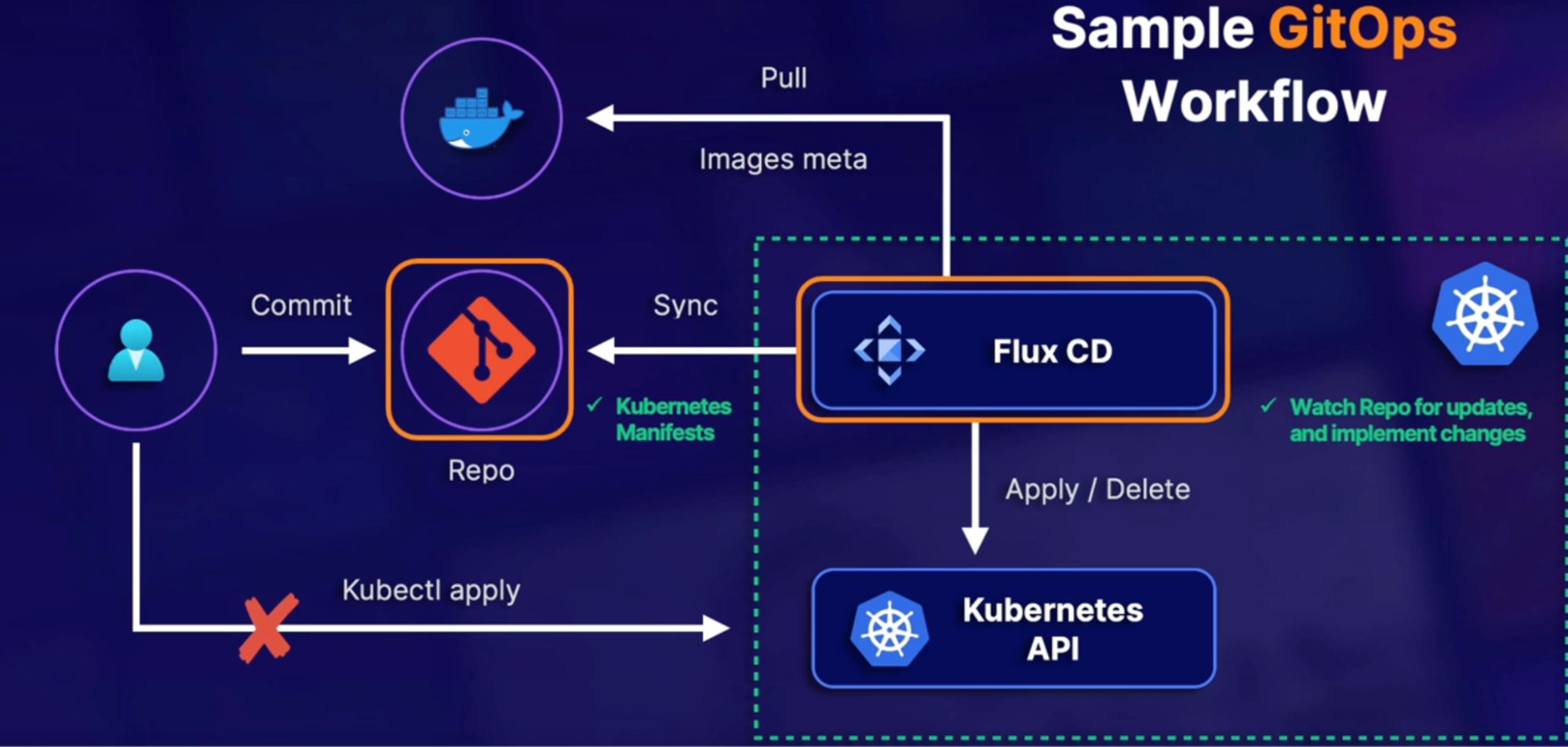

Introduction to GitOps 79

What is GitOps 79

Sample GitOps workflow 79

Exam perspective 80

Summary 80

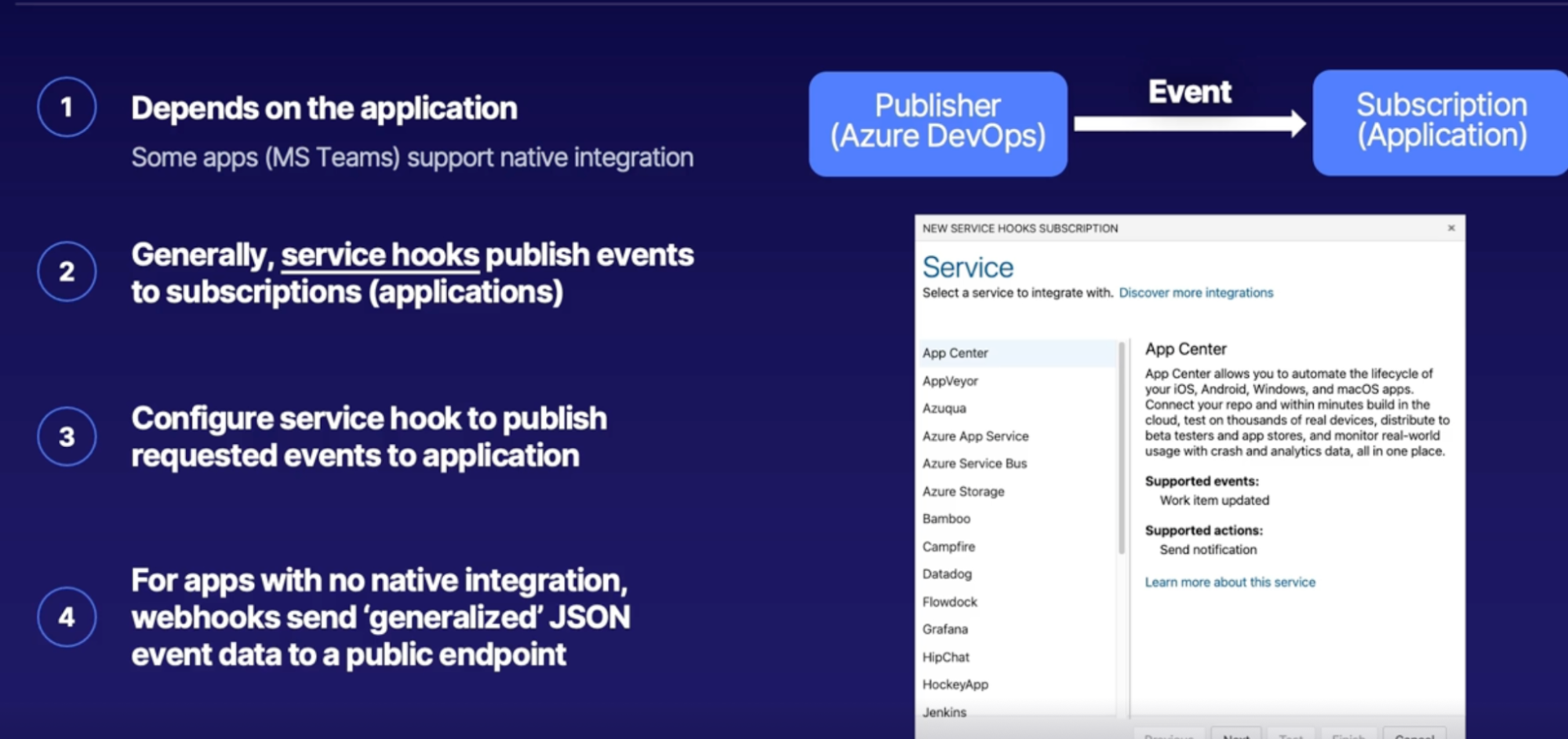

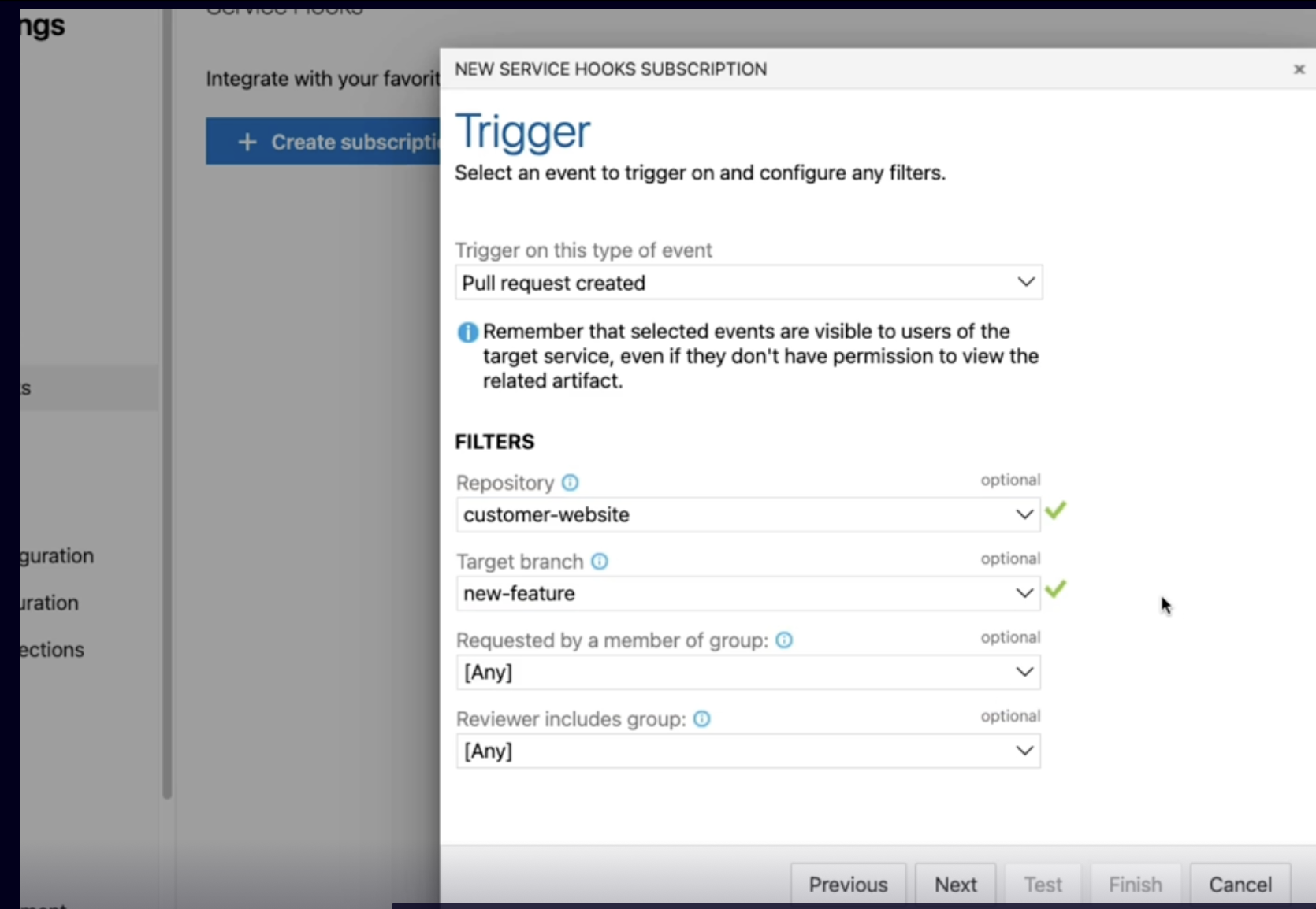

Introduction to ChatOps 80

What is ChatOps 81

How to connect Chat apps to Azure DevOps 81

Demo 81

Summary 82

Incorporating Changelogs 82

What is GIT Changelogs? 82

Manually creating/viewing Changelogs 83

Automation options 83

Demo viewing Changelogs via command line 83

Summary 83

Summary 83

Chap - 8: Implementing a build strategy 84

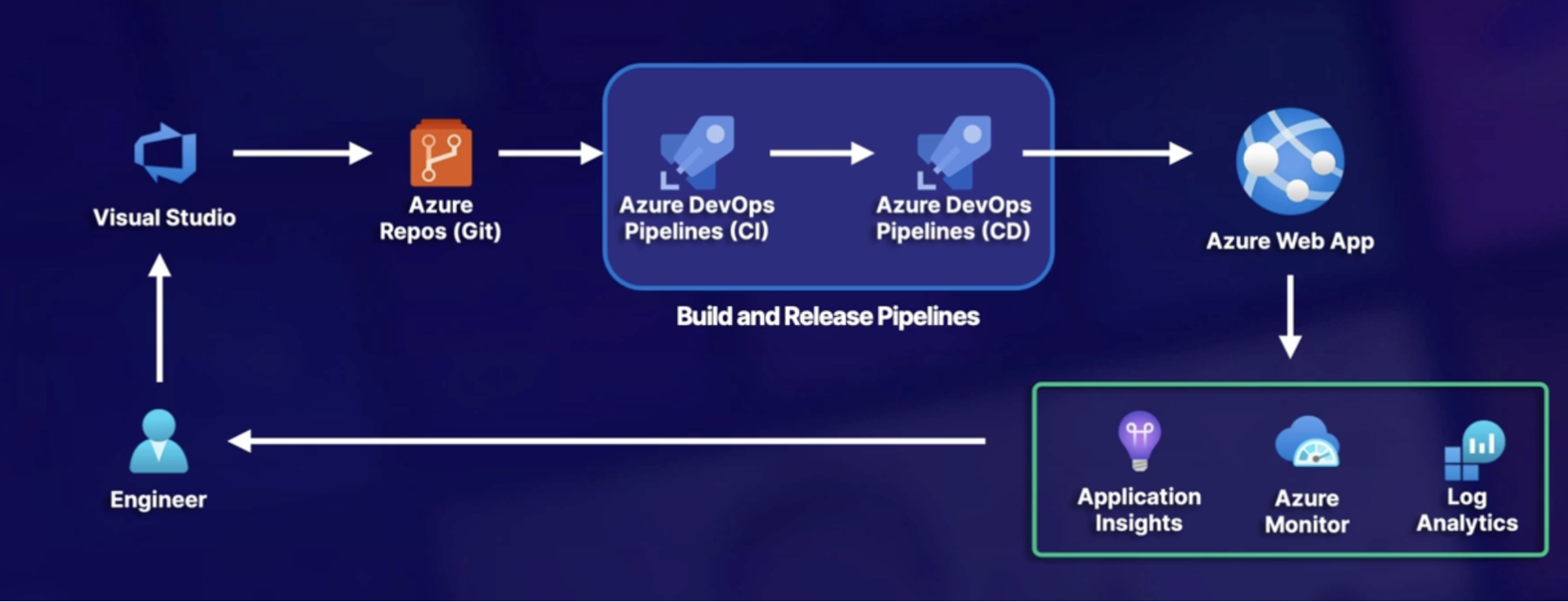

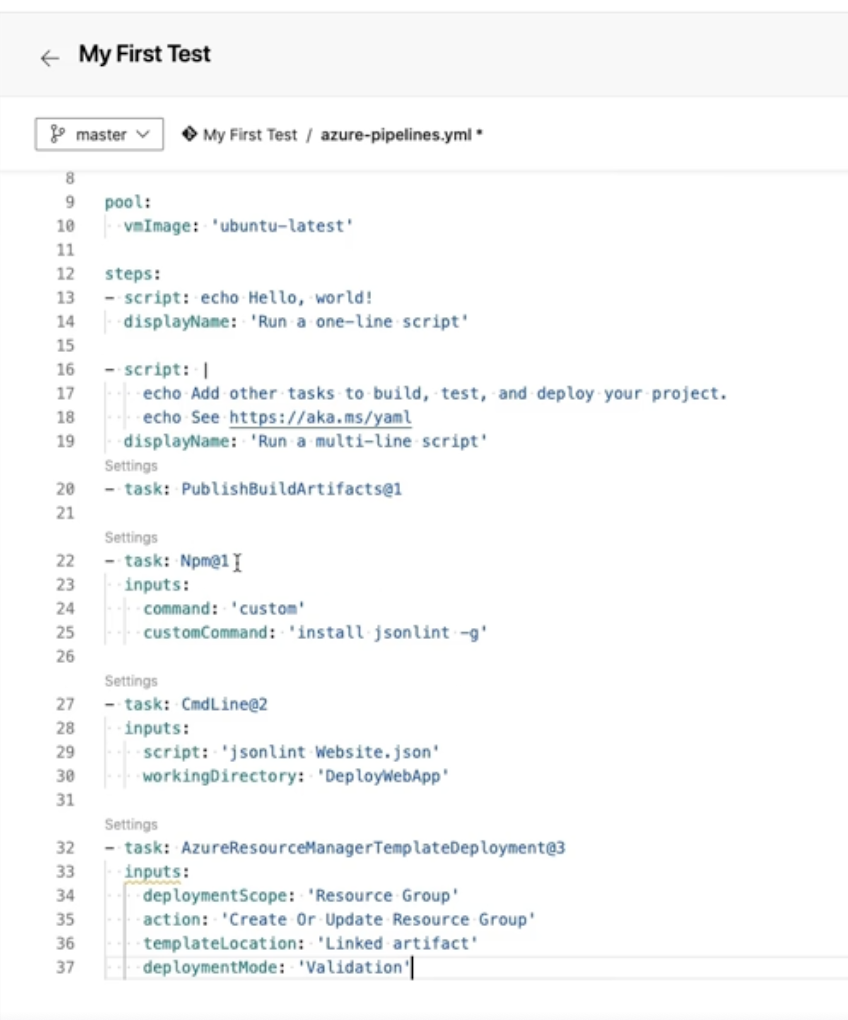

Getting started with Azure pipelines 85

What are Azure pipelines 85

Importance of automation 85

Pipeline basic/structure/trigger 86

Summary 87

Azure pipeline demo 87

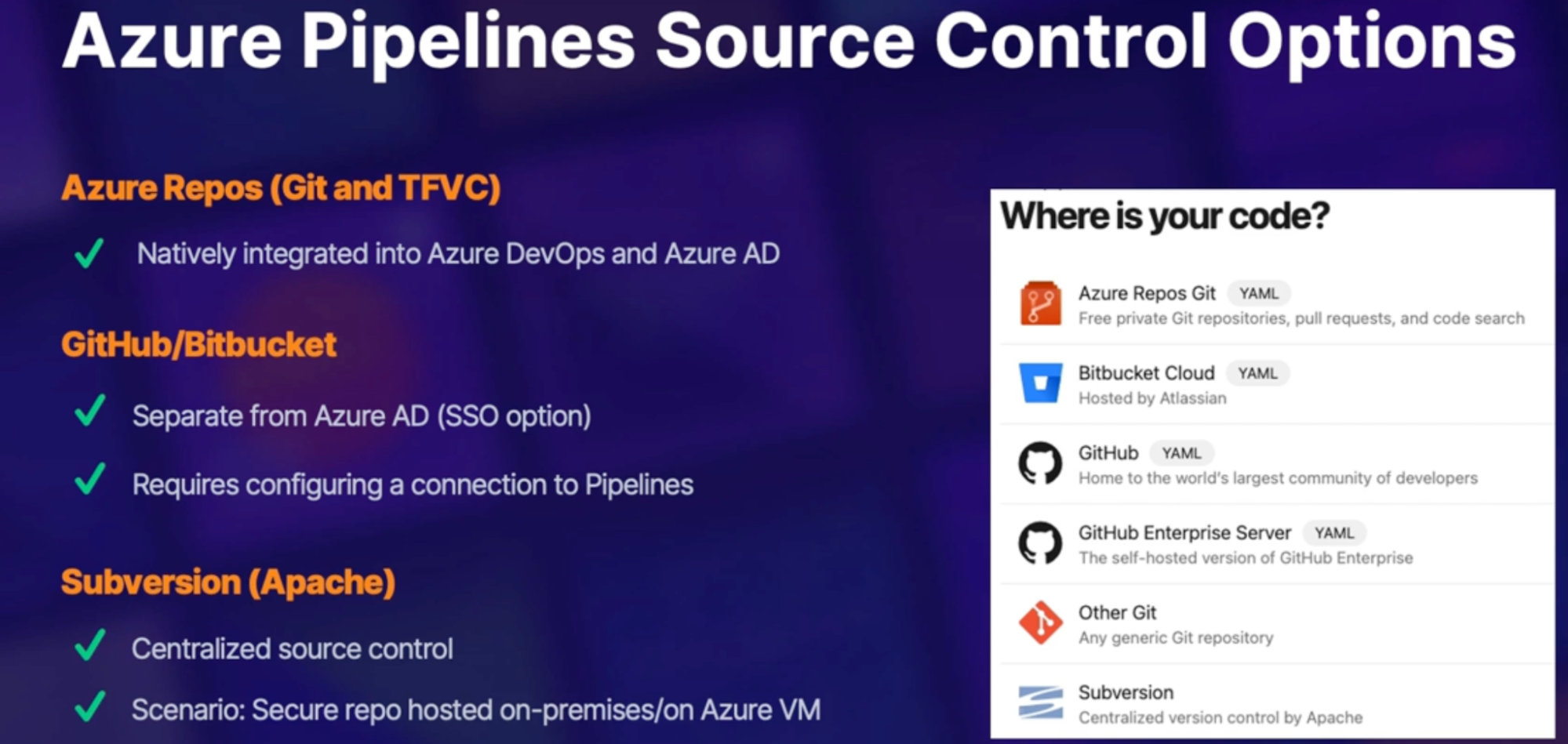

Integrate source control with Azure DevOps pipelines 88

Source control options 88

GitHub, Subversion 88

Demo 88

Summary 89

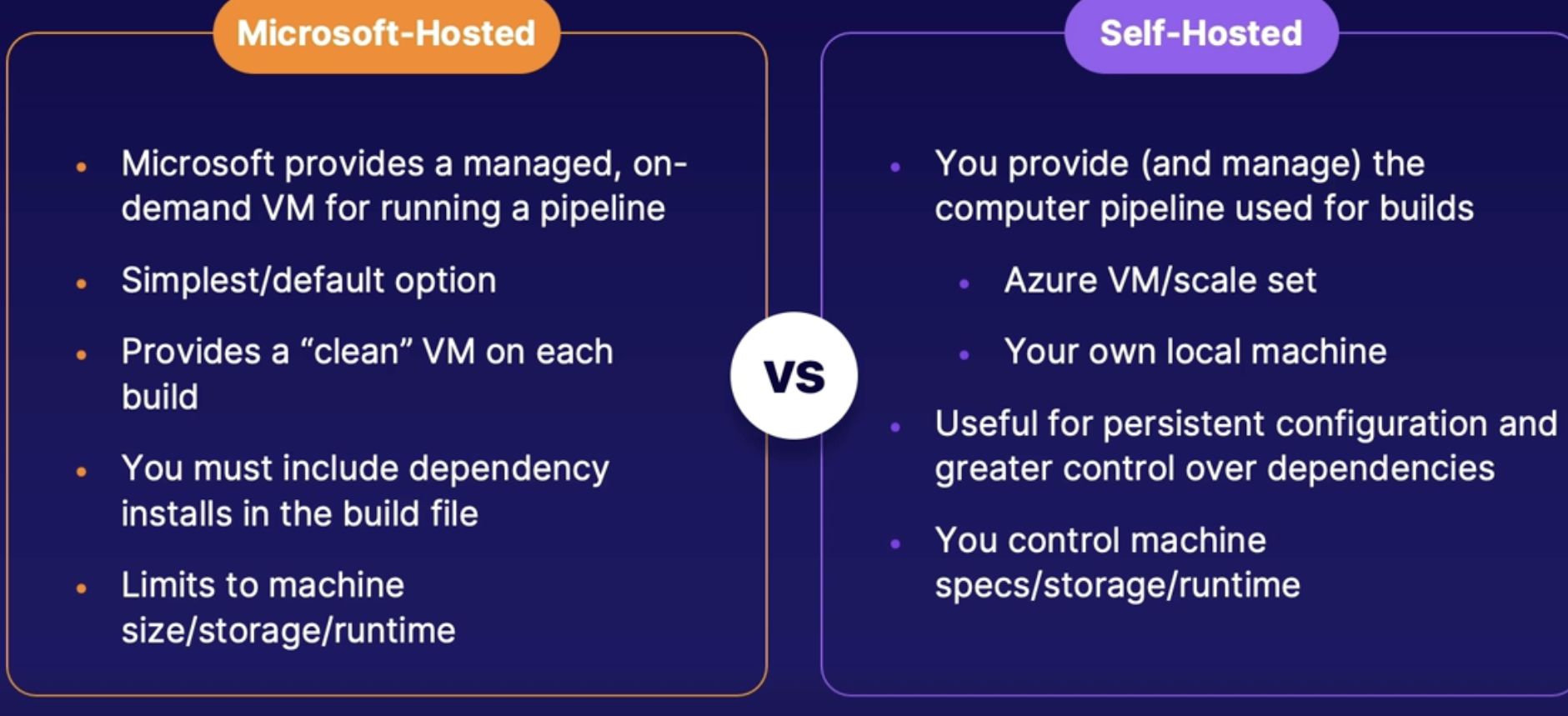

Understanding build agents 89

Role of agent 89

Microsoft and self-hosted agent 89

Parallel jobs 90

Demo 90

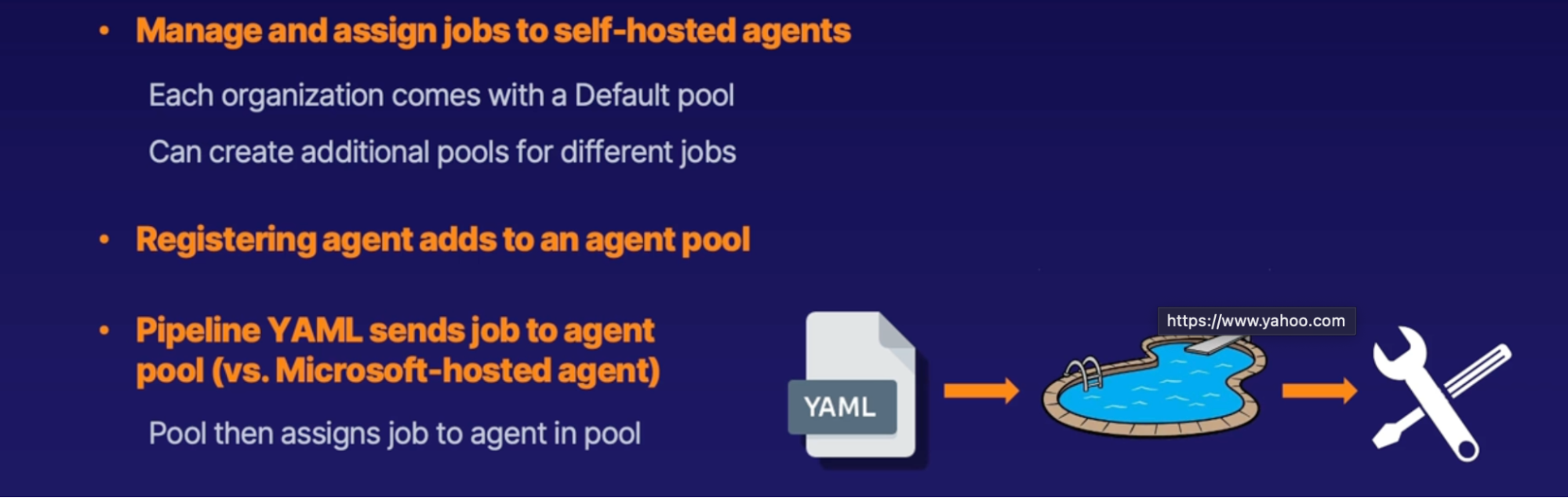

Exploring self hosted build agents 90

Self-hosted agent scenario 91

Self-hosted agent communication process 91

Agent pools 91

Demo 91

Summary 92

Using build trigger rules 92

Trigger types 93

Summary 94

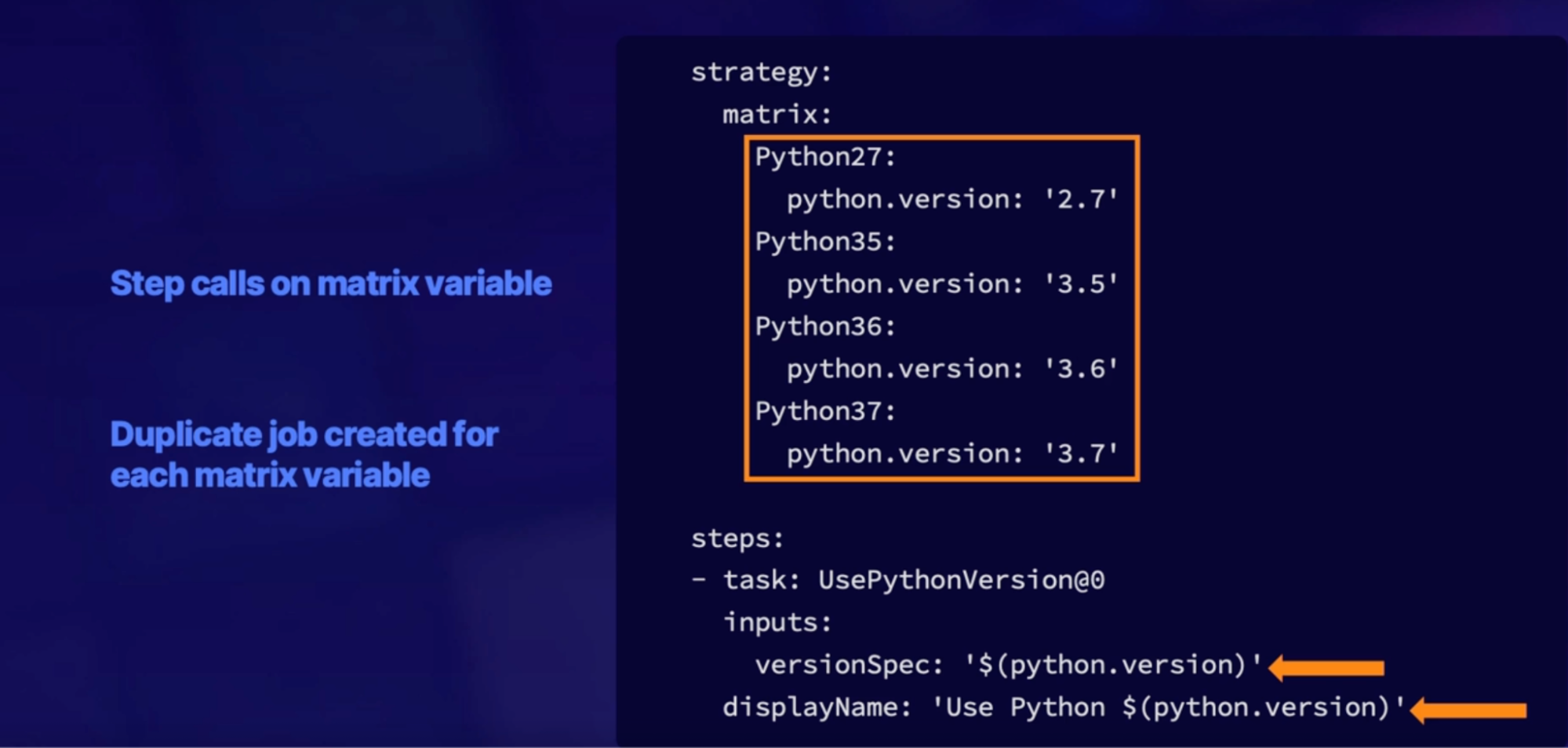

Incorporating multiple builds 94

Multiple Build scenario 95

Demo 95

Summary 96

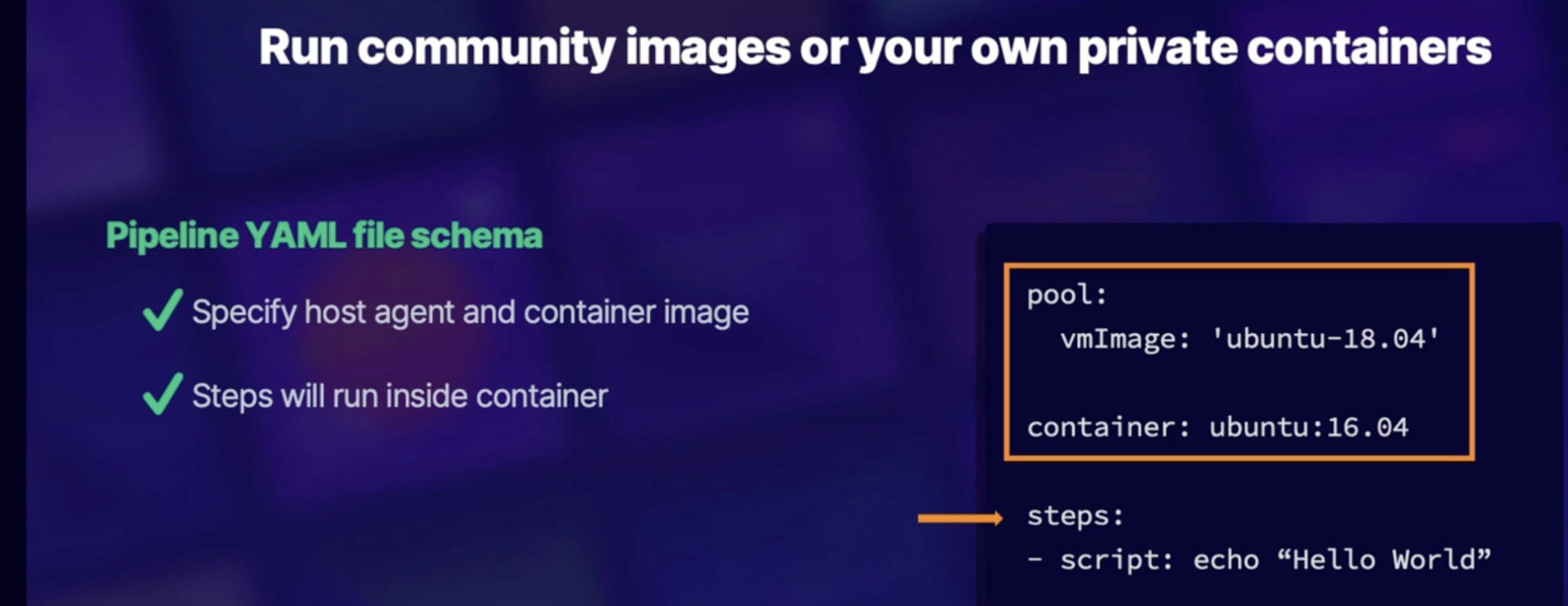

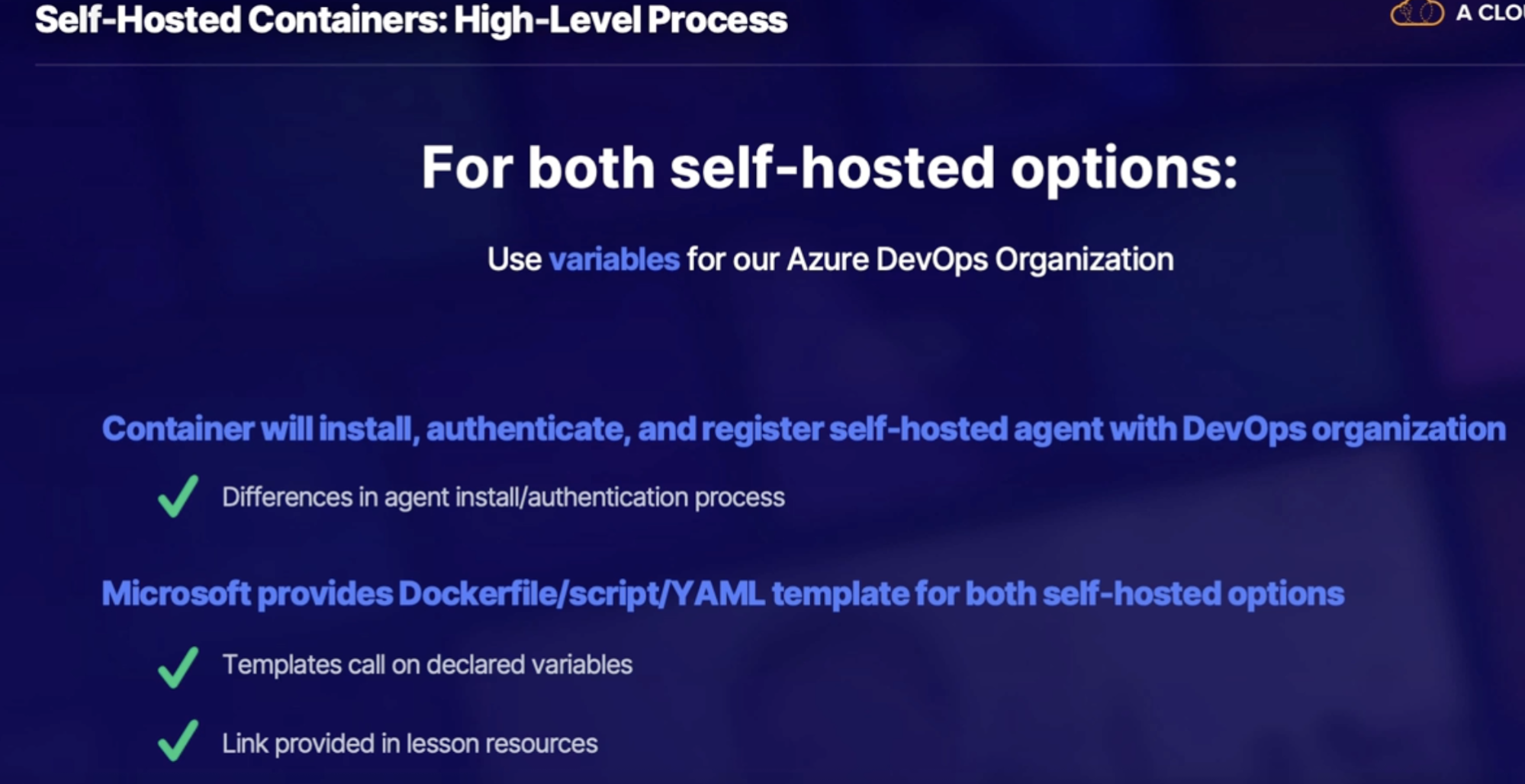

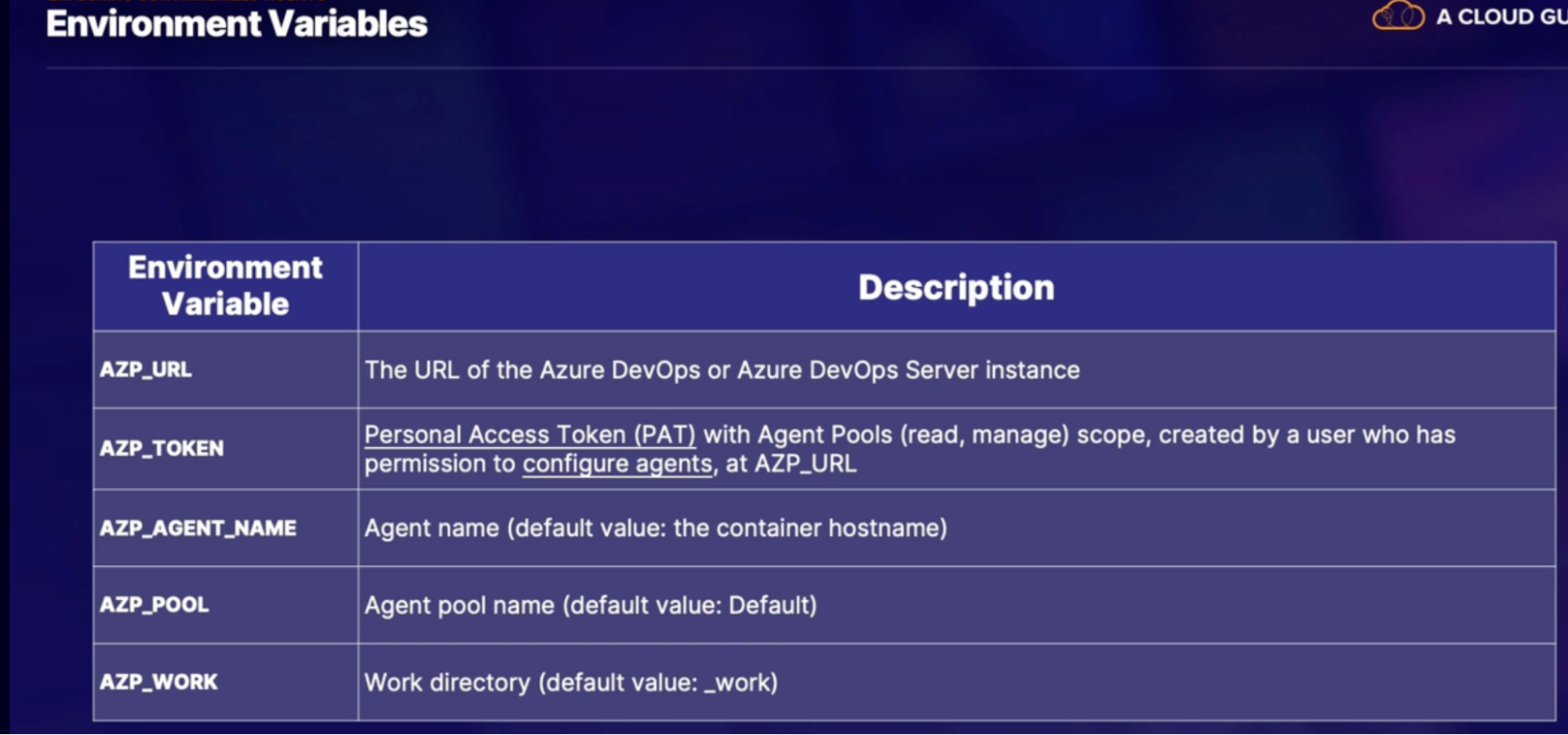

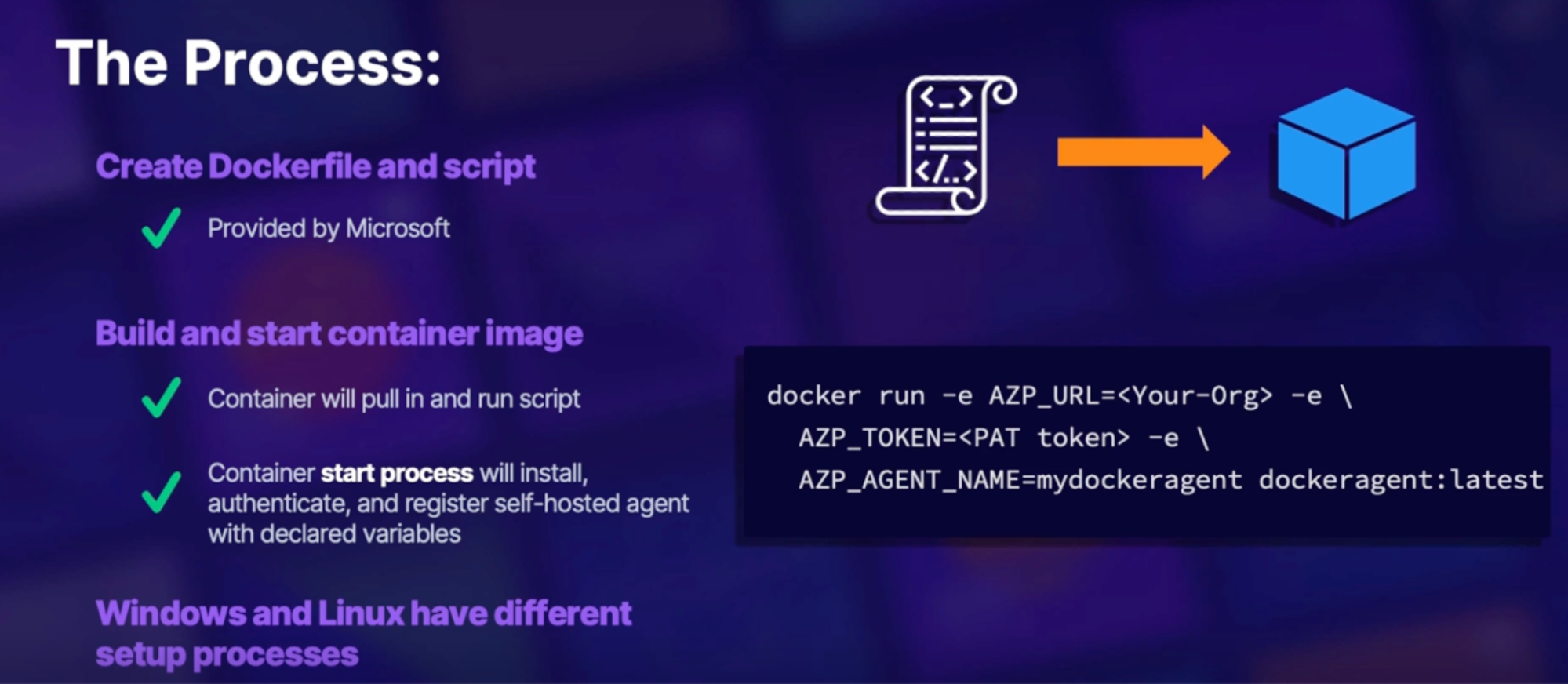

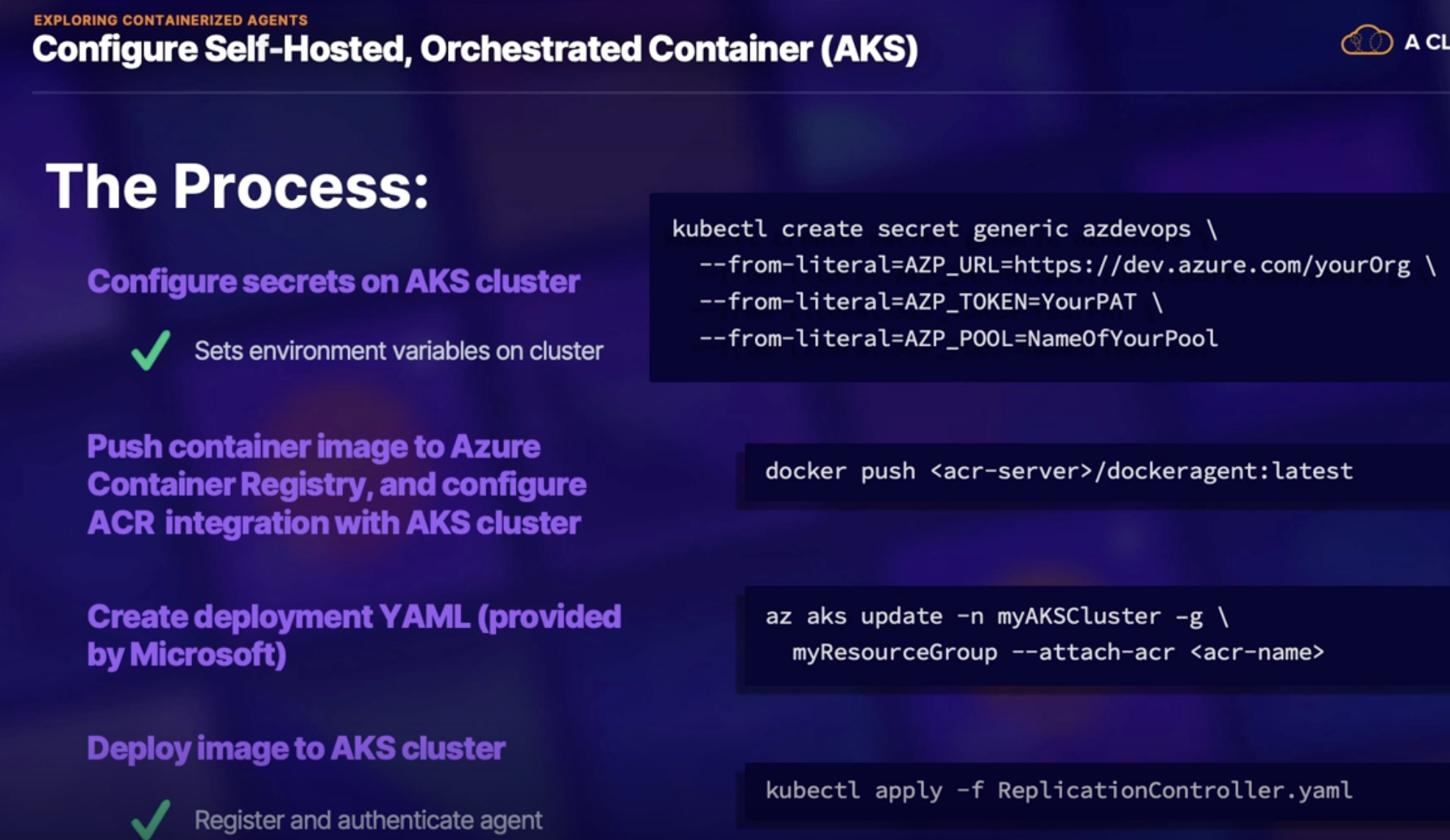

Exploring containerized agents 97

Why run a pipeline job in a container 97

Microsoft hosted agent configuration 97

Non-orchestration configuration(manual) 98

Orchestration configuration(AKS) 100

Summary 100

Summary 100

Lab: Use deployment groups in Azure DevOps to deploy a .net app 103

Lab: Integrate GitHub with Azure DevOps pipelines 104

Chap - 9: Designing a package management strategy 104

Introduction 104

What is package manager/software package 104

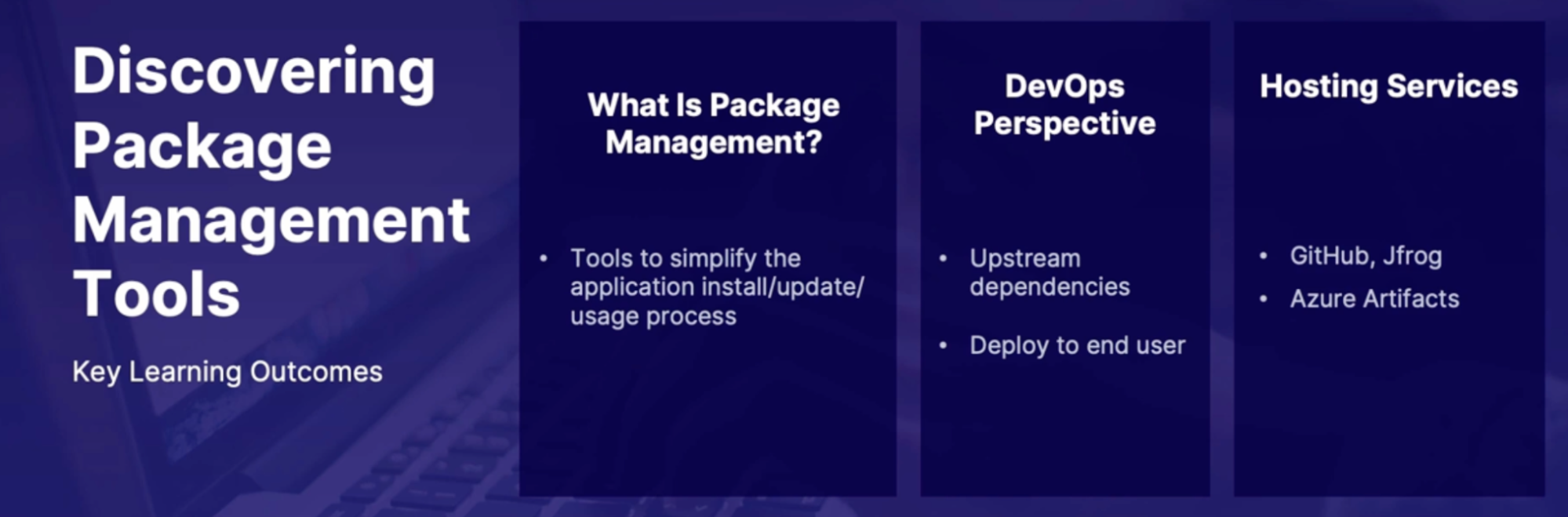

Discovering Package Management Tools 105

Development-related package managers (perspective) 105

How to manage packages 105

Package hosting service example 106

Summary 106

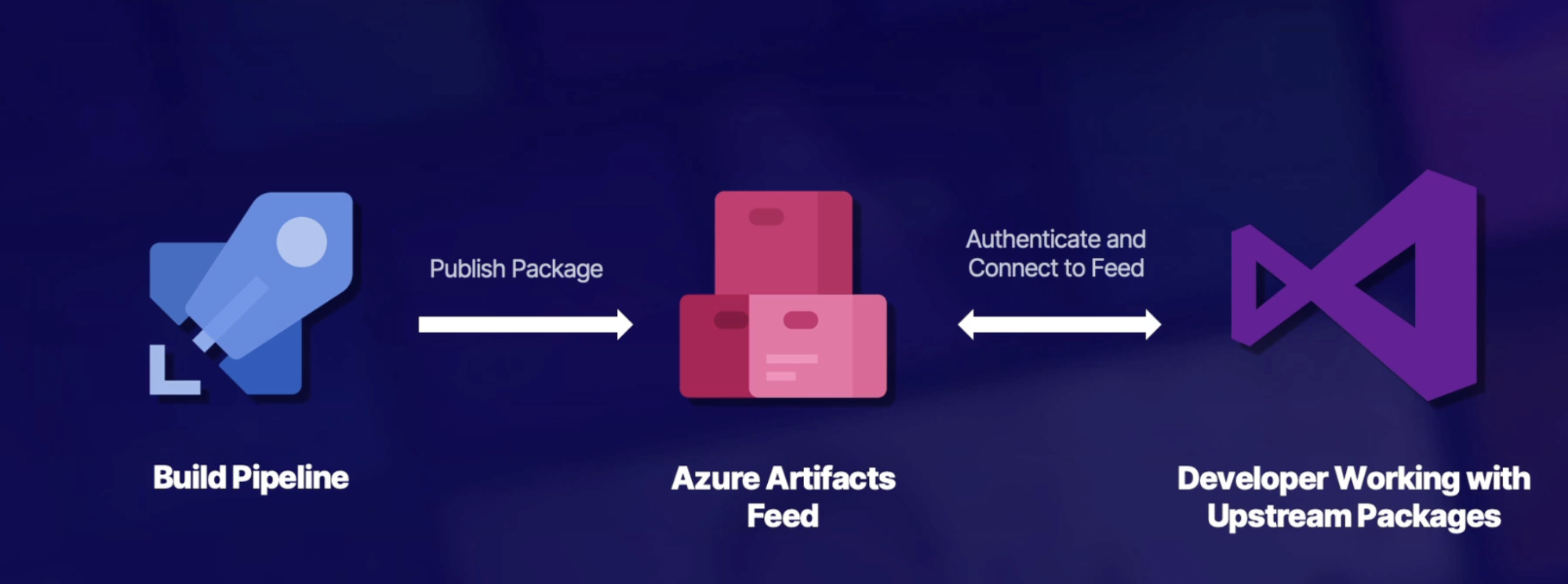

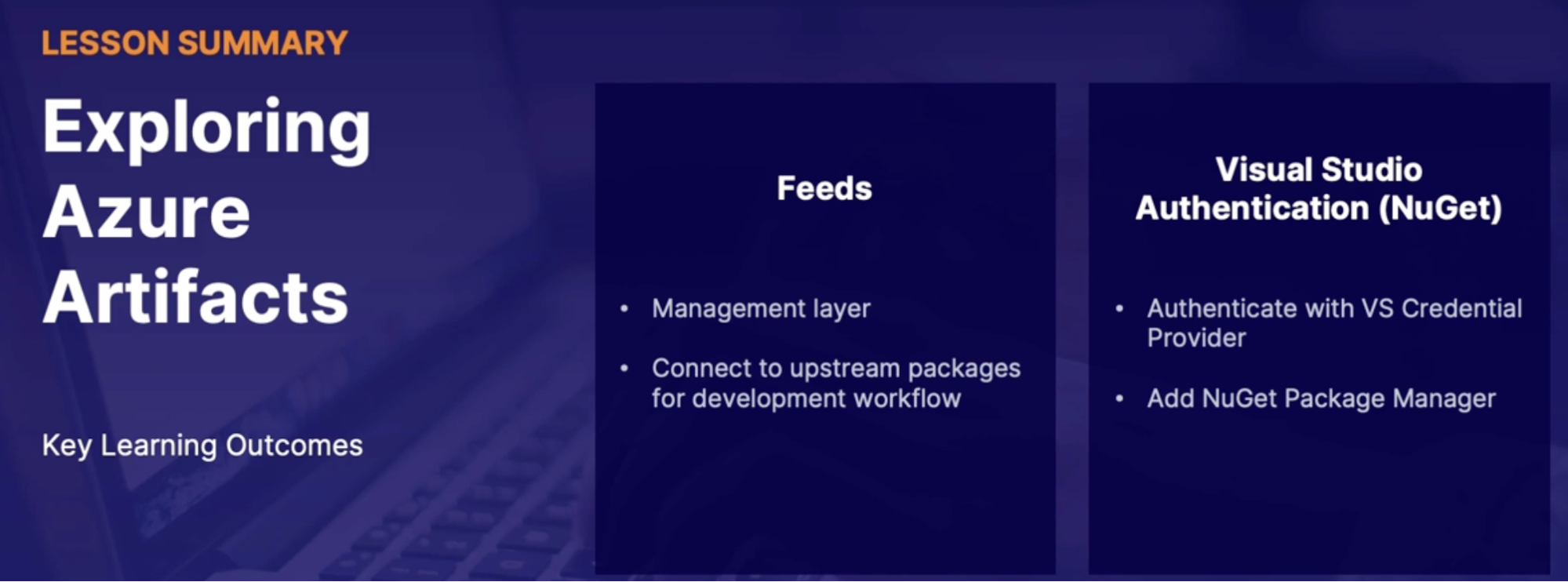

Exploring Azure artifact 106

Azure artifact 106

Feeds 106

Developer workflow with Visual Studio 106

Demo: Connecting to feeds in visual studio 107

Summary 107

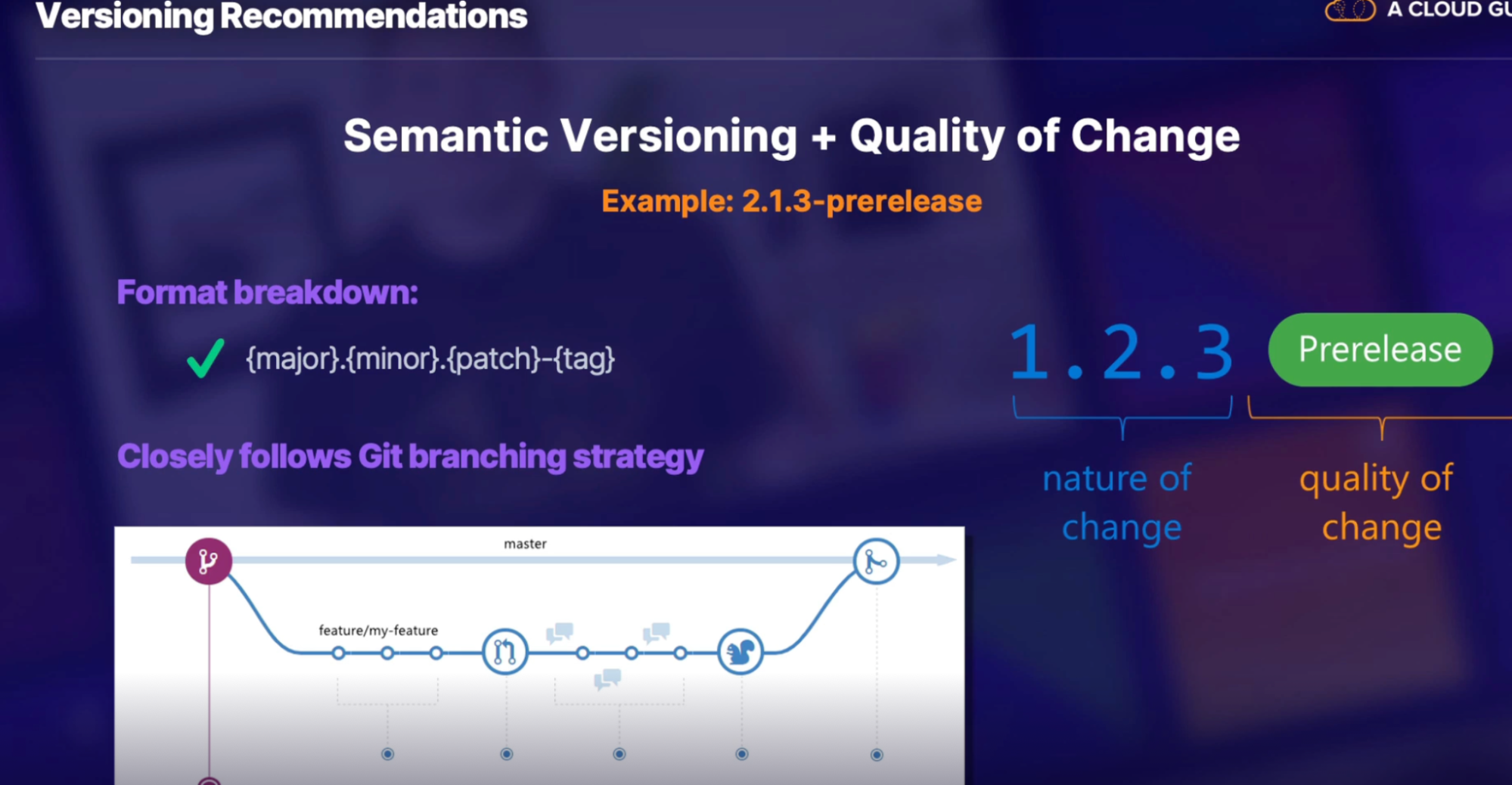

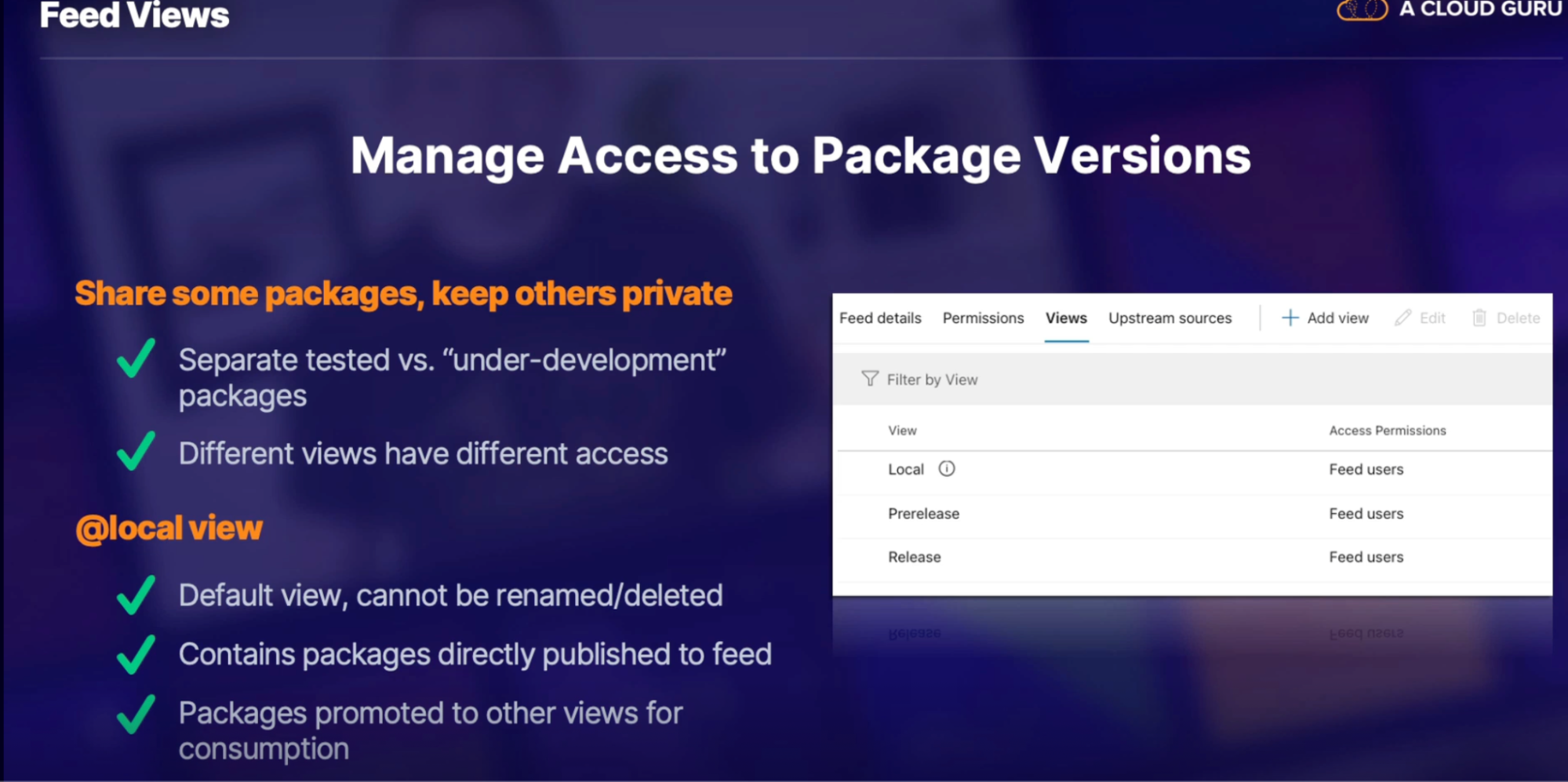

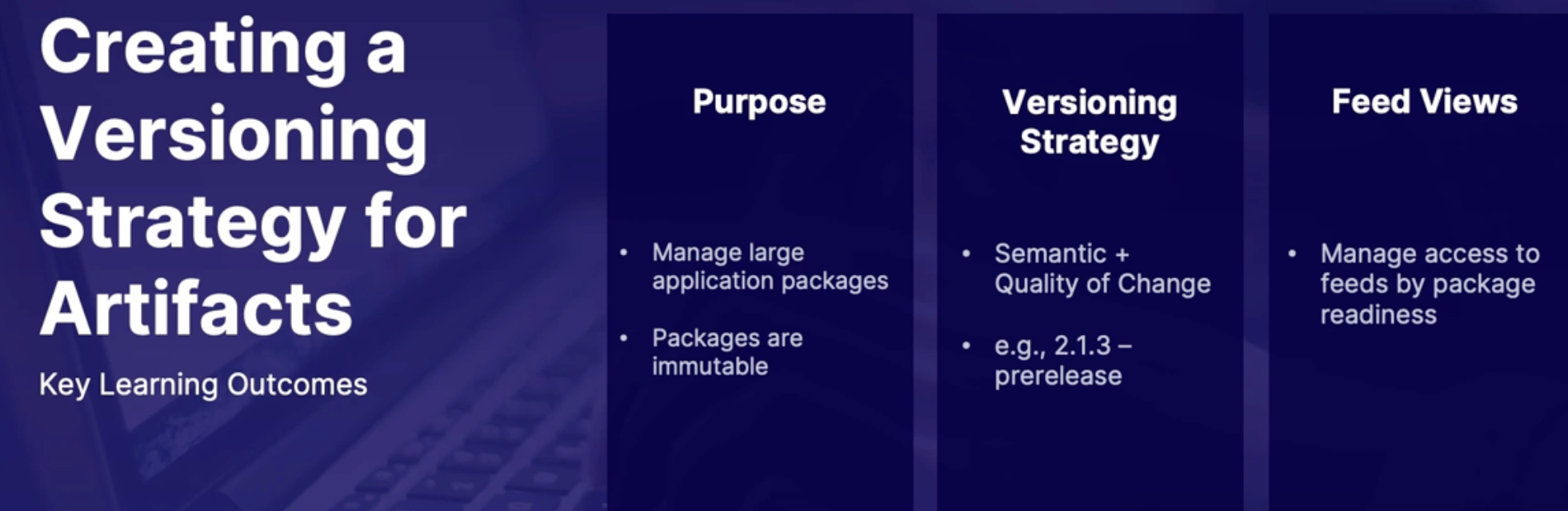

Creating a versioning Strategy for Artifact 108

Proper versioning strategy 108

Versoning recommendations 108

Feed views 108

Demo 108

Summary 108

Summary 109

Chap - 10: Designing Build automation 109

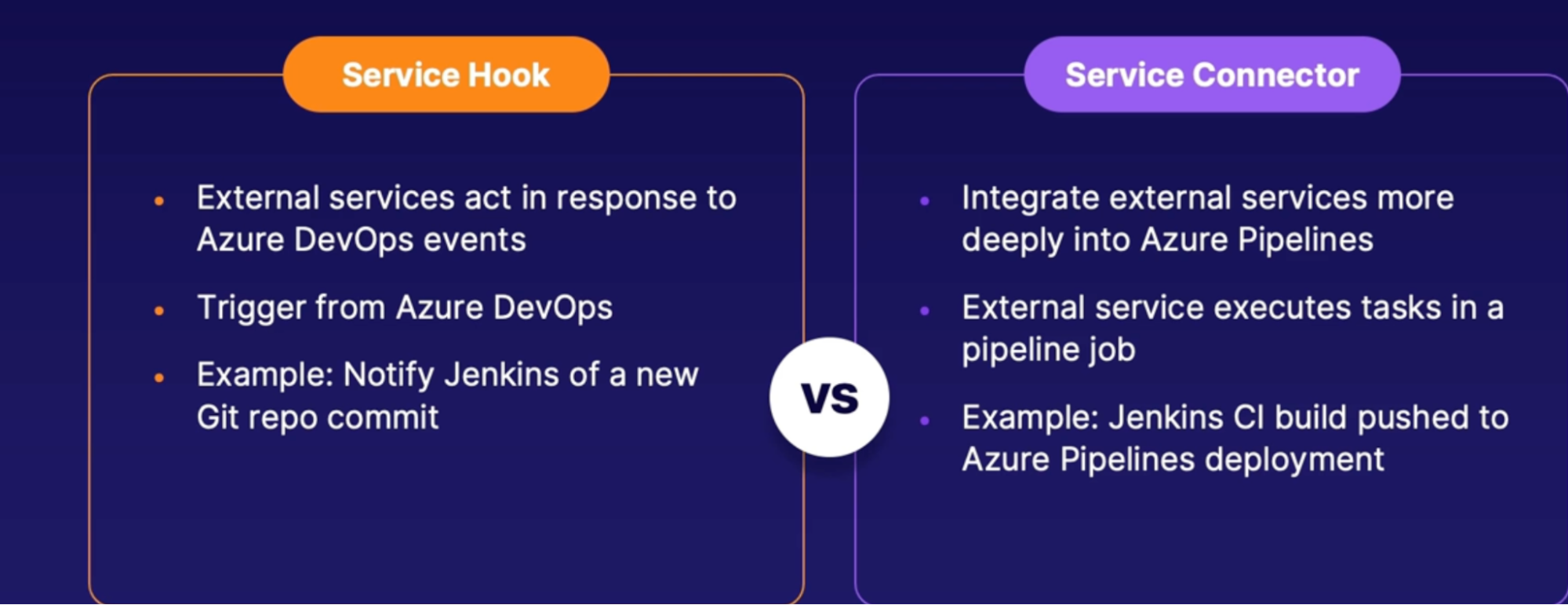

Integrate external services with Azure pipelines 110

Scenarios for connecting external tools 110

External tool connection methods 110

Popular code scanning service/tools 111

Summary 111

Visual Studio Marketplace Demo 111

Exploring Testing Strategies in your build 112

Why test code? 112

Testing methodologies 112

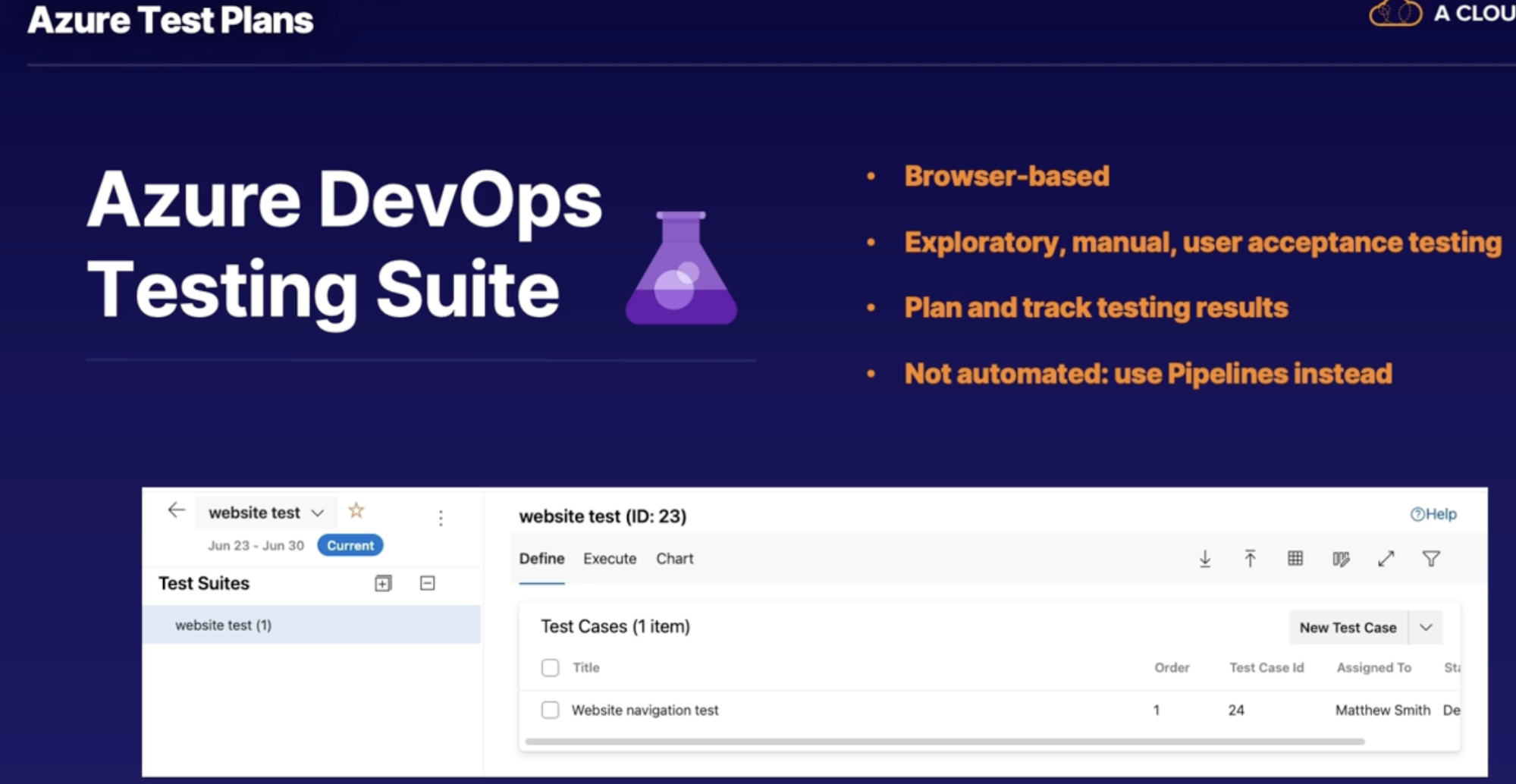

Azure test plans 112

Summary 112

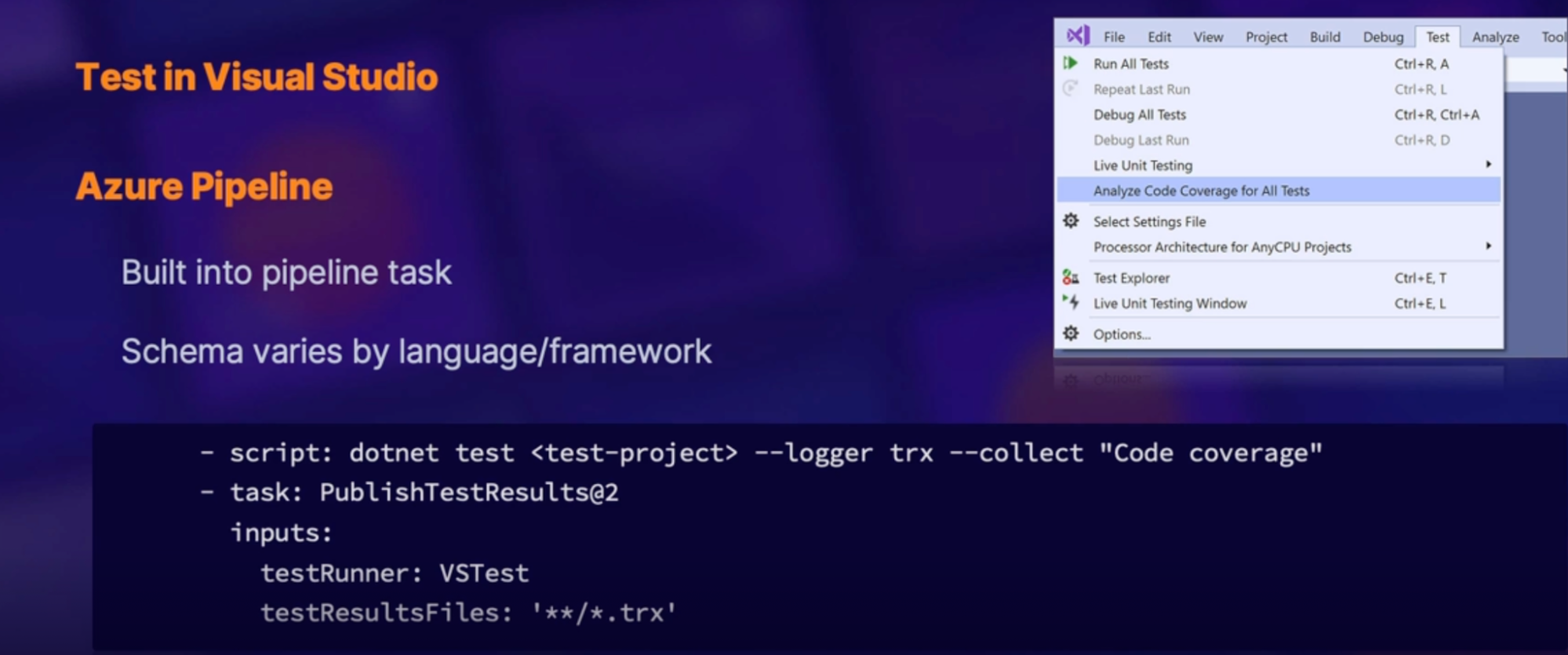

Understanding code coverage 113

What is Code Coverage 113

How code coverage tests work 113

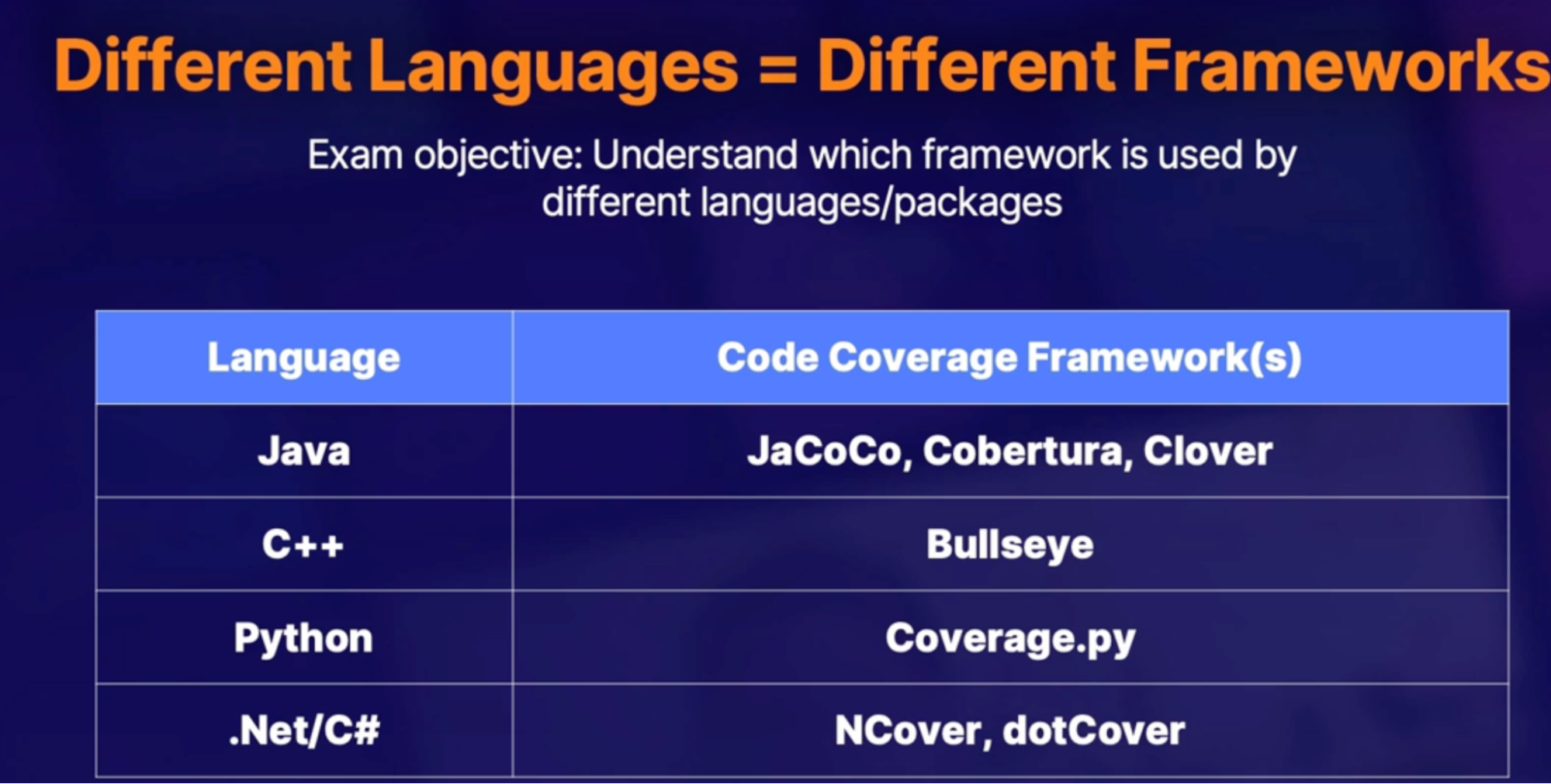

Code coverage frameworks 114

Demo 114

Summary 114

Summary 114

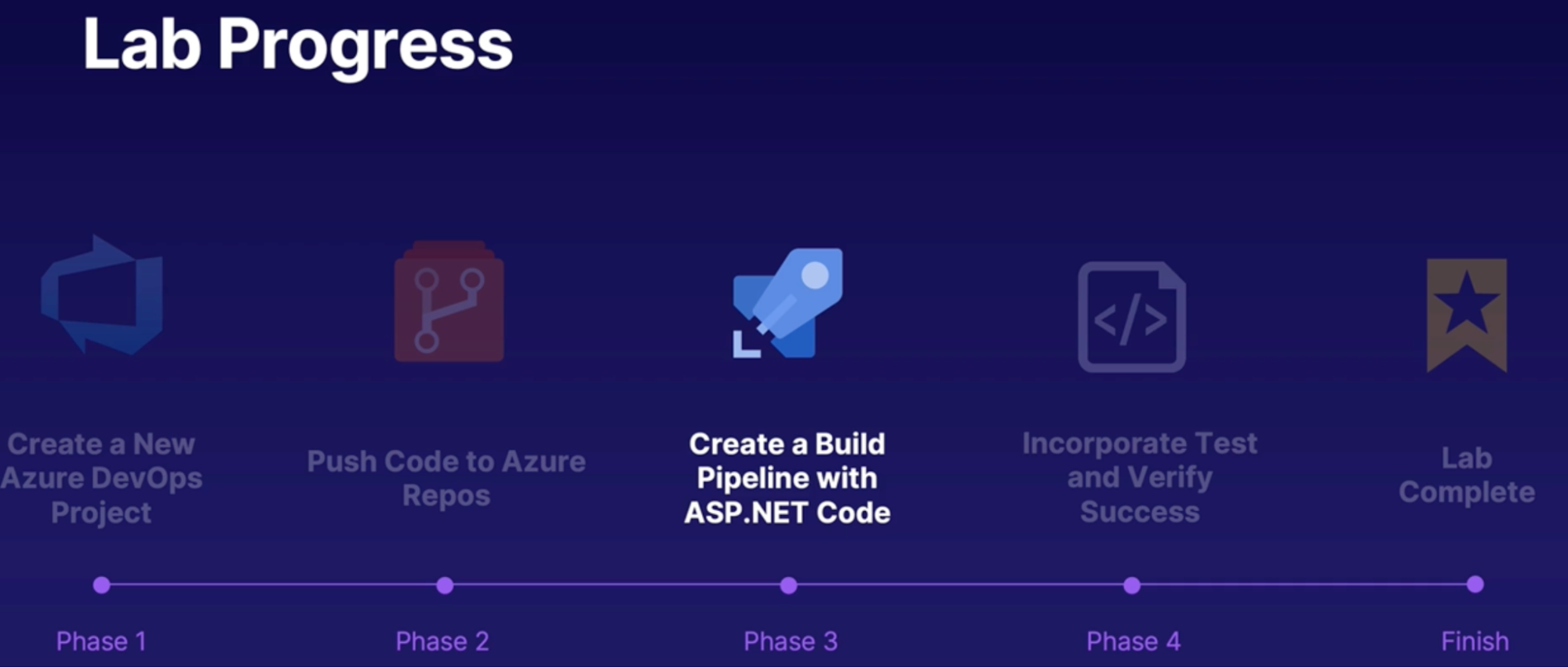

LAB: Create and Test an ASP.NET Core App in Azure Pipelines 116

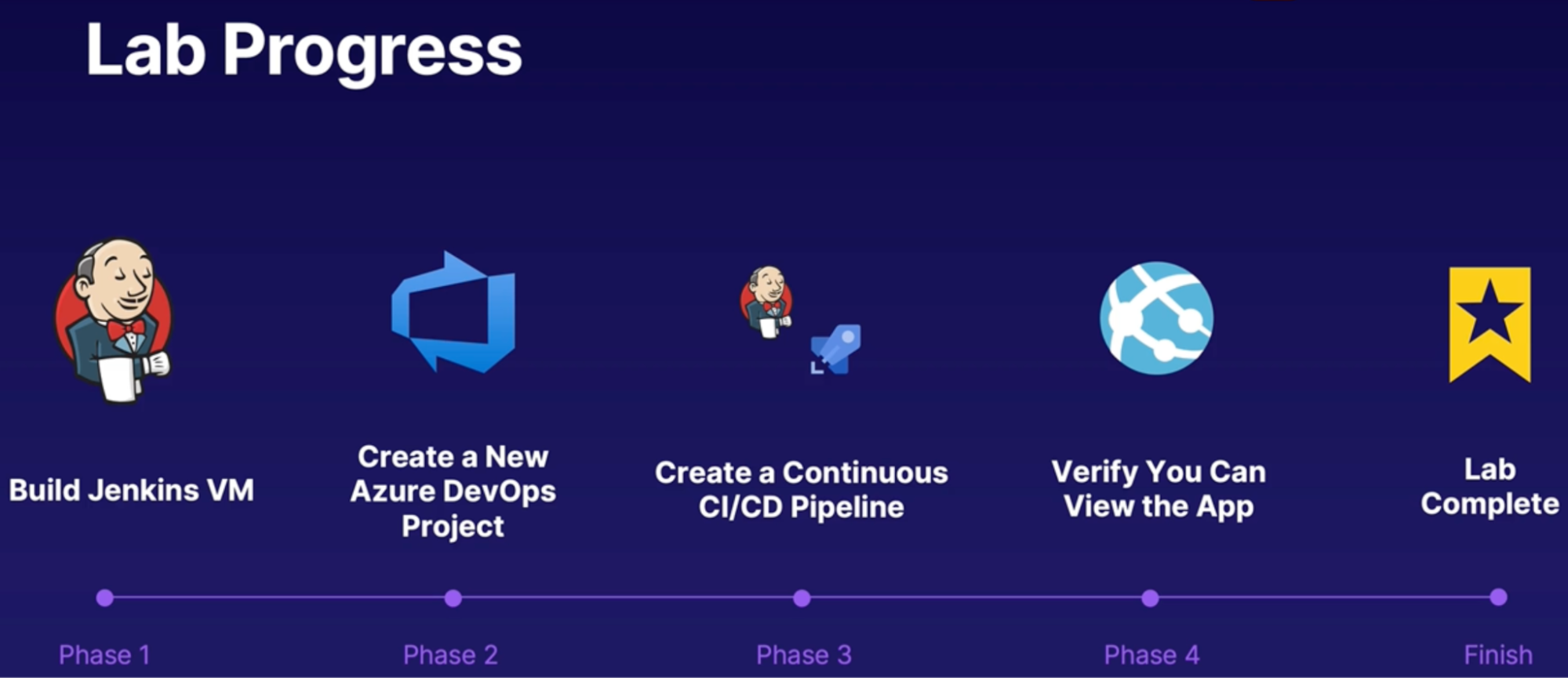

Lab: Use Jenkins and Azure DevOps to Deploy a Node.js App 116

Chap - 11: Maintaining a build strategy 117

Introduction 117

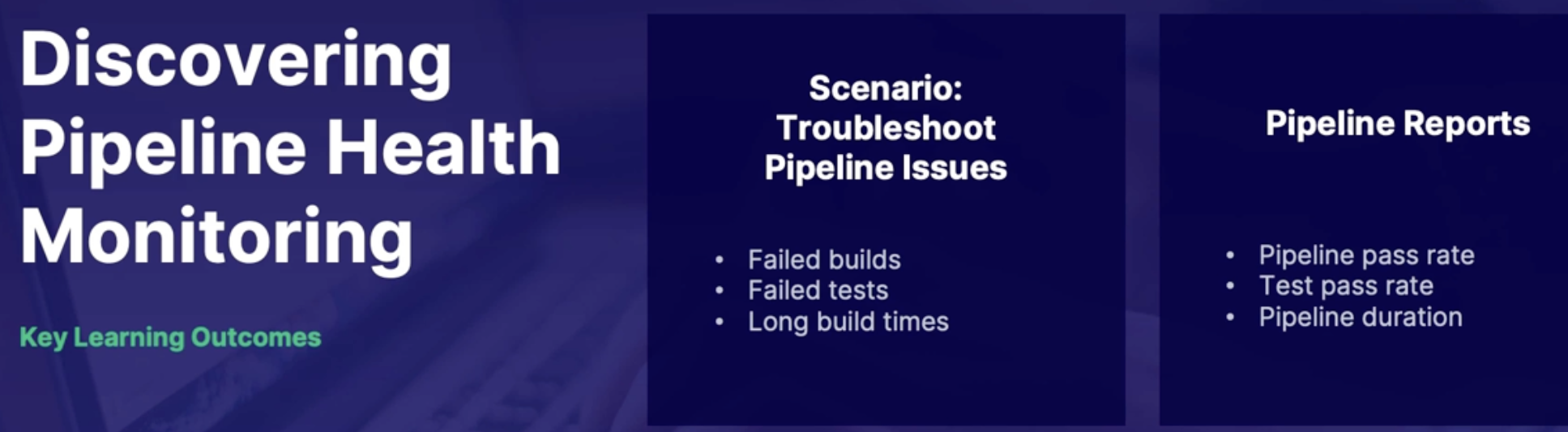

Discovering pipeline health monitoring 117

Scenarios for monitoring pipeline health 118

Pipeline reports 118

1. Pipeline pass rate 118

2. Test pass rate 118

3. Pipeline duration 118

Demo 118

Summary 118

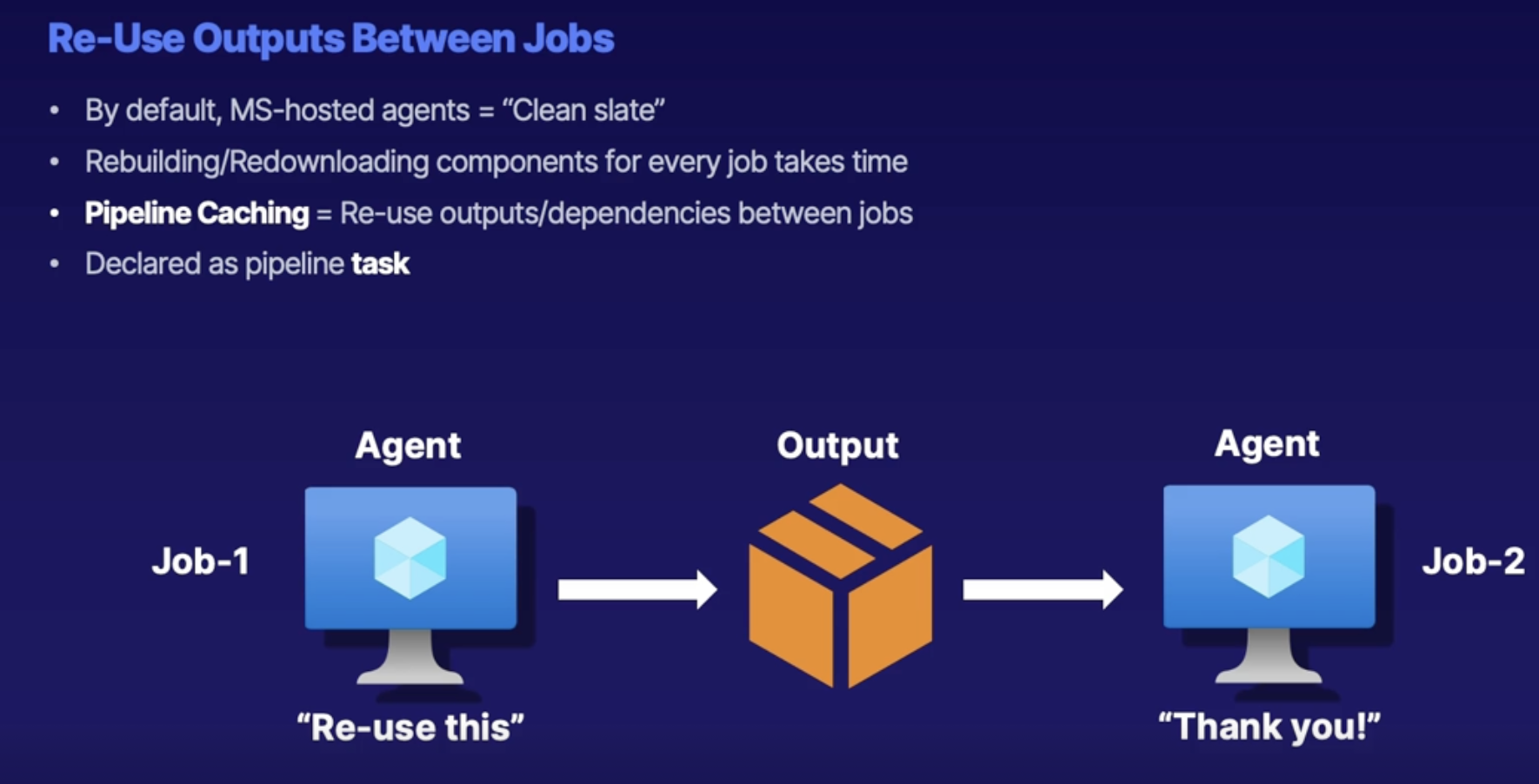

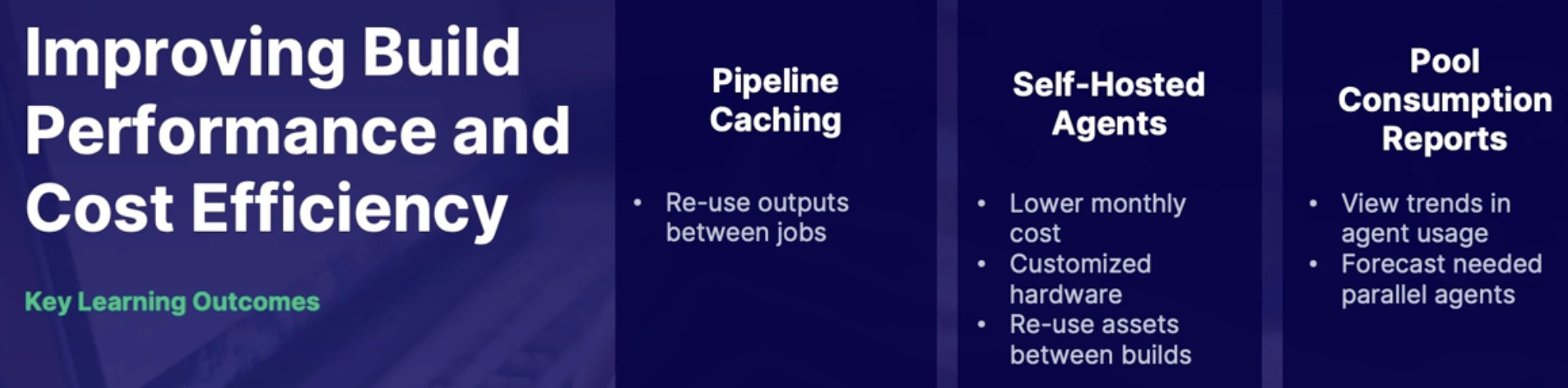

Improving build performance and cost efficiency 119

Build performance and costs 119

Pipeline caching 119

Self-hosted agents 119

Agent Pull consumption reports 120

Summary 120

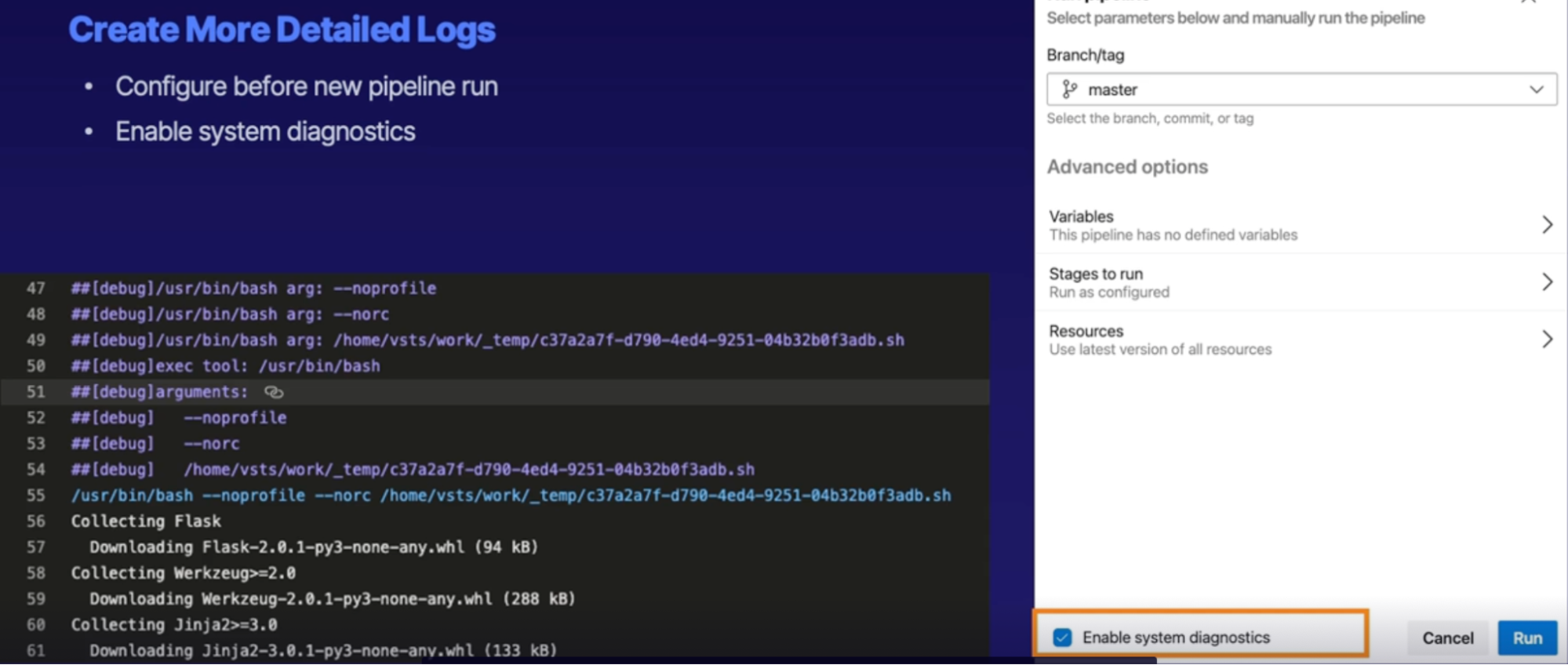

Exploring build agent analysis 120

Scenario: Troubleshoot Pipeline Failures 120

Viewing logs 120

Downloading logs 121

Configure verbose logs 121

Demo 121

Summary 121

Summary 121

Chap - 12: Designing a process for standardizing builds across organization 122

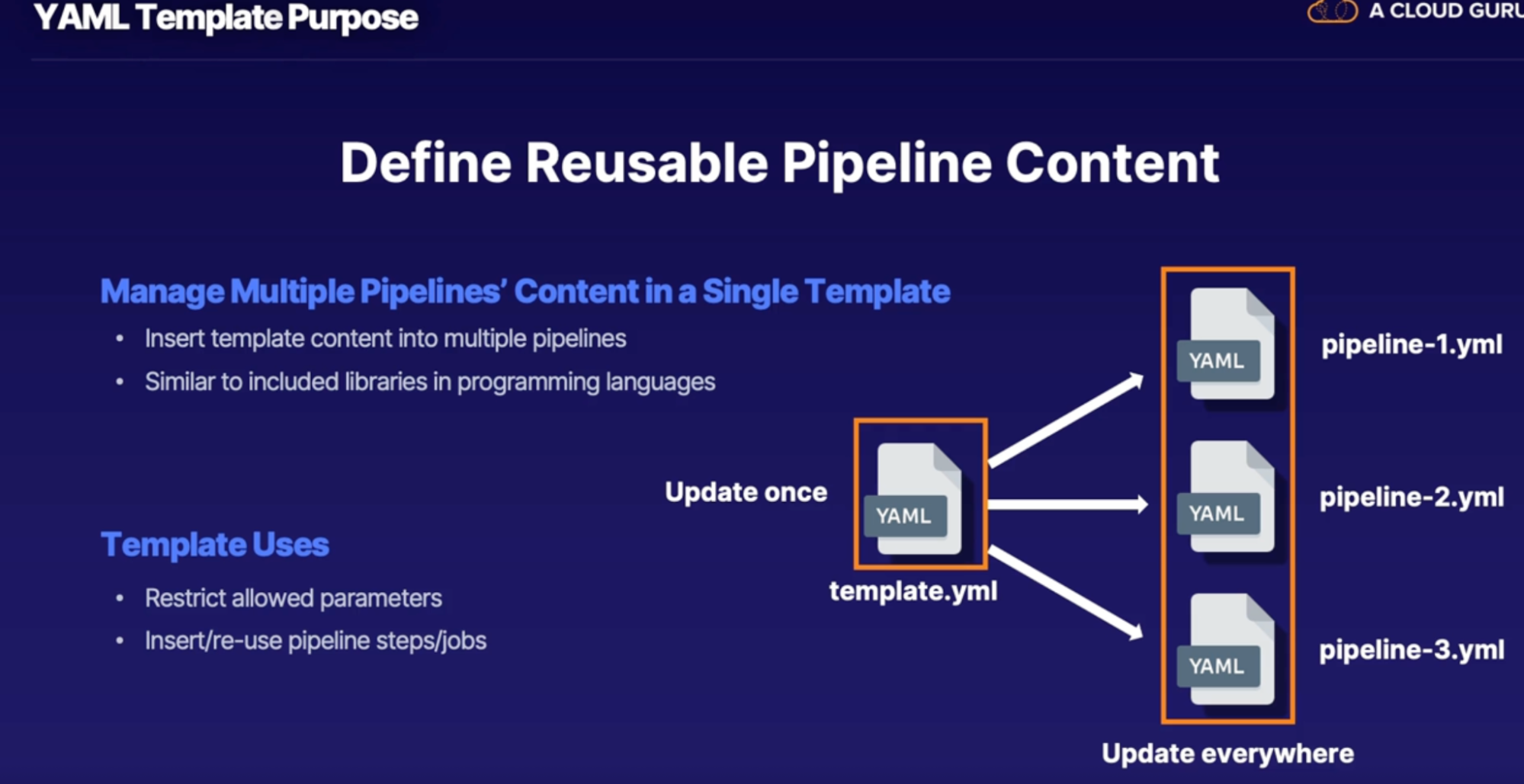

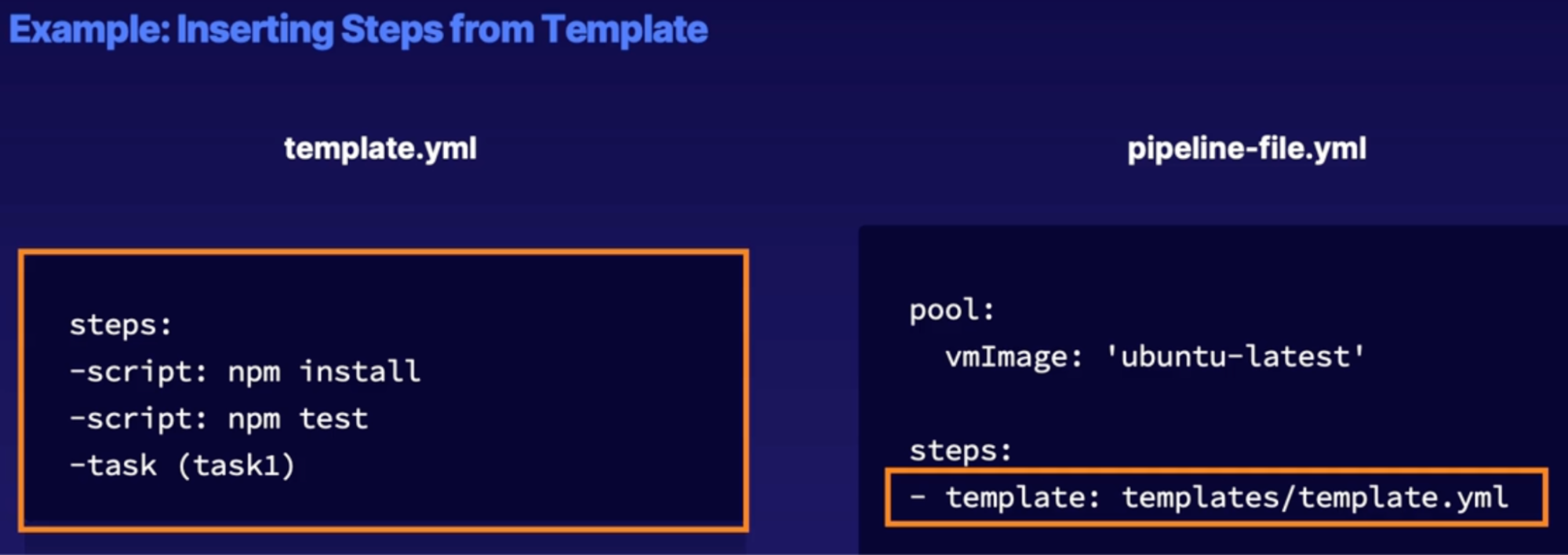

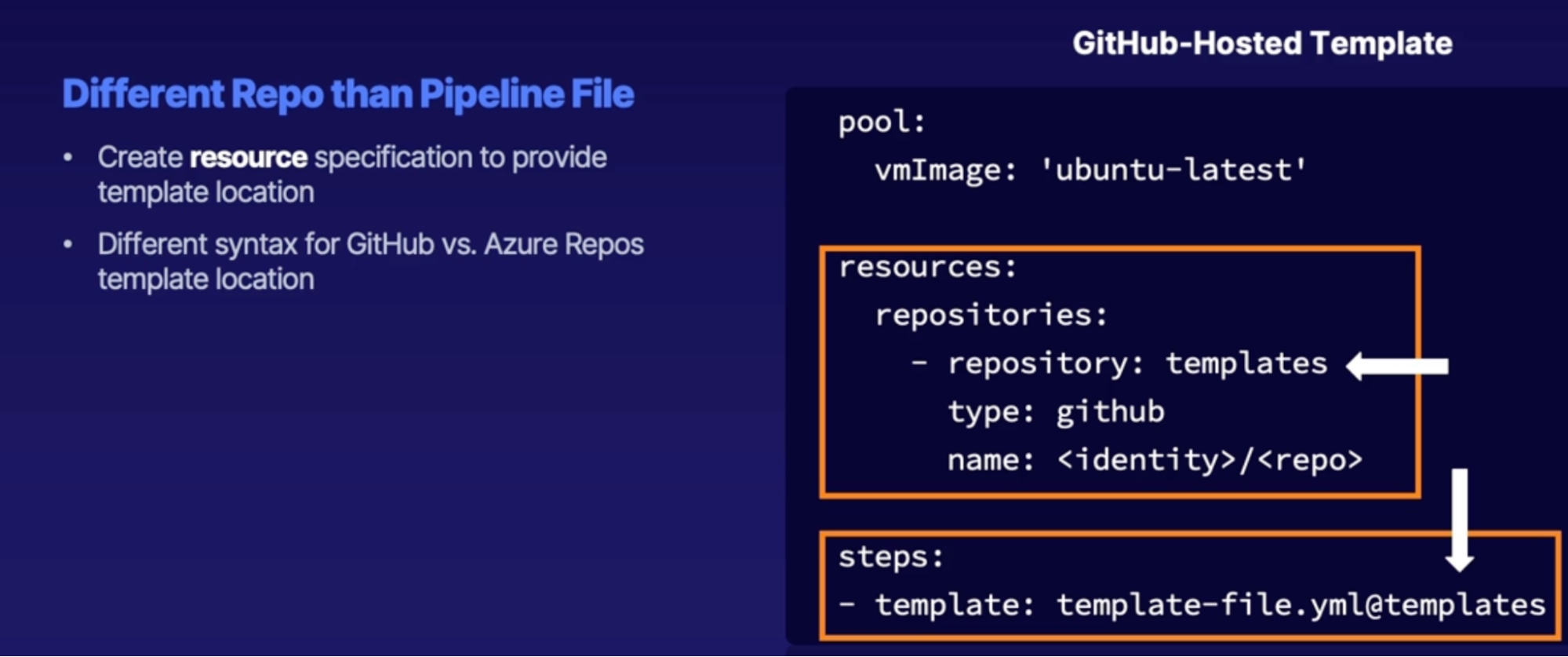

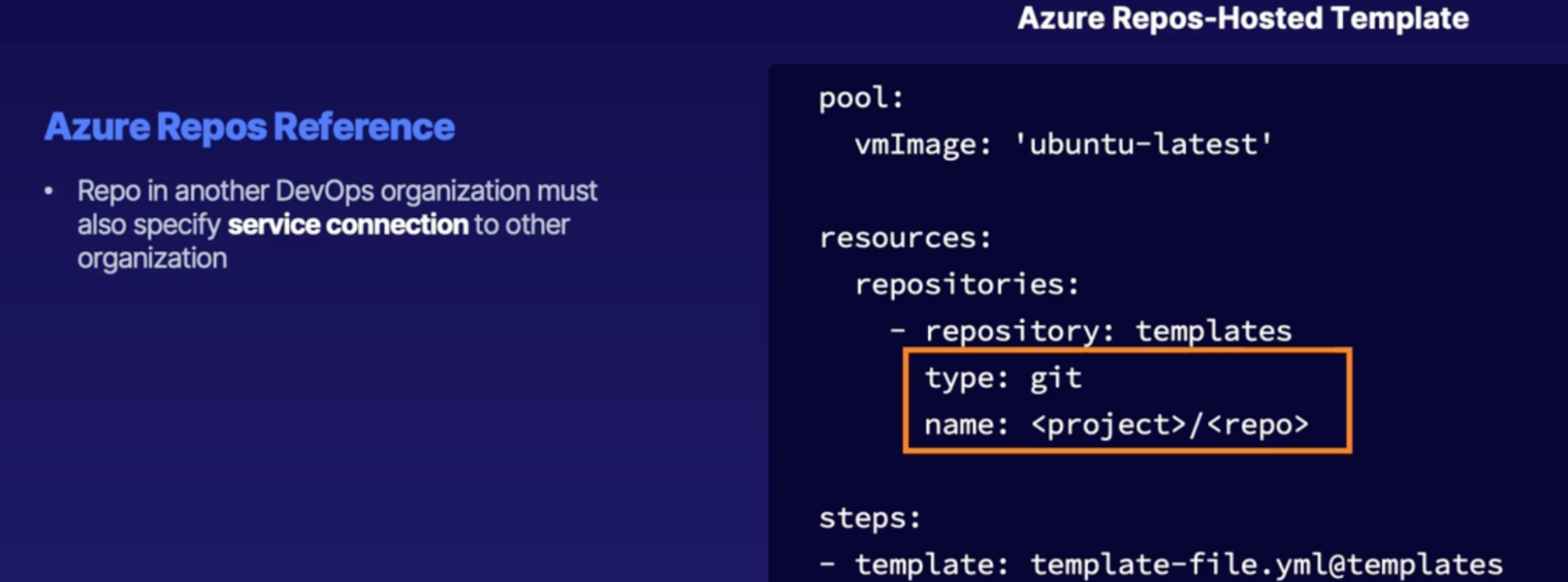

Implementing YAML templates 122

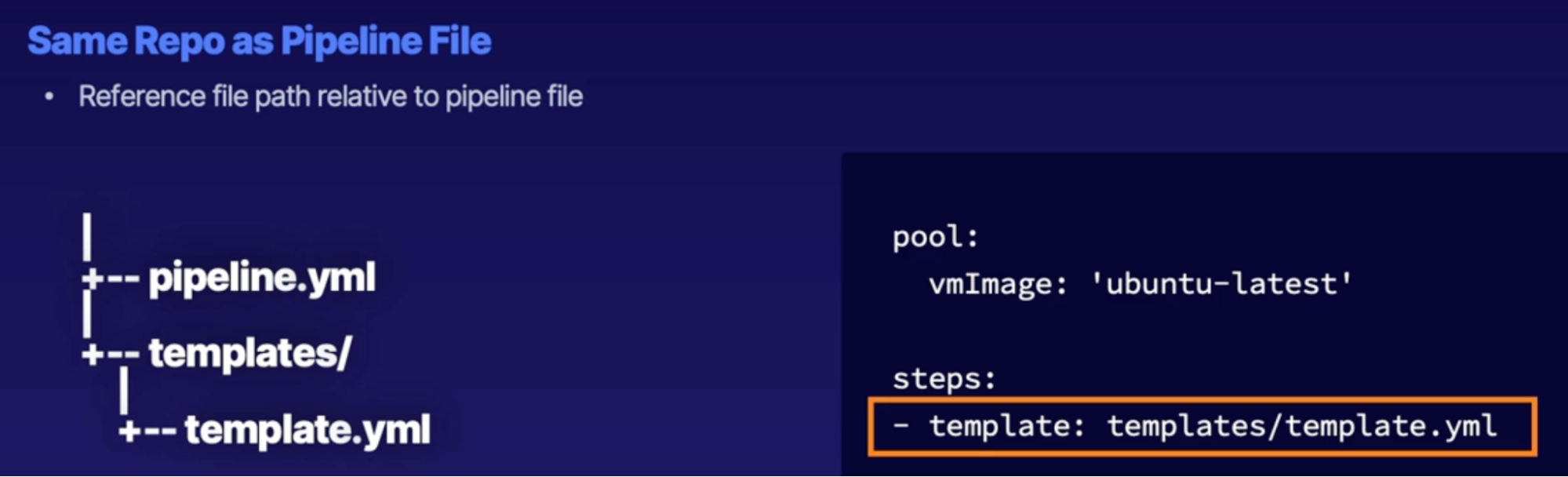

YAML template purpose 122

Inserting templates 122

Template location reference 122

Demo 125

Summary 125

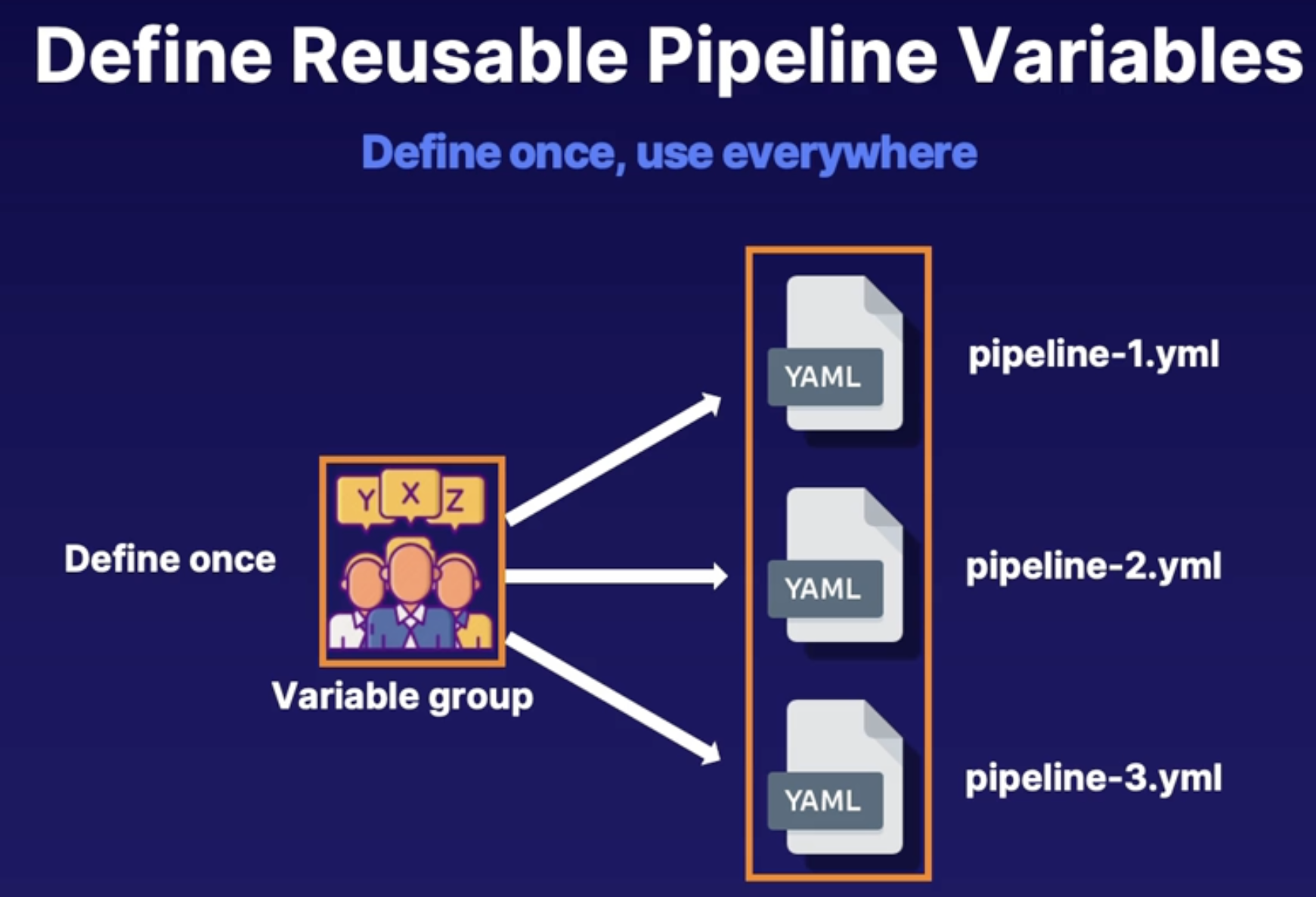

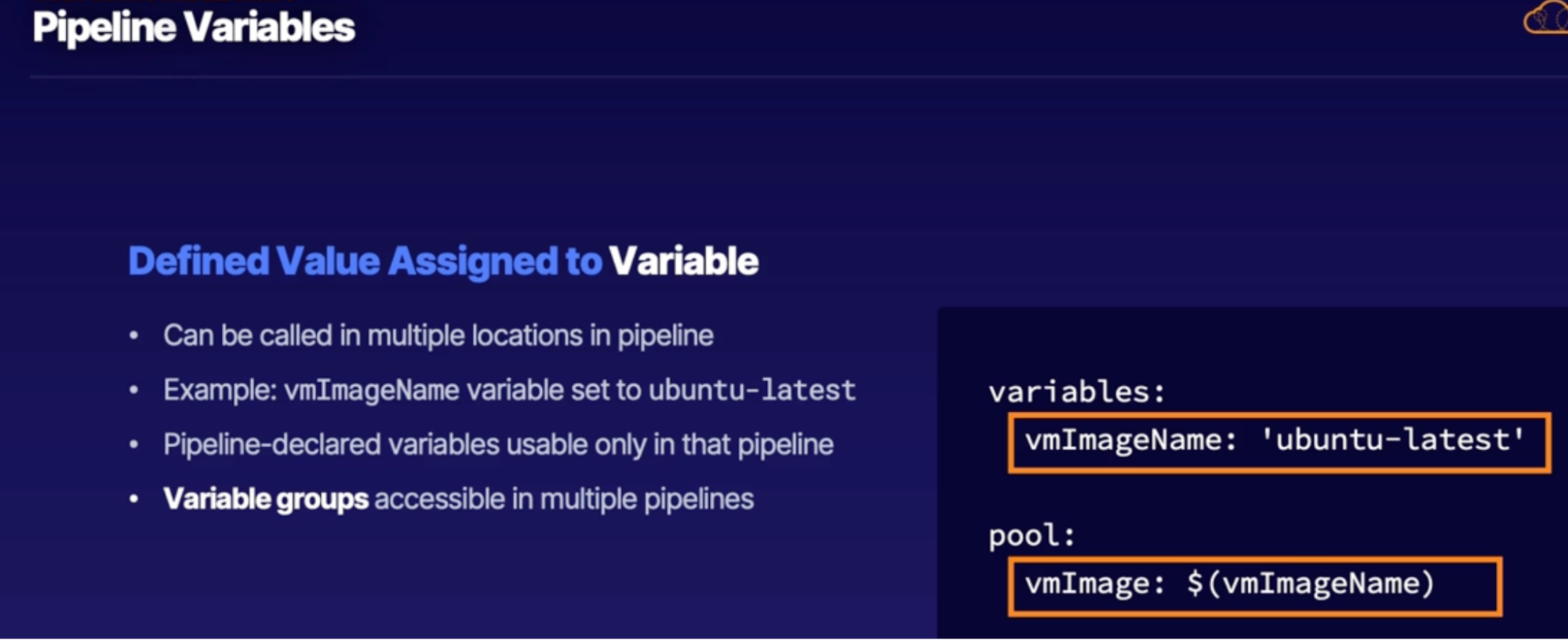

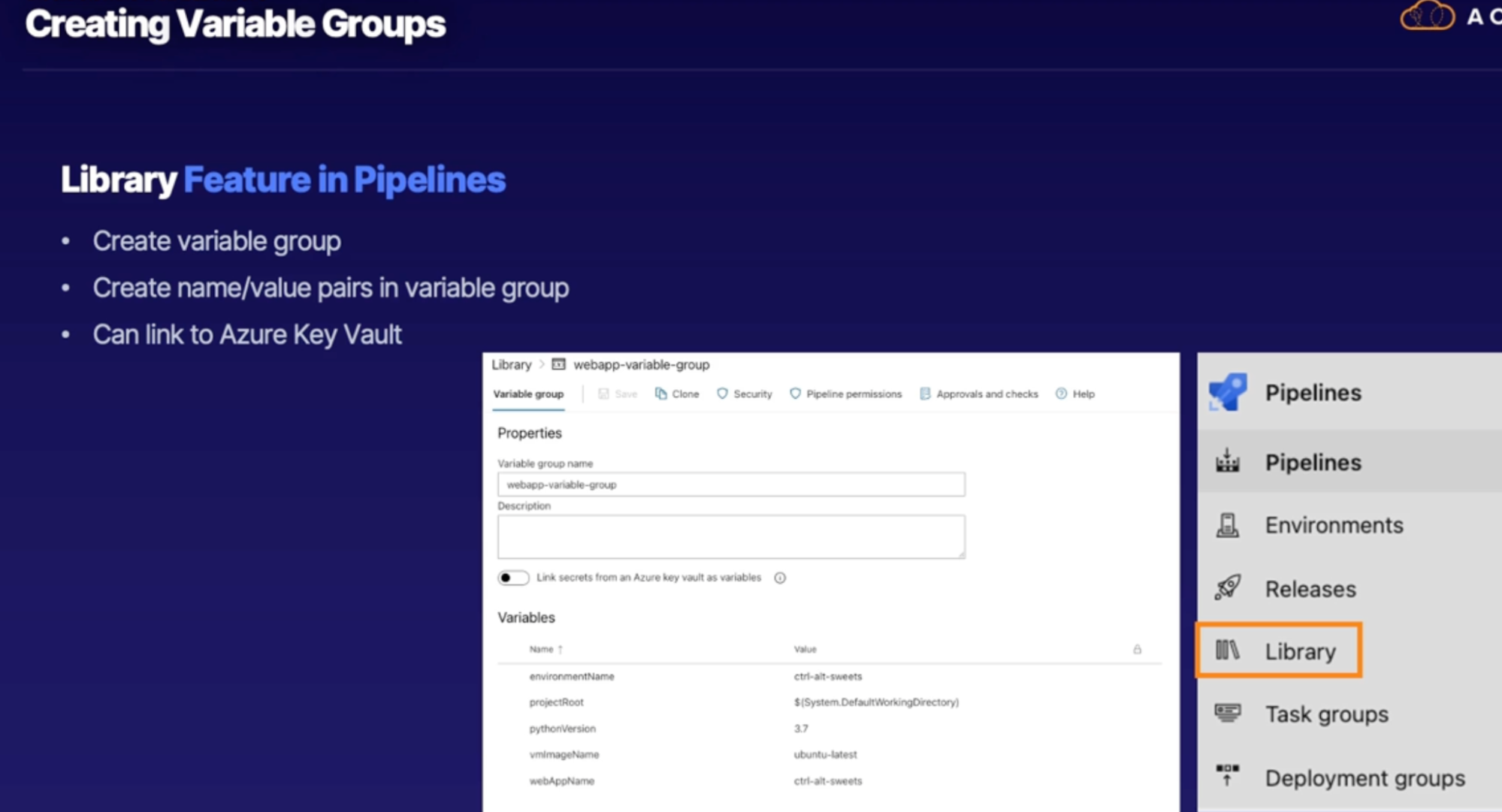

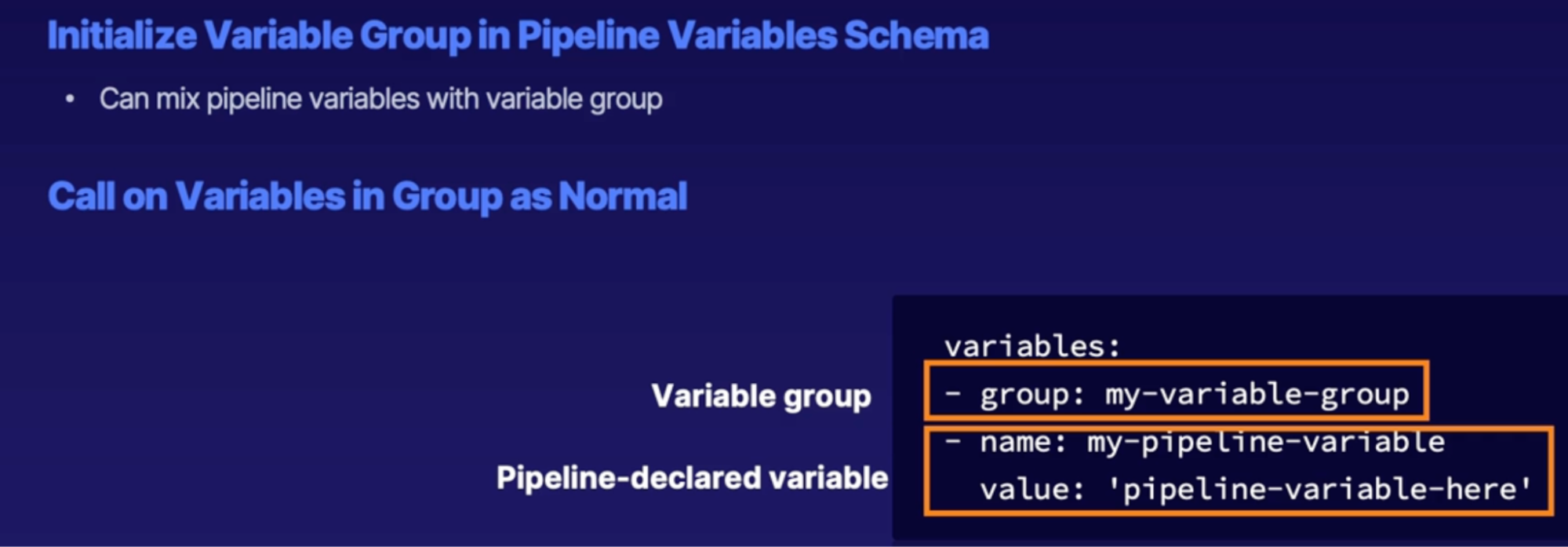

Incorporating valuable groups 125

Variable group purpose 125

Pipeline variables 125

Creating variable groups 125

Using variable groups 127

Demo 127

Summary 127

Summary 127

Chap - 13: Designing an application infrastructure management strategy 128

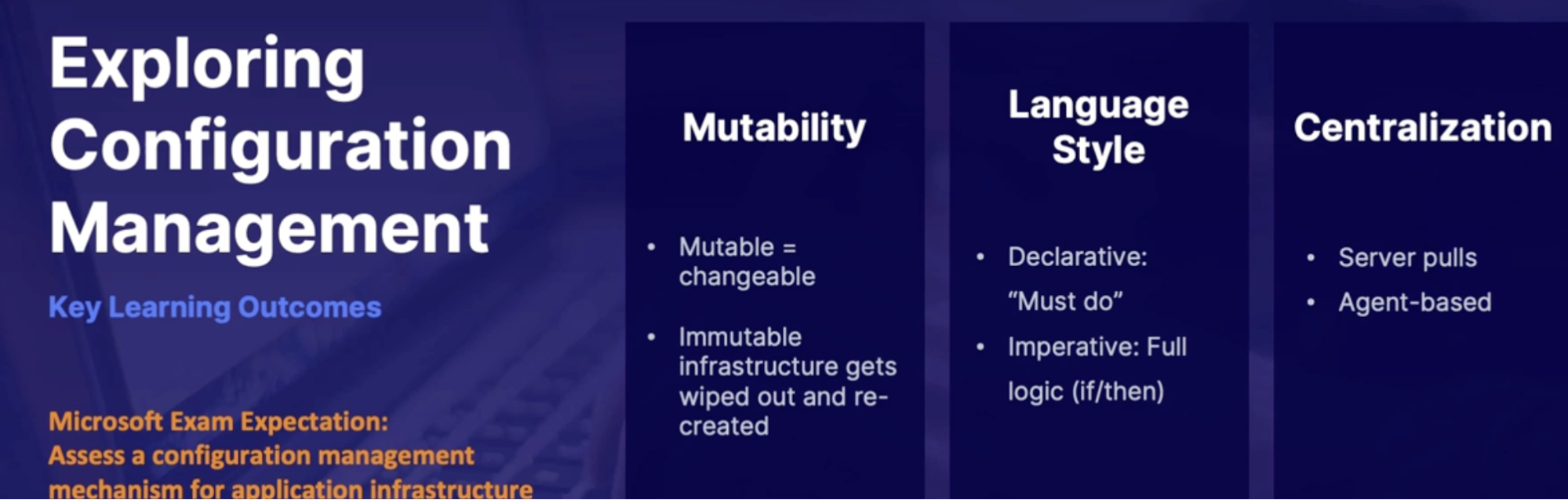

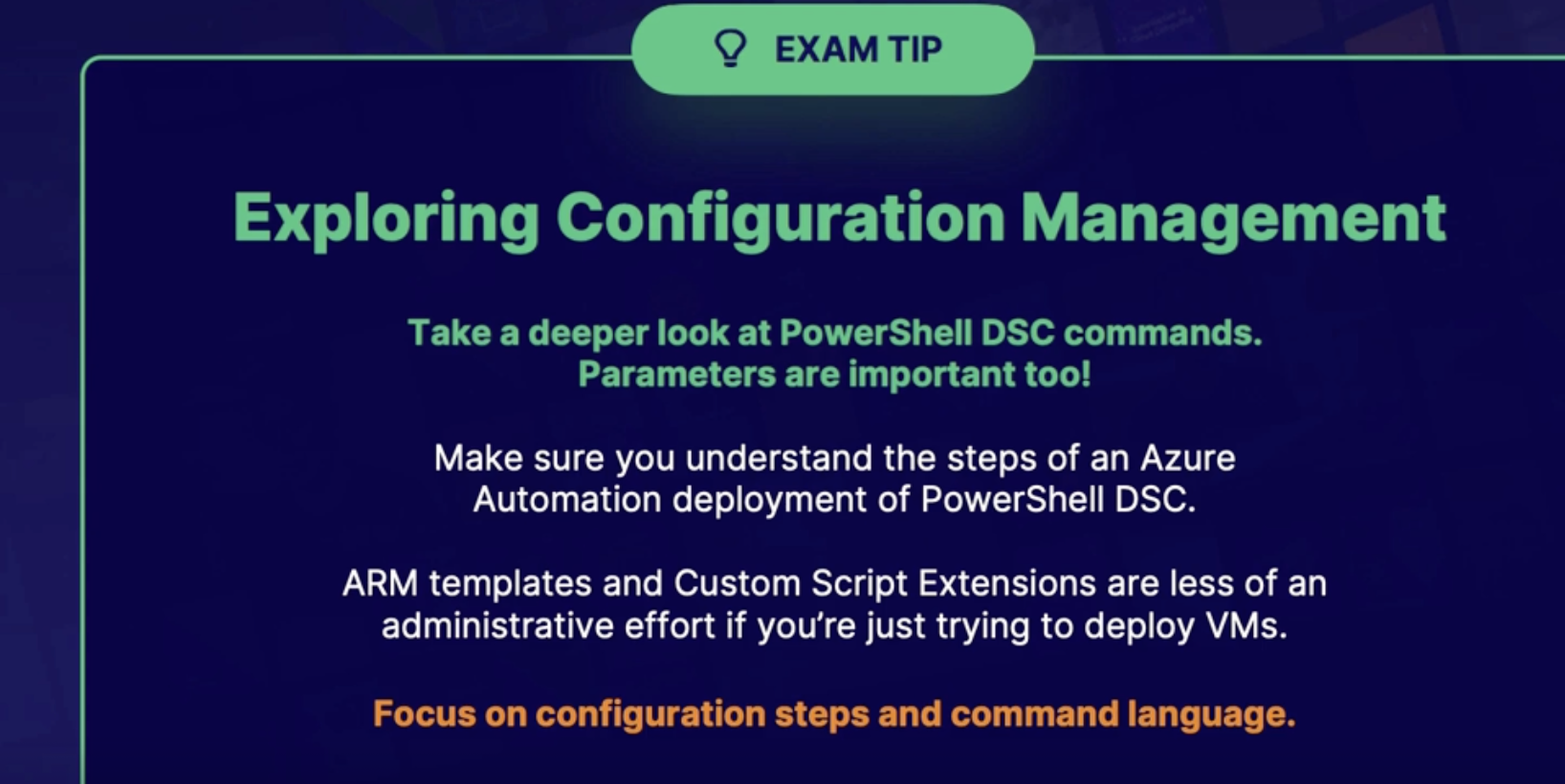

Exploring configuration management 128

What is configuration management 128

Assessing Configuration Management Mechanism 128

1. Mutable infrastructure 128

2. Imperative and declarative code 129

3. Abstraction 129

4. Simplified code process 130

Centralization 130

Agent-based management 131

Summary 131

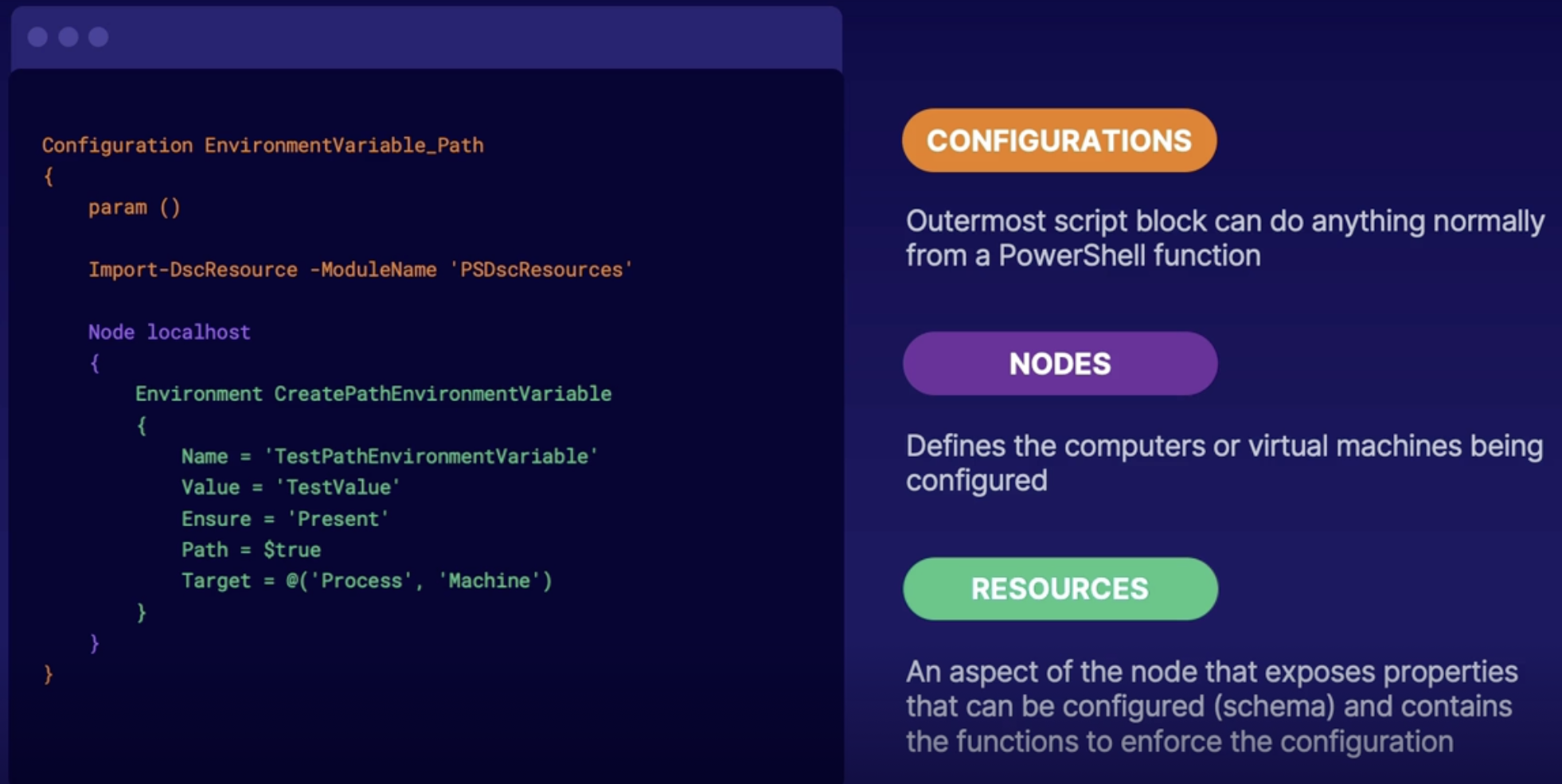

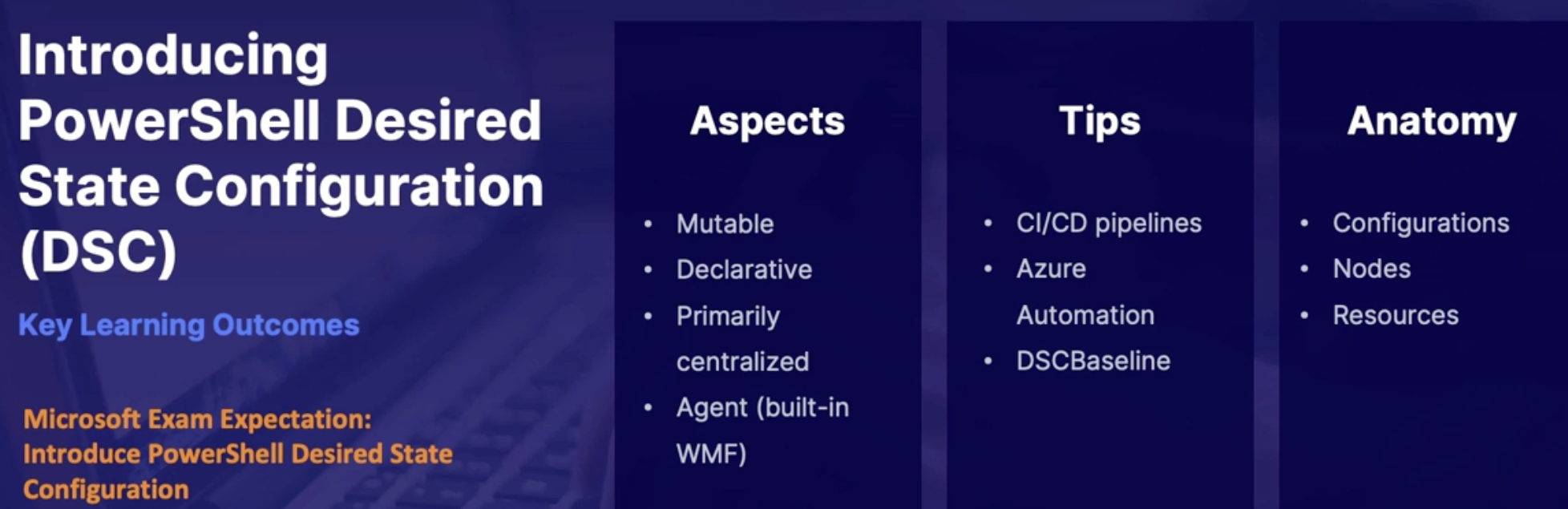

Introducing PowerShell Desired State Configuration (DSC) 131

Aspect of PowerShell DSC 131

Important consideration of PowerShell DSC 132

Anatomy of PowerShell DSC 132

Summary 132

Implementing PowerShell DSC for app infrastructure 132

Primary uses for PowerShell DSC 132

Demo: Setup PowerShell DSC for DevOps pipeline 133

Summary 133

Summary 133

Lab create a CICD pipeline using PowerShell DSC 134

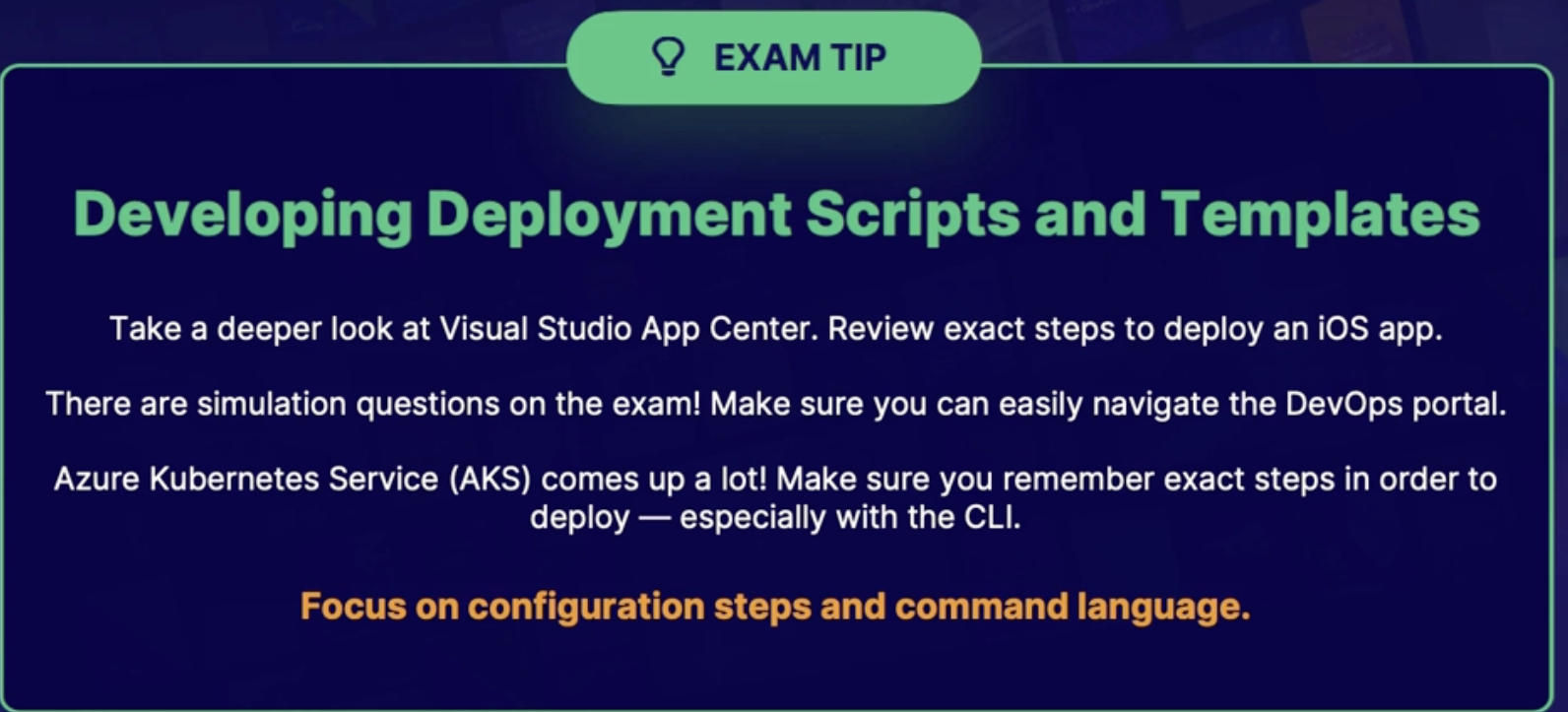

Chap - 14: Developing Deployment Scripts and Templates 134

Understanding deployment solution options 134

Deploying code 134

Deployment solution 135

Aspects of a deployment 135

Topics for evaluating deployment solutions 136

Summary 136

Exploring infrastructure as code: ARM vs. Terraform 136

Comparison 136

Code differences 136

Demo: ARM template in Azure pipeline 136

Demo: deploying terraform in Azure pipeline 137

Summary 138

Exploring infrastructure as code: PowerShell vs. CLI 138

Code differences 138

Comparison highlights 138

Demo: Deploying with both PowerShell and CLI 138

Summary 139

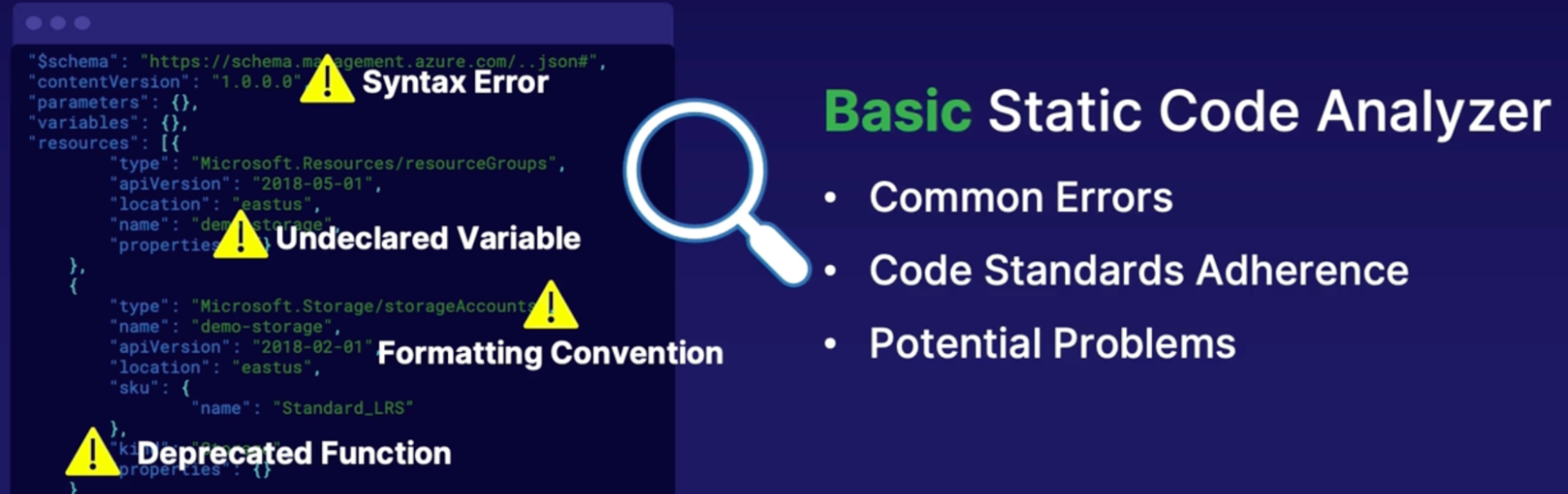

Linting ARM Templates 139

What is linting 139

Demo 139

Summary 139

Deploying a Database 140

What is DACPAC? 141

Demo 141

Summary 141

Understanding SQL Data Movement 141

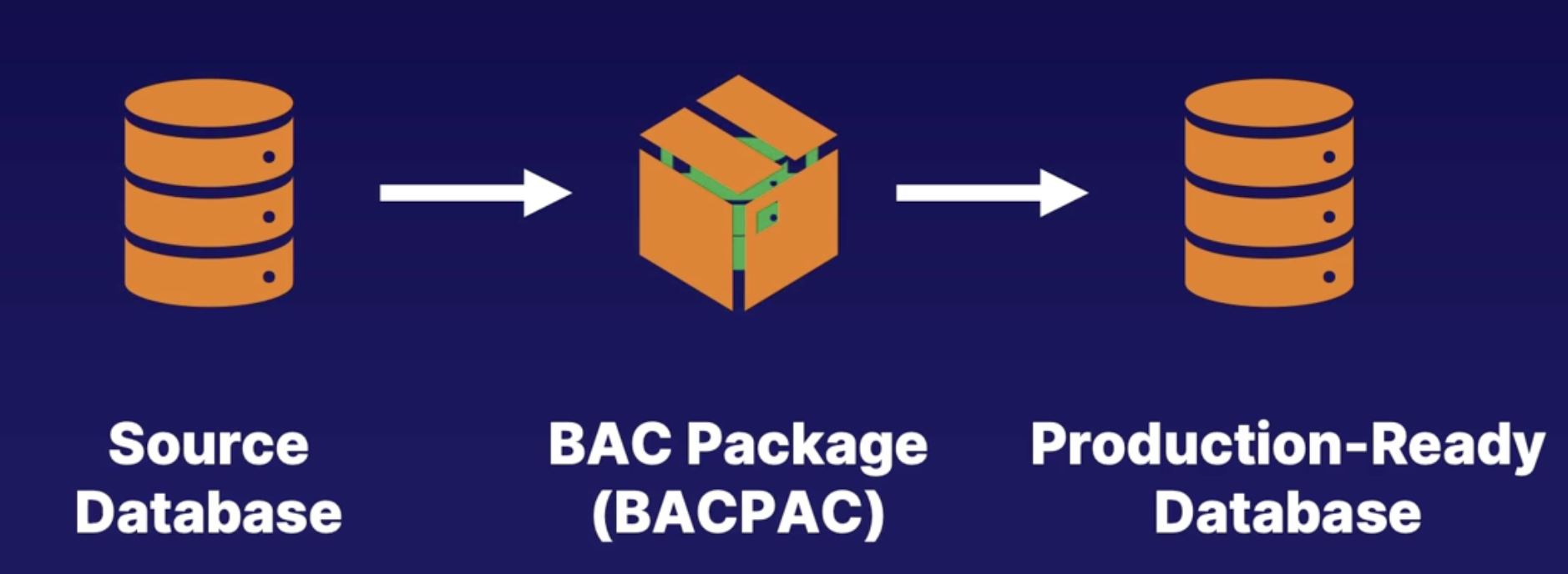

What is BACPAC? 141

Demo 142

Summary 142

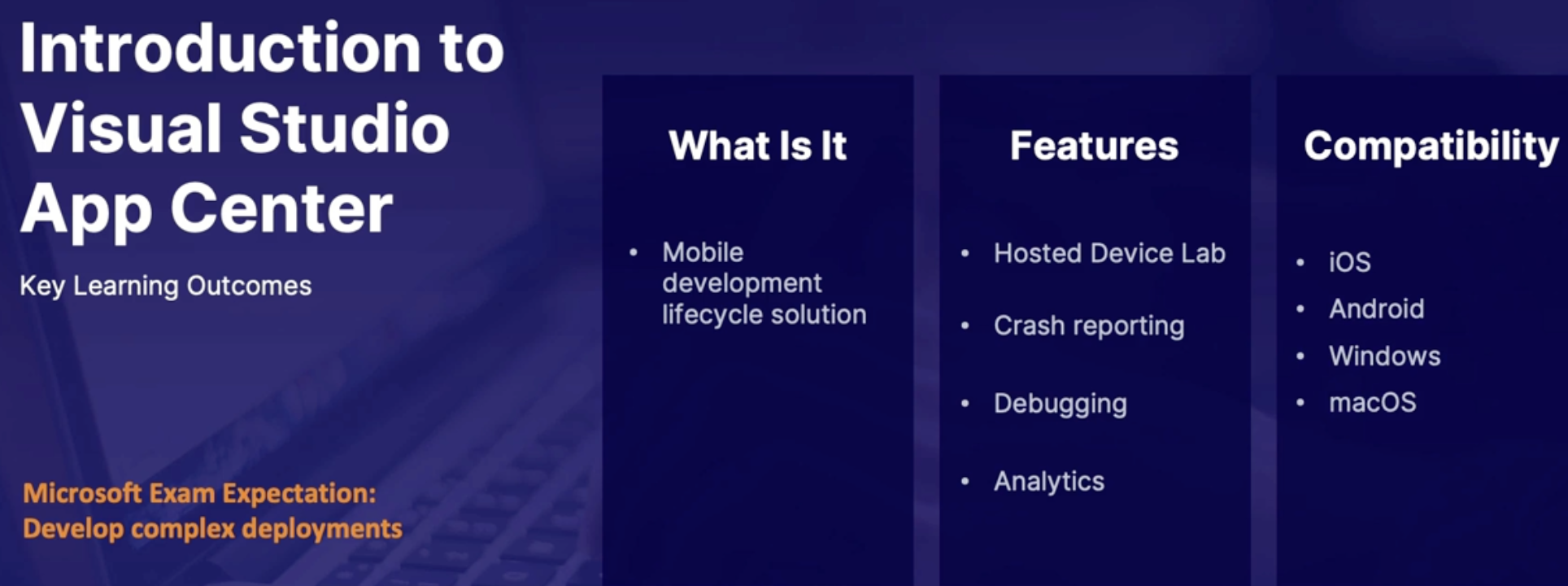

Introduction to Visual Studio App Center 143

What is App Center 143

Demo 143

Summary 143

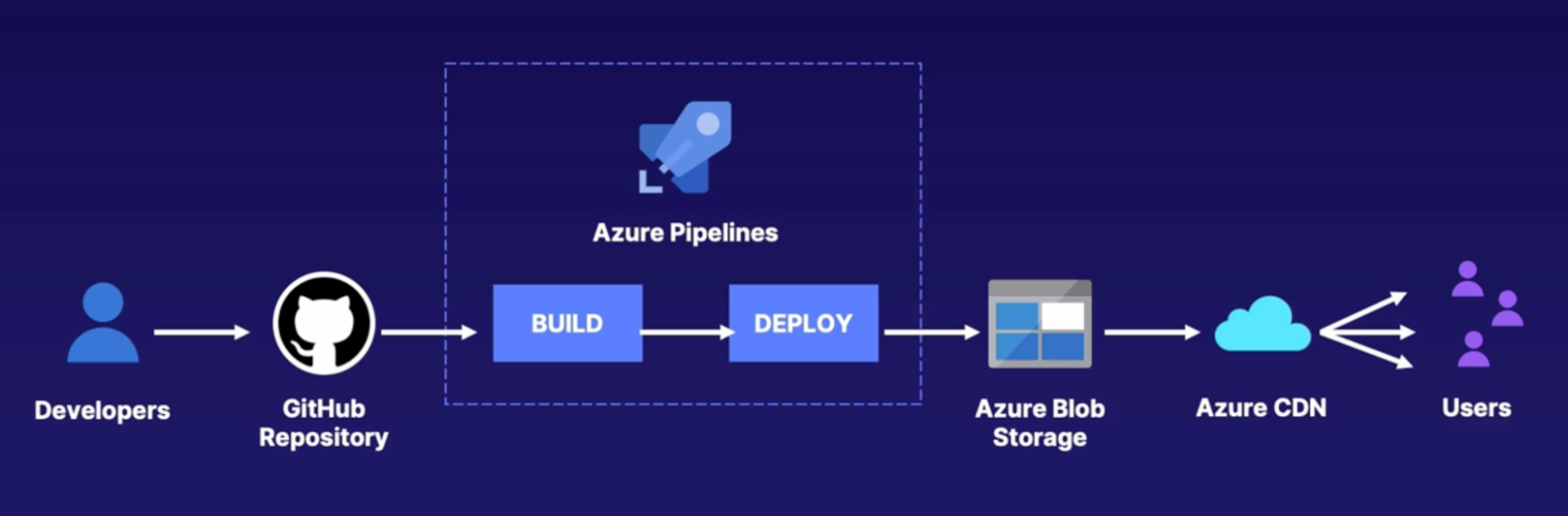

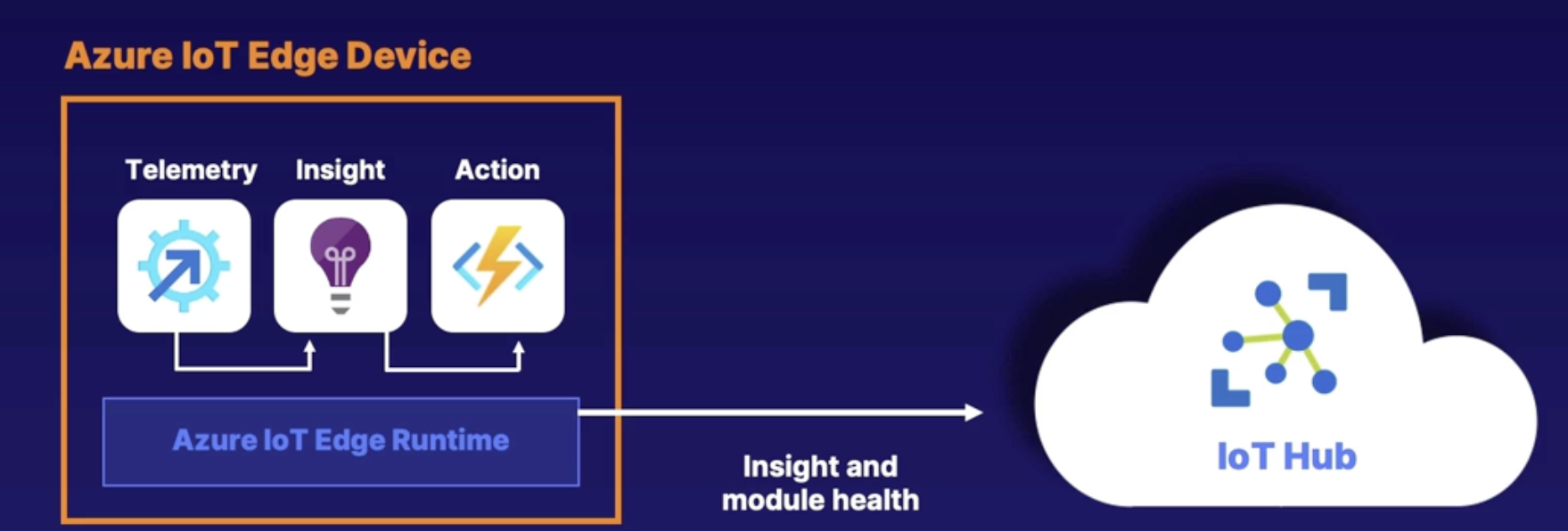

Exploring CDN and IOT deployments 143

Azure CDN deployment with DevOps pipeline 144

Azure IOT Edge deployment with DevOps pipeline 144

Demo 144

Summary 144

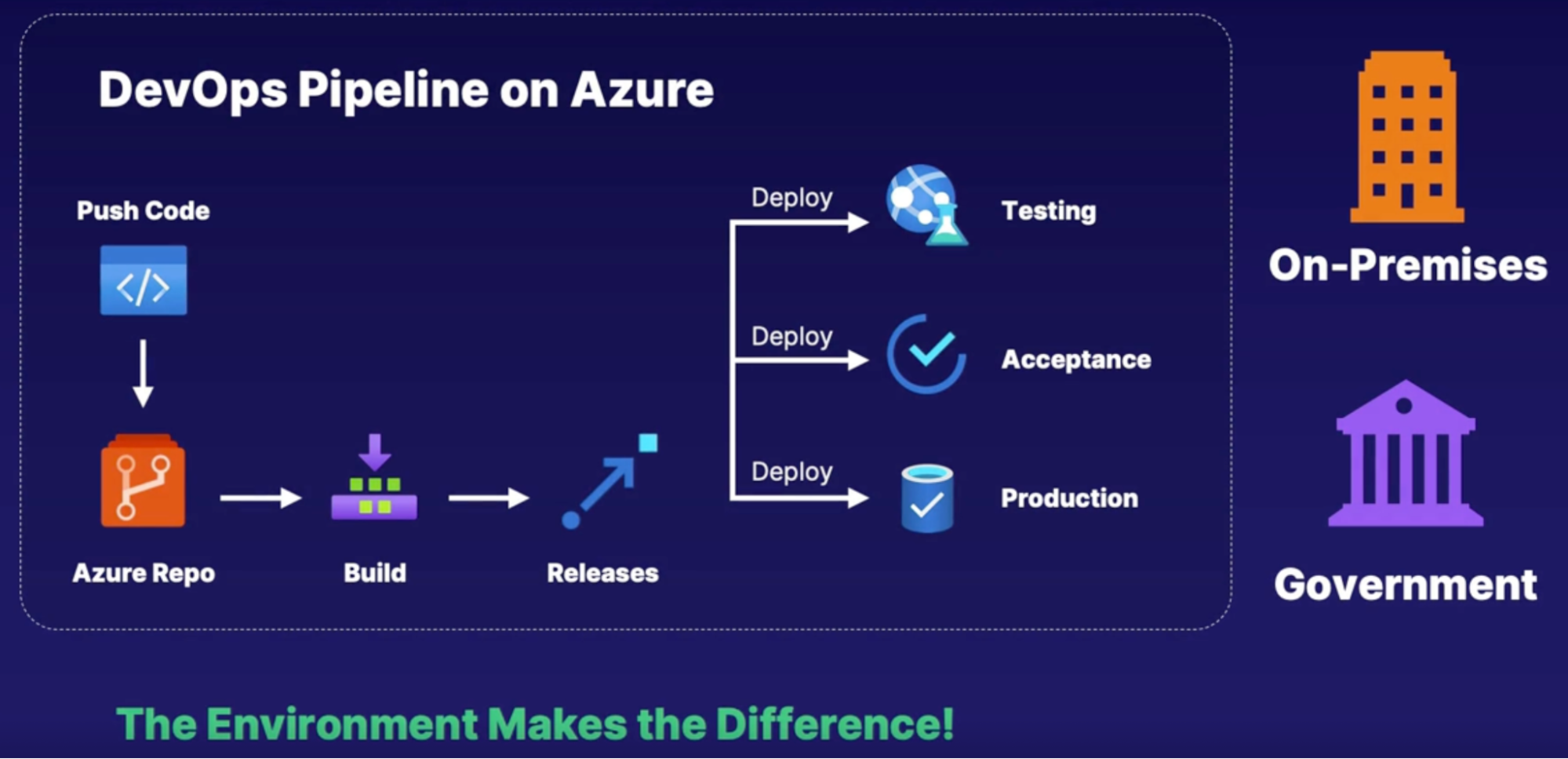

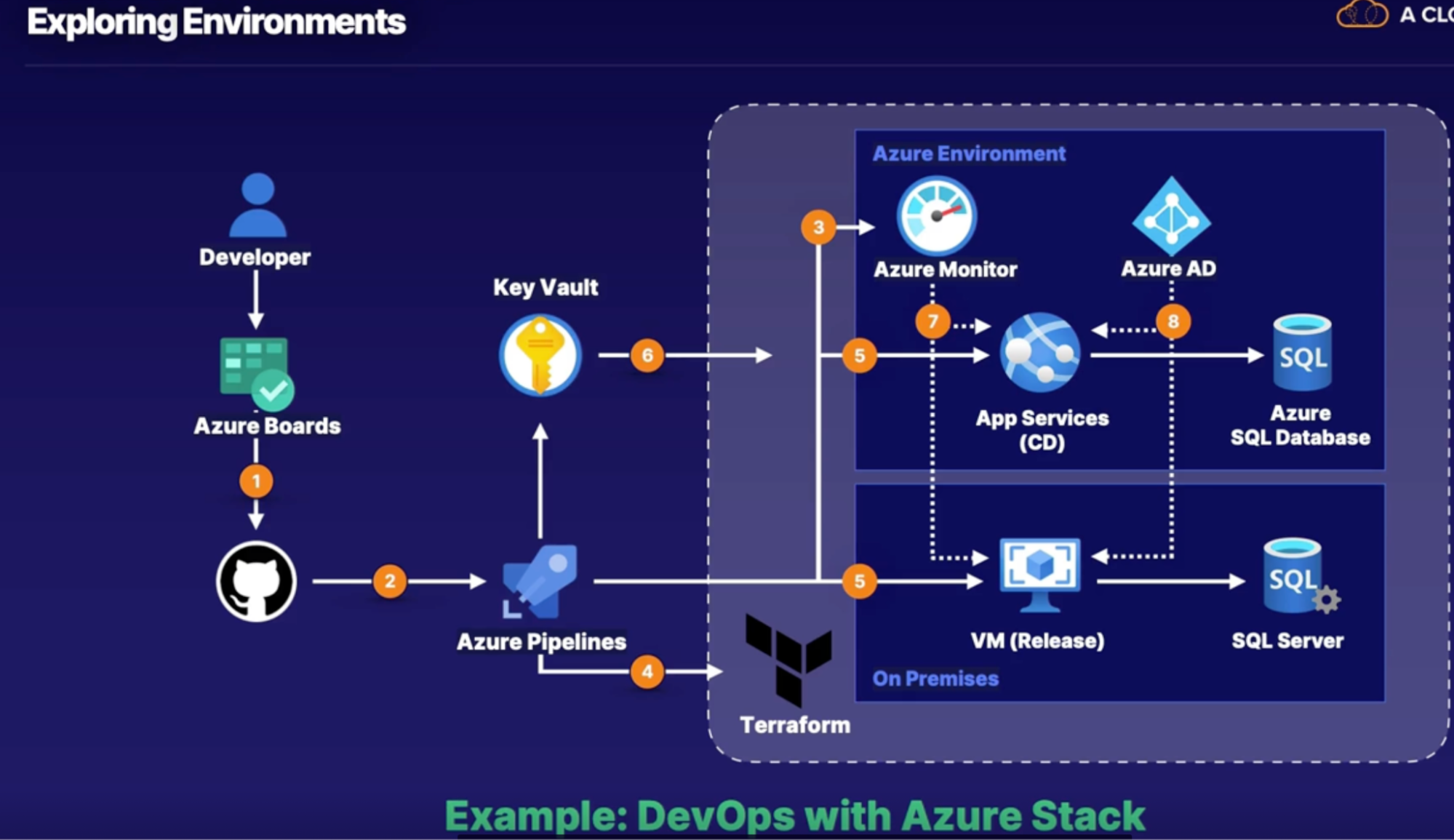

Understanding Azure Stack and sovereign cloud deployment 145

Exploring environments 145

Demo 145

Summary 146

Summary 146

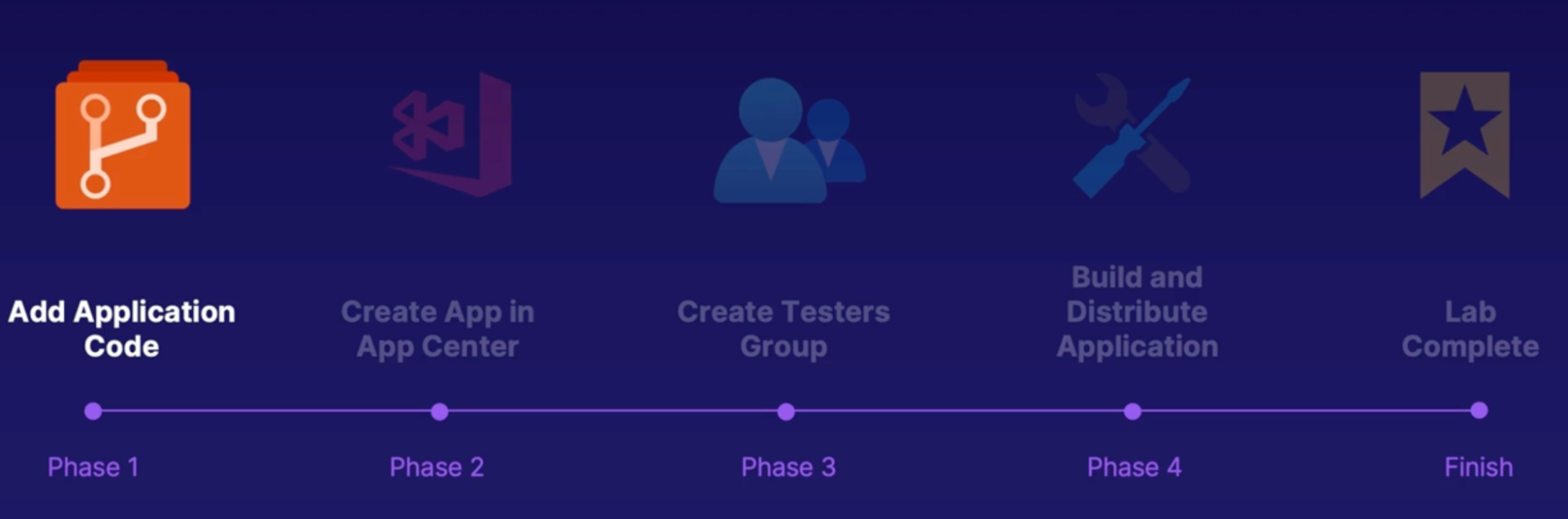

Lab: Build and Distribute an app in App center 146

Lab: Linting your ARM templates with Azure pipelines 150

Lab: Building infrastructure with Azure pipeline 151

Lab: Deploy a python app to an AKS cluster using Azure pipeline 151

Chap - 15: Implementing an Orchestration Automation Solution 152

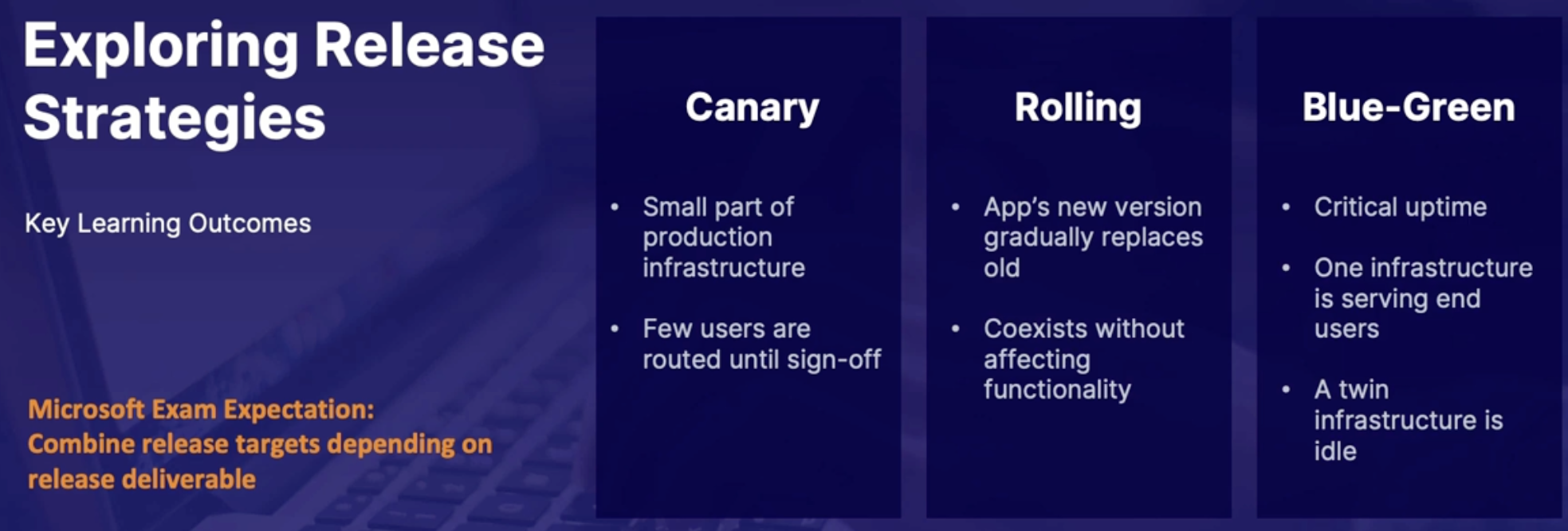

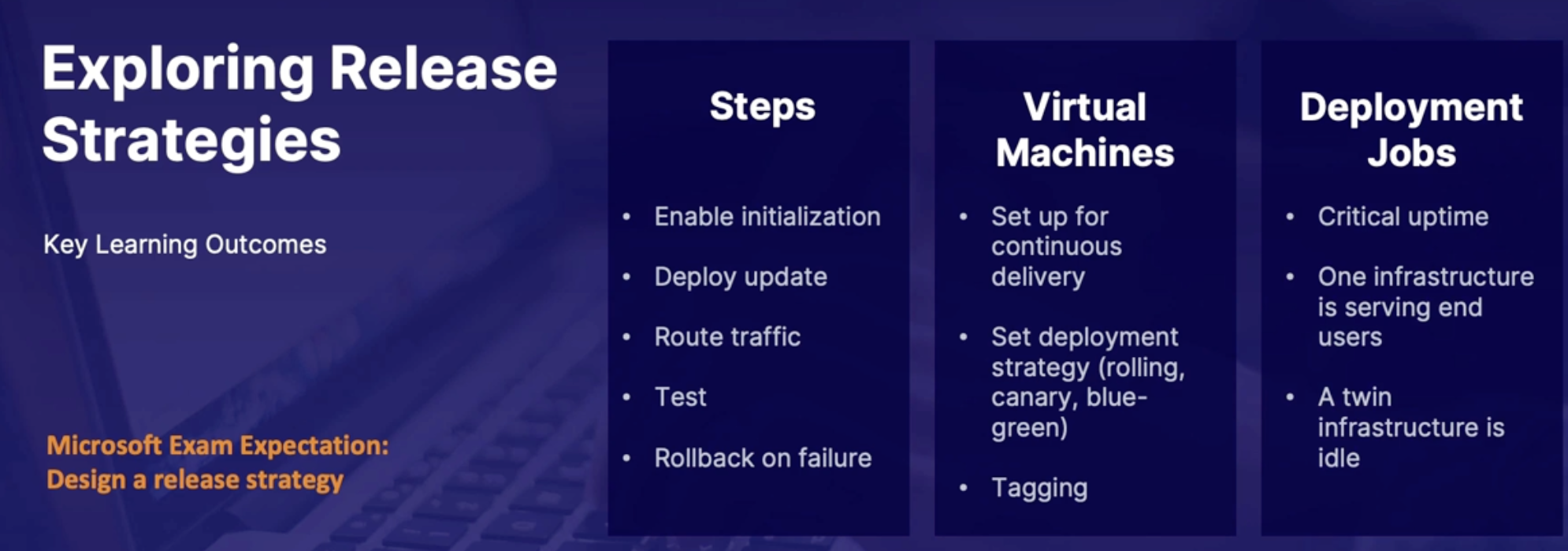

Exploring release strategy 152

Canary deployment 152

Rolling deployment 152

Blue/Green deployment 152

Summary 153

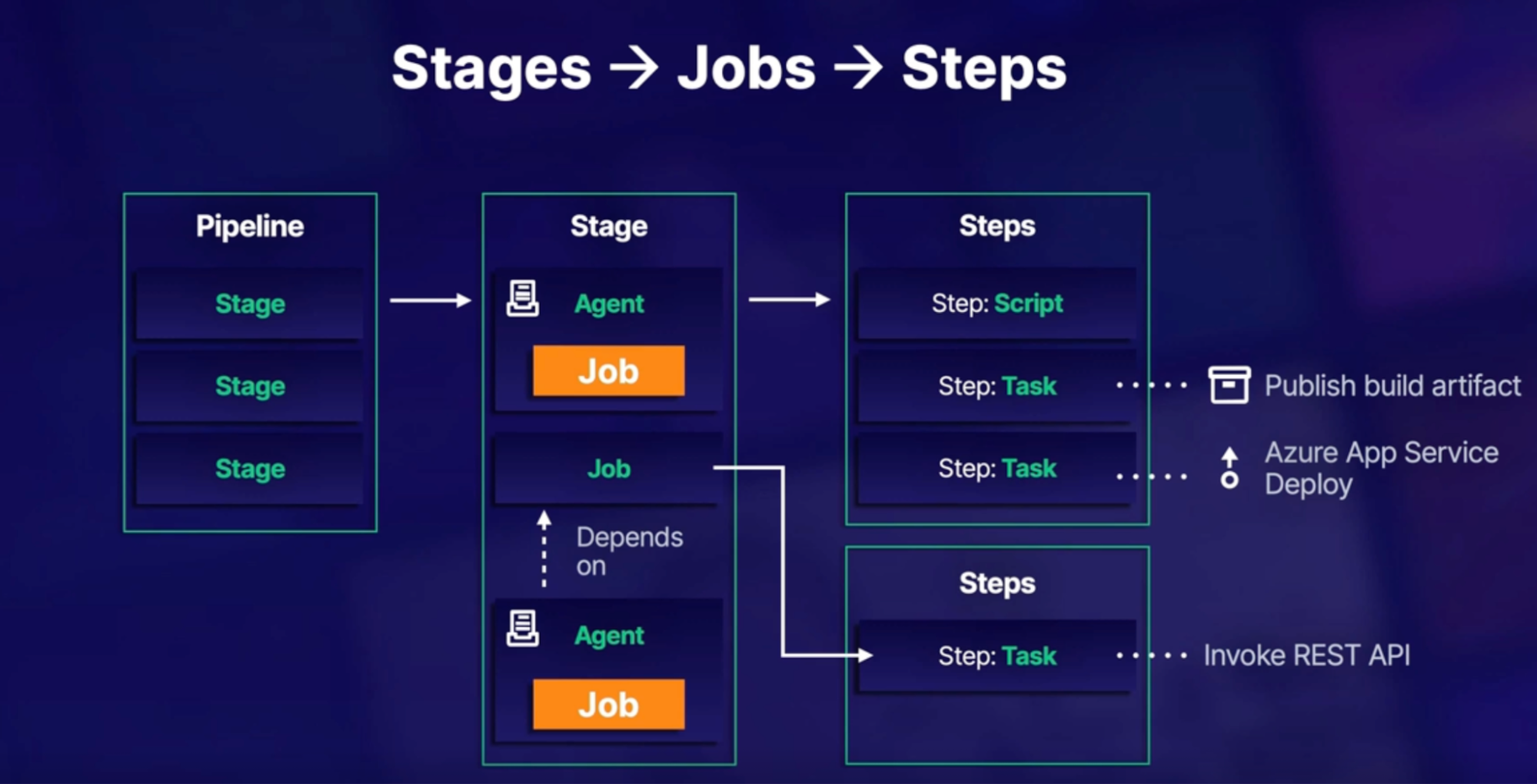

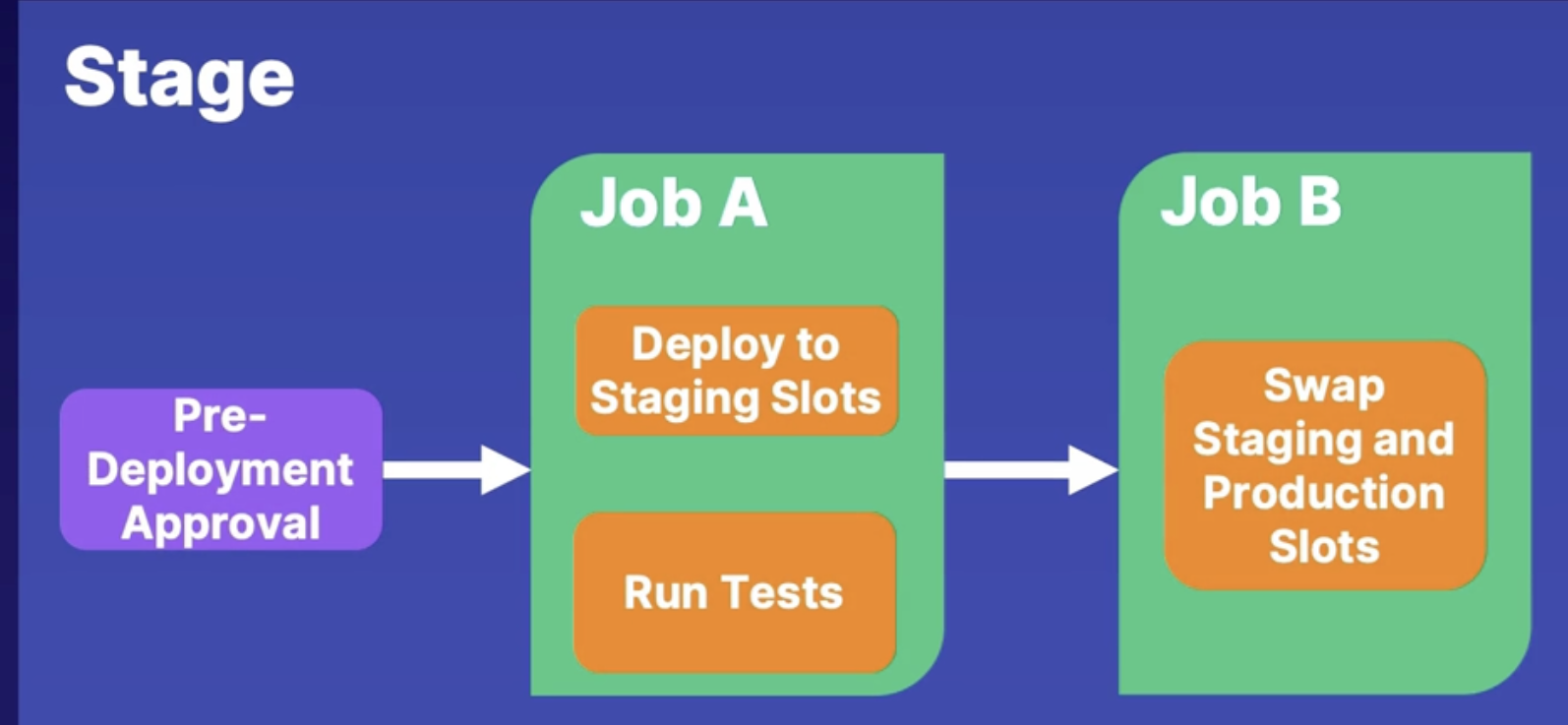

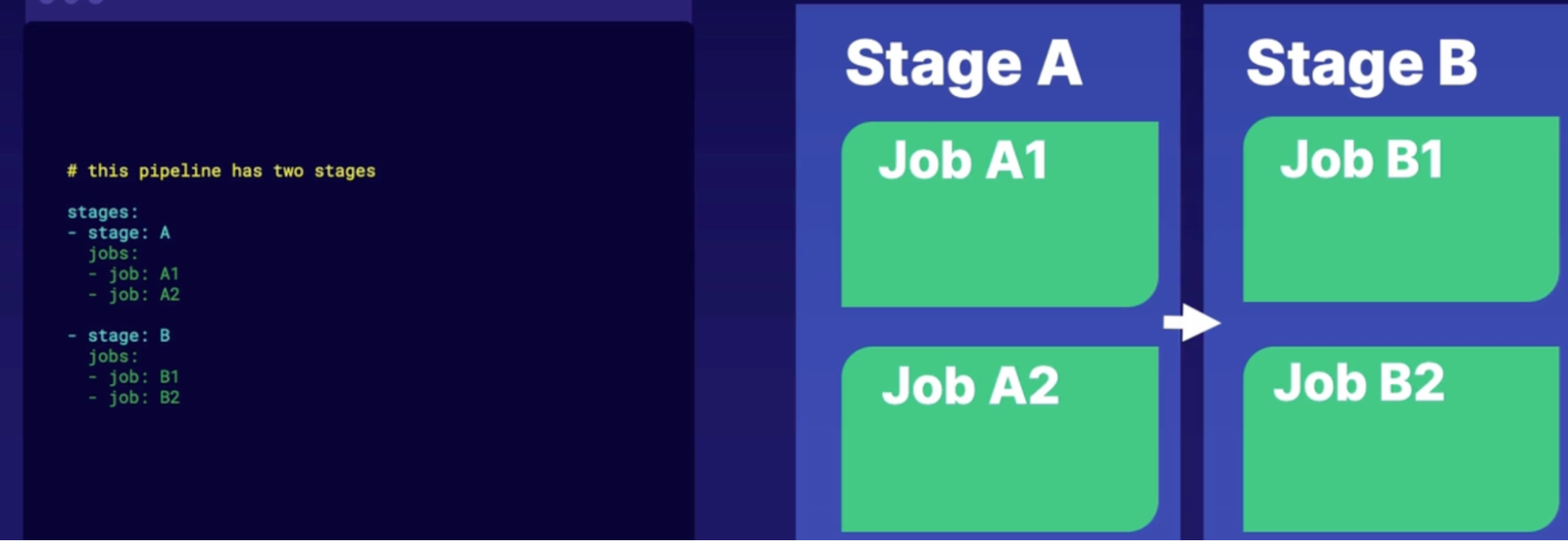

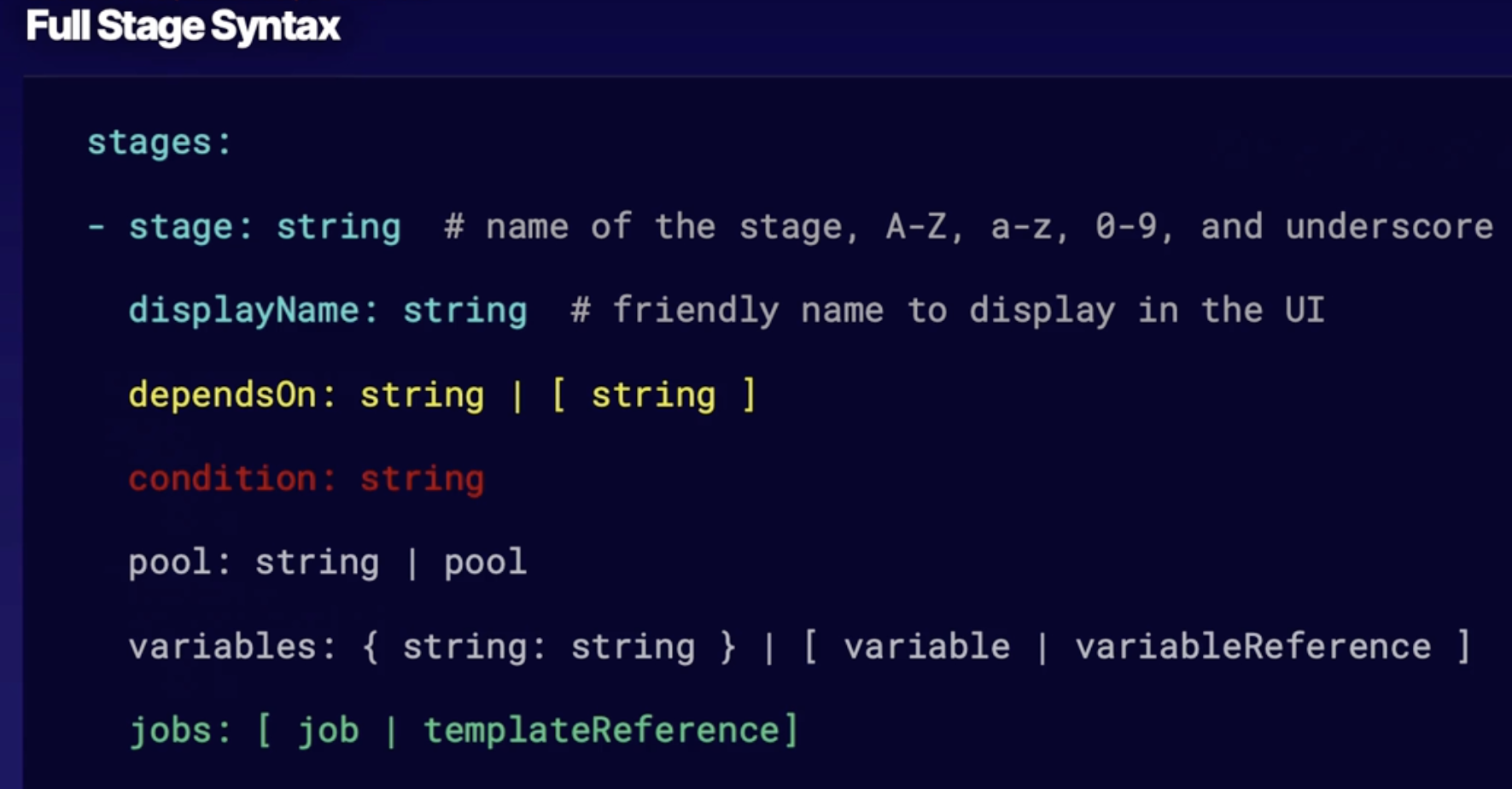

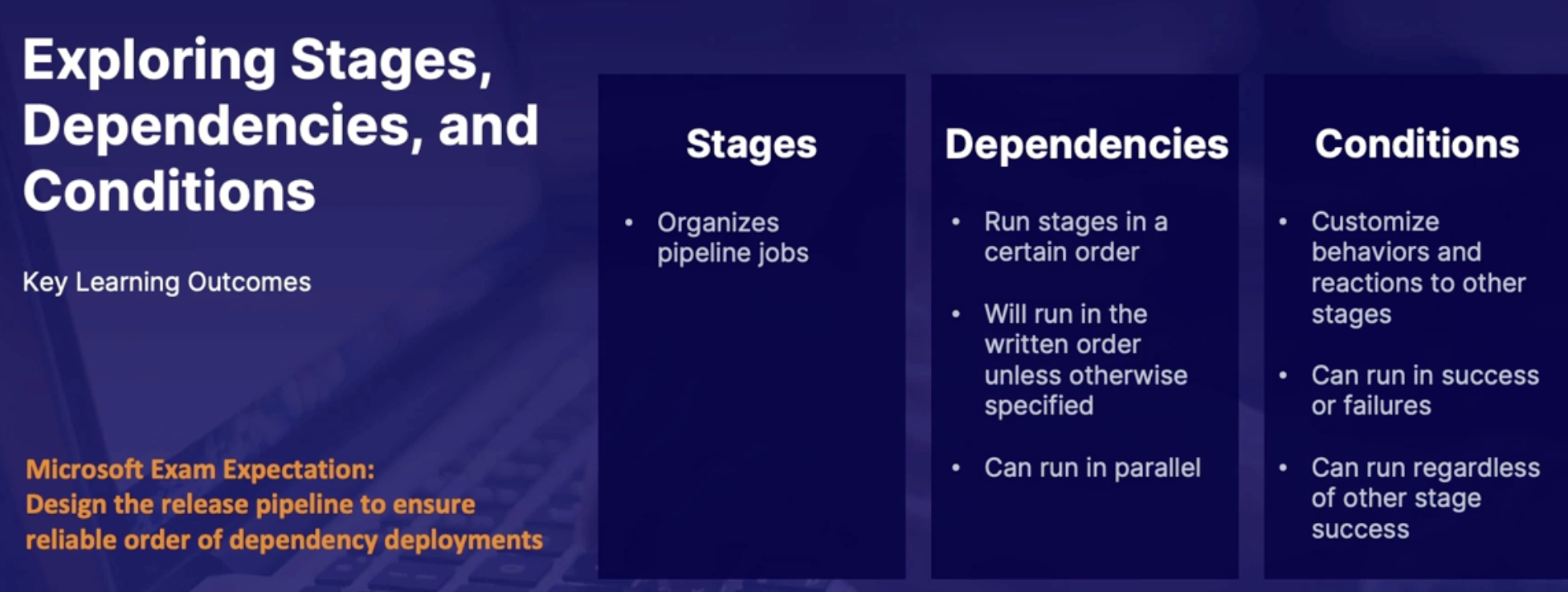

Exploring stages, dependencies and conditions 153

Release pipeline stage anatomy 153

Stages 153

Dependencies 154

Conditions 154

Full stage syntax 154

Summary 154

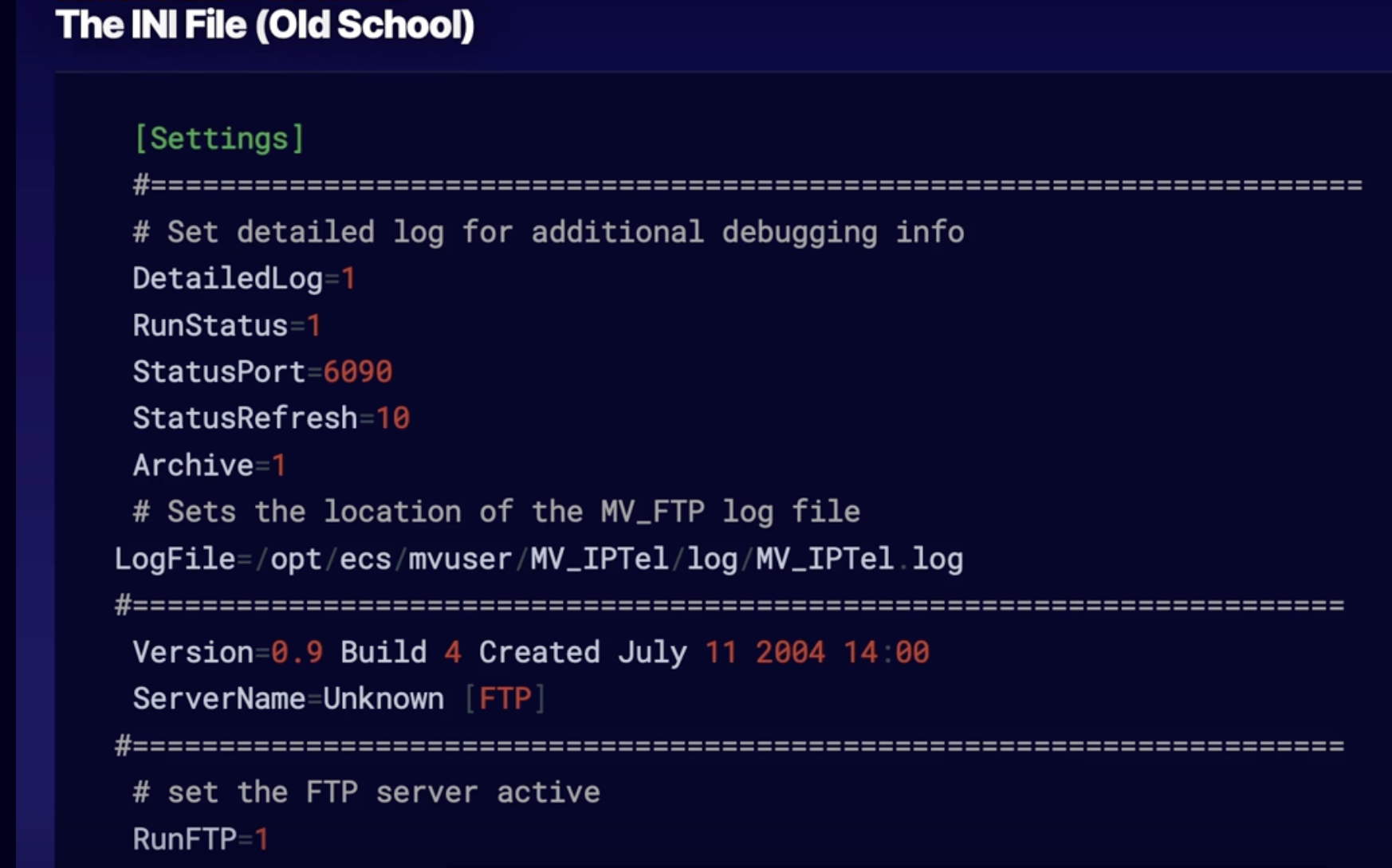

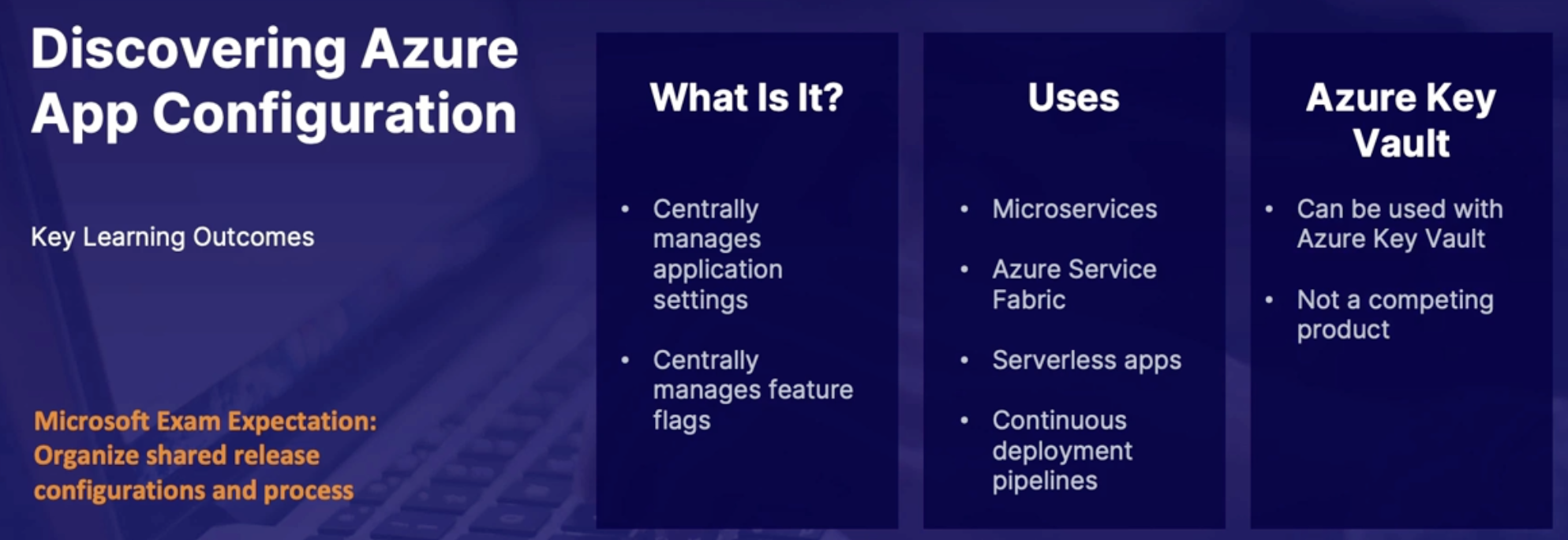

Discovering Azure app configuration 155

The INI File 155

How can you deploy app configurations? 156

What is Azure app configuration 156

Azure app configuration benefits 156

Demo 157

Summary 157

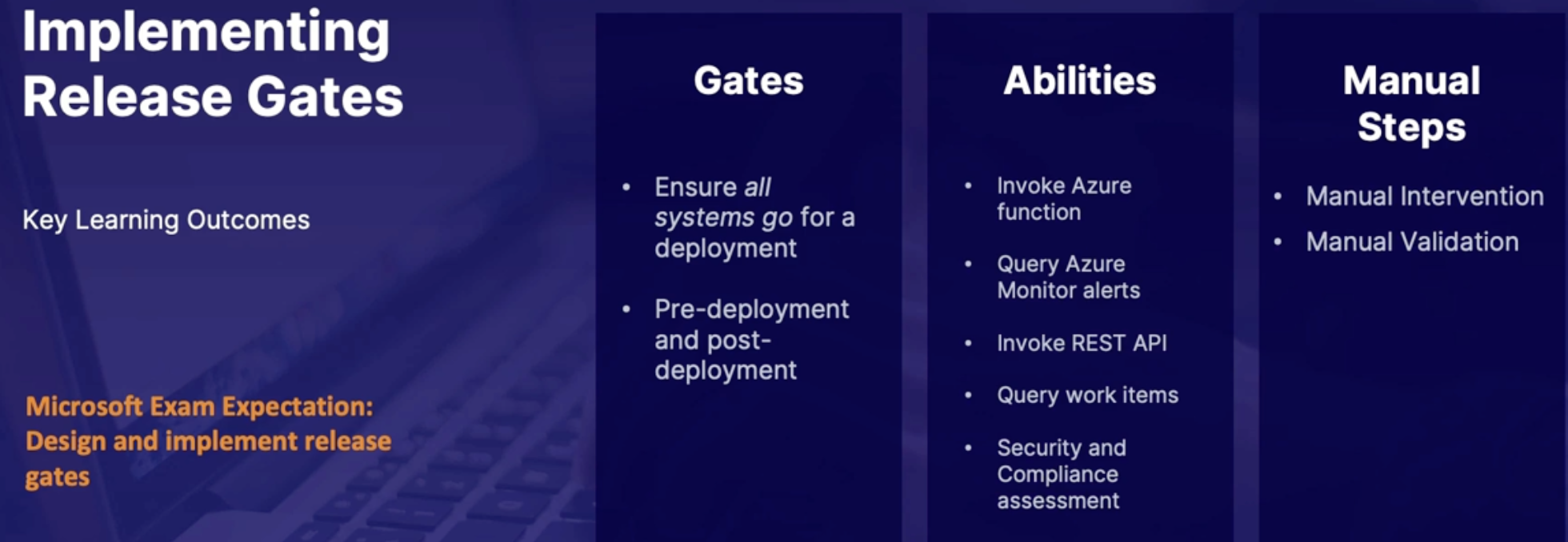

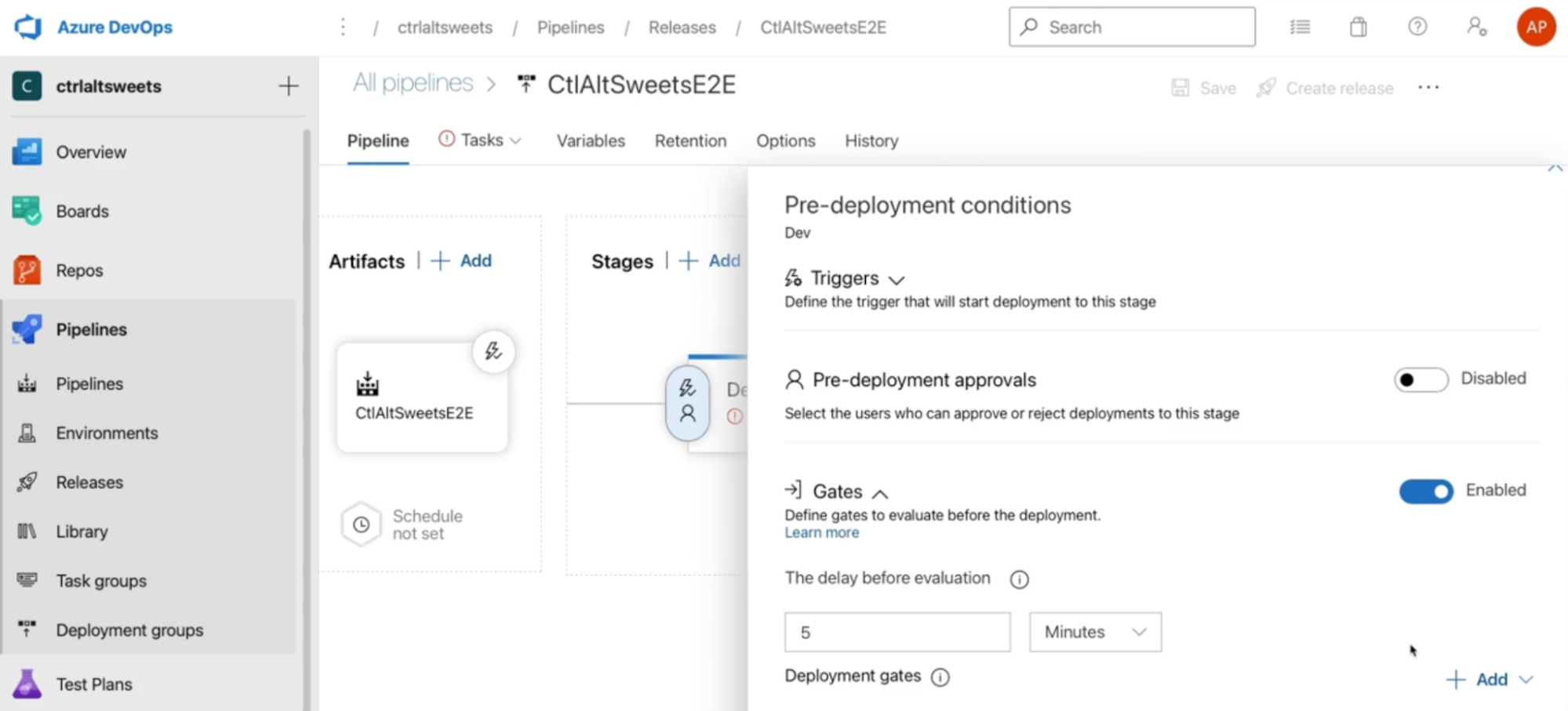

Implementing release gates 157

What are gates 157

Scenarios for gates 157

1. incident and issues management 157

Manual intervention and validations 158

Demo 158

Summary 158

Summary 158

Lab: Creating a multi-stage build in Azure pipeline to deploy a .NET app 160

Chap - 16: Planning the development environment strategy 160

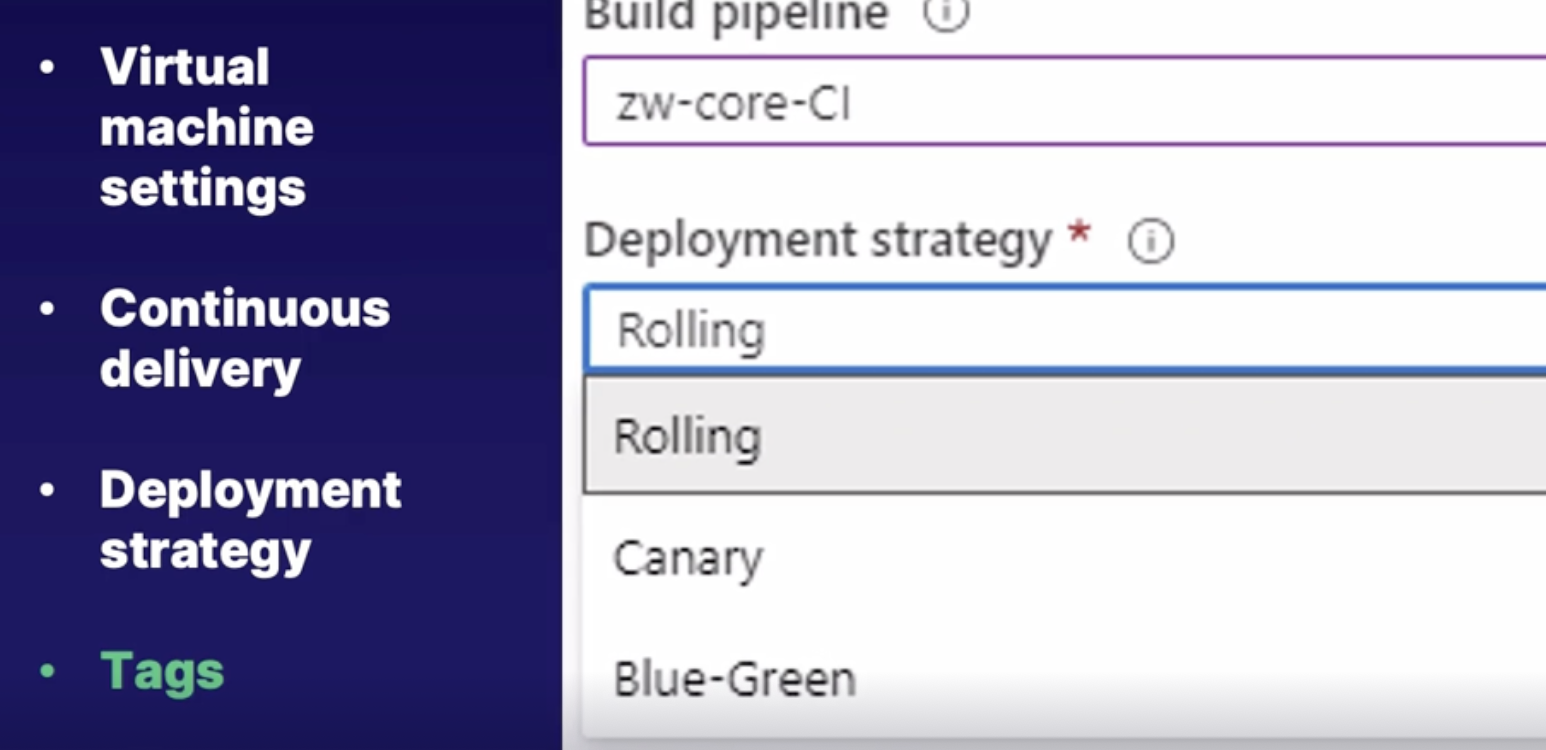

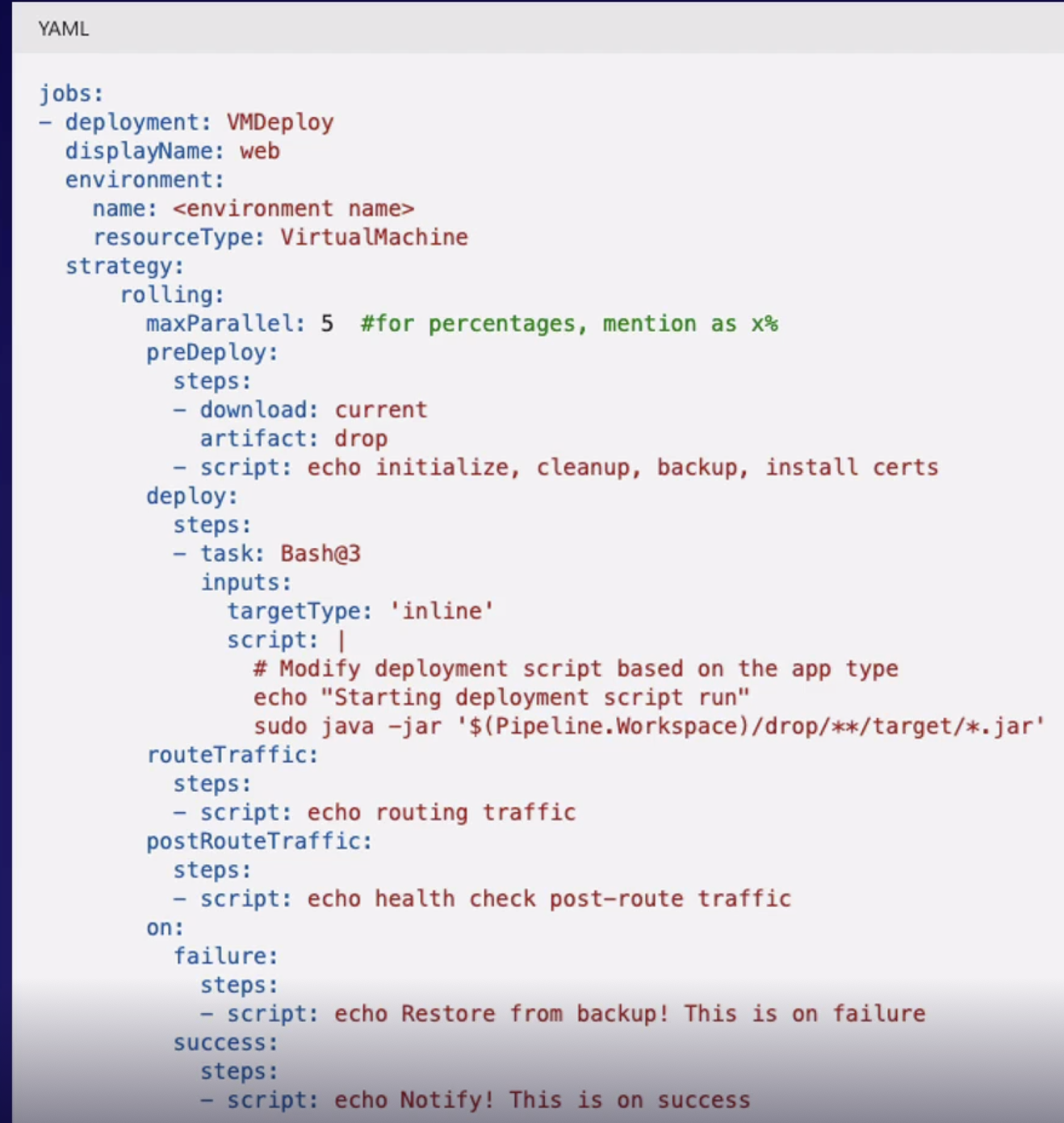

Exploring release strategies 160

Deployment strategies and steps 160

Deployment representations 161

Deployment releases using virtual machines 162

Deployment jobs 163

Summary 163

Implementing deployment slot releases 163

What are deployment slots 164

Demo 164

Summary 164

Implementing load balancer and traffic manager releases 164

Load balancer and traffic manager 164

Demo 164

Summary 165

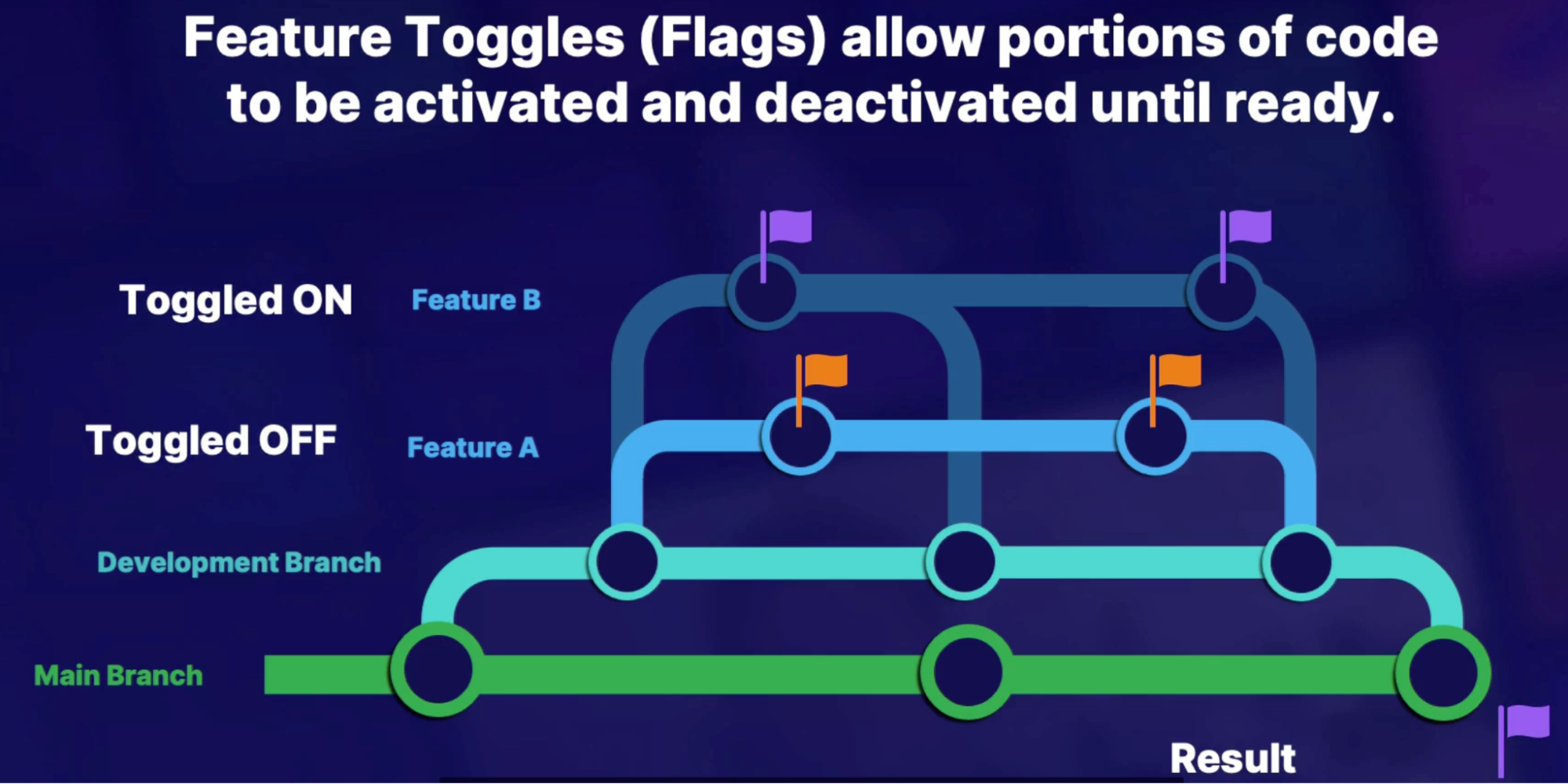

Feature toggles 165

Feature flag branching 165

Demo 165

Summary 165

Lab: Deploy a node JS app to a deployment slot in Azure DevOps 167

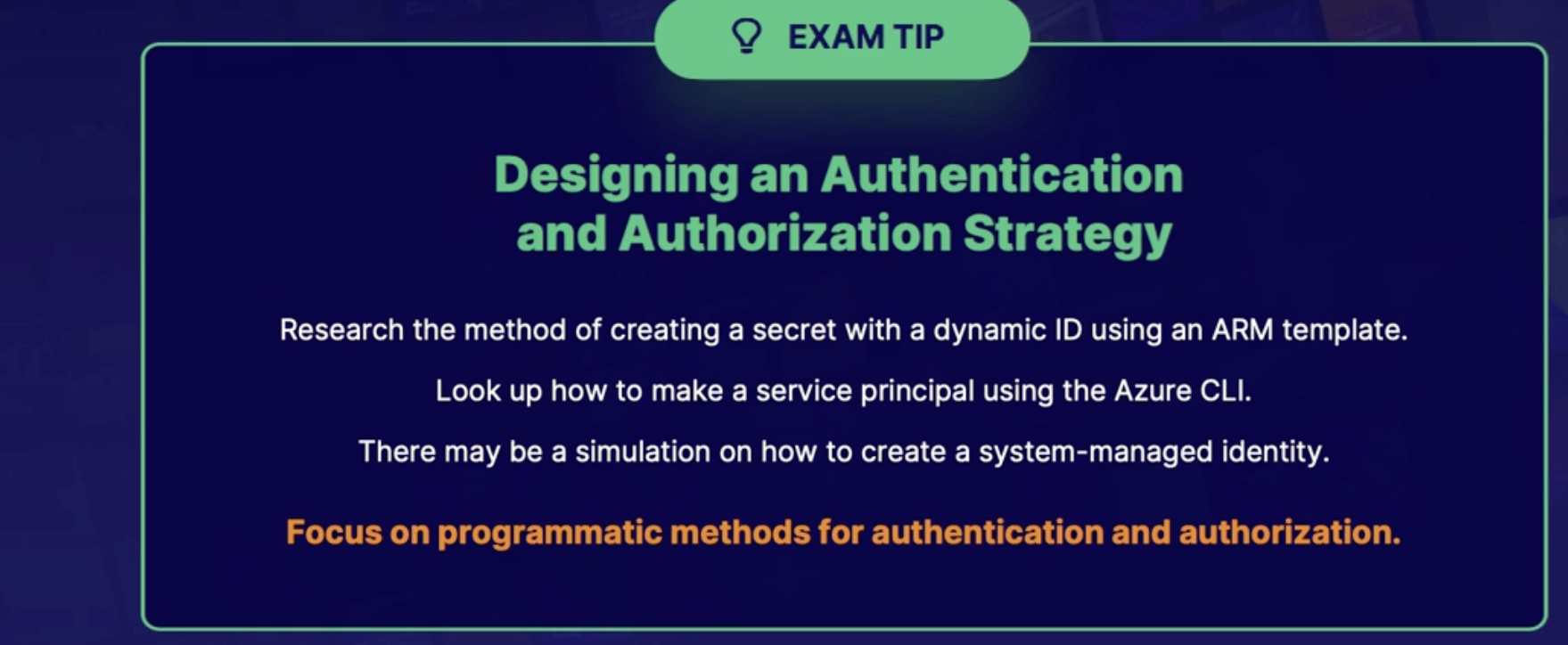

Chap - 17: Designing an Authentication and Authorization Strategy 167

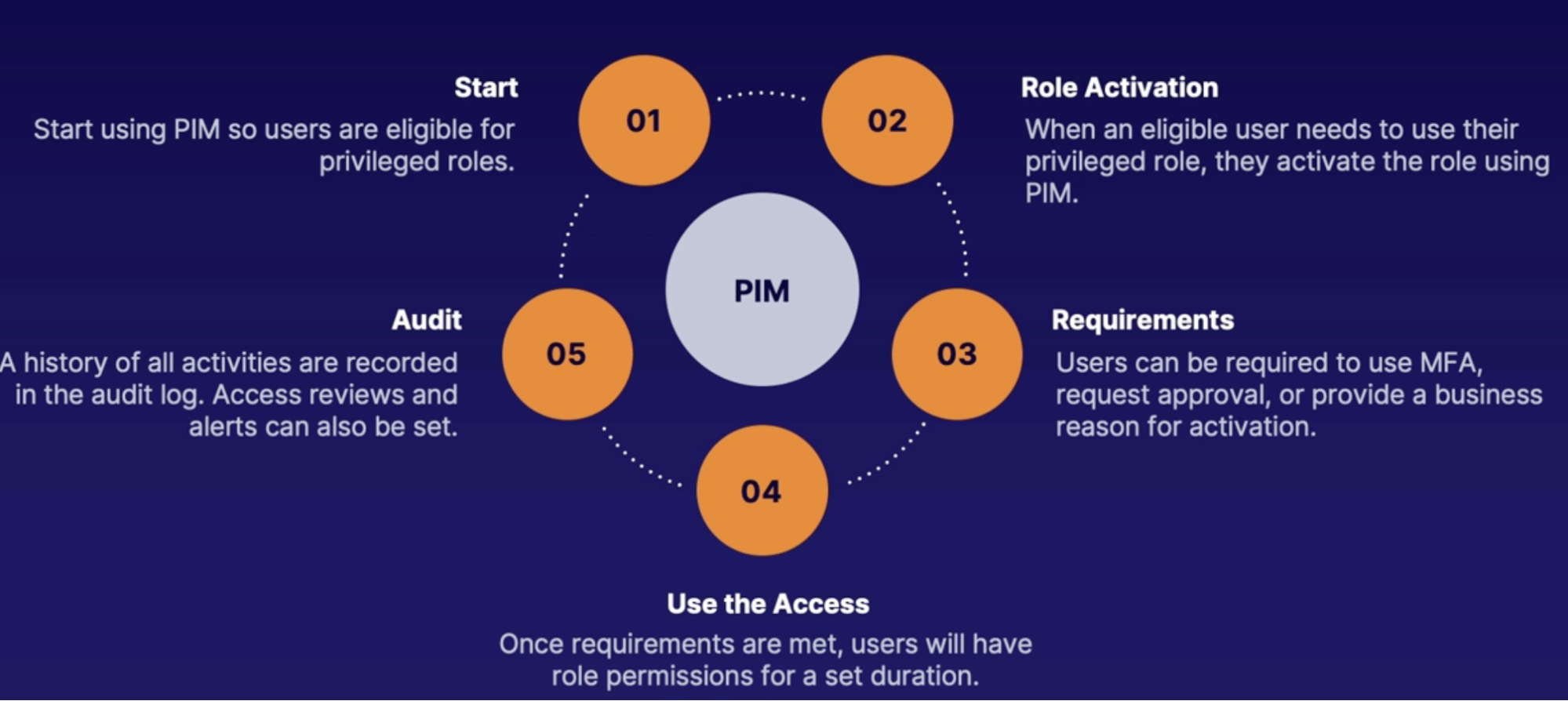

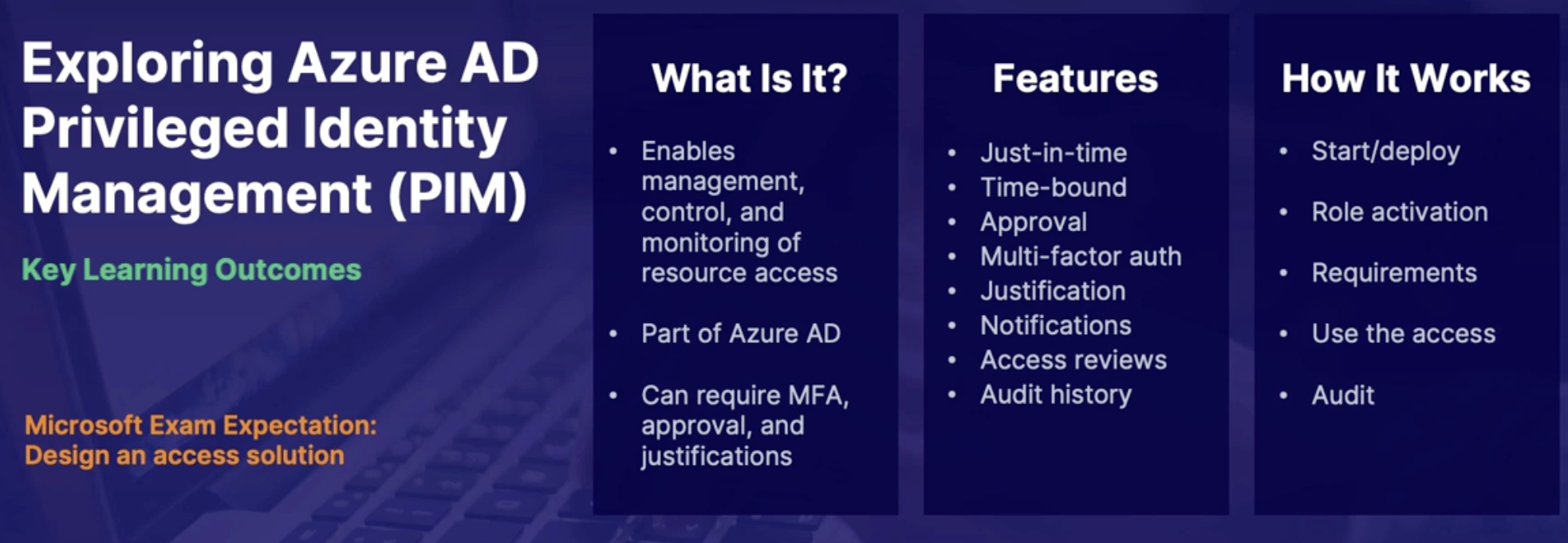

Azure AD Privileged Identity Management(PIM) 167

Why use Privileged Identity Management? 167

What is PIM? 167

What does it do? 168

How does it work? 168

Summary 168

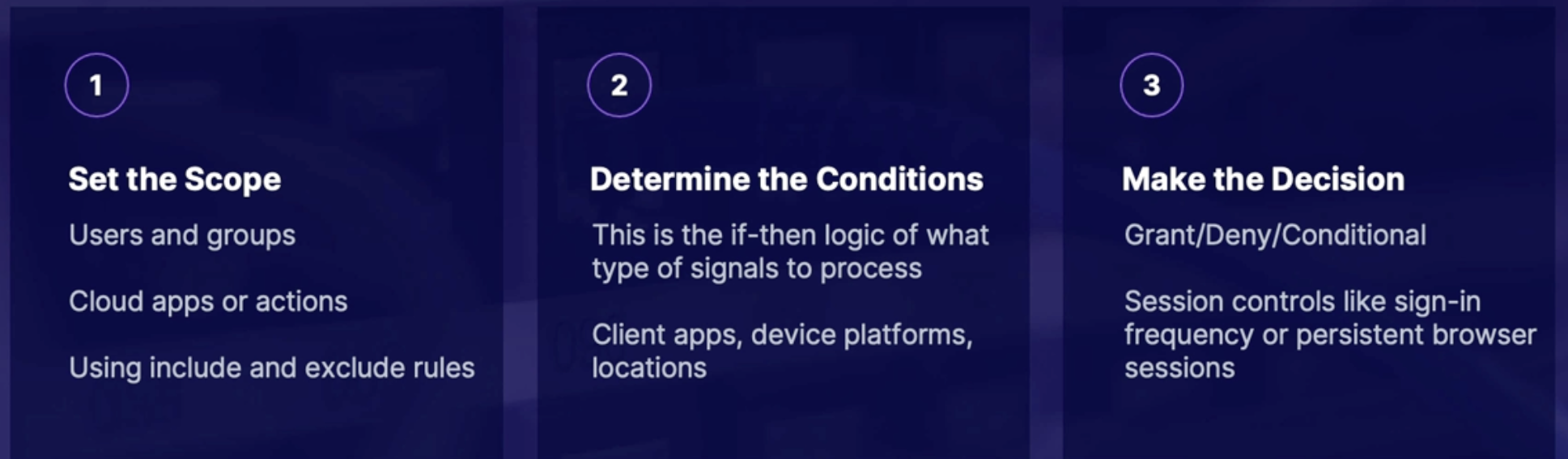

Azure AD conditional access 169

Why use conditional access 169

What is Azure AD conditional access 169

What does it do 169

How it works 169

Summary 169

Implementing multi factor authentication(MFA) 170

What is MFA 170

How it works & Available verification methods 170

Enabling multifactor authentication 170

Demo 170

Summary 170

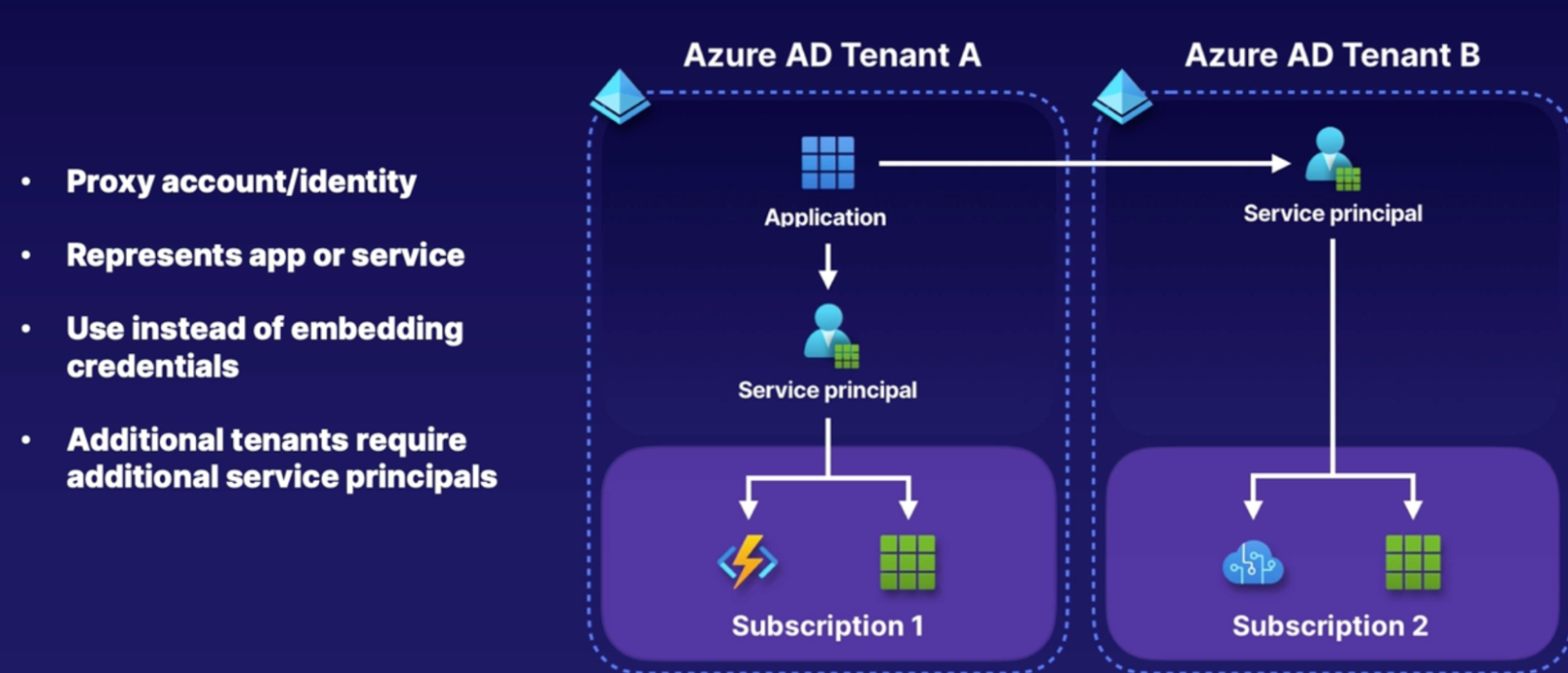

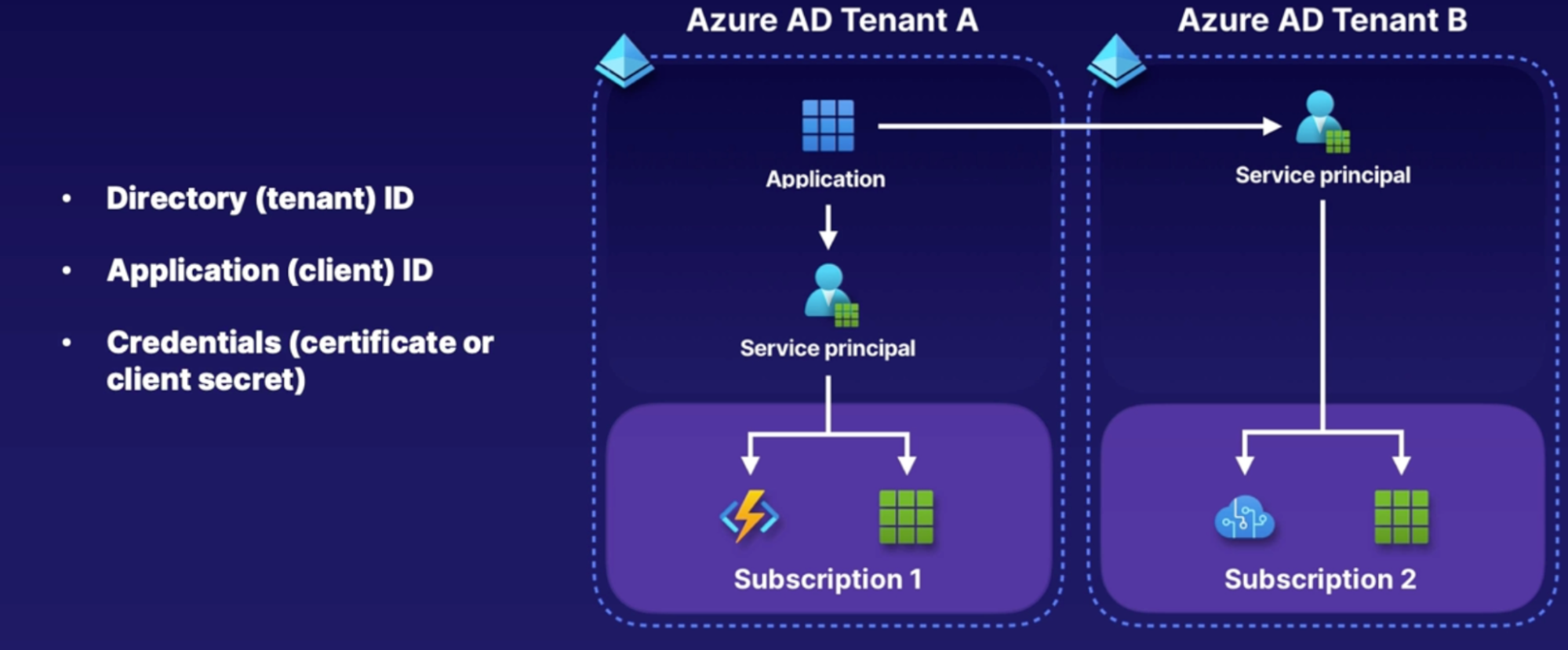

Working with service principals 170

Using service accounts in code 170

What are Azure service principles 171

How to access resources with service principals 171

Summary 171

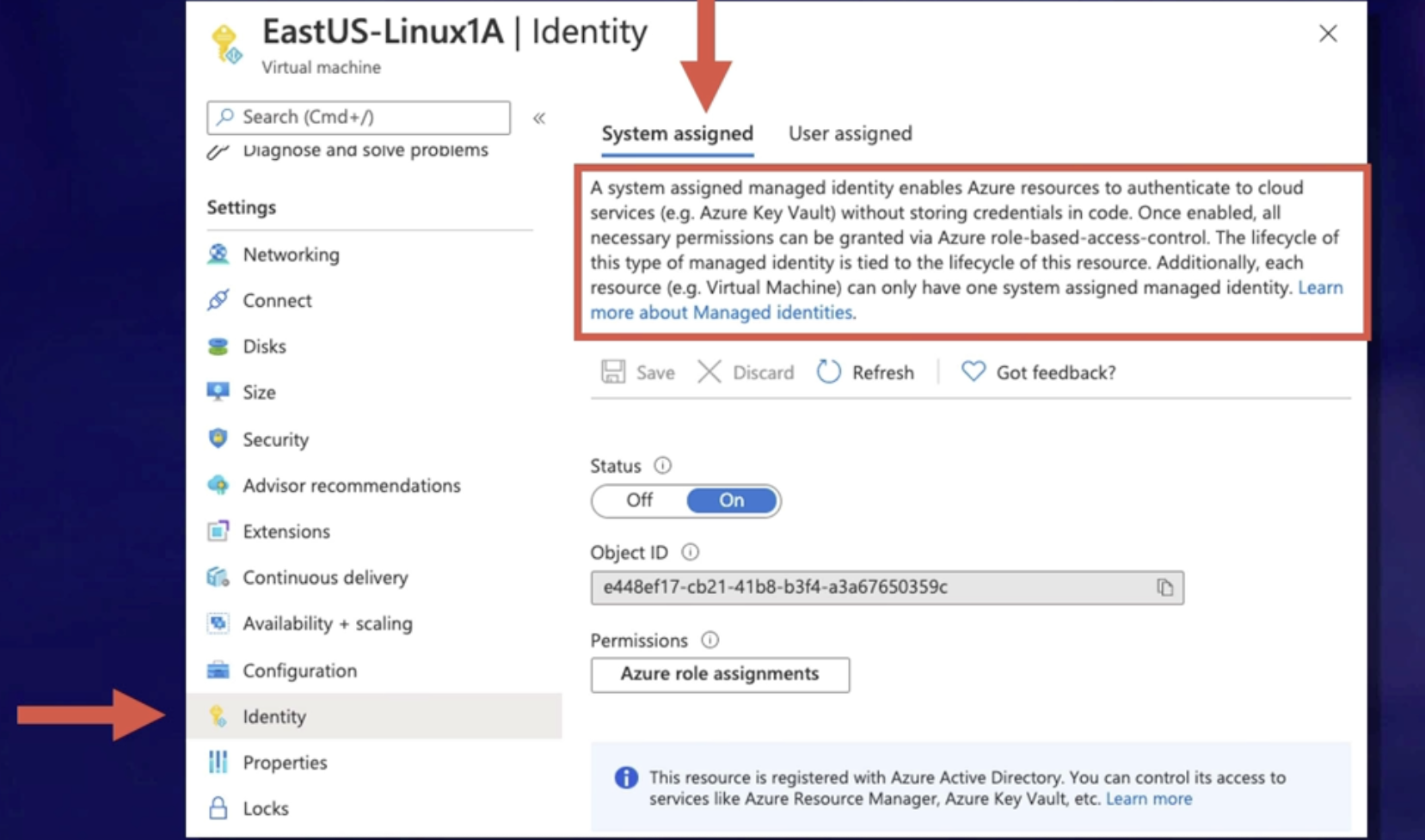

Working with managed identities 171

What is managed service identity (MSI) 171

Demo 172

Summary 172

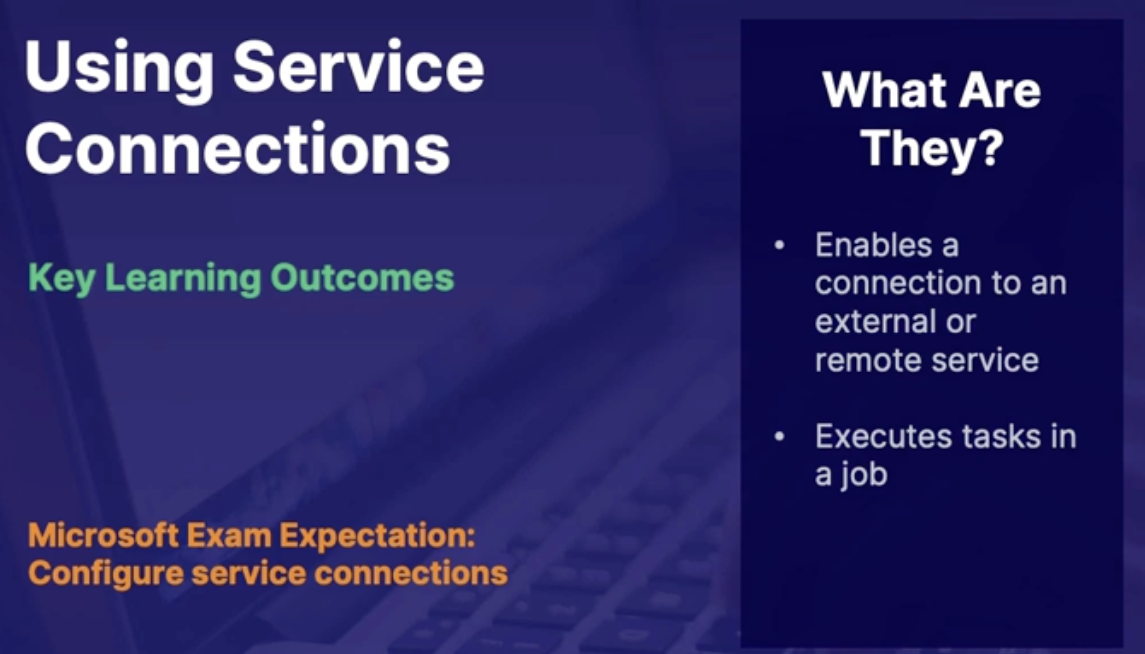

Using service connections 172

What is it 173

Demo 173

Summary 173

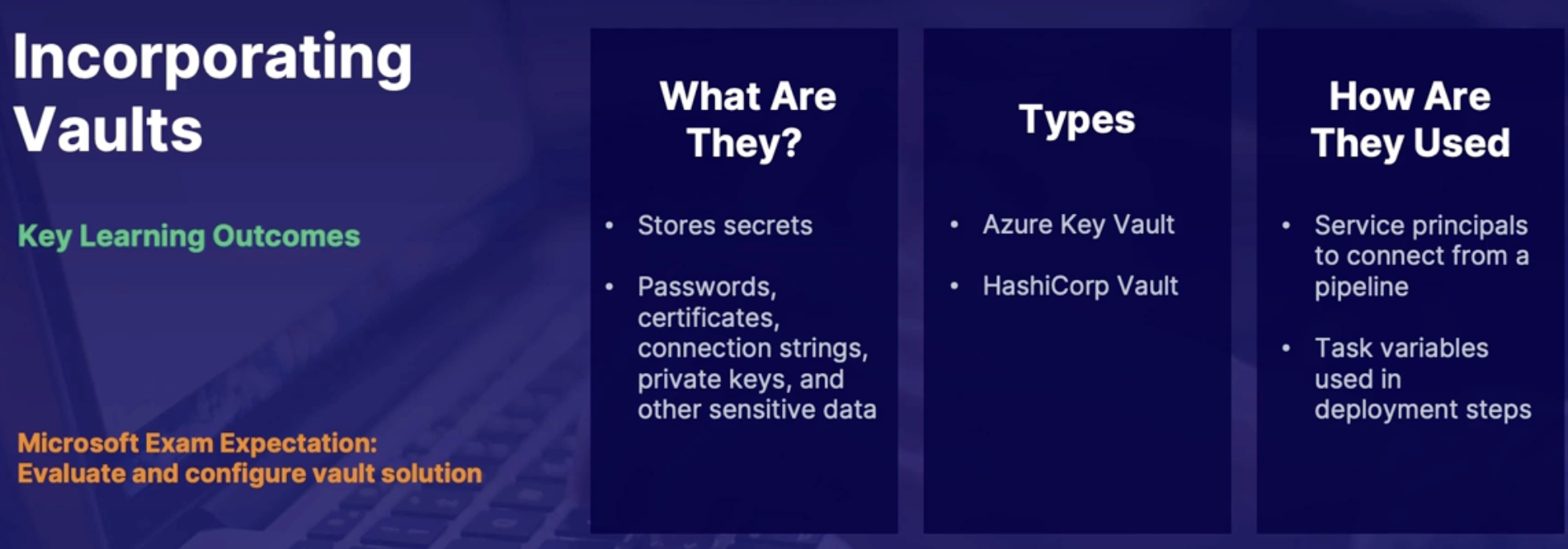

Incorporating vaults 173

What are key vaults 173

Azure key vaults 173

Azure key vault using a DevOps pipeline 173

Using HashiCorp Vault with Azure Key vault 173

Demo 173

Summary 173

Lab: Read a secret from an Azure key vault in Azure pipelines 174

Summary 174

Chap - 18: Developing Security and Compliance 177

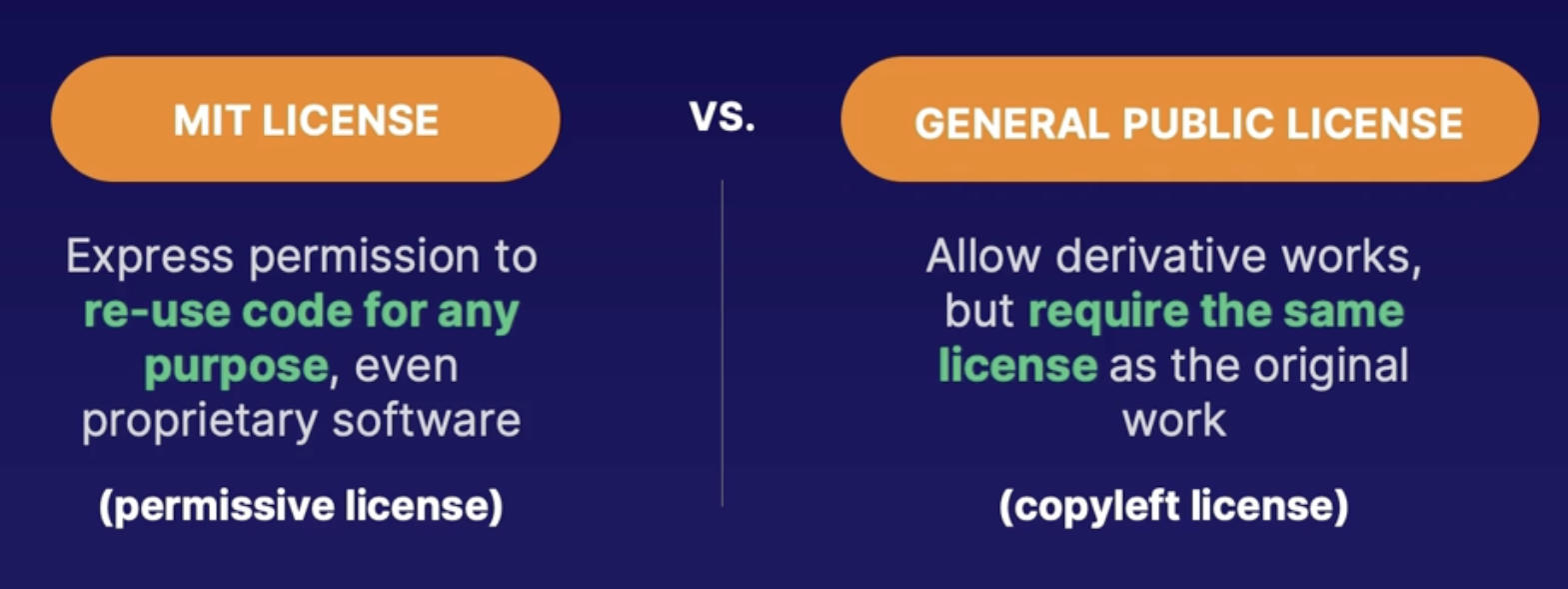

Understanding dependency scanning 177

Dependencies 177

Type of dependency scanning 177

Security dependency scanning 177

Compliance dependency scanning 177

Aspects of dependency scanning 177

Summary 177

Exploring container dependency scanning 178

Aspects of container scanning 179

Demo 179

Summary 179

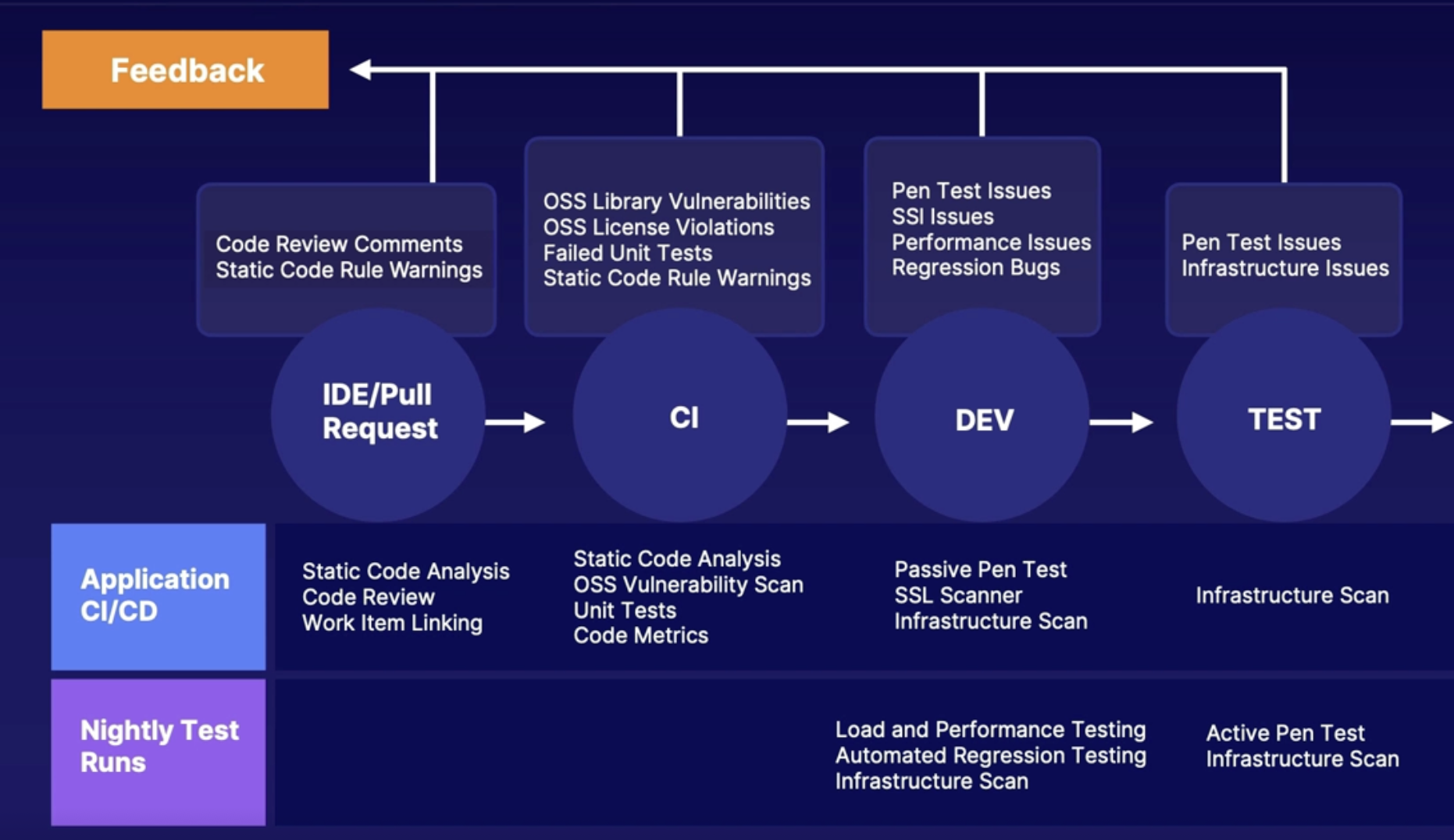

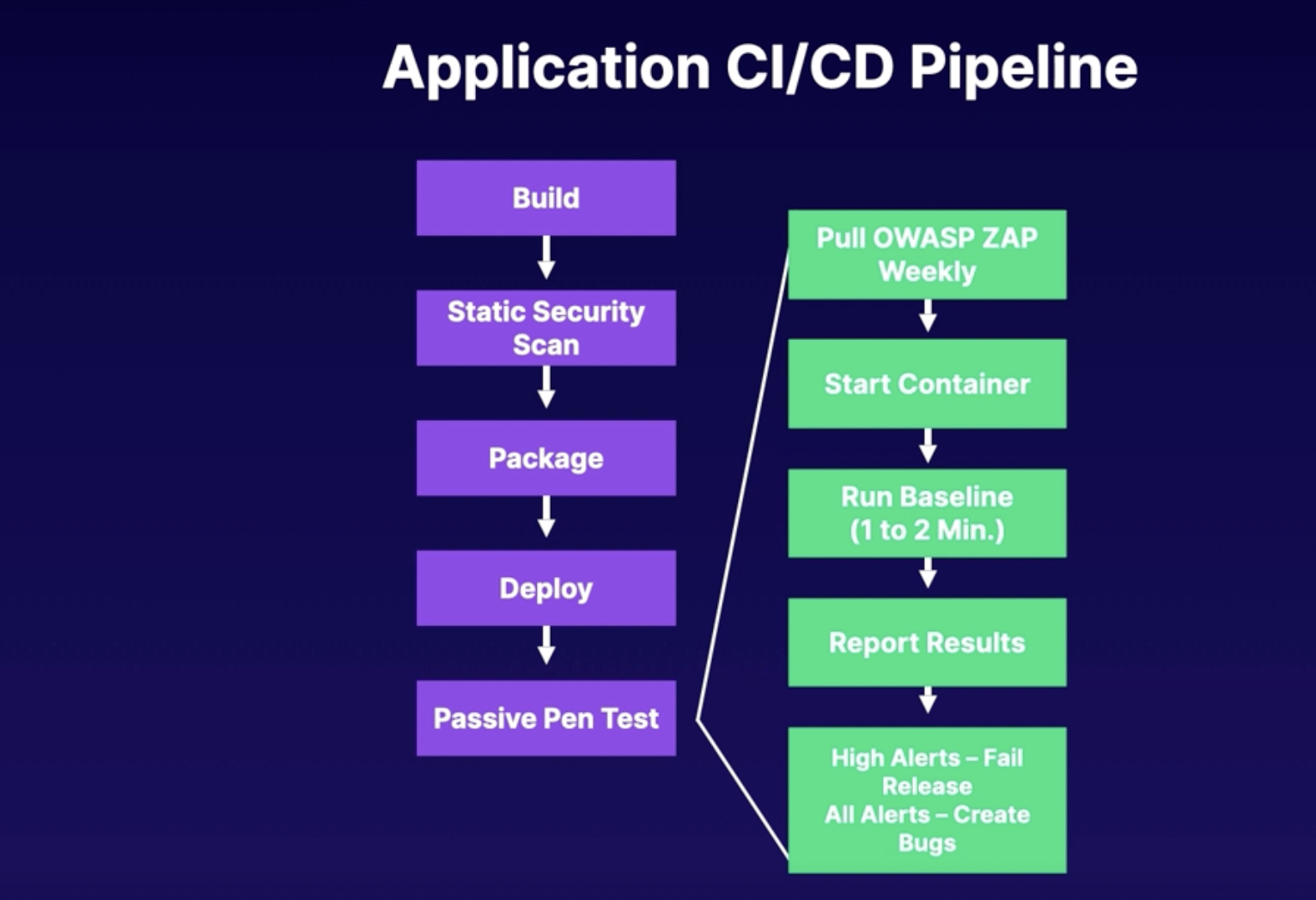

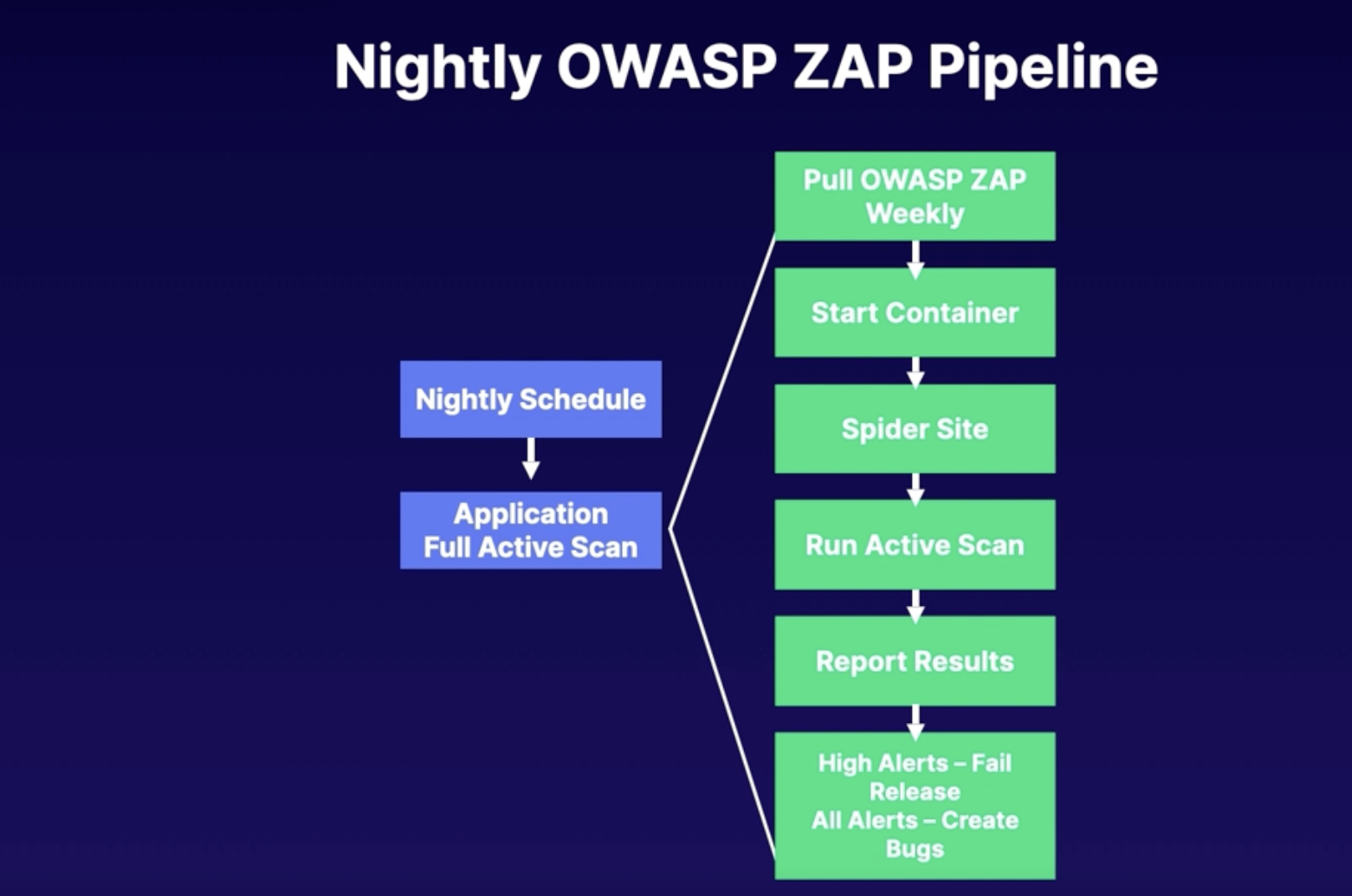

Incorporating security into your pipelines 179

Securing applications 179

Continuous security validation process 179

Secure application pipelines 180

Summary 180

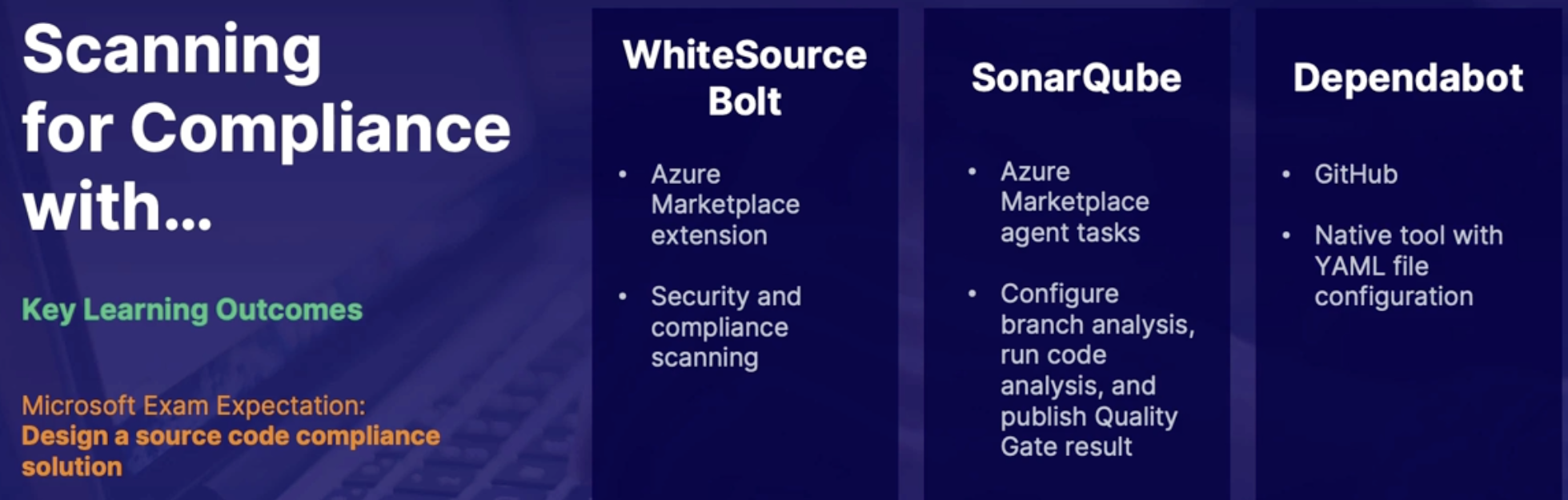

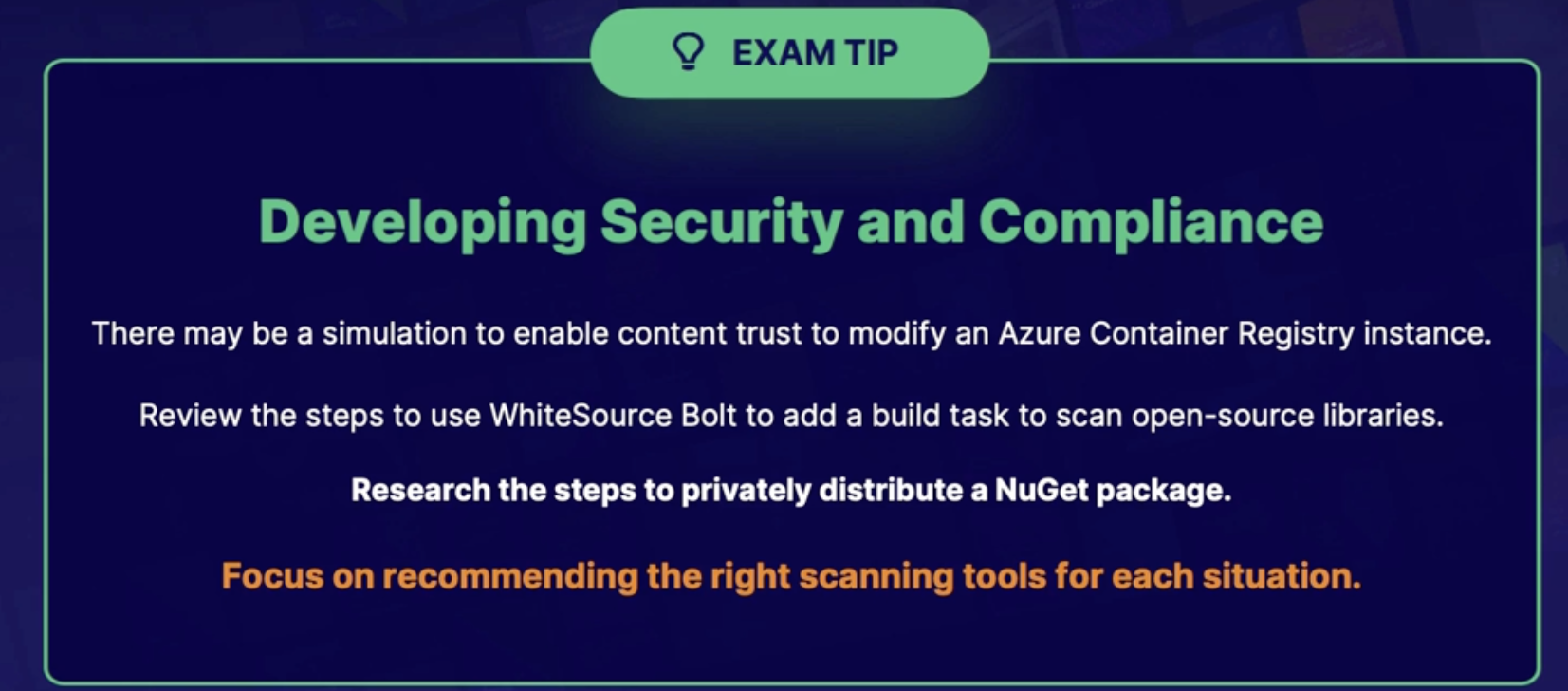

Scanning with compliance with WhiteSource Bolt, SonarQube, Dependabot 181

Summary 182

Chap - 19: Designing Governance Enforcement Mechanisms 184

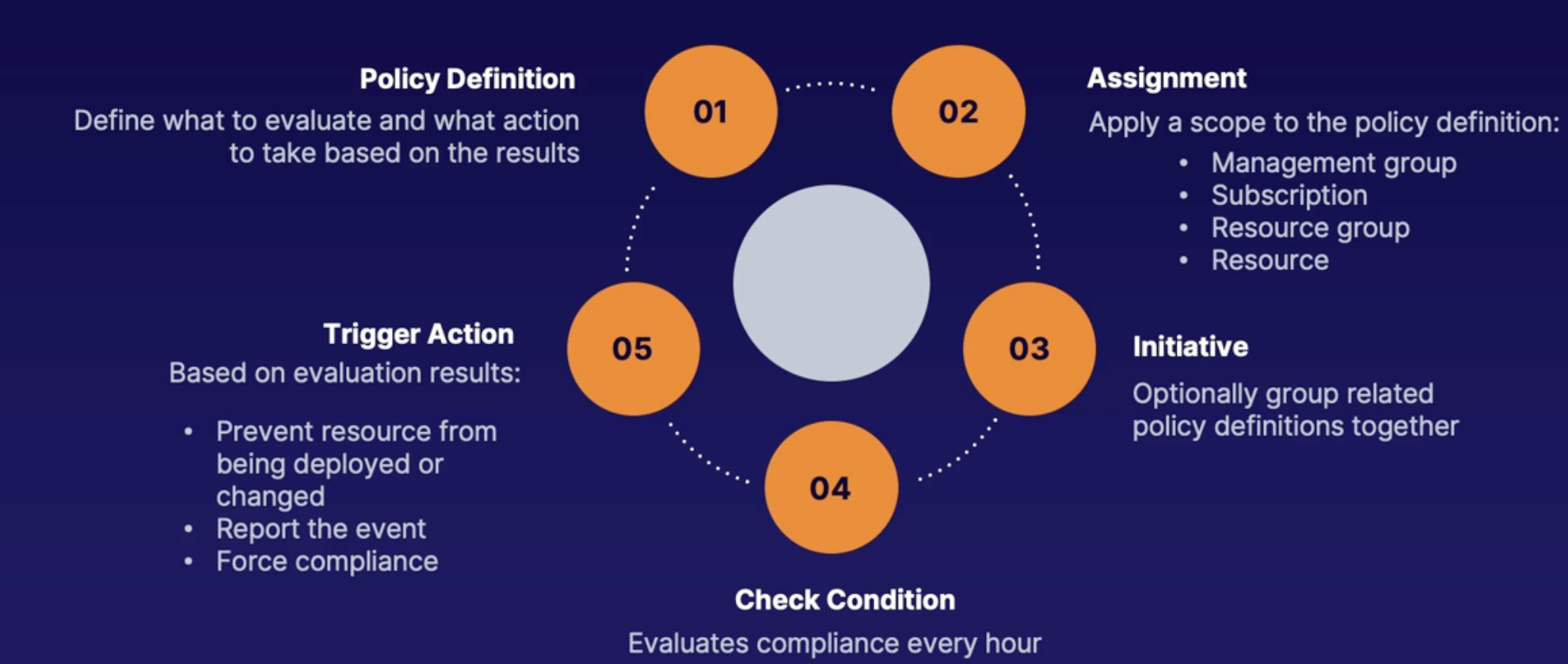

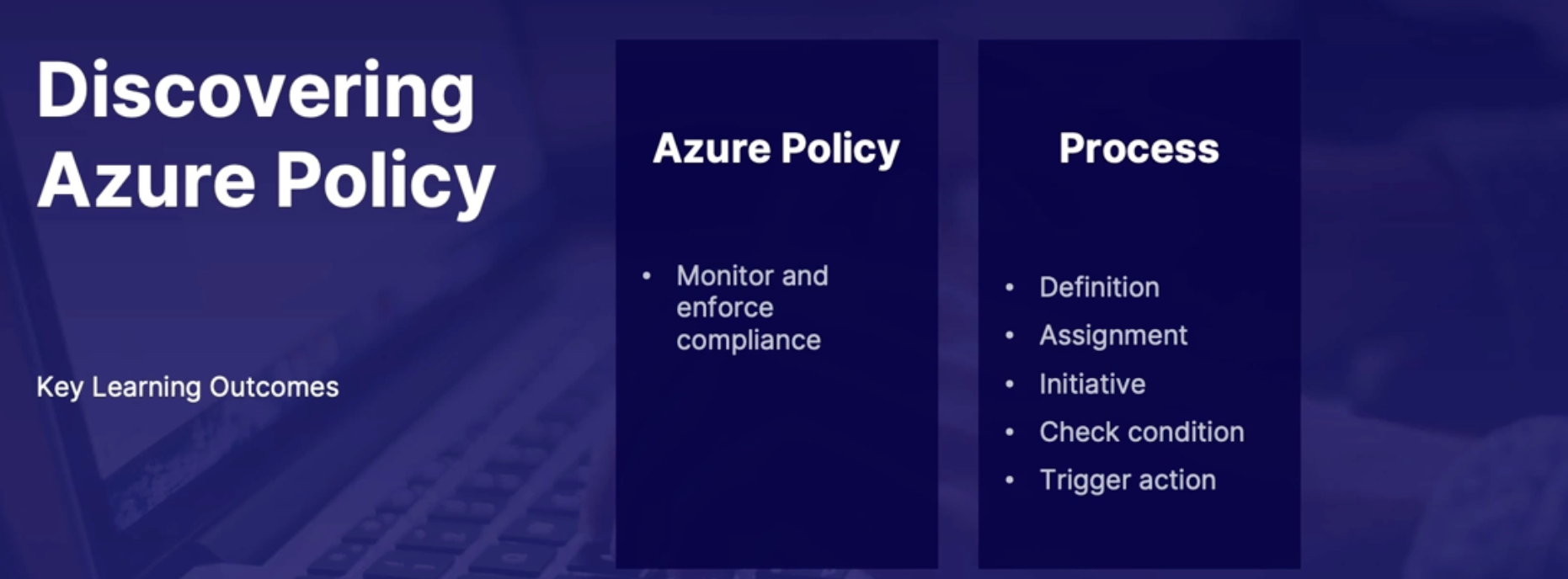

Discovering Azure policy 184

Scenario 184

Azure policy 184

Azure policy Access 184

Demo 184

1. Explore Azure policy 184

2. Explore Azure policy integration with Azure DevOps 185

Summary 185

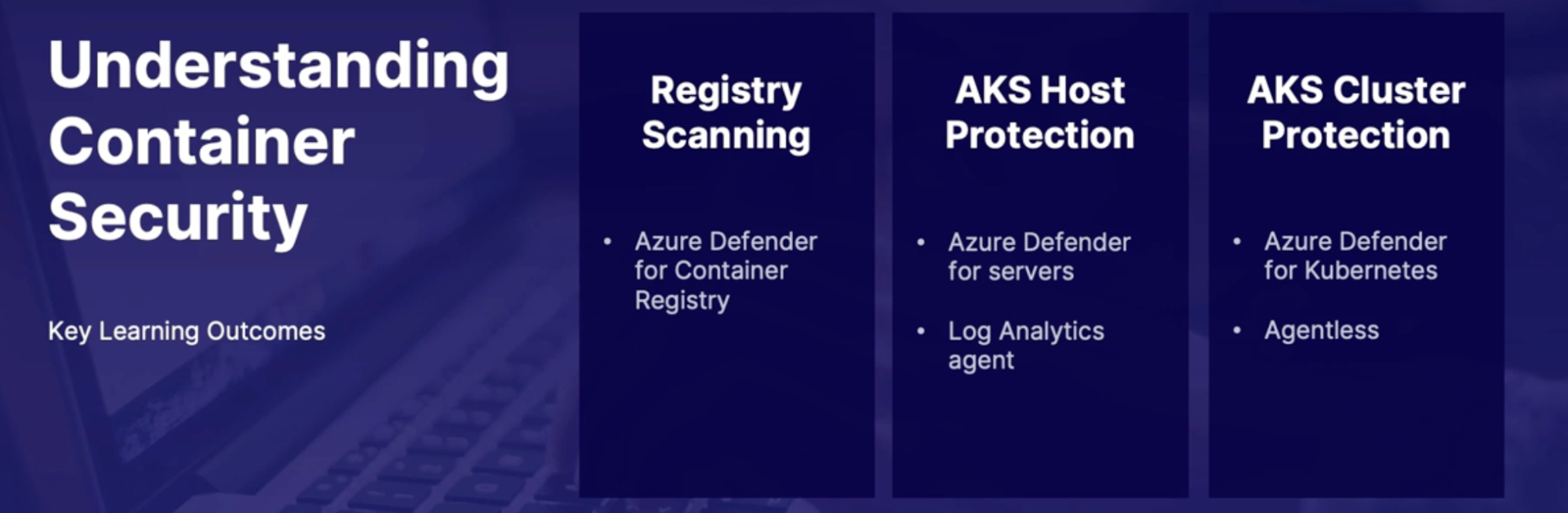

Understanding container security 185

Azure defender for container registry 185

AKS protection 185

Summary 186

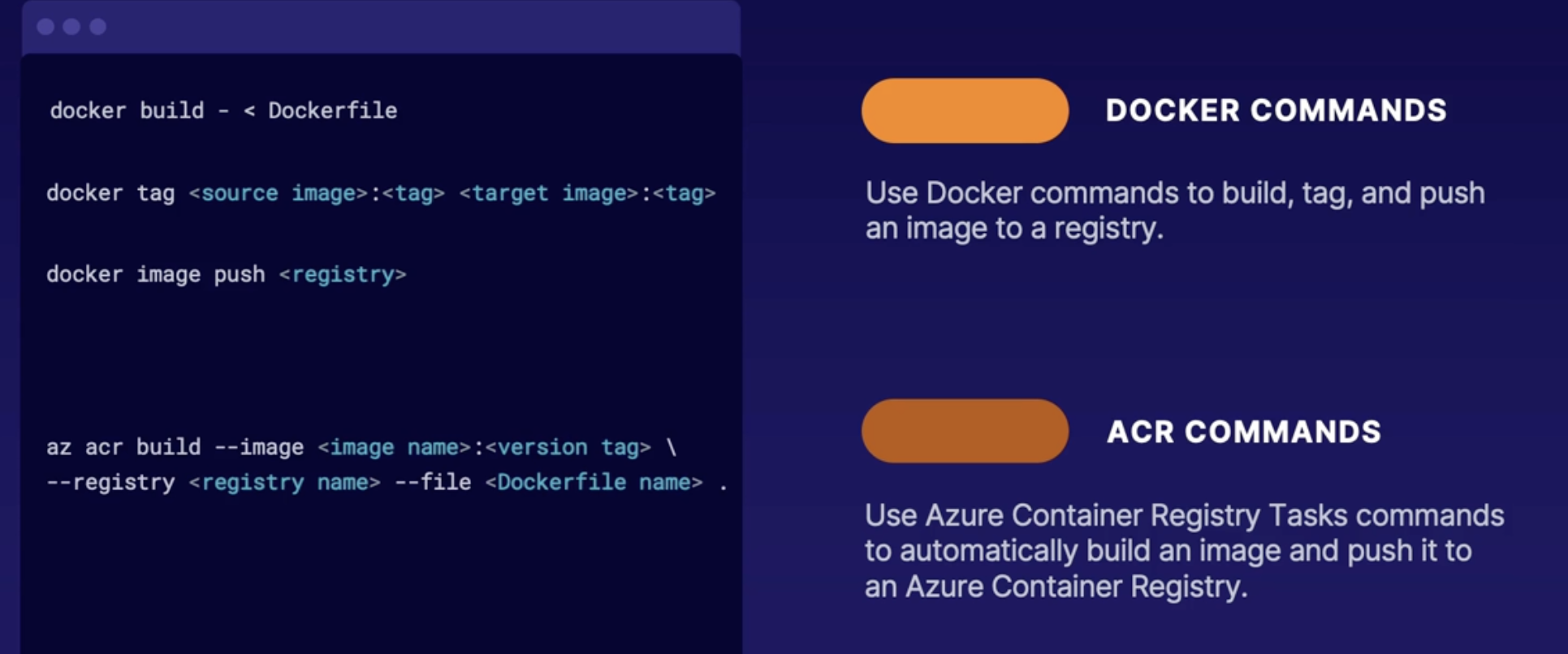

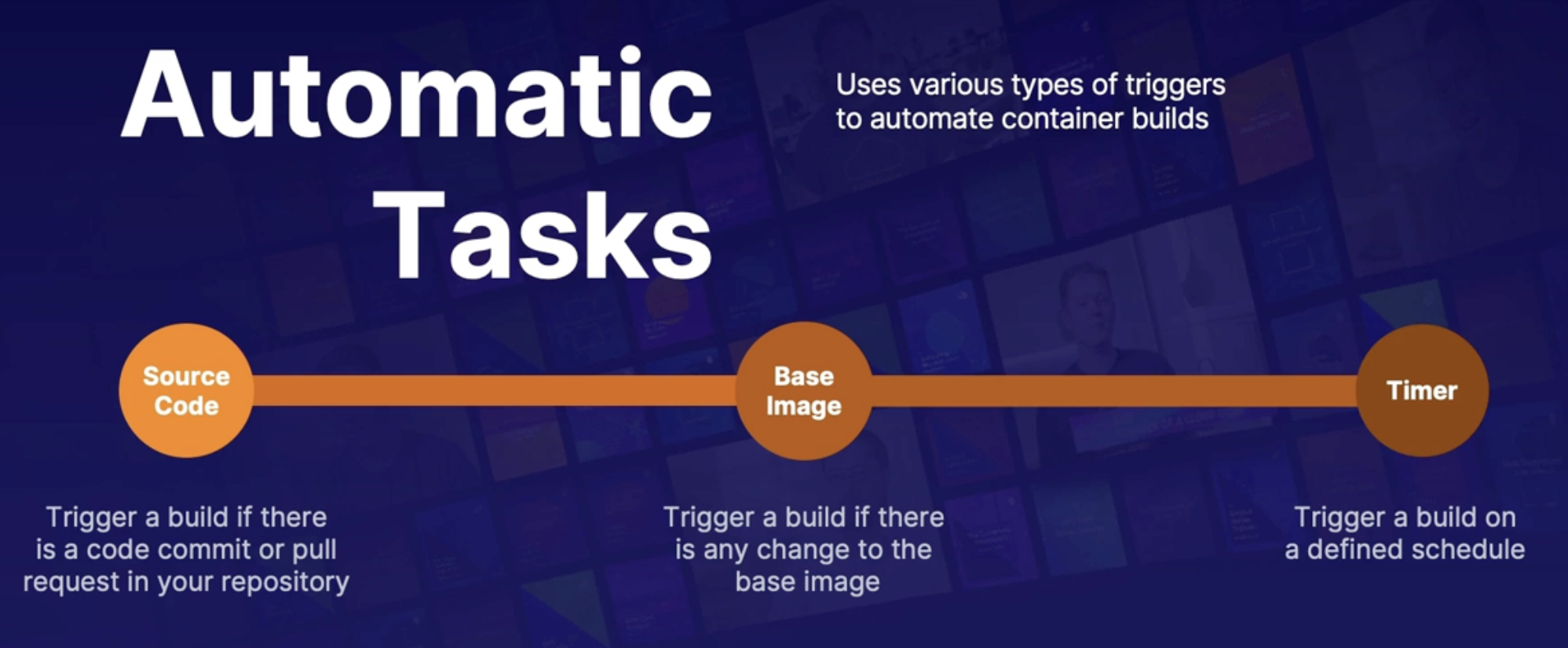

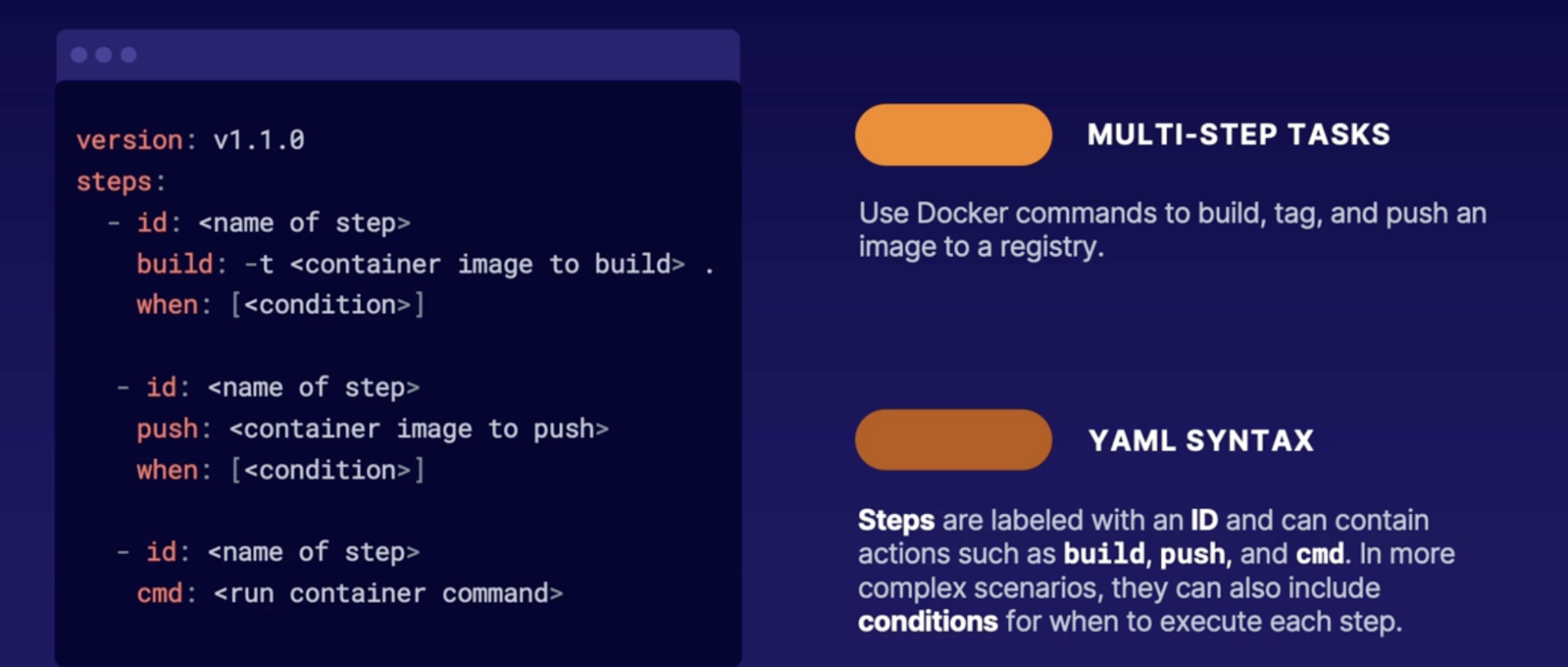

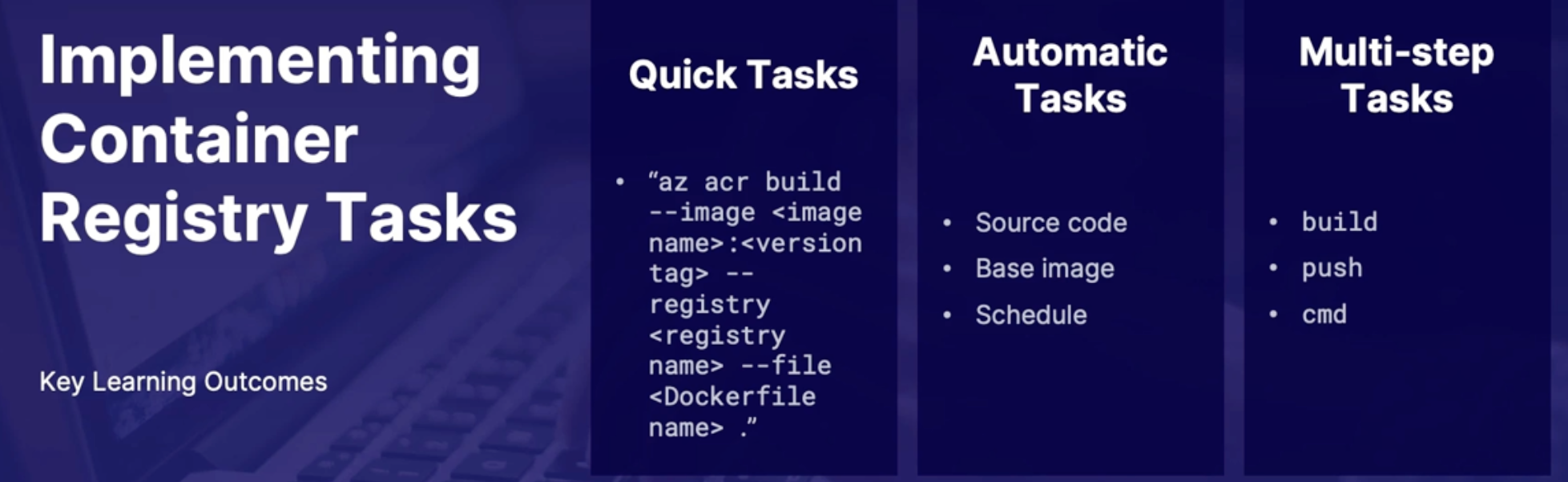

Implementing container registry tasks 186

Azure container registry 186

Tasks (Quick, Automatic, Multi-step) 187

Summary 187

Responding to security incidents 188

Emergency access accounts 188

Best practices 189

What to do after the accounts are configured 189

Demo emergency access account monitoring 189

Summary 189

Summary 189

lAB: Build and Run a Container Using Azure ACR Tasks 191

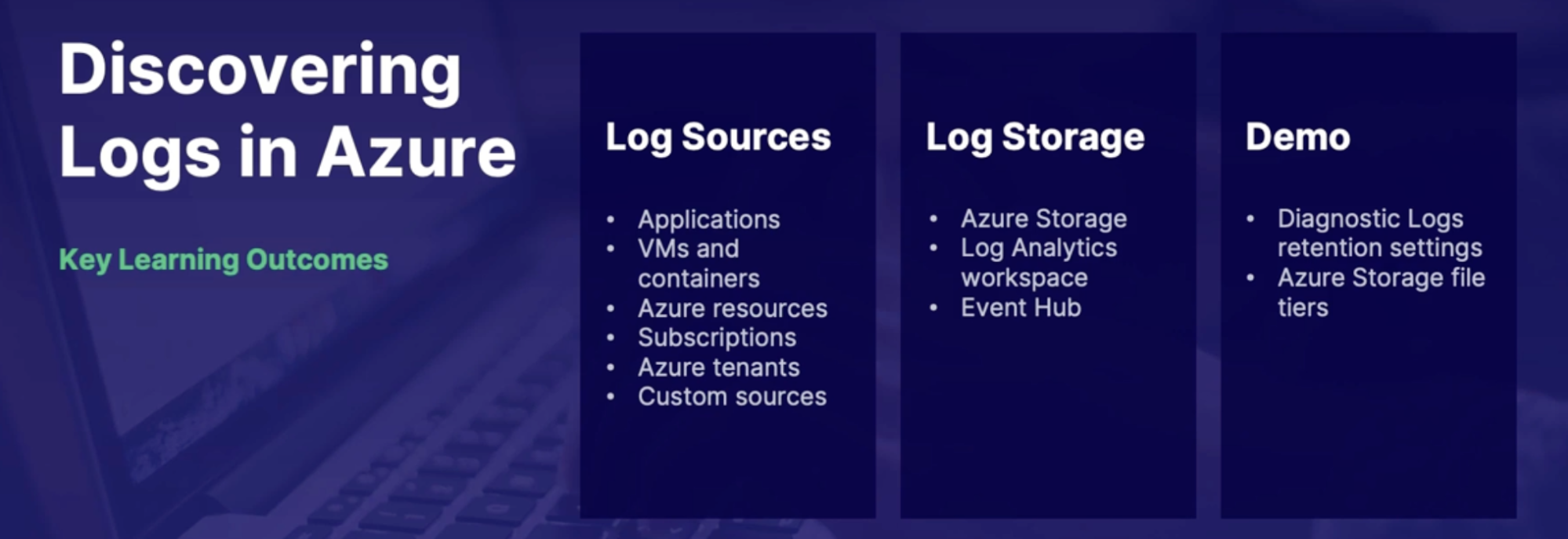

Chap - 20: Designing and Implementing Logging 191

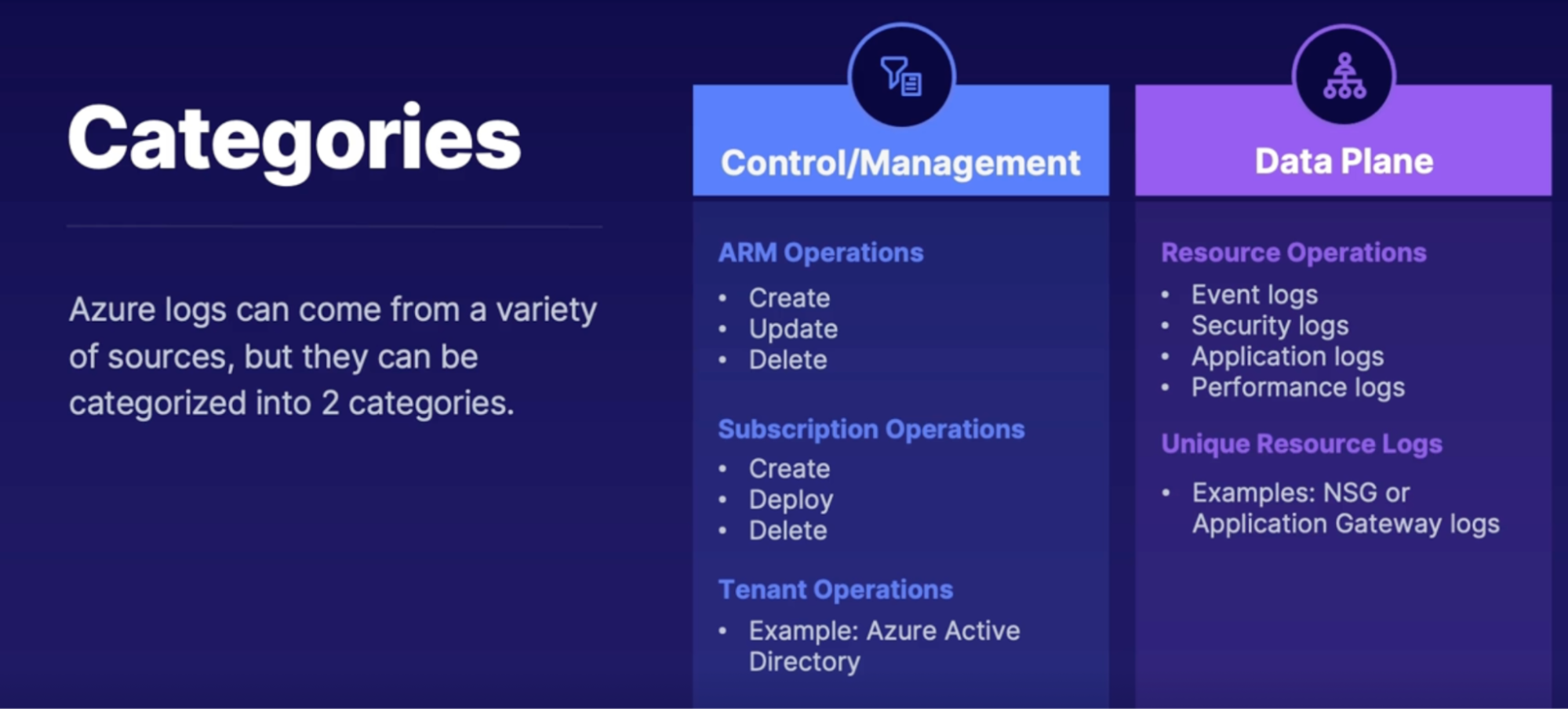

Discovering logs in Azure 191

What are logs 191

Sources of logs in Azure 191

Log categories 192

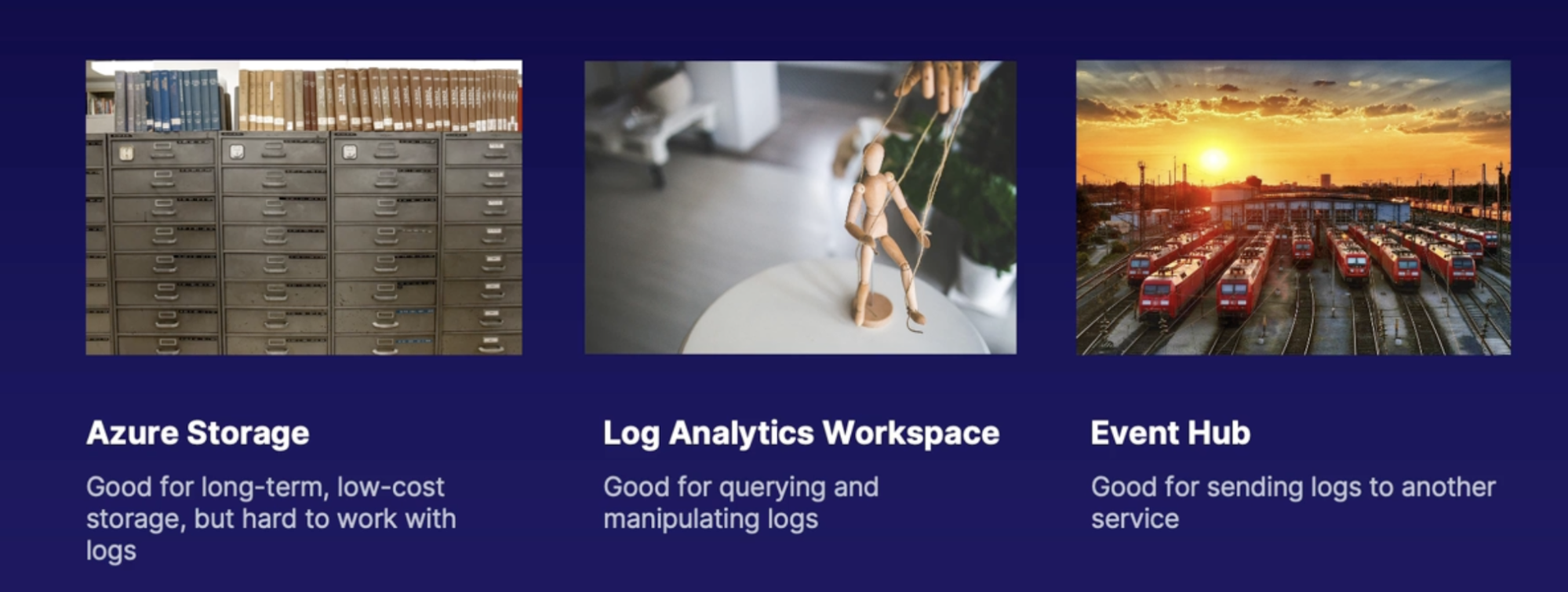

Diagnostic log storage locations 192

Demo exploring logs and configuring diagnostics 192

Summary 192

Introducing Azure monitor logs 193

Azure monitor logs 193

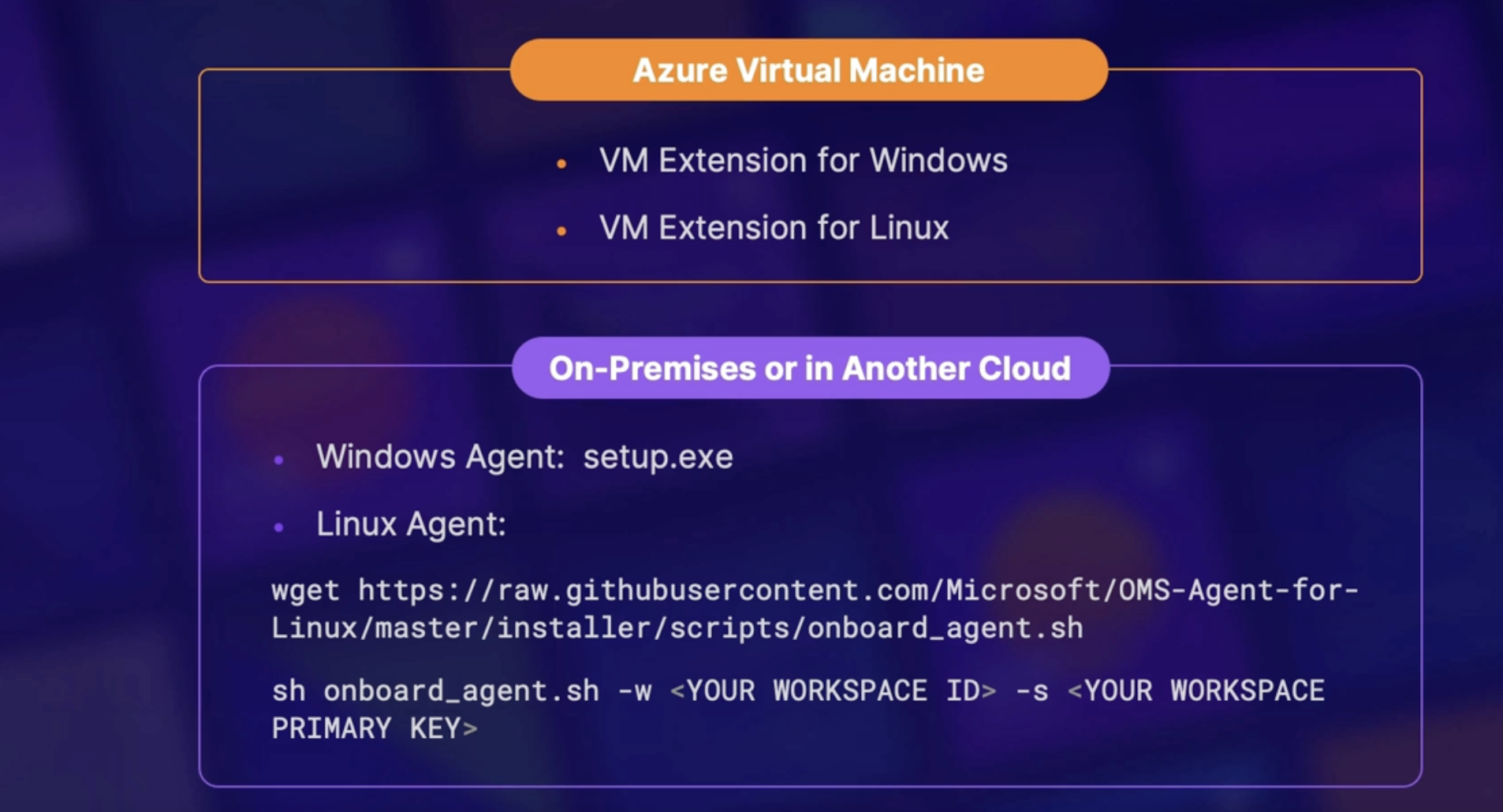

Log analytics agent 193

Demo: 193

1. Build and log analytics workspace 193

2. Configure storage retention 194

3. Assemble log analytics queries 194

Summary 194

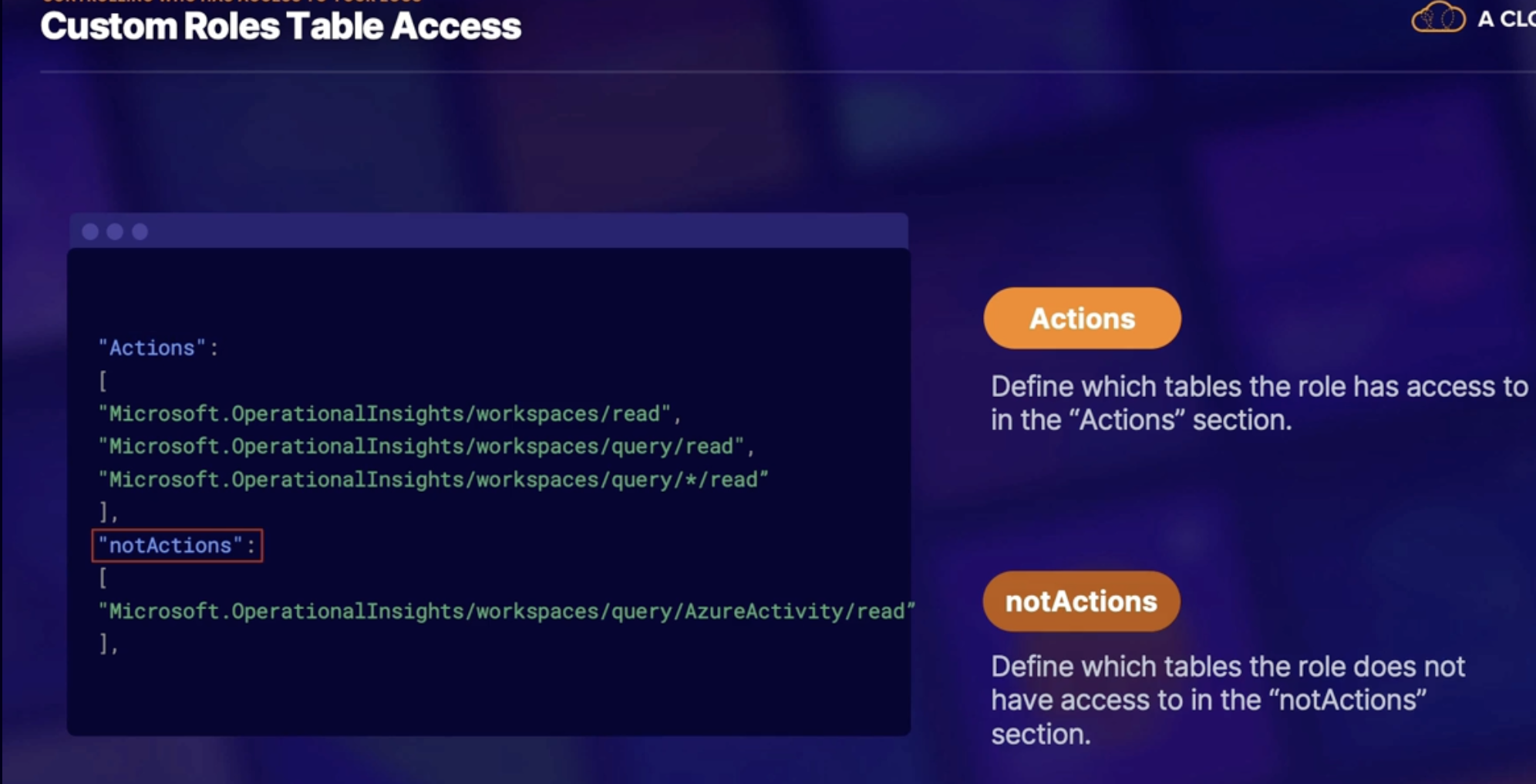

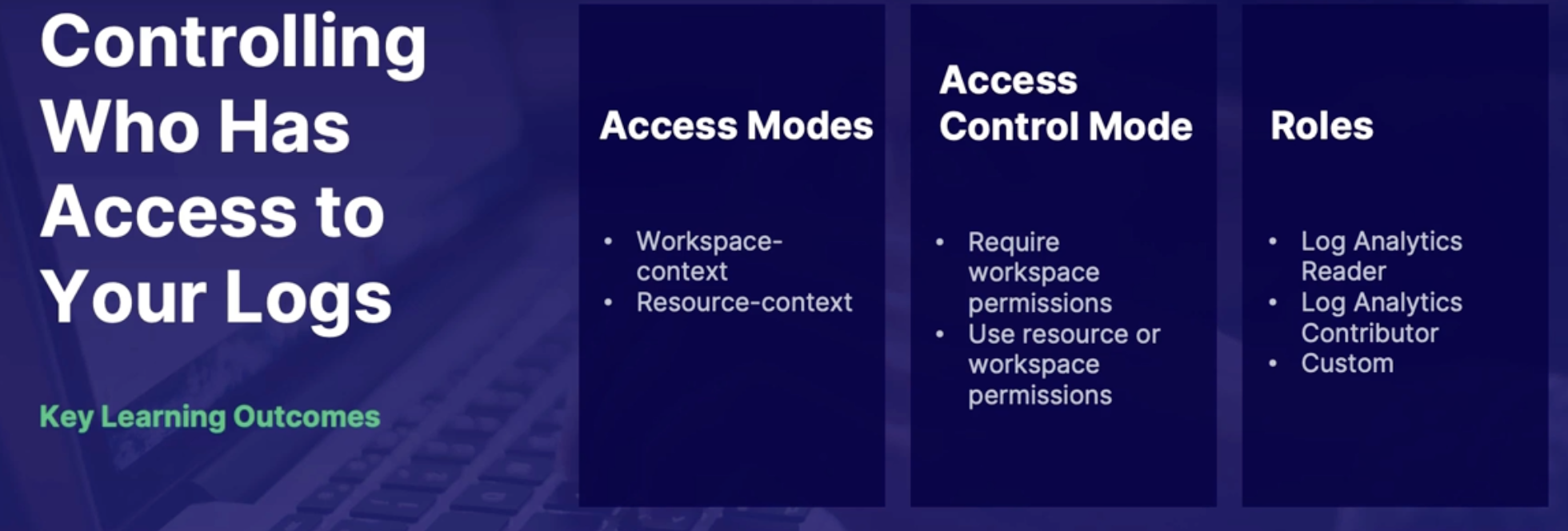

Controlling who has access to your logs 195

Scenario 195

How many workspaces to deploy 195

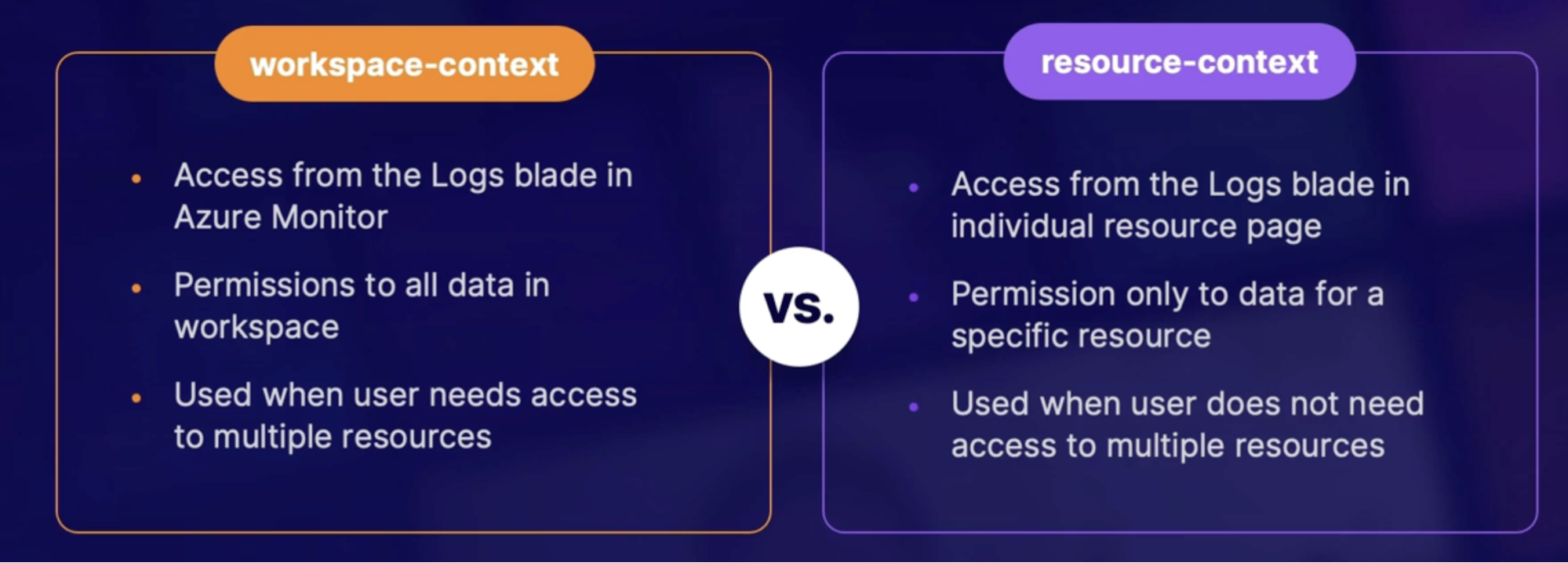

Access Modes 195

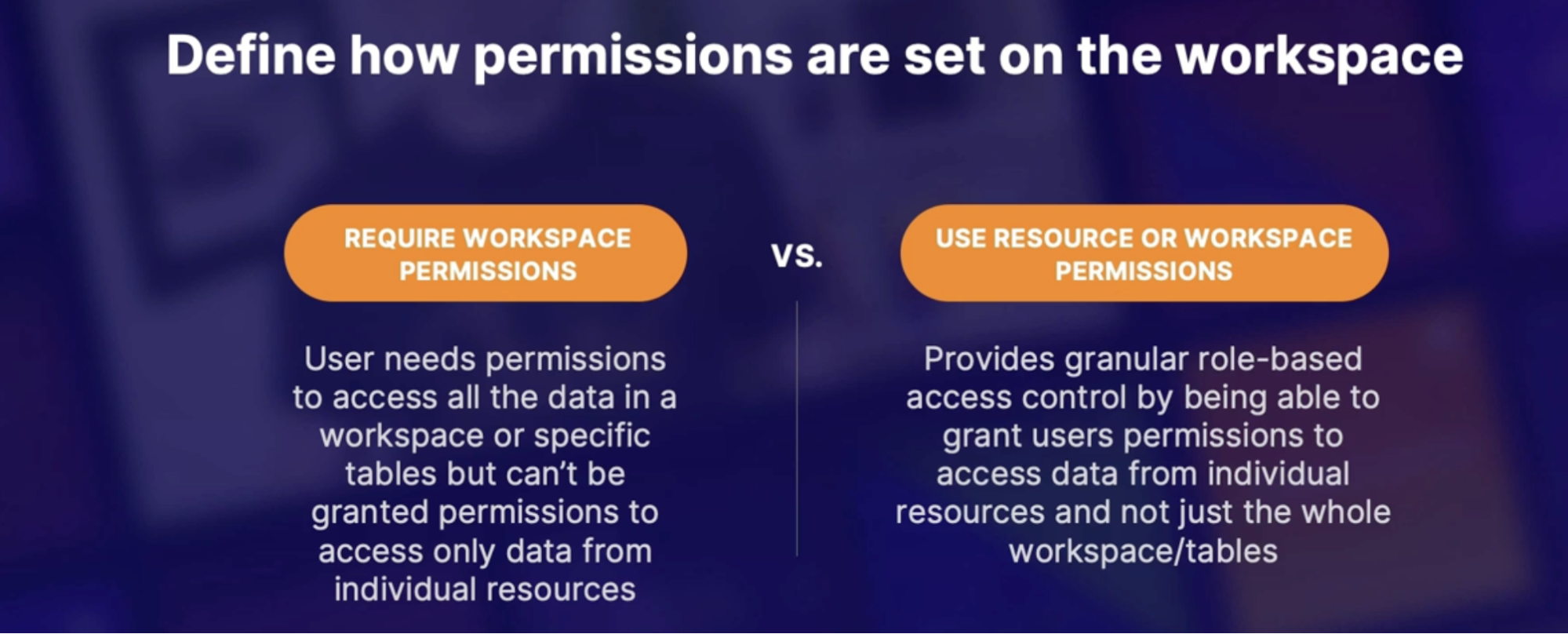

Access control modes 195

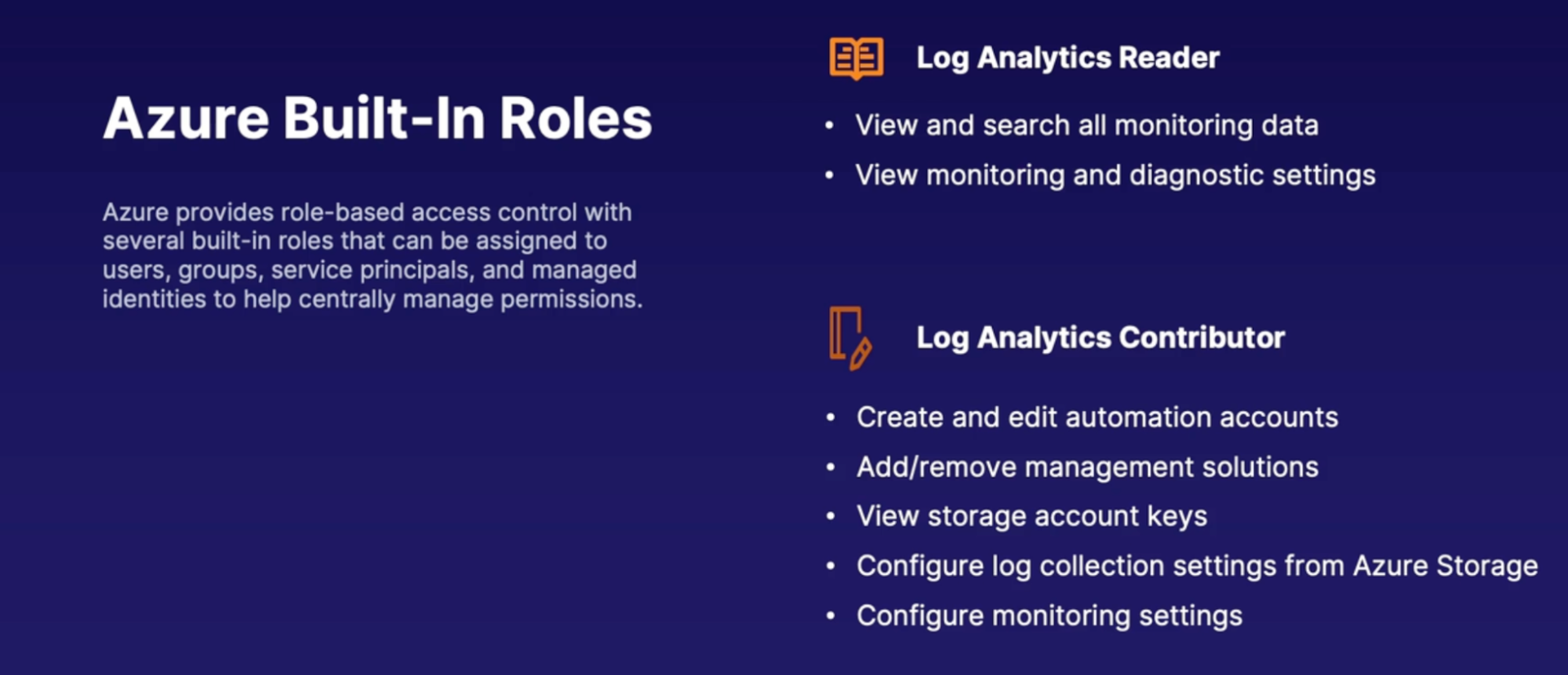

Built-In roles 195

Custom roles table access 195

Demo: configuring access control 197

Summary 197

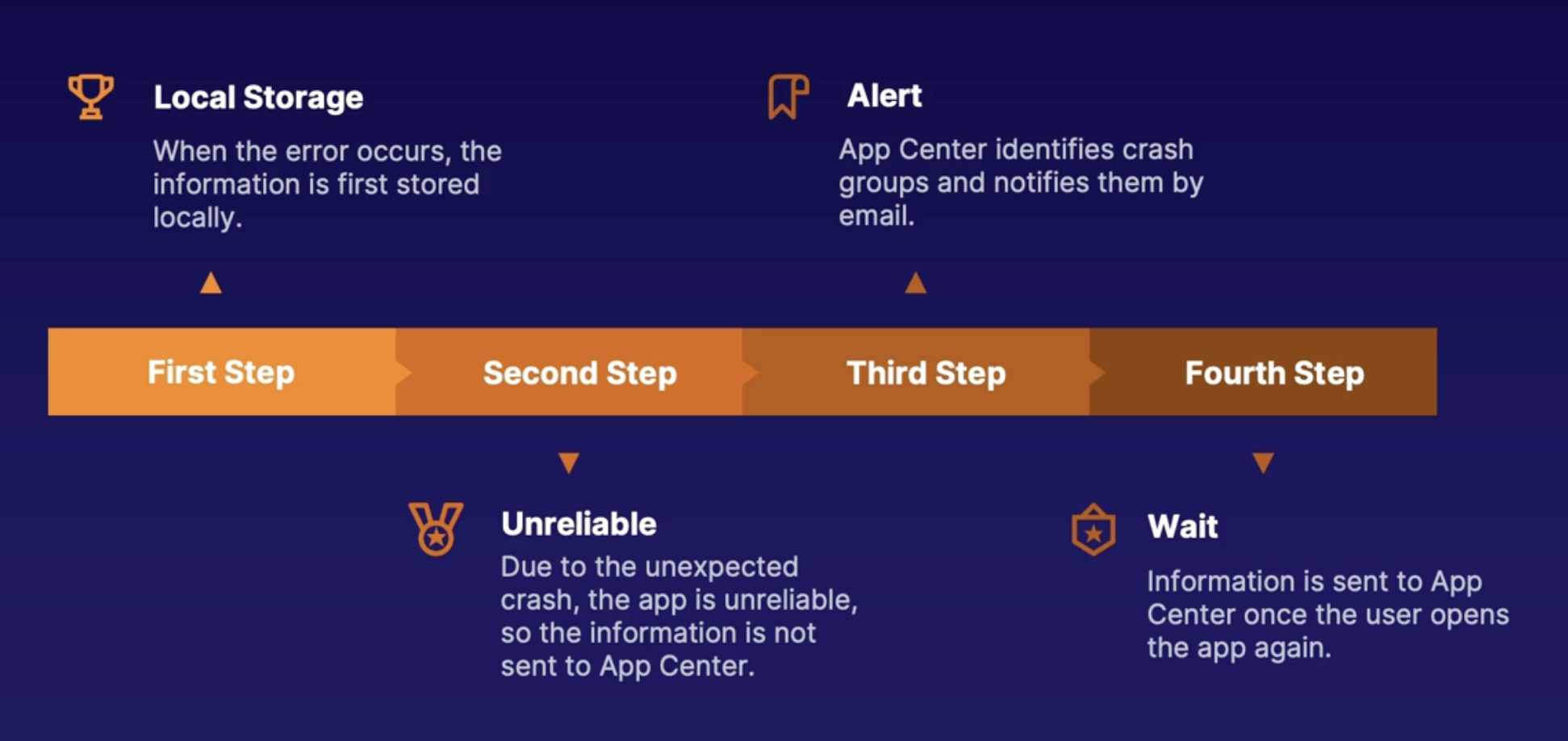

Crash analytics 197

Crash analytics 197

Visual studio App center diagnostics 198

What happens when a crash occurs? 198

Google firebase crashlytics 198

Demo 198

1. explore visual studio App Center crashes 198

2. explore Google firebase crashlytics 199

Summary 199

Summary 199

Chap - 21: Designing and Implementing Telemetry 200

Introducing distributed tracing 200

Scenario 200

Monolithic application/NTier architecture 200

Microservices/Service-based architecture 201

What do we monitor 201

Distributed Tracing 202

Demo: Application Insights tracing 202

Summary 202

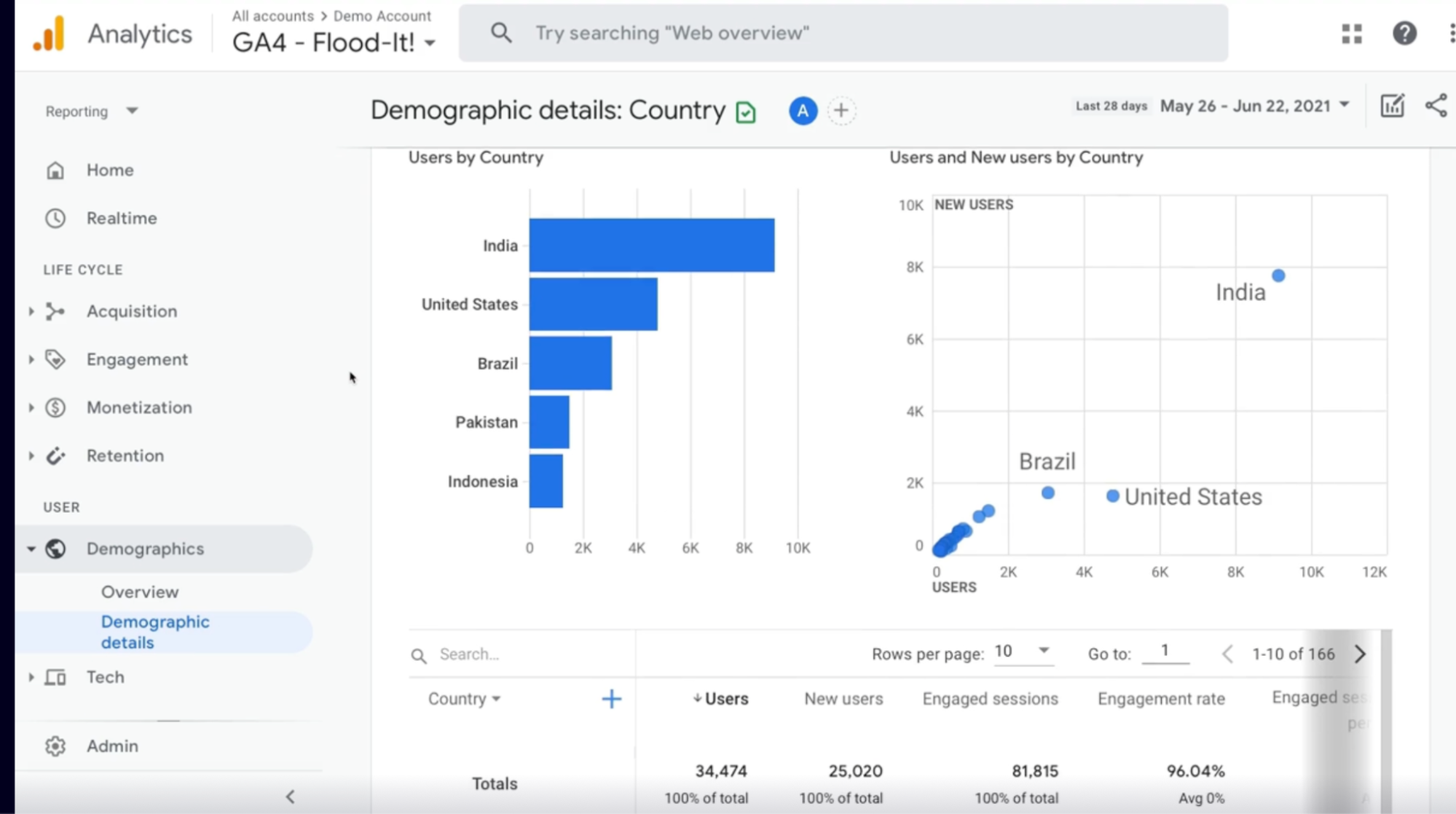

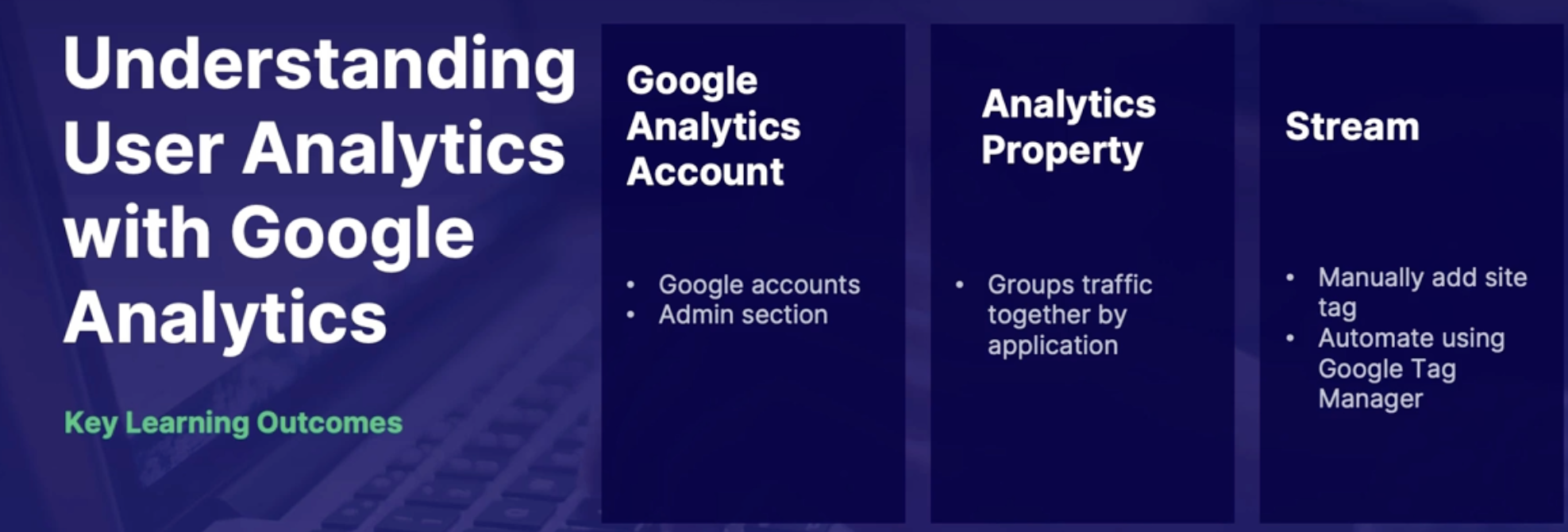

Understanding User Analytics with Application Insight and App Center 202

User analytics 202

Application Insights user analytics 203

Visual studio App Center analytics 203

Export App Center data to Azure 204

Demo 204

1. Explore App Center analytics 204

2. Export data to Azure 204

3. Explore Application Insights User Analytics 204

Summary 204

Understanding User Analytics with TestFlight in Google Analytics. 204

Google Analytics 204

How to start collecting Analytics 205

Demo: Explore Google Analytics 205

Summary 205

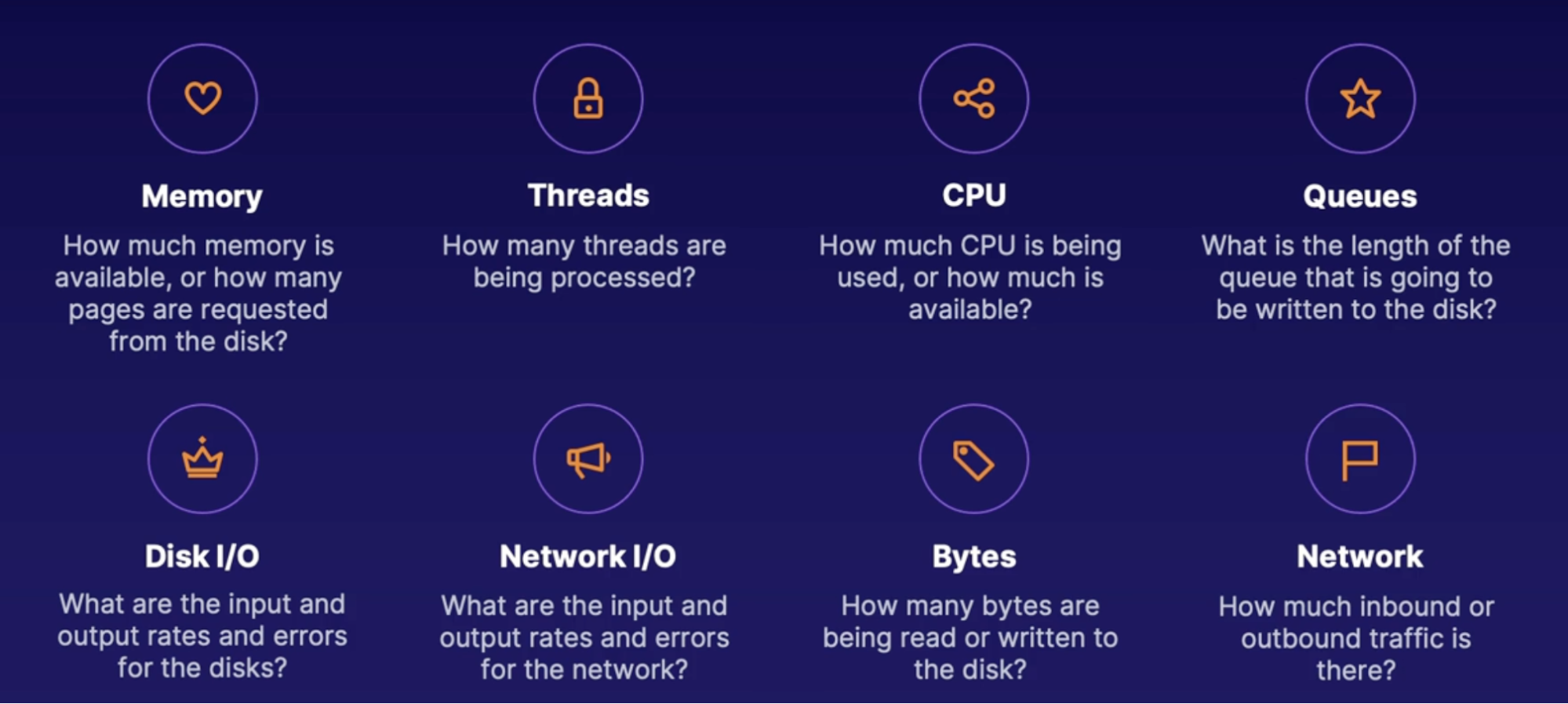

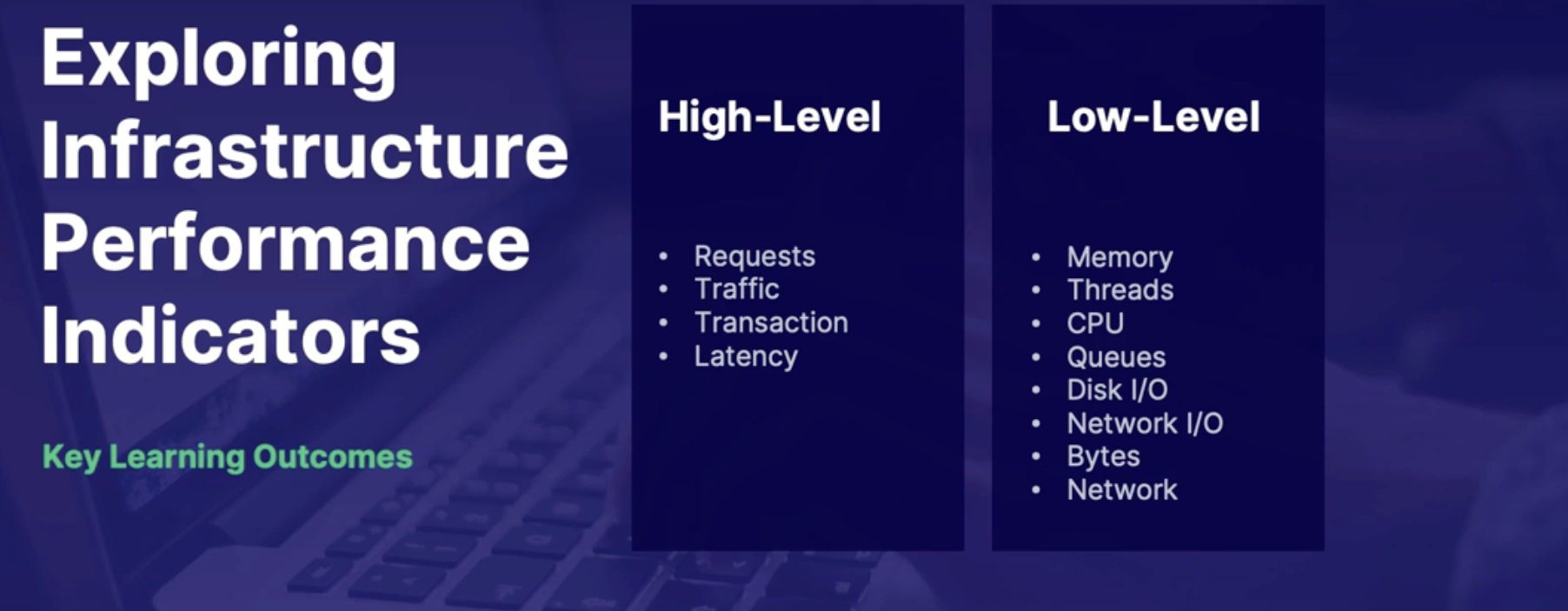

Exploring infrastructure performance indicators 206

Performance 206

High-Level performance indicators 206

Example data correlations 207

Low-level performance indicators 207

How to collect helpful data 207

Summary 207

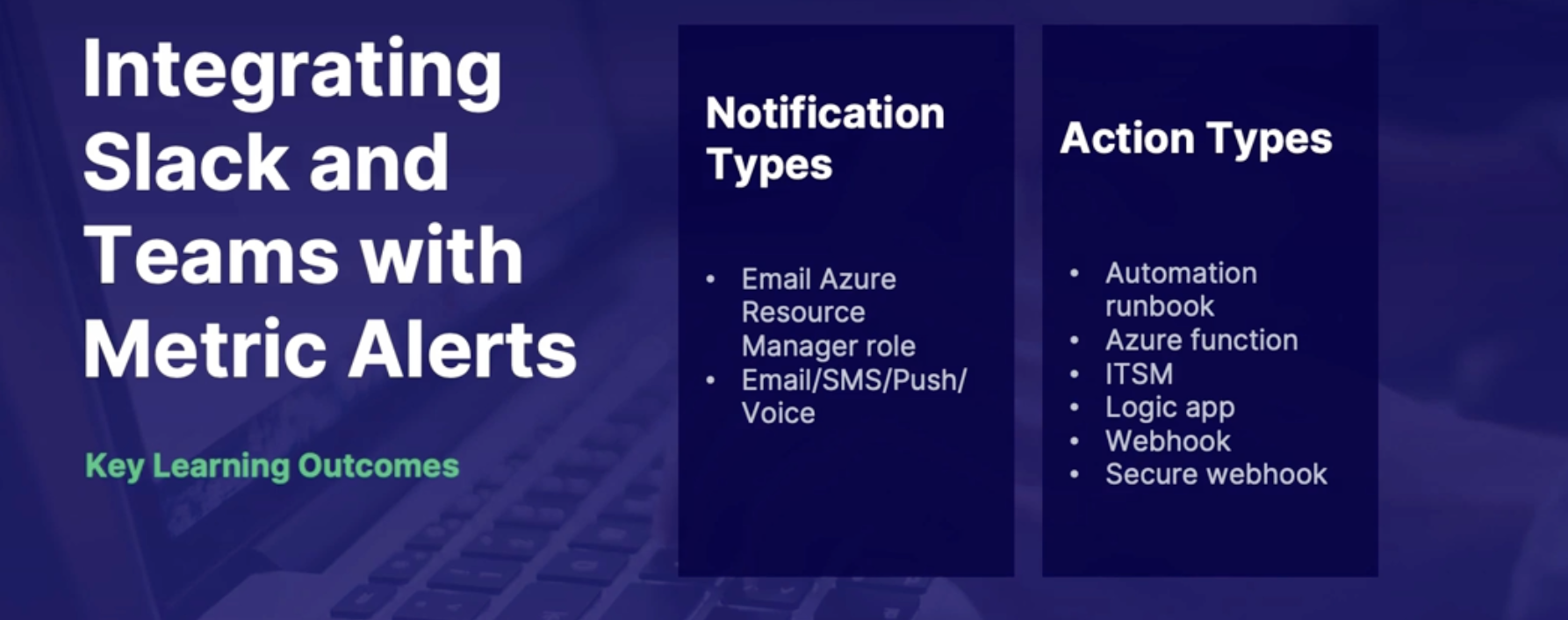

Integrating Slack and teams with metric alerts 208

Action groups 208

Notification types 208

Action types 208

Demo: trigger logic apps to send notifications to Teams and Slack 208

Summary 209

Summary 209

LAB: Subscribe to Azure Pipelines Notifications from Microsoft Teams 209

Chap - 22: Integrating Logging and Monitoring Solutions 211

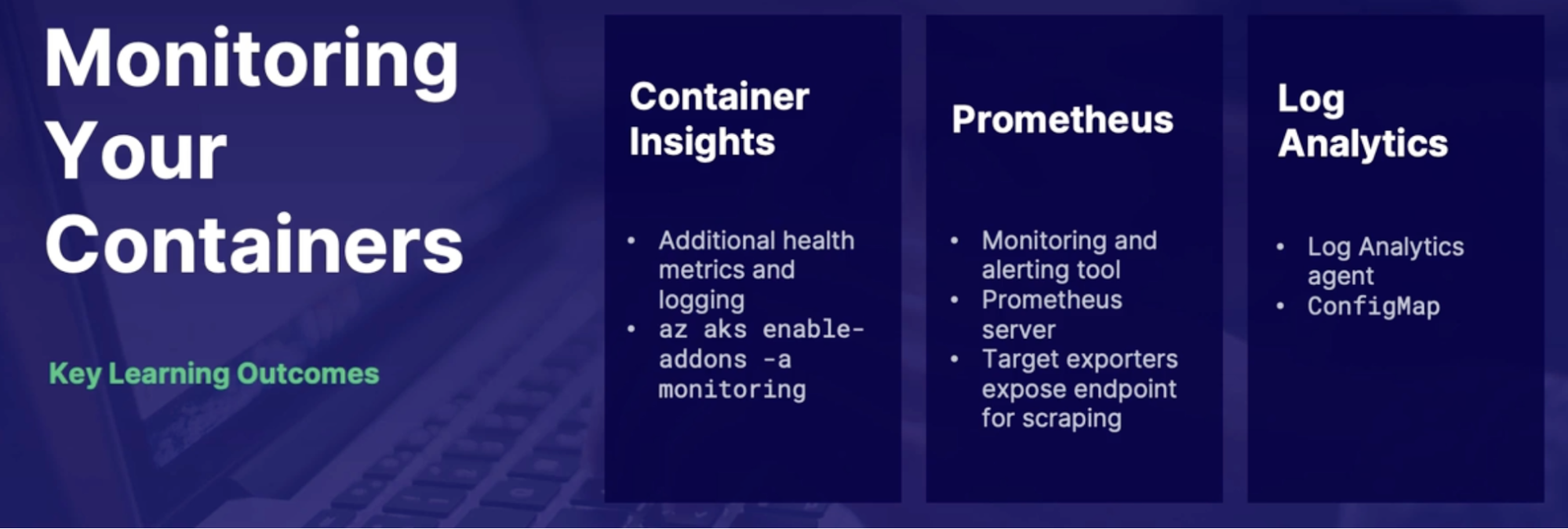

Monitoring containers 211

Azure monitor container insight 211

Azure Kubernetes service(AKS) 211

AKS Container insight configuration options 211

Prometheus 212

How do Prometheus work 212

Prometheus and Azure monitor integration. 212

Demo: 212

1. Enable container insights using the portal 212

2. Explore container health metrics 213

Summary 213

Integrating monitoring with Dynatrace and New Relic 213

Dynatrace 213

Dynatrace Integration 213

New Relic 213

Other third-party monitoring alternatives 214

Demo 214

1. Azure integration with Dynatrace 214

2. Azure integration with New Relic 214

Summary 214

Monitoring feedback loop 214

Feedback loops 214

Scenario 214

Demo: implement feedback loop using a logic app 214

Summary 215

Summary 215

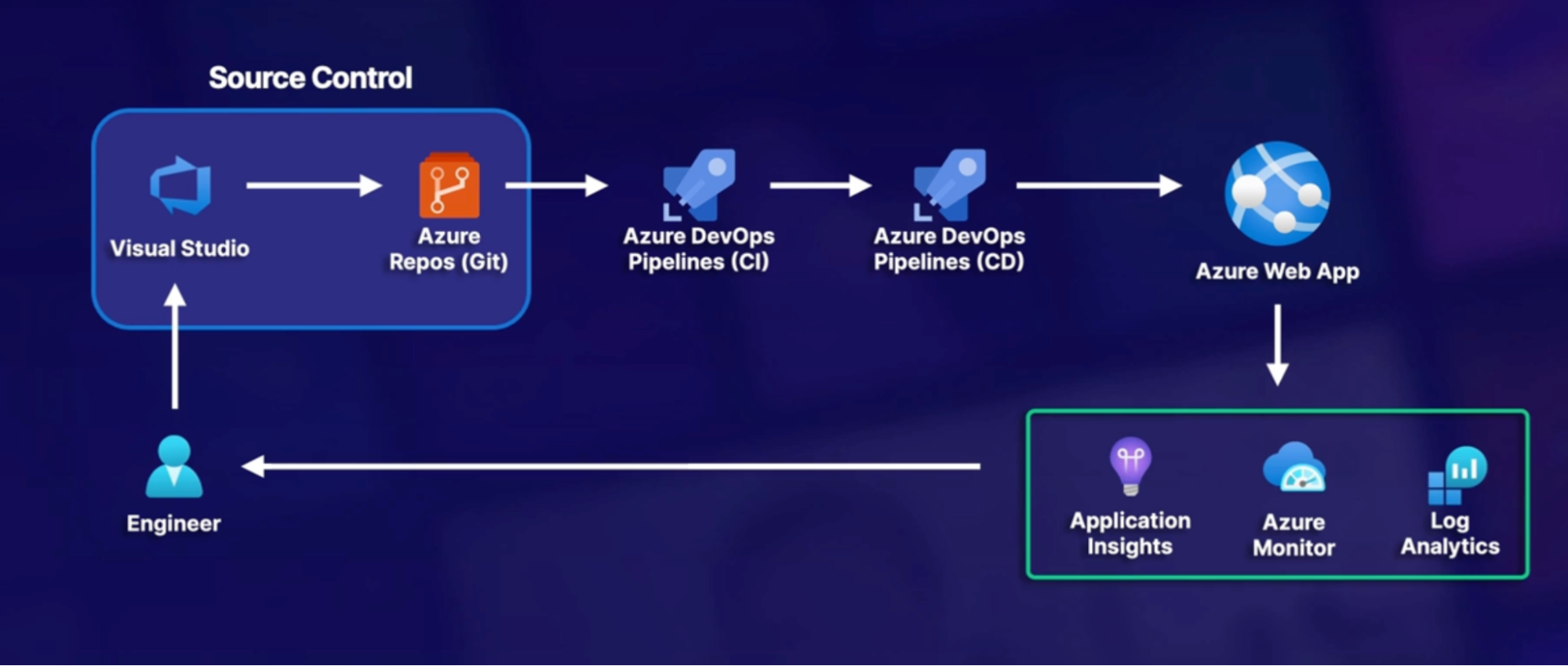

AZ - 400 DevOps Engineer

The broad range of topic

- SRE

- DevOps security and compliance

- Source control

- Azure Pipeline

- CI/CD on Azure DevOps

- Communication and Collaboration

- Monitoring and Logging

- Alerting policies

Scenario

- A company wants to migrate its workload from on-prem to the cloud - eCommerce website.

- Teams are siloed (system database, networking, etc.)

- Developer

- Operations

- Test/Qa

- Security

- New focus on changing to a DevOps approach

Chap -1: Developing an Actionable Alerting Strategy

SRE - Site Reliability Engineering

What is reliability:

- The application can be used when and how it is expected to

Concepts

- Availability: should be up anytime with no error(peak hours)

- Performance: should perform basic functionality without using multiple resources

- Latency: click should take minimum time to access/Respond

- Security

Measuring reliability

- Service level agreement(SLA):

- A service provider(Azure) says how much their service will be available.

- defines the financially backed reliability commitments from a service provider

- For example, the Azure App Service has an SLA of 99.95% for paid subscriptions,

- Service level objectives(SLO):

- The Goals that the service wants to reach to meet the agreement

- These are the various goals within the SLA that the service provider is promising.

- It’s an agreement within an SLA about a specific metric like uptime or response time.

- SLOs are what set customer expectations and tell IT and DevOps teams what goals they need to hit and measure themselves against.

- Service level indicator(SLI):

- which are the actual specific metrics coming from the service that are used to make the goals and the agreements.

- Actual service metrics behind the commitment

What is SRE

The approach was created at Google in 2003 by Benjamin Treynor Sloss, who described it in an interview as, "What happens when you ask a software engineer to do an operations function?"

What:

- Developers that usually develop the software, but are not included in the support, are being brought in to use software engineering practices to solve problems.

Why/Goal:

- The goal being to remove as much manual labor as possible using automation, which will help rescue time and effort spent on fixing production issues.

- This also creates a healthy feedback loop because the same people that are developing the application are supporting it so that they can learn from the production issues and hopefully prevent them in the next deployment from happening again.

Why do we need SRE

What

Generally, in traditional environments, reliability is not focused on.

Product managers don't view it as part of their concerns and will often categorize reliability

as a nonfunctional requirement.

issue

The result is that work gets siloed.

Software engineers focus on development and then throw it over to the wall to the operators

who end up supporting an application that they're not very familiar with and then perform inefficient and manual fixes.

Resolve

So with site reliability engineering, you have engineers working the full stack. Often, production issues are software problems, so the same developers that wrote the code

are also supporting the application, and they can go back and edit the code to increase performance and develop automation to reduce errors and save time.

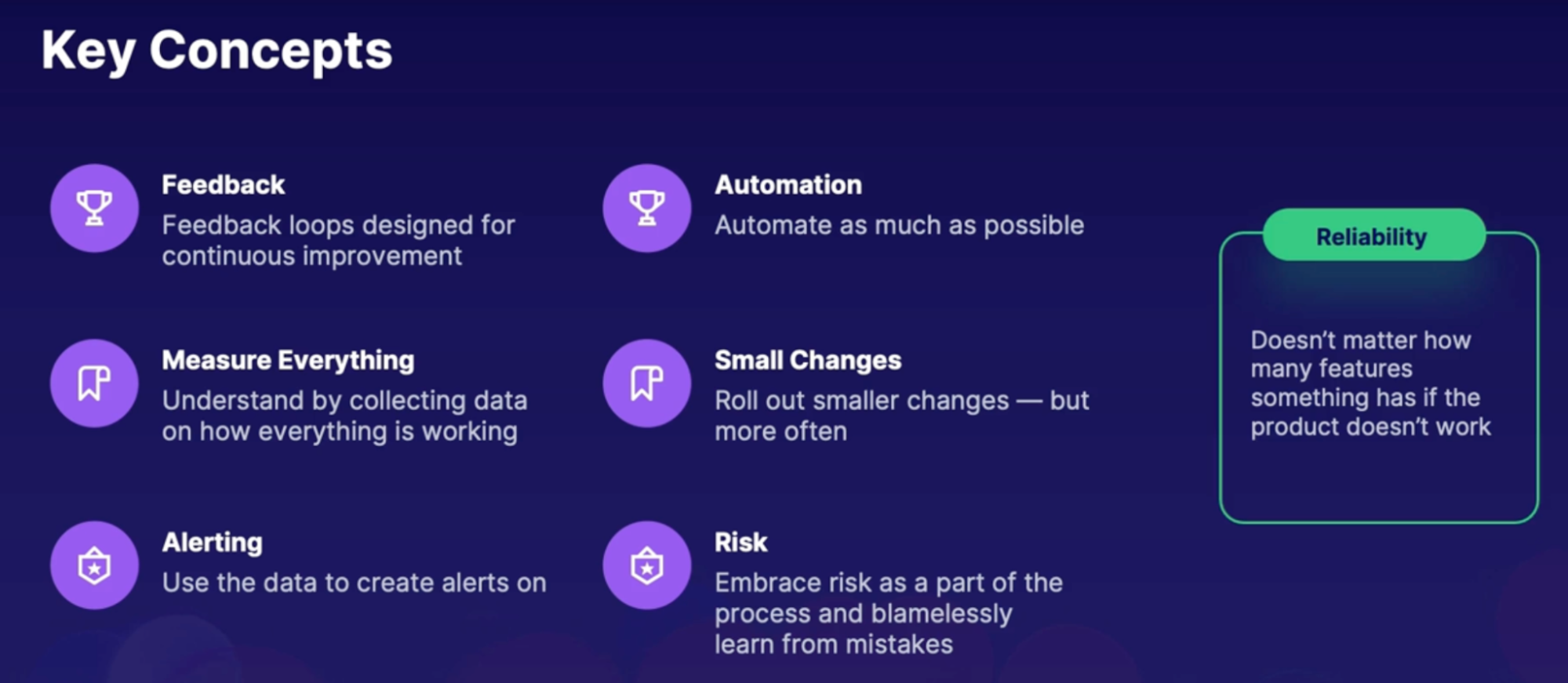

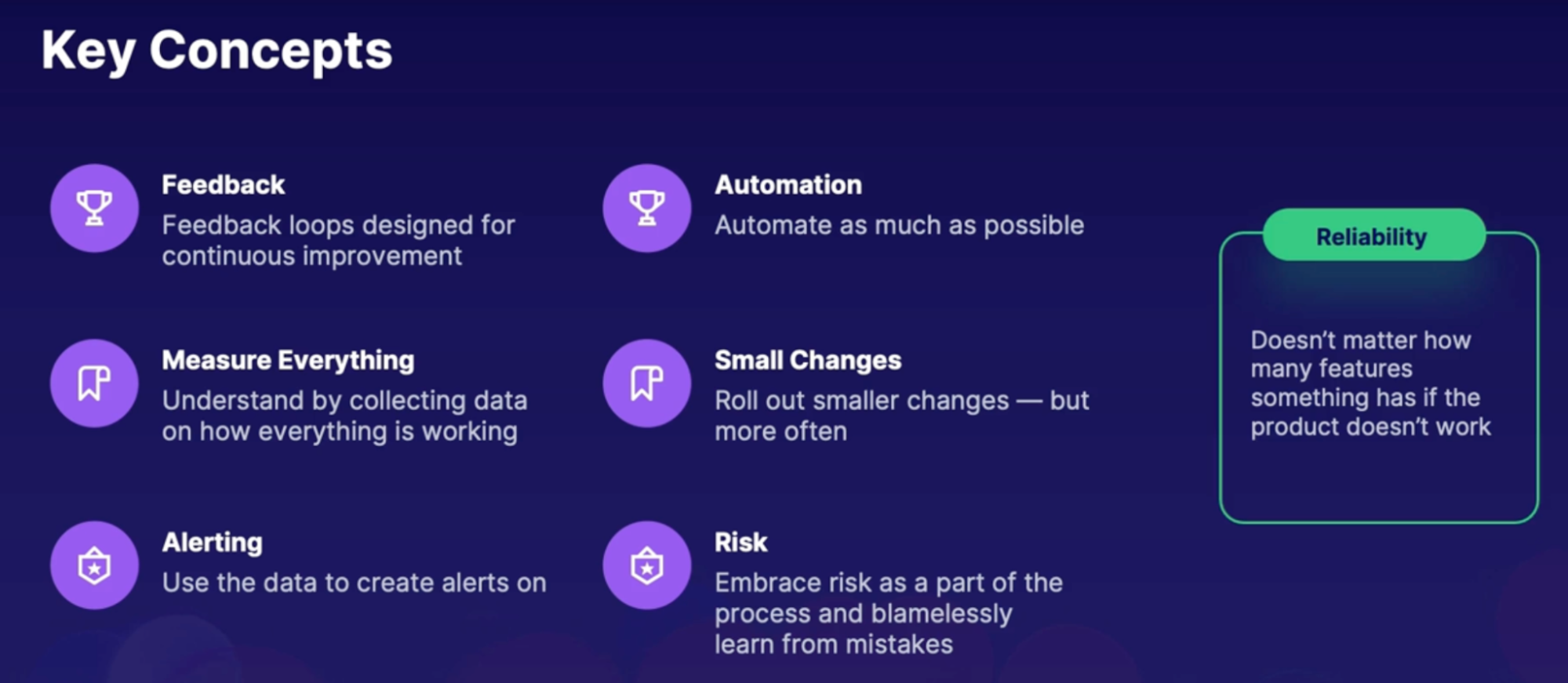

Key concepts

- Feedback

- Measuring everything

- Alerting

- Automation

- Small changes

- Risk

SRE vs. DevOps

DevOps | SRE |

DevOps is more of a culture of a group or a company where the roles of the developer and operators are brought together. | SRE is more of an engineering discipline that uses DevOps principles. |

if there's a problem, the operators will bring in a developer to help solve the problem.

| if there's a problem, the operators will bring in a developer to help solve the problem.

|

focus is more on development and deploying faster,

Focus- development → testing → production | Focus- development ← testing ← production |

Summary

- Reliability can be described in availability, performance, latency and security

- SRE takes development practices to solve operations problems

- site reliability engineers are effective because they can edit the code across the stack

- SLA is the agreement made with the customer on how reliable their system or service will be

- SLO defines those goals for the agreement

- SLI comes from the actual metrics and data from the system to be used to create goals

- SRE focuses on production and then looks back, whereas DevOps focuses on development and deployment to the next stage

Exploring metrics charts and dashboards

Intro to Azure monitoring

General location to gather information from your applications, infrastructure, and Azure resources

For the most part, this information is stored in 2 ways.

- Metrics:

- Metrics are various pieces of numerical data that describe/measure how something is performing at a certain period of time, as well as what resources it is consuming // performance counters

- Data points that describe system performance and the resources consumed at a certain point in time

- Examples like CPU, memory, or network traffic.

- Logs:

- are messages that are generated by certain events in your system.

- examples like errors or traces.

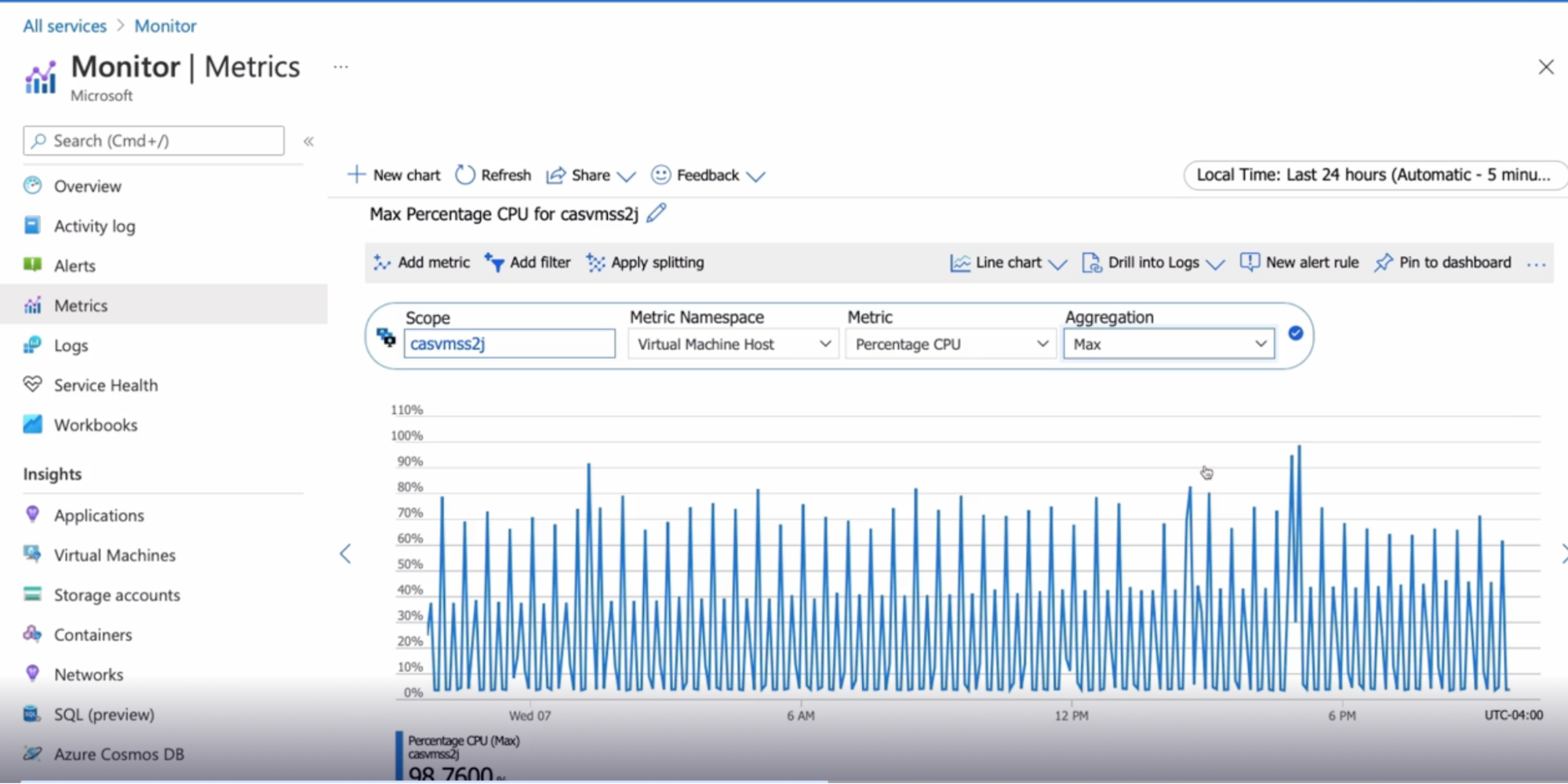

Azure Monitor metrics overview

what you can do with metrics?

- Visualize

- the data in various charts and graphs using Metrics Explorer.

- can save them to a dashboard or use them in a workbook.

- Analyze

- the data in the Metrics Explorer to see how systems are performing over time or compare it to other resources.

- Alert

- create alerts based on metrics data

- Example: VM reached a certain CPU threshold

- Automate

- implement autoscaling based on a certain level of traffic.

Demo

Create a chart to view metrics

Add additional metrics and resources

Add charts to dashboards

Summary

Implementing application Health checks

Scenario

A company using Azure monitors metrics for infrastructure health

Problem is this does not necessarily show the health of their actual applications.

So far, we've been doing manual checks to ensure the application is up.

Need: We are looking for a more automated process

Solution: Application Insights

What is Application Insight

Comes under Azure monitor service

Application Insights is a robust solution in and of itself

What

- It's designed to help you understand the health of your application.

- It tells you how the applications are doing, and how they are being used.

Features

It will give you information about

- Performance, user engagement, and retention

- will track things like request and failure rates, response times, page information like page views, or what pages are visited most often and when

- user information, such as where the users are connecting from, and how many users there are.

- will give you data on application exceptions where you can drill into the stack traces and exceptions in context with related requests.

- show you application dependency map

Availability

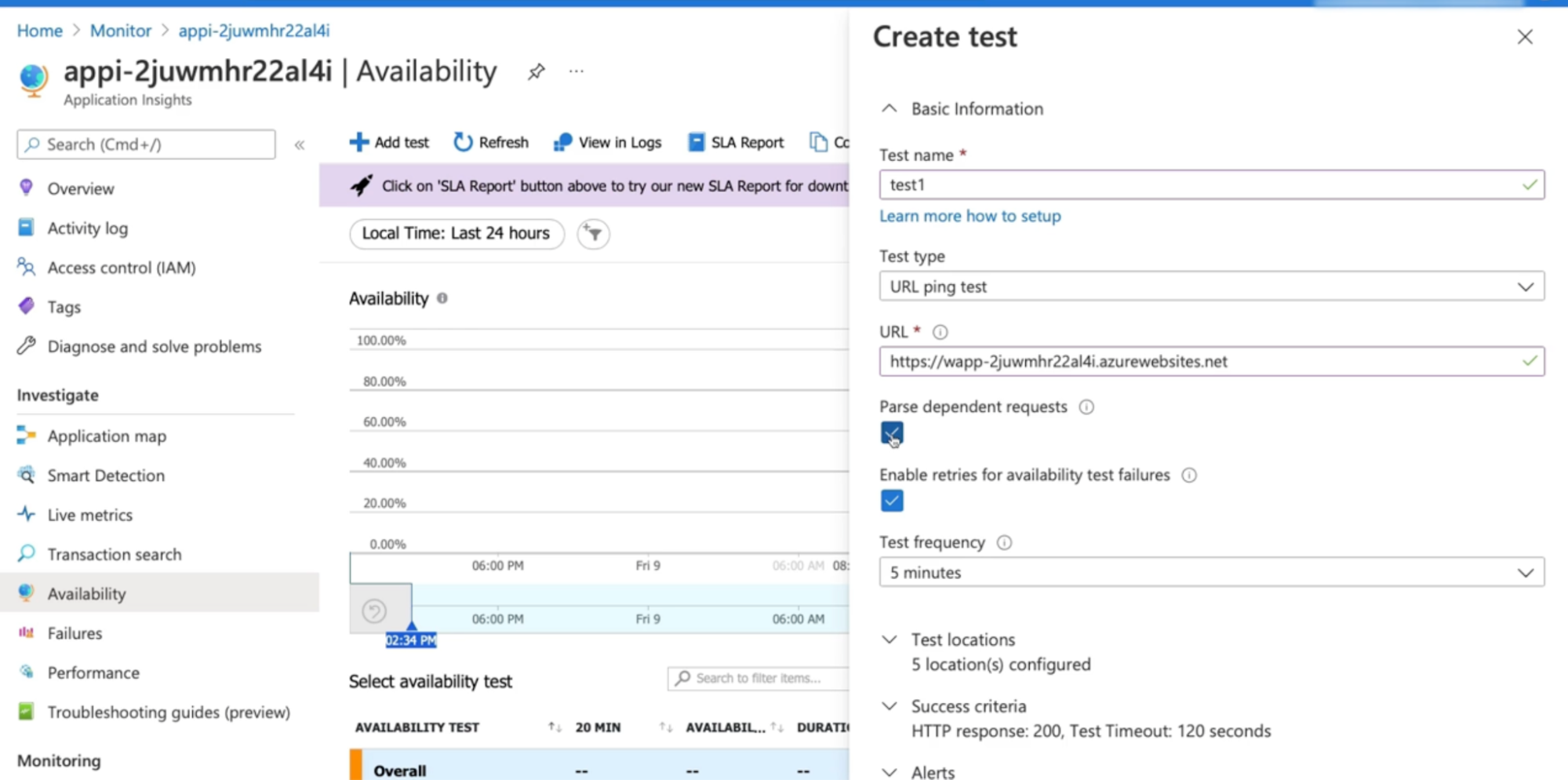

With Application Insights, you can configure a URL ping test, and this will send an HTTP request to a specific URL(your website URL) to see if there's a response.

- let you know how long it takes to make the request and the success rate.

- The ability to add dependent requests, which will test the availability of various files on a webpage, like images, or scripts, or style files.

- Enable retries recommended

- Test frequency: set how often you want the test to run and choose where you want to request to come from. // run test every 5 mins

- can select a minimum of 5 locations and a maximum of 16 locations to run the test from. // these are the location from where your website availability are checked to ensure your website is available across whole locations

- ability to set alerts on any failures.

Demo

Configure URL ping test

Configure health alert

Summary

- App insight gives information on your applications, such as how it's doing and how they are being used.

- provides availability tests, such as a URL ping test, which sends an HTTP request to a URL that you specify, and lets you know if there's a successful response.

- URL ping test can be found in the Availability section in app insight

- can add dependent requests to test the availability of certain files on a webpage, as opposed to just testing the entire webpage itself.

- Microsoft recommends enabling retries because many times, a site will come back up, and it's just a blip.

- can configure alerts based on those tests.

Discovering service and resource health alerts

Azure status

https://status.azure.com/en-ca/status

public-facing website that displays

- the health of all the services in Azure in all the different regions.

- if any outages or issues are going on.

- View a history of all the previous incidents.

Things that are out of your control but can affect your resources in Azure.

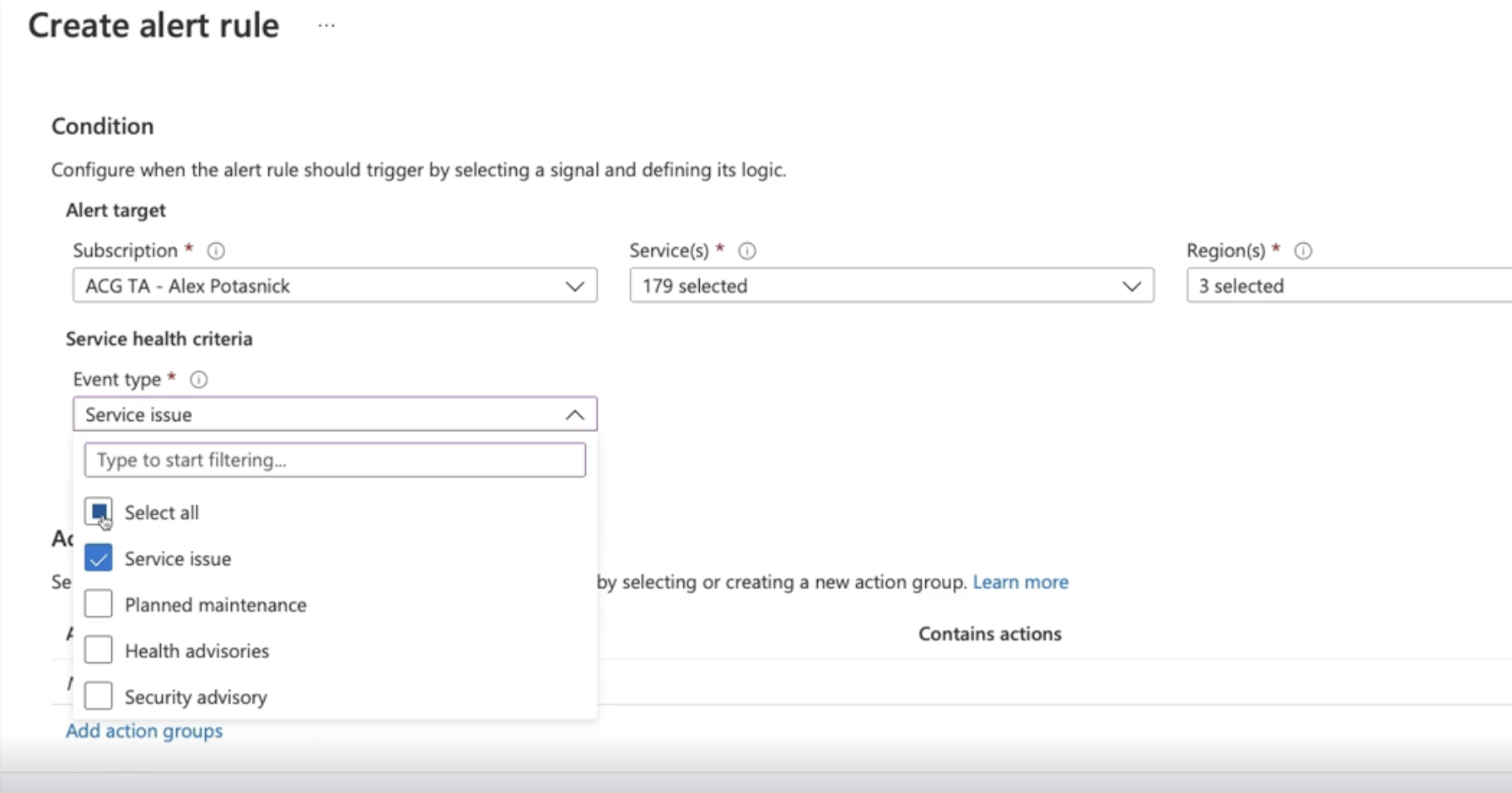

Service health

- Service health is under the Azure Monitor umbrella

- Use it for a more personalized experience

- only shows you information that affects you // from Azure side

- service issues: provide different types of messages such as service issues, which tells you information about any problems with services and regions that you have resources in.

- Planned maintenance: Any work that Azure will be doing that may affect the availability of your resources.

- Example: like implementing fault and update domains from Azure

- Health advisories: are if there are any changes to Azure services that you're using.

- Example: if there are any features that are not going to be available anymore or if any changes in the Azure service require you to update a framework or something like that.

- security advisories: provide notifications on things like platform vulnerabilities or security and privacy breaches at the Azure service level.

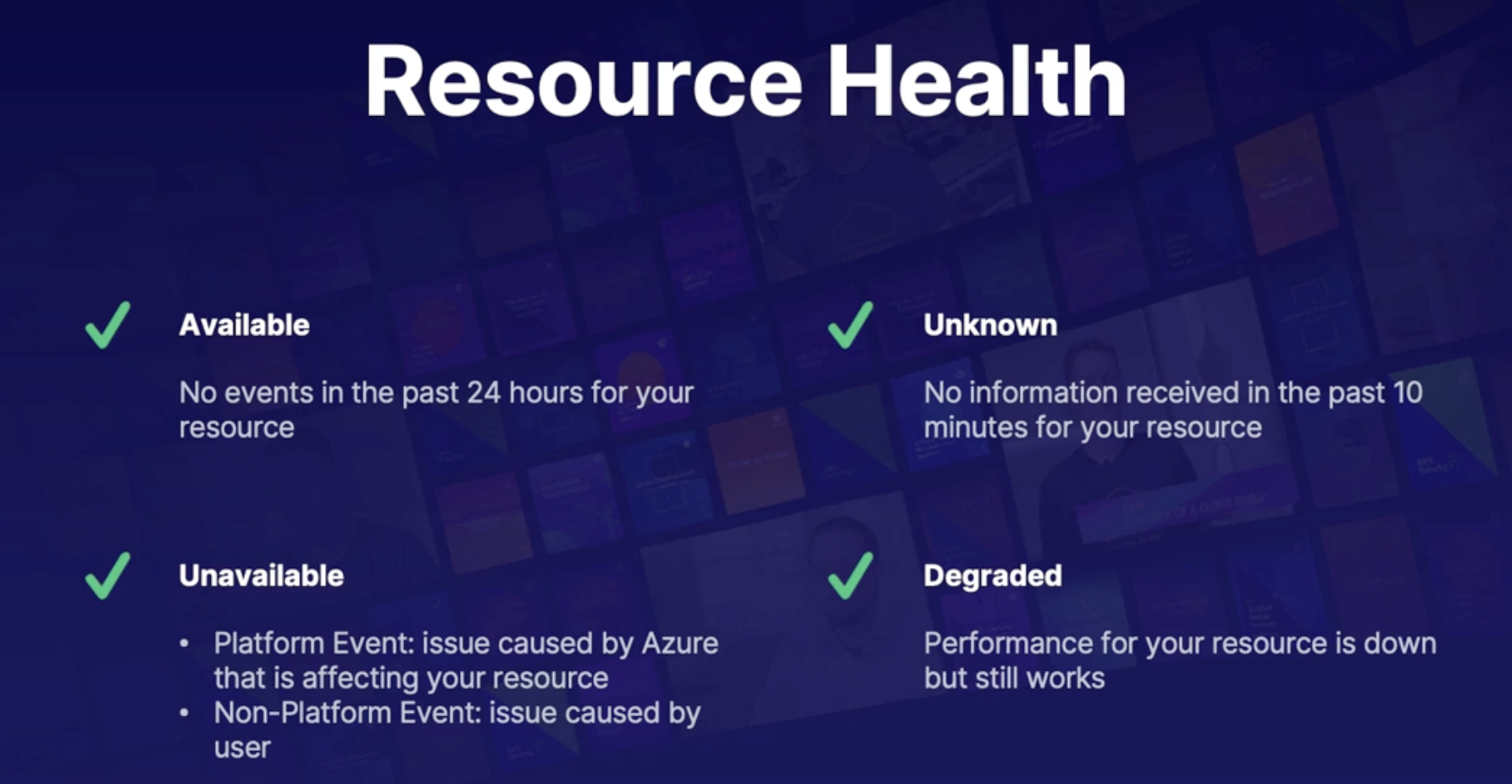

Resource health

What: shows you health information about your individual resources.

How: by using signals from other Azure services to perform certain checks.

Demo

Azure status page - https://status.azure.com/en-ca/status

View service health pages and create alerts

Monitor → resource health → add service health alert

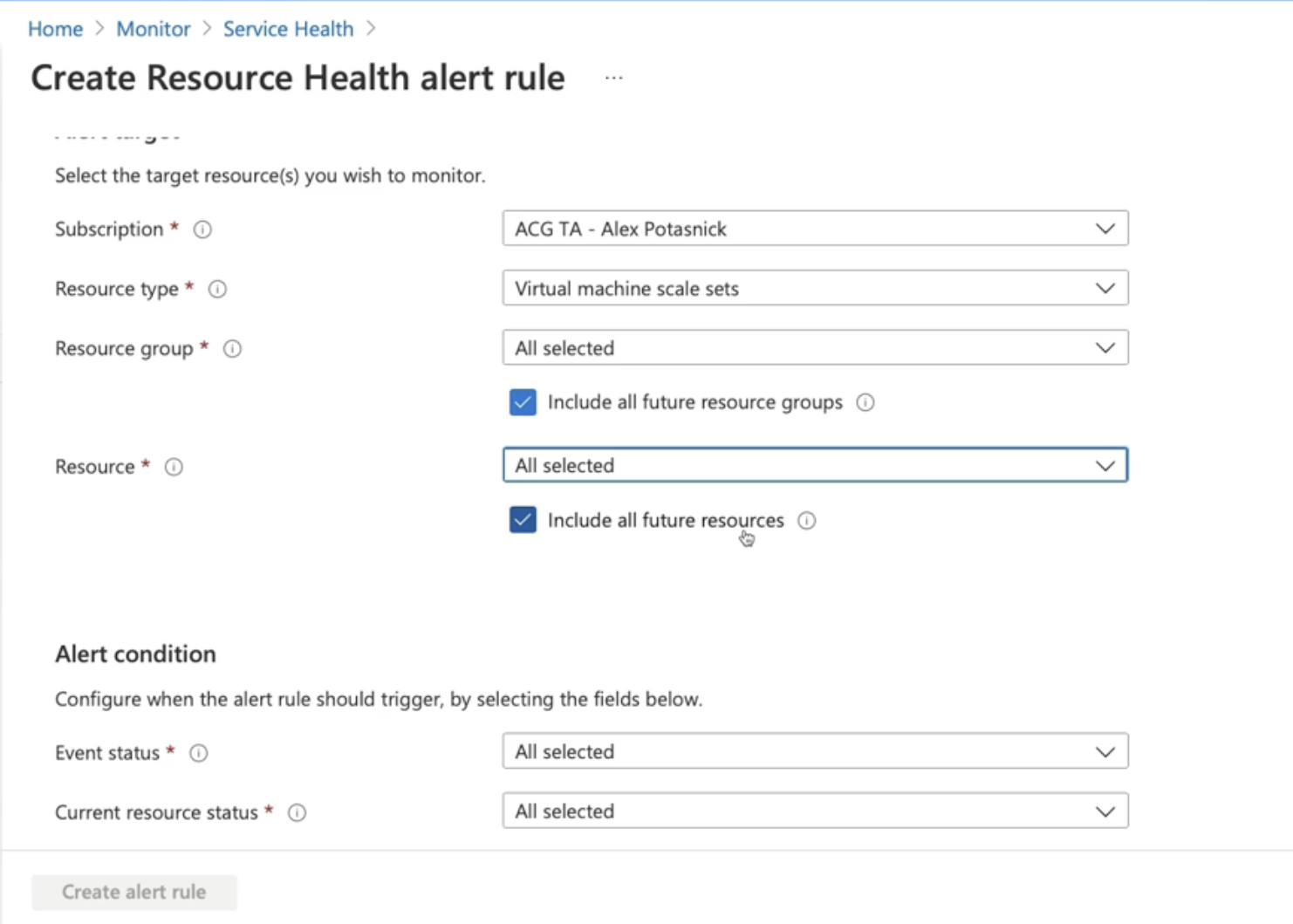

View resource health and create alerts

- Can filter resource group, resource, future resources

- Active resources

Monitor → resource health → add resource health alert

Summary

- Azure status page provides data for all services and regions

- Service health page provides data for the services and region you are using

- Service health alerts can be configured to notify an action group based on configurable events and conditions

- Resource health provides data for individual resources

- you can create alerts on specified resources based on certain resource health statuses and conditions

Self-healing alerts

Scenario

What

- they have created alerts on availability, service, and resource health

- Currently only notified when things go wrong

Need

- They wanted to know when the environment changes // want notifications

- Ex: wants to notify when scaling up and down

Vertical vs horizontal scaling

Vertical scaling | Horizontal scaling |

Change the size of the VM | Change the number of VM |

Still have 1 VM | Can have multiple VM sharing the load equally |

App Service vs. VMSS(Vitual machine scale set)

Horizontal scaling on PaaS(App Service) & IaaS(VMSS)

App service - PaaS | VMSS - IaaS |

No access to underlying machine → you just manage the code → worry about code not hardware | Full access to VM |

Higher flaxibity and less management // worry about code not hardware

| lower flaxibity and higher management // ability to manage VM and have access to environment variables, registry, file system, local policies |

Built in load balancing | Require separate load balancer |

Auto scaling - scale up | Auto scaling - scale out |

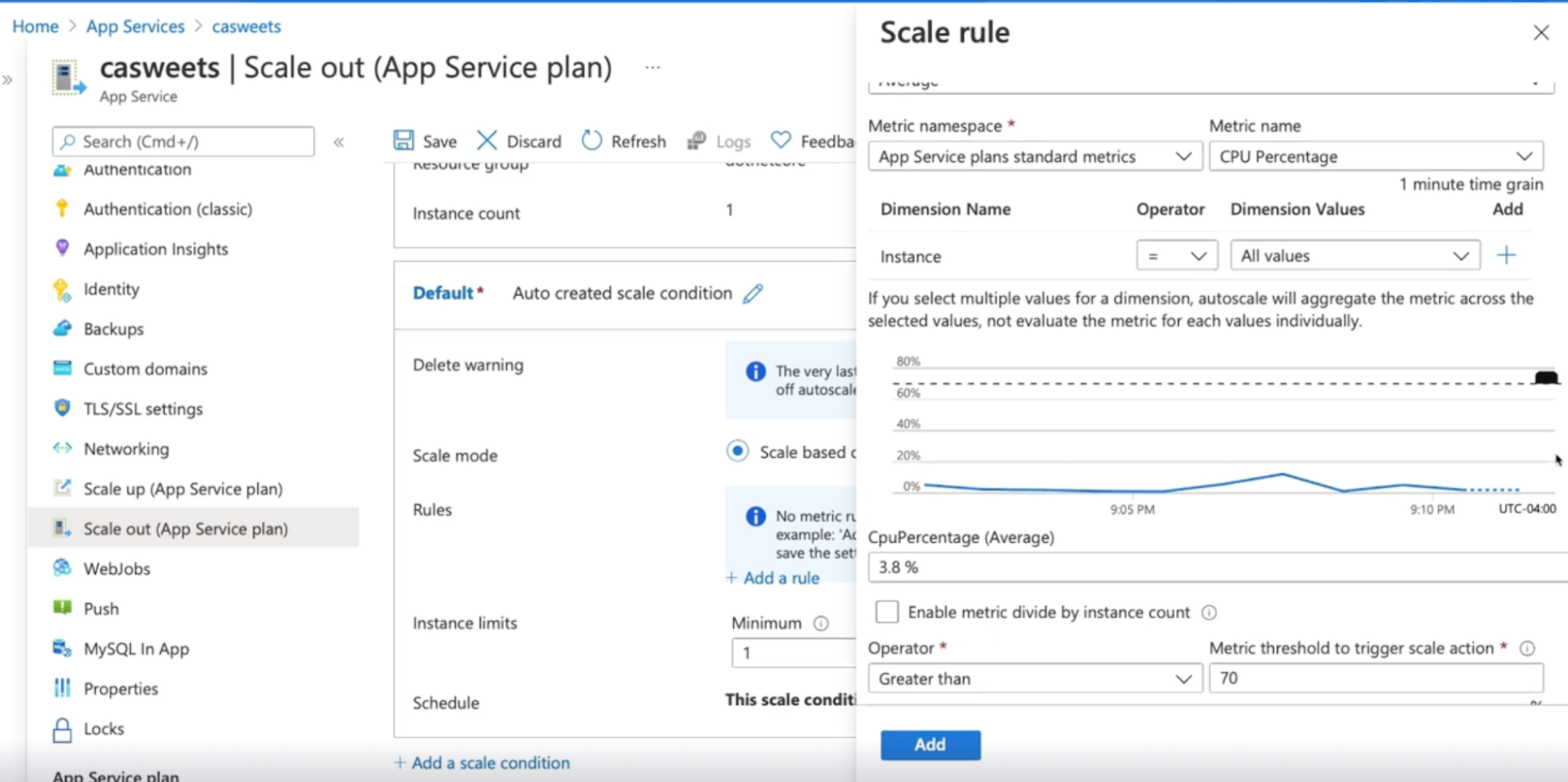

Autoscaling process

How:

- decide on the data input

- Option 1: Autoscaling could be configured based on a specific time, like if you know that at certain times you'll need more computing power. // sale time

- Option 2: configure autoscaling based on certain metrics.

- create rules like, if the CPU percentage is over 70% , to trigger an action,

- and that action would either increase or decrease the number of instances.

- Other actions can be triggered, such as sending notifications or sending a webhook that could be used for automation activities like a runbook, a function, or a logic app.

- Summary: a rule could be created to trigger a scaling action when certain metrics or time is reached.

Demo

Configure autoscale notifications for App service and VMSS

Auto scaling on app service

Auto scaling on VMSS

- Click on Virtual machine scale set

- Click on scaling under setting

- Click on custom auto-scale

- Choose scale based o metrics

- Configure rest steps

Summary

- Vertical scaling is changing the size, and horizontal is changing the number of VM

- Autoscaling can be configured by matrix or scheduled to a specific time

- autoscaling settings can be found in scale out section of a web app

- autoscaling settings can be found in the scaling section of a VMSS

- Notification can be set in the notify tab once autoscaling is configured

Chap - 2: Designing Failure Prediction Strategy

Introduction

What: analyzing a system with regards to load and failure conditions.

Exploring System load and failure conditions

Everything fail sometimes

What: everything fails whether it is hardware or software it will eventually fail at some point.

Need: goal is to be prepared for any eventuality before being notified by the end-user

Solution: collecting logs and metrics is so important so that we can analyze them and notice patterns to identify even if a failure is likely to happen.

What is failure mode analysis?

What: identify and prevent as many failures as possible

- Fault points: happens as part of the design phase when you try to determine any single point of failure

- fault points: any place in the architecture that can fail

- fault modes: all the ways a fault points can fail

- Rate risk and severity: by asking how likely it will fail and what is the impact

- would there be any data loss and if so can we afford that data loss or will there be any financial or business loss

- Determined response: how the application will respond in recover from a failure

How to plan for failure

Important questions to ask when making your analysis

- Understand the application

- what is application, what it does, how it does?

- what are the components and resources that are being used?

- are there any SLA for those component resources or are there SLAs for certain pricing tiers like standard or premium resource or performance limit in Azure

- determine if the system is critical or not critical

- If yes, the system should be running all the time, if no you could afford downtime

- know what are the components are connected to it and dependencies

- If the dependencies fails then it might cause the connected components to fire

- How are the users connecting to the system (if users are in AD then and an AD outage will cause a failure)

- external dependencies like third-party services

How can we reduce failure?

- Faulty domains: implement for domains where applicable

- make sure that your resources are hosted on a separate rack within the data center

- Zones: use zone-redundant storage, data, and availability zones where applicable

- Cross region: use geo-redundant storage and have a read access data replica and site recovery plan in another region(when the entire region is down)

- Scaling: use auto-scaling

Performance Testing

What: important way to understand our application are capable of, so that we can plan to prevent any situation that cannot handle

Types

- Load testing: test application can handle normal to heavy load

- You would know what the normal load is by gathering metrics and telemetry to understand what the normal numbers are

- Stress testing: attempt to overload the system to see the actual upper limits are

- Spikes: a best practice is to include a buffer to account for random spikes

Summary

- failure mode analysis is a part of the design phase to identify any possible failures that may occur

- to plan for failure, understand the whole environment, and uptime expectations(Front and back and dependencies everything)

- When it comes to performance testing, load testing makes sure the application can handle a normal too heavy load

- stress testing is used to find the upper limits of what the application can handle

Understanding failure prediction

You cannot prepare for everything

- Some failures can only be protected by analyzing trends from the historical usage Data and metrics after an application has been deployed

- Sometime something needs to be filled before we can learn from it

- Post Mortem sessions are for learning not blaming

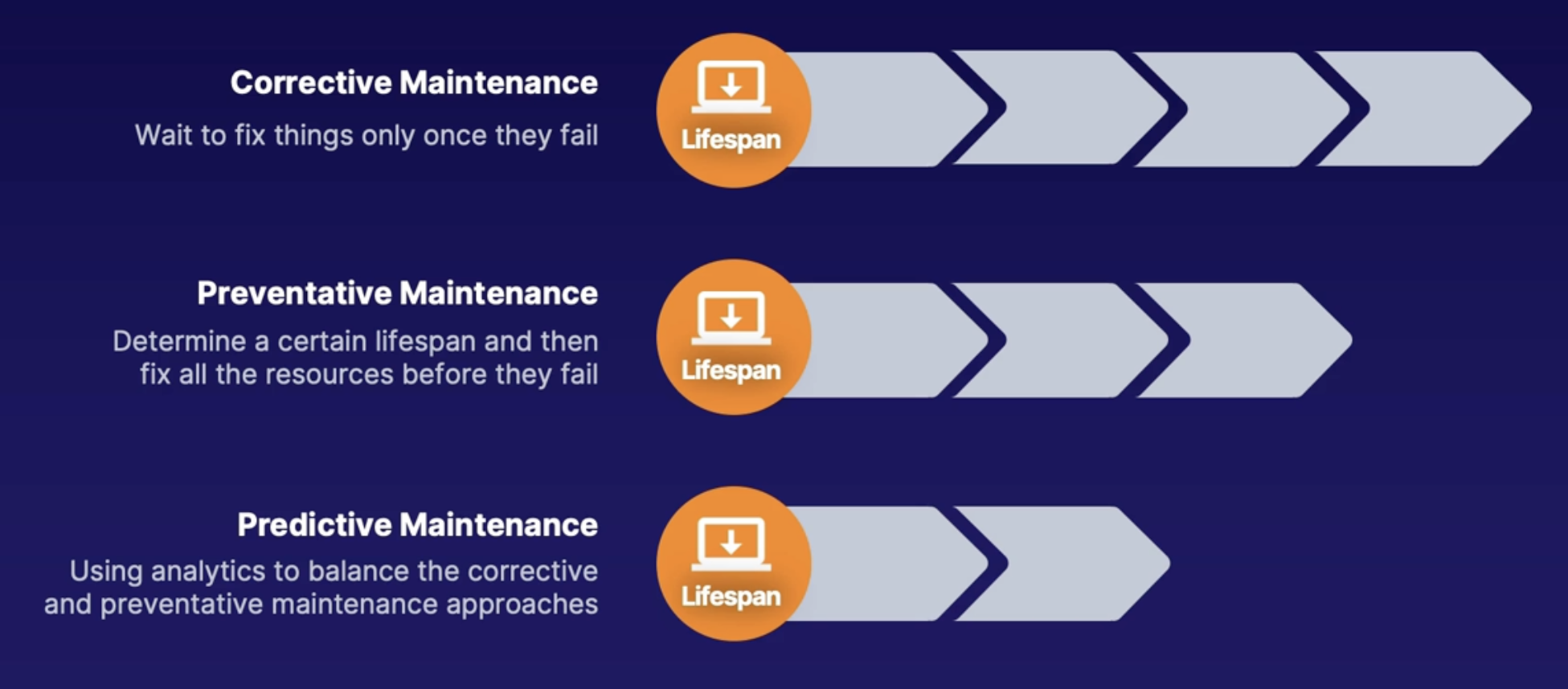

What is predictive maintenance PdM

Different approaches you can take when it comes to maintenance.

- Wait to fix things once they fail

- Example: Let's say we have a few VM with various lifespans. This approach will wait until each and every virtual machine will fail and only then fix the issue.

- this approach allows you to maximize the use of your resources up until they fail.

- The downside to this approach is that this will cause downtime and unscheduled maintenance.

- And this can be hard for the team that's doing the maintenance because it might mean that they have to schedule off-hours work or on the weekends.

- you try to come up with how long it will take before the resource fails, and then try to fix or replace all those resources at the same time before they fail.

- Or alternatively, once 1 resource fails, go ahead and fix or replace all the others, because once 1 resource fails, that's the new lifespan.

- So for example, even if one resource has a shorter lifespan, we now set that lifespan for all the other virtual machines. And then we go ahead and fix all the other virtual machines at that point so that we know that we're fixing it before it will actually fail.

- helps solve the problem with unscheduled maintenance and can prevent many things from failing at once.

- you won't be getting the full usefulness of the resource because you're fixing or replacing it before it fails.

- hybrid approach between corrective and preventive maintenance

- using analytics like matrix elementary and alerts to understand when a failure occurs

- this helps utilize the resources in the most optimal way by fixing or replacing each resource just before they are going to fail

- important on mission-critical systems expectation is running the system all the time with no downtime

- this approach also encourages capturing KPI’s(key performance indicators) which determine the health of the components of the system

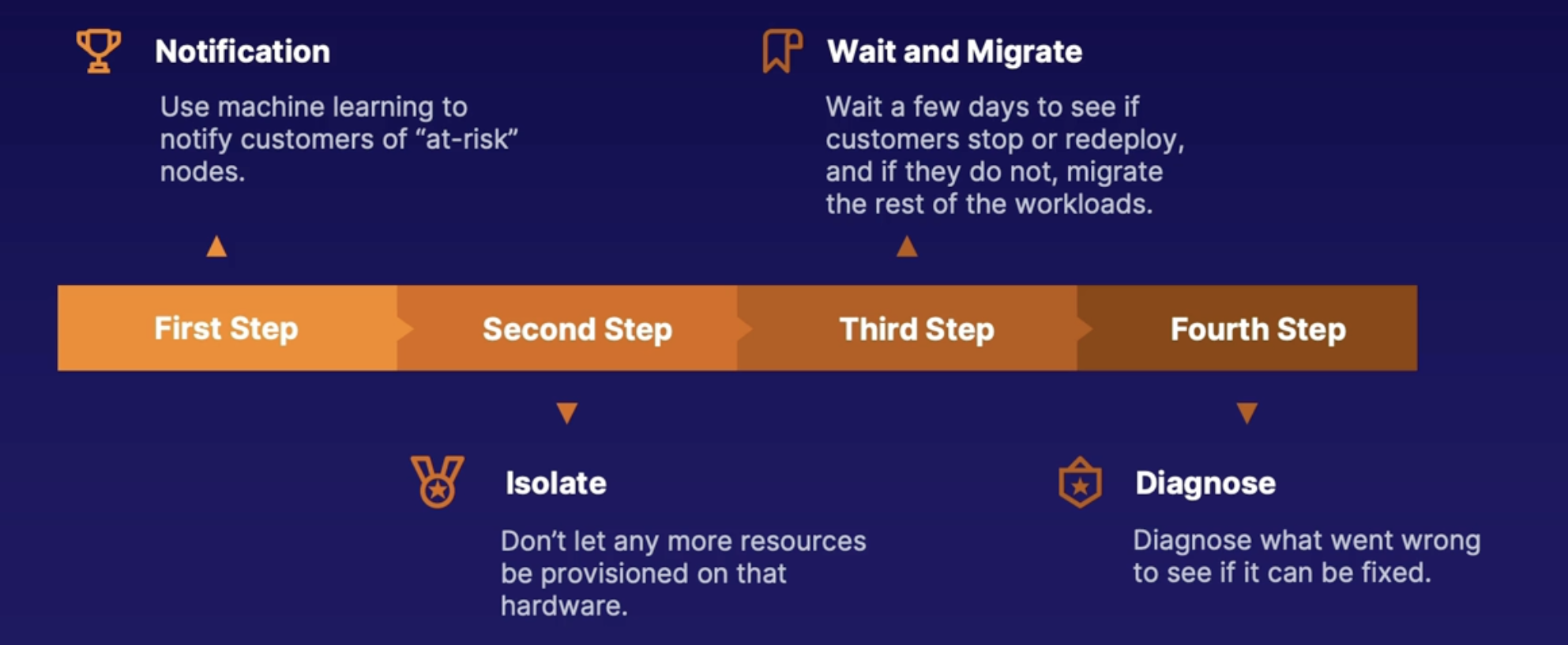

How Microsoft used to do PdM

Approach to predict and mitigate host failures

Previous approach

- Notification: use machine learning to notify customers of at-risk nodes

- Isolate: don’t let any more resources be provisioned on that hardware

- Wait and migrate: wait a few days to see if customers stop or redeploy, and if they do not migrate the rest of the workload

- Diagnose: what went wrong to see if it can be fixed

How does Microsoft do PdM now

New approach: Project Narya

uses more machine learning to focus more on customer impact

Reduce false positives and downtimes: sometimes the hardware was too damaged to wait or was not as bad as they thought

More signals: continuously grow the number of signals to determine health

More mitigation types: will respond with more types of mitigation and continue to analyze what are the best mitigations

Summary

- preventive maintenance establishes a productive lifespan and tries to fix things before they break

- predictive maintenance uses data and analytics to combine the corrective and preventive maintenance approaches

Understanding baseline metrics

Scenario

Performance testing is hard since each environment has a different load(dev, test, prod)

- Normal system behavior is different for each environment

Hence it’s difficult to do performance testing without proper baseline(what the normal load is?)

Why create baseline

Baseline

- tells us what are the normal conditions and expected behaviour

- Once it’s established you understand what a healthy state is, when there is a change in the state, create an alert

How to create baseline

Azure provided tools to create baseline metrics and workloads

- Log analytics and Metrics explorer: Create queries and charts to capture and analyze data

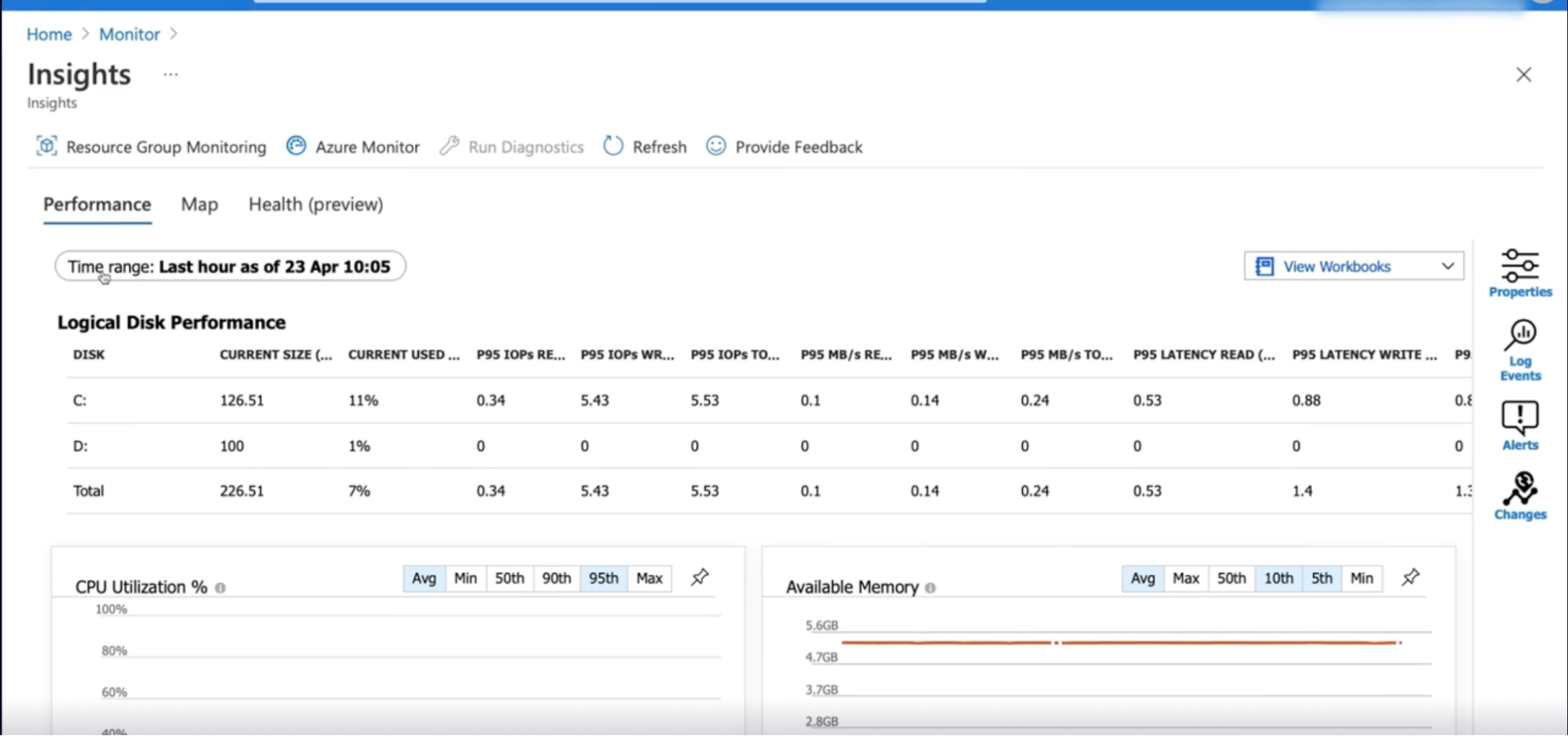

- Azure monitor insights: provides recommended metrics and dashboards for several services

- Click on monitor

- Navigate to middle section insights

- Insight for services includes VM, storage account, containers, network etc

- Click on one of that services and will give you a resource map

- Click on the performance tab: to see metrics and chart

- Disk performance

- CPU Utilization

- Available memory

- IOPS

To set the baseline

- Change the time range to a week or 2 weeks

- See the trend

- Set the baseline based on trend

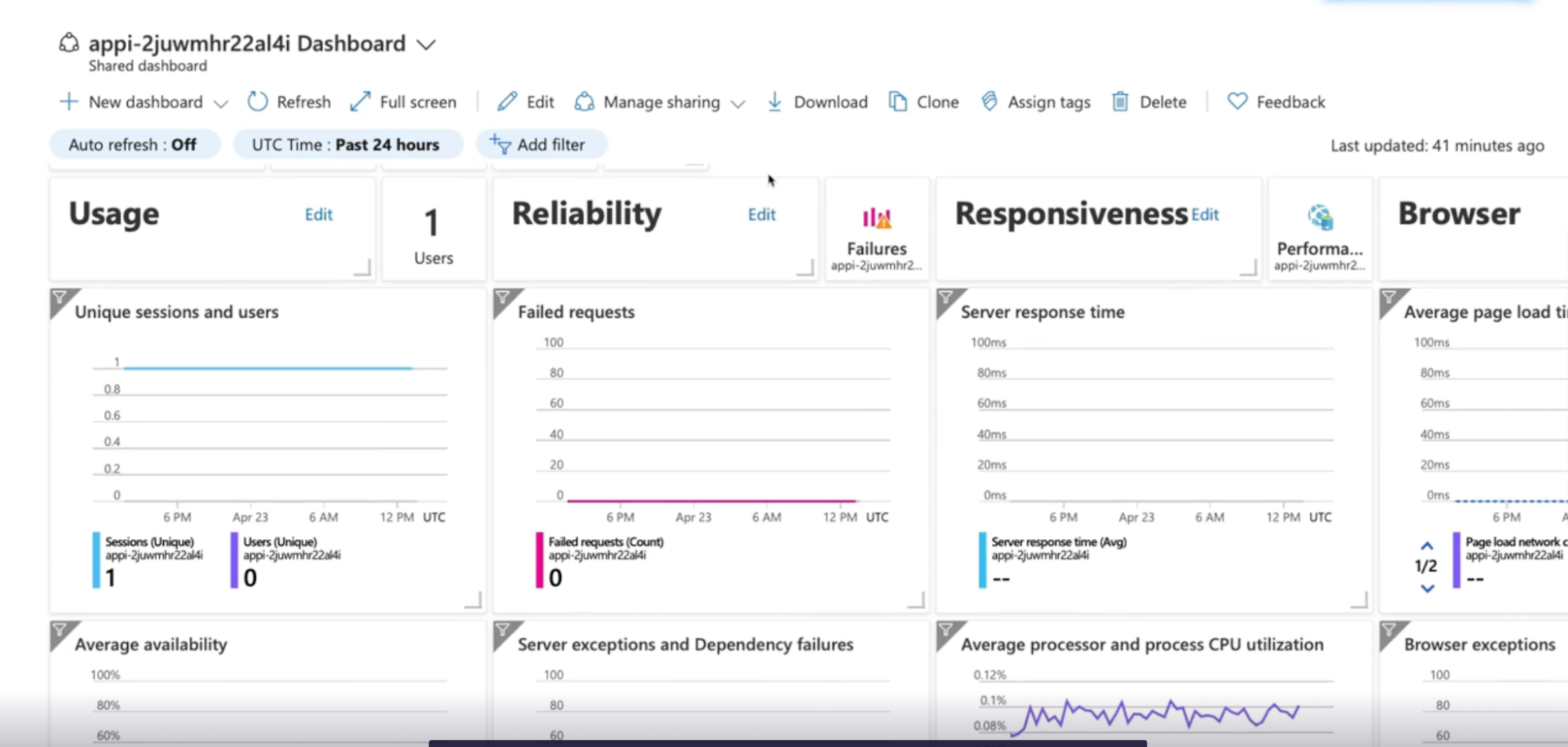

- Application Insights: provides recommended metrics and dashboard for an application

- ex: Your function is taking too long // normally takes 20 ms to call database, not it’s taking 50 ms

Steps

- Click on app insight

- See all the charts pre-configured by Azure

- Click on Application dashboard to see preconfigured application dashboard

Demo

Explore Azure monitor insight

See the pictures above for each Azure services

Summary

- A baseline can help you identify when a system is not in a healthy state so that alerts and improvements can be implemented

- Setup baseline

- Create alert

- Azure monitor insights provide recommended charts and metrics for Azure resources

- Application Insights provides recommended charts and metrics for applications

Discovering Application Insight smart detection and dynamic threshold

Scenario

- now using baselines for their performance testing

- looking into using that health baseline to create alerts

- want alerts to be adaptable to future changes that might alter the baseline(because in future baseline will change, so they want to automatically adjust the alert to the new baseline as things evolve or will they have to review the baselines every quarter or something to decide if they are still relevant)

Dynamic threshold advantages

Advantage over static threshold alerts Machine learning:

- Machine learning: is applied to analyze historical data to understand when there are anomalies

- less manual work: don’t have to manually figure out what the threshold should be

- set it and forget: can be set to apply to any future resources and will continue to analyze data and adapt to changes

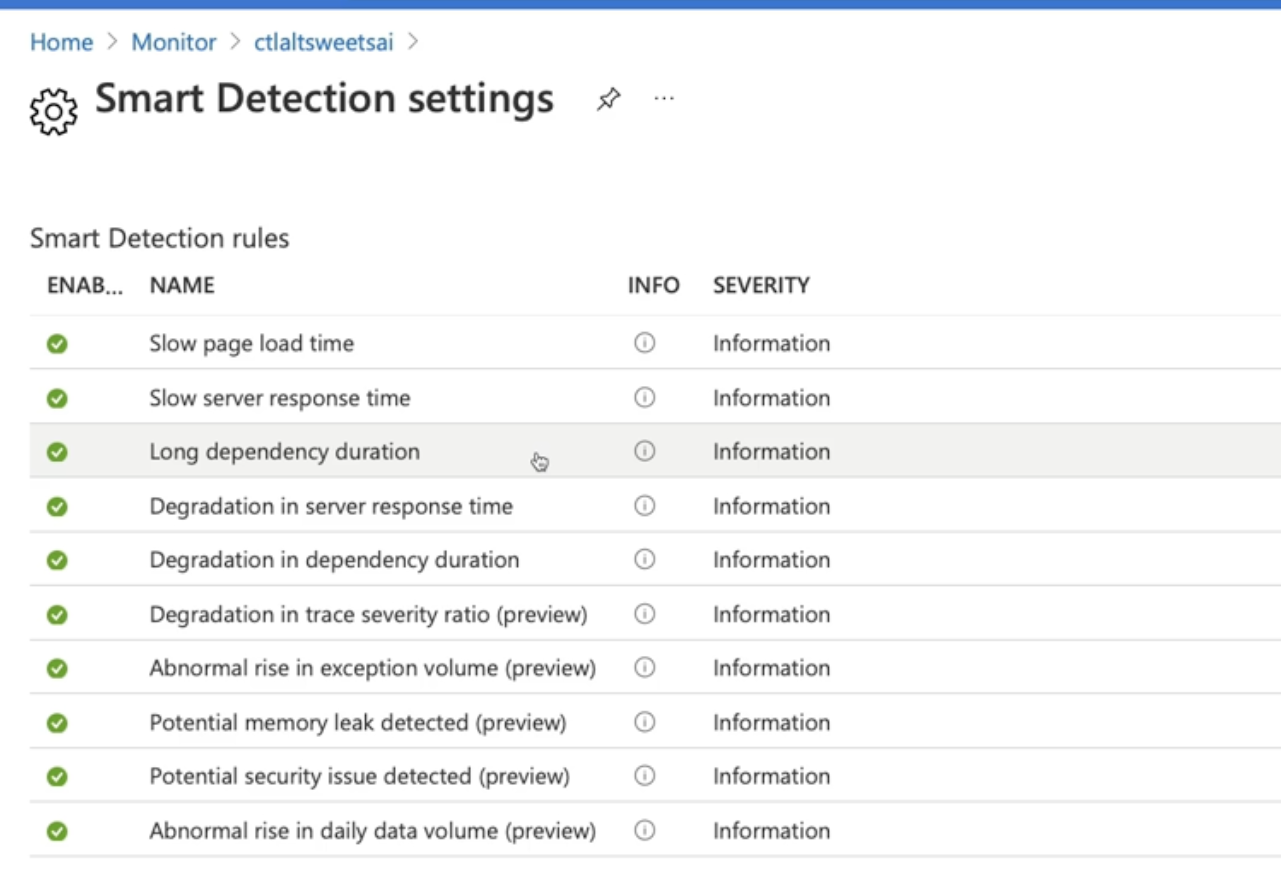

Application Insights smart detection

- Machine learning: analyze telemetry data to detect anomalies

- Built-in: once There is enough time in data to analyze it will be configured automatically

- Alerting: provides information based on findings as well as information as to why there might be an issue

Smart detection categories

- Failures

- failure rates: figure out what the expected number of failures should be

- continuous monitoring: alerts in near real-time

- alert context: provides information as to why it might have failed.

Needs:

- Minimum amount of data and 24 hours to start

- Performance

- page responses: if Beach takes too long to load or if operations or responses from dependencies are too slow

- daily analysis: sends a notification once a day

- alert context: provides information as to why it is running slow

Needs:

- The minimum amount of data and 8 days to start

Demo

Create an alert which dynamic thresholds

- With high threshold sensitivity, you’ll get more alerts (ex: max-14%, min-4% of VM CPU utilization) - because it’s likely that your CPU utilization will reach 14% more frequently than 17% - hence more alert

- With low threshold sensitivity, you’ll get less alert (ex: max-17%, min-2% of VM CPU utilization)

- Hight threshold takes the lowest

Create smart detection alerts

Steps:

- Navigate to your Application Insight instance

- Under investigate, click on smart detection

- Click on settings to see details

Summary

- Dynamic threshold apply machine learning to determine the proper appropriate metrics to be used as a threshold

- Smart detection applies machine learning towards application telemetry to notify you of anomalies

- Smart detection will continuously monitor failures and provide contextual information as to why it failed

- Smart detection will analyze performance once a day to let you know about slow response times

Summary

Chap - 3: Designing and Implementing Health Check

Deciding which dependencies to set alerts on

What is a dependency?

is one component that relies on another component to perform some function

each dependency exists because each component brings something unique. ex: HTTP calls, database calls, file system calls

Types of dependencies

- Internal: which are components that are within the application itself

- External: components that are not part of the application but our component that the application uses like third-party services. ex: when an application uses location service and utilizes the Google map API

- Dependencies in terms of setting up alerts - Strong vs weak

- Strong: strong dependency is a situation where when an application fails and the application doesn’t work at all

- Weak: it’s a situation where dependencies fail but the application still runs

Application Insights dependency tracking

- Track and monitor:

- helps identify strong dependencies by tracking and monitoring calls.

- this tells us if things are failing or not. Once we know if things are failing or not then we can observe how the application reacts to that dependencies

- if the application doesn’t work at all with those dependencies failing then this will be a case of a strong dependency

- Automatic tracking with .NET/.Net core: tracking is configured by default/automatically if using .NET/.Net core SDK for Application Insights

- Manual dependency tracking: configured using the TrackDependency API

- AJAX from webpages: application inside JavaScript SDK will collect AJAX call automatically

Which dependencies are tracked in Application Insights

Automatic | Manual |

HTTP and HTTPS calls | Cosmos db with TCP // configur using TrackDependency API |

WCF if using HTTP bindings | Radis |

SQL calls using SqlClient |

|

Azure storage with Azure storage clients |

|

EventHub client SDK |

|

ServiceBus client SDK |

|

Azure Cosmos DB with HTTP/ HTTPS |

|

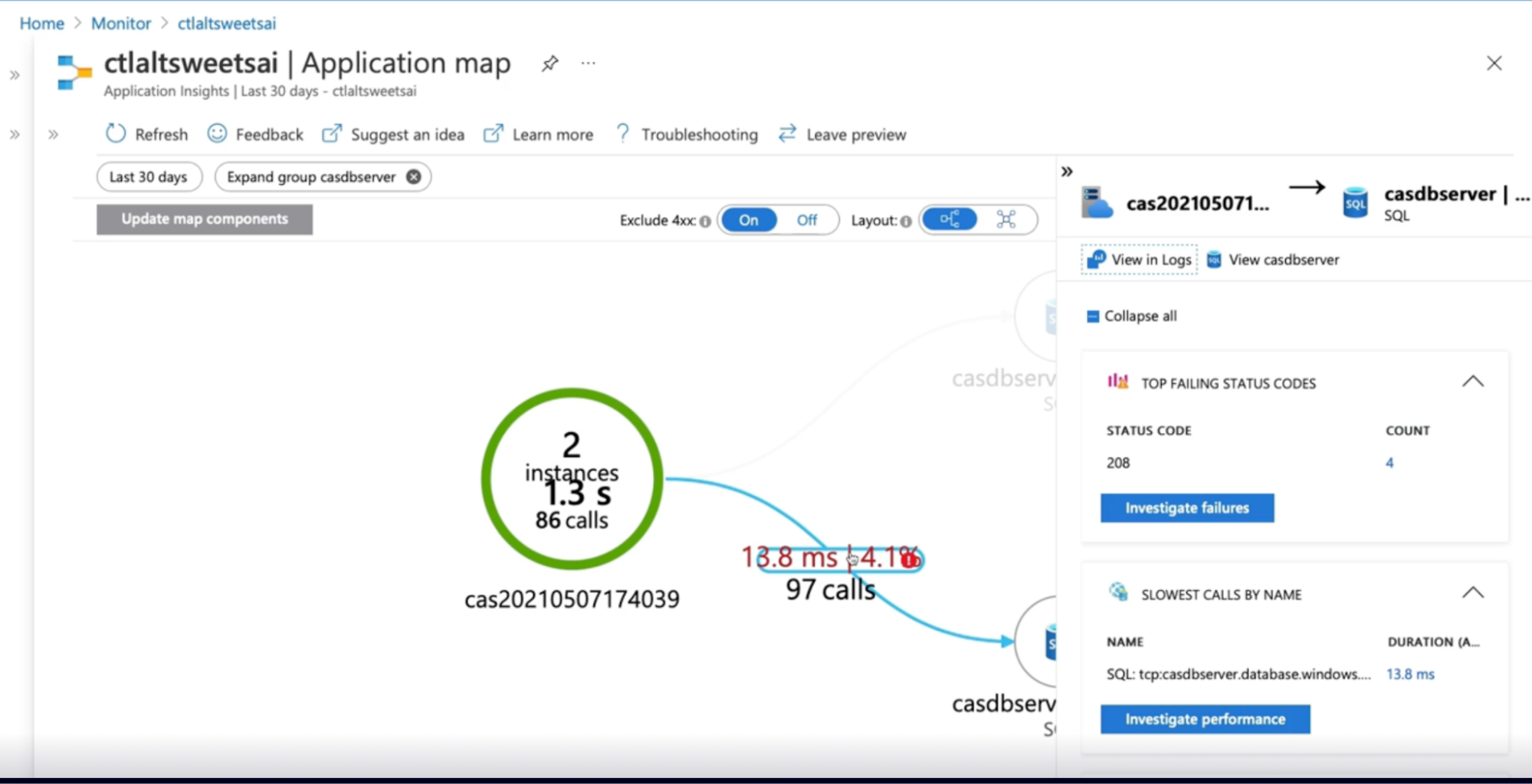

Where can I find dependency data

Gives you application focused dependency information

- Application Map(Application Insight): Provides handy visualization of all the components in your application

- Transaction diagnostics: which you can use to track the transactions as they pass through all the different systems

- Browsers: Browser information so you can see Ajax calls from the browsers and users

- Log analytics: used to create custom queries against dependency data

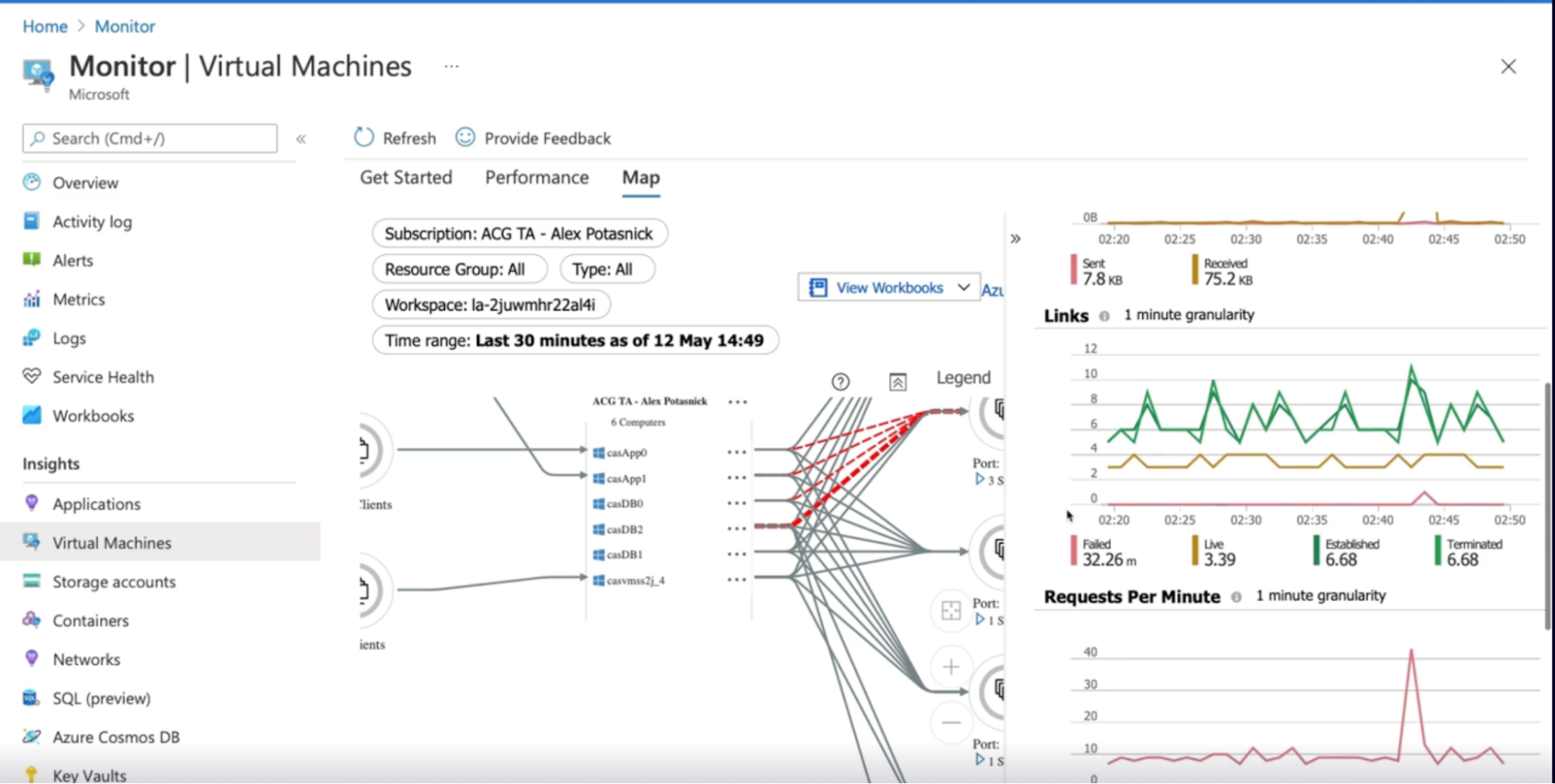

Application dependencies on virtual machines

Gives you VM-focused dependency information.

In order to see the dependencies information, you’ll need to install

- Agent: dependency agent needs to be installed

- Processes: shows dependencies by looking for processes that are running with

- connections between the servers that are active

- any inbound outbound connection latency

- TCP connected ports

- Views: from the VM it will show you information just local to that VM, VMSS or from Azure monitor(all components or cluster)

- Connection metrics:

- response time

- how many requests

- traffic

- links

- fail connections

Demo exploring dependencies

- Dependencies data in app insight

- Click on app insight instance

- Under investigate, click on application map

- Click on investigate failure and performance to drill down to details

- Dependencies data in VM

- Under monitor → Insights → VM

- Click on Map tab // to see the info on scale set

- Use this info to see what happened, when and why

Summary

- dependencies are components in an application that rely on each other for specific functions

- Dependency tracking is automatically configured with the.net and.net core SDK for Application Insights

- Manual dependency tracking can be configured using the track dependency API

- The application map provides visualization of application dependencies and correlated data

- A virtual machine application dependency map can be found in Azure monitor with system information and connection metrics

Exploring service level objectives SLO

with SLO, Configure our services based on the response times

What makes an SLO?

First, gather

- SLI: actual metrics from the system which tell us how the application is performing. Use those metrics to create targets.

- Target: which is the upper limit of how we want the system to perform, How reliable and available it is. once you have SLIs and targets, include Timespan

- Timespan: The amount of time/time range for SLI to reach to target (acceptable time for the SLI to reach the target limit)

Example: CPU should not exceed 70% over one hour; if so trigger alert

Idea is that we just want to make sure that the system can handle that load.

How is an SLO helpful?

Once SLO is established, how it can help us

- Once we have the SLOs in place, then we have an idea of what compute options we should be choosing when configuring our system. // hence make an informed choice on compute options

- They also help set the customer expectations

- on things like how fast an application will be and how reliable it is.

- gives the customer an idea of what the system or application can handle.

Callouts

- The SLO should be re-evaluated from time to time because things change.

- for example, if originally, when a company was first starting, there was an SLO where an application can handle 100 SQL transactions per minute,

- and now that the company has grown, they need to handle 500 SQL transactions per minute, then those SLOs will be reevaluated, and they would configure their SQL databases accordingly.

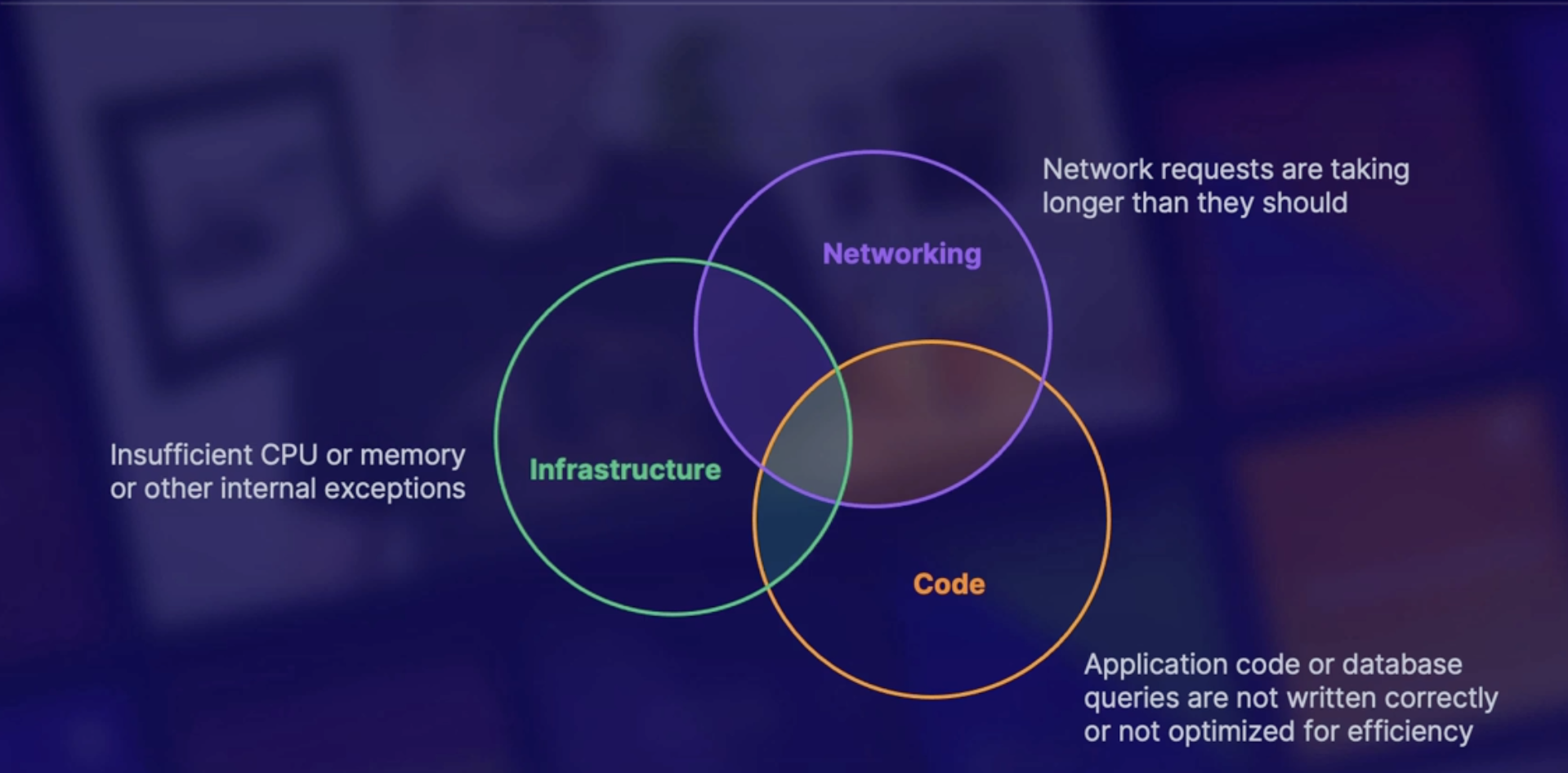

SLO’s and response time-outs

Questions: why is my app running so slow?

Answer: number of reasons

- First, it could be a networking issue, where the network requests are taking longer than they really should.

- it could be something in the code where the application logic or database queries aren't written as succinctly or optimized to be as efficient as they can be.

- This can also be an infrastructure problem, where the infrastructure in place isn't designed to handle the amount of load that the application is bringing in.

So once we have our SLOs, it gives us an idea of what we want our application or system to look like. And then we can adjust any of the things in these categories to meet those expectations.

Demo: Configure Azure SQL to meet SLO‘s

Steps:

- You have Azure SQL database. it hasn't been used in the past hour or so.

- Click on Compute and Storage to see database was deployed to be a general purpose database

- hardware configuration, it's a Gen5, with up to 80 vCores, and up to 408 gigabytes of memory.

- we only have 2 vCores provisioned

- we have a summary of what we just said, and it shows us a performance graph to let us know if we're optimizing our hardware.

- run a workload to see how it handles.

- logged into a virtual machine that's connected to the Azure SQL database, and run a workload.

- it's creating 20 threads to process queries. And we're going to see how the hardware performs while this workload is running.

- navigate down to the Metrics section under Monitoring

- choose a CPU percentage metric with your database

- change the scope here from 24 hours to let's say the last hour.

- And as we can see here, we've reached 100% CPU. // repose time is slow as it’s maxing out 100%

- Compute and storage → change vCores to 6

- Repeat the step 6,7,8 to see it’s running 20 threads as usual

- Cpu percentage hit 54% as compare to 100% previously

Call outs

- Run the database workload(see how many threads are created/running)

- Check the metric whether your workload causes the database to reach 100% CPU utilization

- If so increase the CPU Core

Summary

- An SLO is made up of an SLI, along with limit and timespan

- Once the SLO’s published we can choose a computer option to meet those expectations

- networking, code and infrastructure can all create situations where the system does not meet the SLO.

Understanding partial health situation

Health monitoring

What? - How can we design our environment to handle partial health situations?

TODO -

- The most important thing we can do is to understand when, how, and why those situations are occurring.

- Therefore, we need health monitoring, which gives us insight into the system’s health.

- System health: the system is healthy when it is up and performing the functions it is designed for

- Alerting: There should be an alerting system in place to notify when something is failing as soon as possible

- Traffic light system: Red(unhealthy), yellow(partially healthy), green(healthy) // by dashboard

Health monitoring data

- When configuring your health monitoring, it should be clear which parts of the system are healthy and which parts are unhealthy.

- And also to distinguish between transient and non-transient failures.

In order to do this, we can utilize things like

- User request tracing: which requests from the user has passed or failed and how long did they take

- Synthetic user monitoring: emulates the steps that a user would take when they are using an application

- this will show you how your application responds to typical user behavior, which can help predict when a system is going to fail, and then you can take precautions to prevent that situation from happening.

- Trace and event logs: We also need to make sure that we're capturing trace and event logs.

- Trace logs come from the application,

- event logs come from the system that's hosting the application.

- Logs generated from the application and the hosting infrastructure

- Endpoint monitoring: system or application endpoints that are exposed for use as a health check

Telemetry correlation

What

- Application Insights uses telemetry correlation to find out which component is causing the failures or is causing the performance to go down.

Why

- the idea behind this is to track the transactions from end to end to make sure that there are no issues in the application and system-critical flows.

- The idea is that if, let's say a dependency is down, then we can see how the other components will also go down as well.

- And within each of those components, we want to correlate any application events with platform-level metrics to get a full picture of what's going on.

- platform-level metrics: CPU, network traffic, disk operations per second with any application errors.

- Example: So for example, if let's say a certain function is looping continuously, and at the same time, we see that there's a high disk operation per second, those are probably related.

Application logs best practices

What: we also want to make sure that our logs are written in a way that's most helpful and actionable to us.

Some best practices are

- production log data: log data should be coming from the production environment to get an accurate picture of the production state

- Symantec/Structured logging: consistent log structure that helps simplify their consumption and makes them easier to analyze (situation where application generates a text file with all the logs in it and it’s impossible to find anything in the chain file)

- log events and service boundaries: using IT to help track transactions through various components(use a correlation ID to track the transaction and find out where and why it fails)

- Asynchronous logging: logging operates independently(because if we use synchronous logging, it can fill up and block the application code)

- Separate application logging and auditing: keep application Auditing logs separate so no transactions get dropped

- Azure policy: to enforce consistent diagnostic settings

Endpoint health monitoring

What

- Endpoint health monitoring provides functional checks in an application to let you know that each part of a system is healthy.

- help us determine partial health situations because it checks certain endpoints in the application to see if there's a successful response.

Examples

- response code: looks to see if there is a 200 response indicating there are no errors

- response content: analyze response content to determine if there are parts of the page that are failing even if you have 200 responses

- response time: Measure how long it takes to get a response

- external components: checks third-party and external components like CDN

- certificates: check to see if any SSL certificates are expiring

- DNS lookup: make sure DNS lookup is configured correctly and that there are no missed directs

Summary

- The system is considered healthy when it is up and running and performing the function that it was designed to do

- when monitoring is held it should be clear what the failure is happening

- telemetry correlation takes application in system event logs into account to provide a full picture across the stack

- Application logs should be consistently structured, easy to analyze, and traceable across service boundaries

- Endpoint health monitoring can be used on multiple endpoints to determine health and partial health status

Improving recovery time

Why do we need a recovery plan

Why do we need to make sure that we have a Disaster Recovery plan?

What are the things that can happen that can affect our business continuity?

Recovery situation includes

- Ransomware: type of malicious software that's designed to block access to your system until you pay them a certain amount of money.

- Data corruption or deletion:

- VM was doing some updates and it crashed, and the data on that machine got corrupted,

- or maybe somebody accidentally deleted something from a database or a storage account, and they weren't able to recover from it.

- Outages

- Networking, DNS issues, natural disaster

- Compliance

- Organization that you're working for requires you to have a business continuity plan to be compliant with their security policies.

High availability(HA) Vs disaster recovery(DR)

HA | DR |

Goal is to keep the application up and running in case of a local failure | goal is to make sure that the application can be recovered in an alternate site in a case of a regional failure // failover to secondary region |

| failover to secondary region against planned(planned outages) - we try best to prevent data loss unplanned events(natural disaster) - need to determine howmuch data we are willing to lose |

Recovery Point Objective(RPO) Vs Recovery Time Objective(RTO)

What:

- there will be some data that's lost. So we need to determine how much data we're willing to lose.

- in order to make that determination, we need to establish an RTO and an RPO.

RPO | RTO |

In case of an outage, how much data are you willing to lose | In case of an outage howlong can you afford to take to get your system back up and running // this is the measurement that you would use to determine how long your system could be unavailable.

|

EX: company is willing to lose 30 mins of data loss | EX: we want our system back up and running within an hour. |

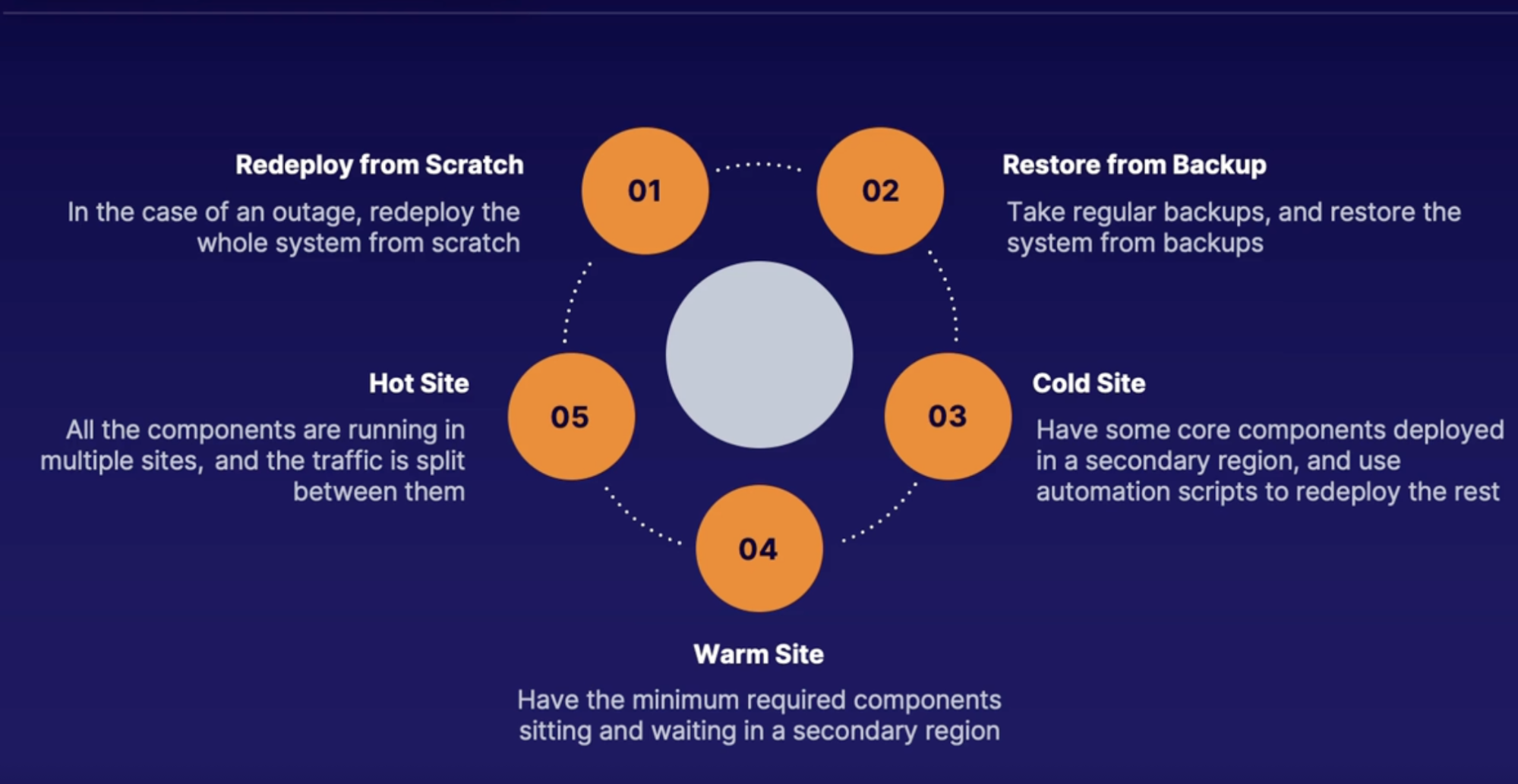

Business continuity strategies

Strategies we can employ to make sure that we meet our RPO and RTO?

First of all, we need to ensure that we don't just protect the application because there might be dependencies in your environment that are just as important, which we refer to as strong dependencies meaning that without these dependencies, your application can't run.

And it's also important to remember that different situations require different strategies.

- Redeploy from scratch:

- incase of an outage

- for a noncritical system that you don't need a guaranteed RTO because using this strategy would take the highest Recovery Time Objective(RTO) because you're starting from scratch.

- Restore from backup

- Take regular backups(of various parts of the system like the databases, the files, the virtual machines) and restore the system from backups.

- So when outage, you would just restore the components from the most recent backup, and depending on the Recovery Point Objective, how much data you're willing to lose, that will determine how often you take those backups. // Meaning, the more frequently you take backups means a lower RTO.

- Cold site

- this is where you keep some of the core components of a system deployed in a Disaster Recovery region in case there's an outage.

- Then you have the rest of the components deployed using automation scripts.

- Warm site

- active passive or standby

- this is where you have a scaled down version with the minimum required components needed to run deployed in a DR region, but just sitting there and waiting in case of an outage, meaning that there's no production traffic being sent to this DR location.

- this would be used in a case where a system is not designed to be spread across multiple regions.

- The RTO would be the time it takes to turn on any of the components if they're off or how long it takes to switch traffic to this second location.

- Hot site

- active/active, or hot spare, or multi-site

- this is where you have a full environment running across multiple regions with traffic being split to both of those regions.

RTO improvement options

So when trying to figure out what option you should use to improve your Recovery Time Objective,

- you need to assess your current environment and the situation that you're in, and decide how you want to balance RTO and RPO versus the cost because these things have an inverse relationship.

- So for example, were you to decide to use the

- hot site strategy - that would have the highest cost because you have your full environment deployed and running in multiple regions but it would also give you the lowest RTO and RPO because there would be virtually no recovery time;

- whereas, if we were to redeploy from scratch - that would be the least expensive option because you don't have anything running or deployed in anywhere else besides for your current region, but the RTO and RPO would be the highest because it would take the longest time to recover from an outage in that situation.

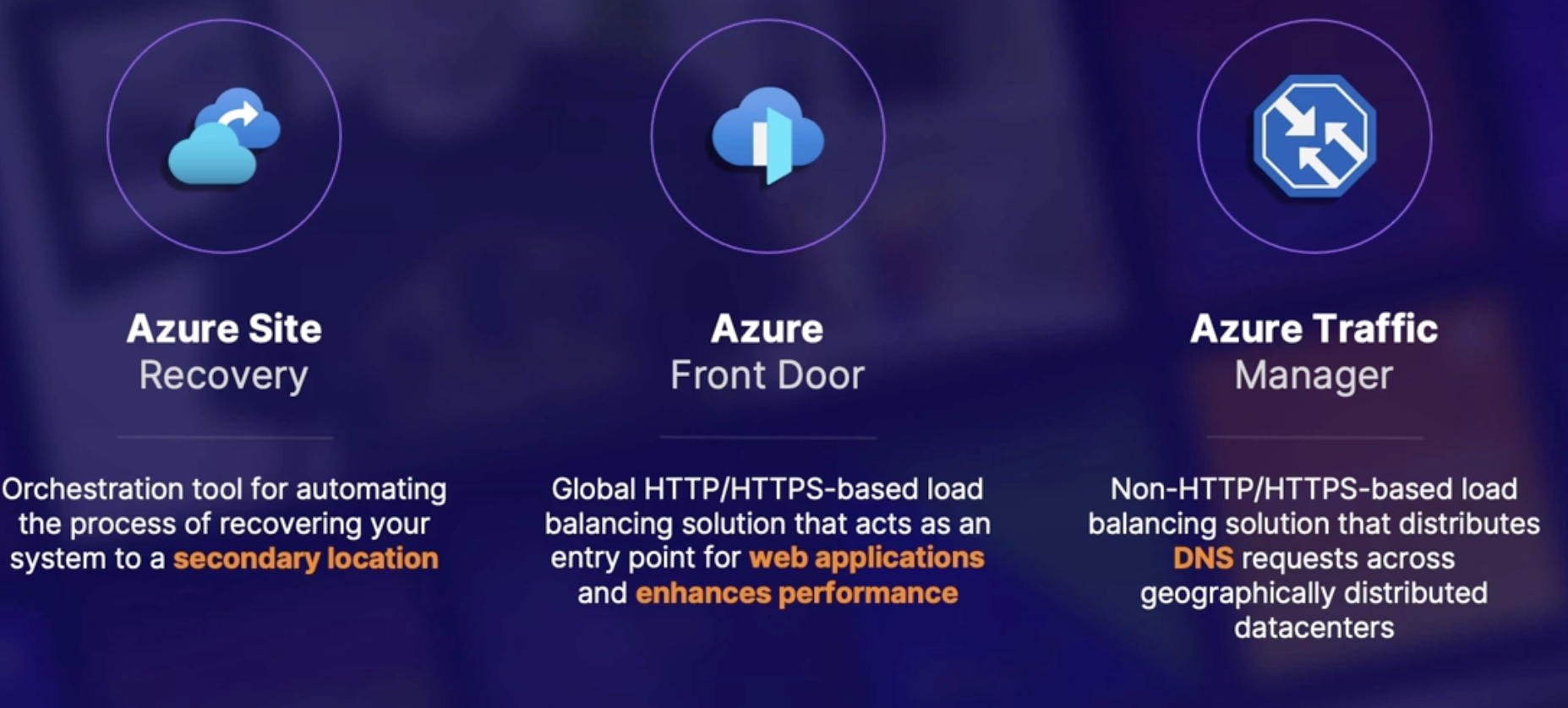

Azure services that help reduce RTO

- Azure Site Recovery:

- Azure front door

- Azure traffic manager

Summary

- High availability focus on local failure

- Disaster recovery focuses on regional failure

- recovery point objective RPO quantifies acceptable data loss

- recovery time objective RTO determines how long a system can be unavailable

- as we move down this list of DR & HA strategies, we reduce the RTO but the cost of the solution increases

Exploring computer resource health checks

App service Health checks

Azure App Service has a built-in health check where it routes traffic only to healthy instances.

in order to configure this, you need to present a path to verify the health. this can be something like

- Endpoint Check:

- If the specified path returns a status code of 2XX within 60 seconds it is healthy. this could be an endpoint of database or application itself.

- if a response is longer than 60 seconds and returns a 500 status code then it’s deemed unhealthy

- it will ping the instance twice and remove it after two unsuccessful pings

- Reboot after removal: after removal the instance will continue to ping and then reboot

- Replace: if the instance remains unhealthy after one hour it will be replaced with a healthy instance

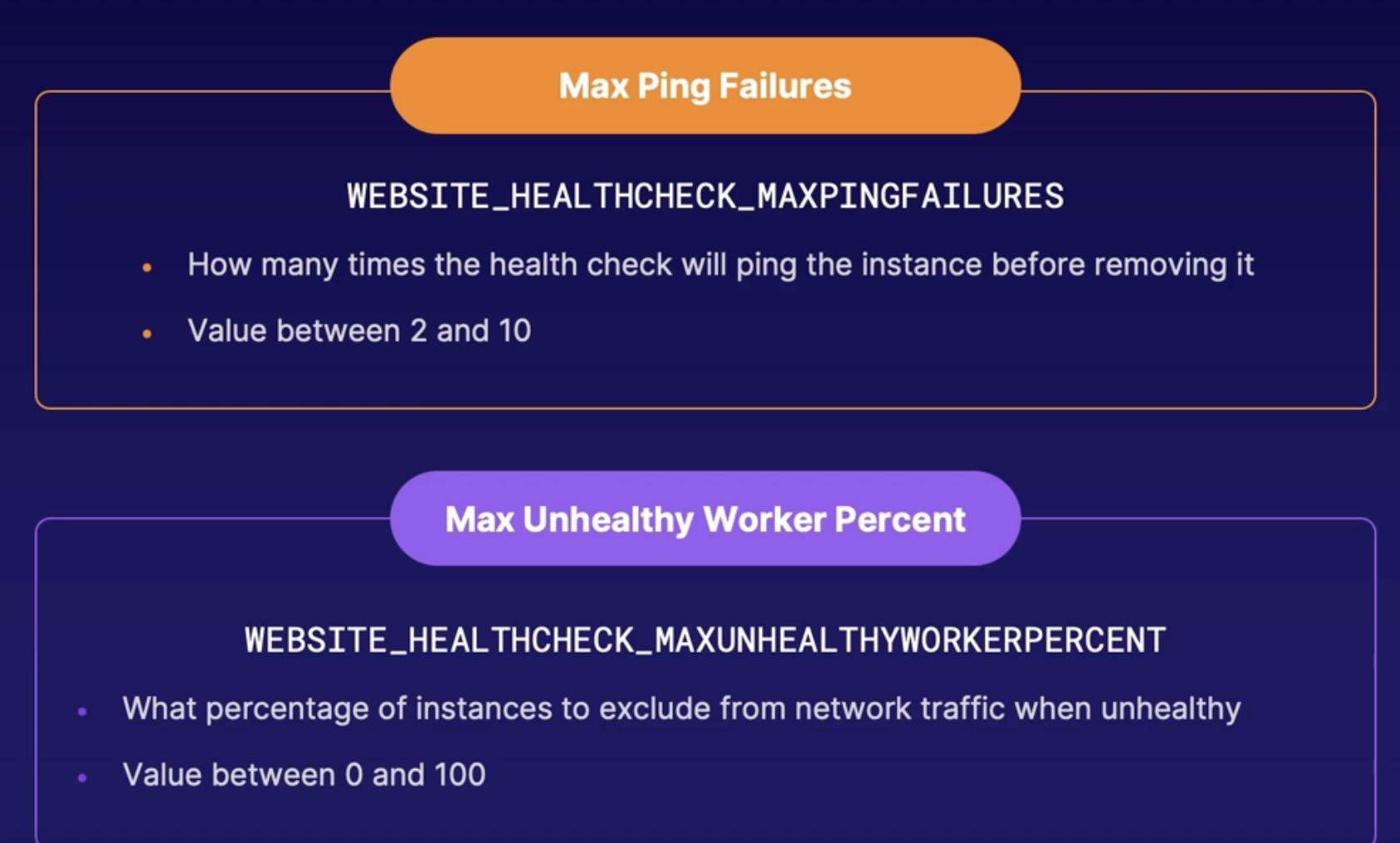

Customize Web App health checks

How?

- this health check can be customized in the app settings by using the WEBSITE_HEALTHCHECK_MAXPINGFAILURES app setting

- where you can specify how many times the health check will ping the instance before removing it.

- And you can choose between 2 to 10 times.

- You can also configure the EBSITE_HEALTHCHECK_MAXUNHEALTHYWORKERPERCENT setting

- where, by default, if the health check deems an instance to be unhealthy, it will only exclude network traffic to up to 50% of the instances to try to avoid the remaining instances getting overwhelmed.

VMSS Health extension

What: this let you know if any of the VMs in the scale set are unhealthy,

How: and it does this by checking an endpoint to determine its health.

- you can deploy this by PowerShell, CLI, an ARM template.

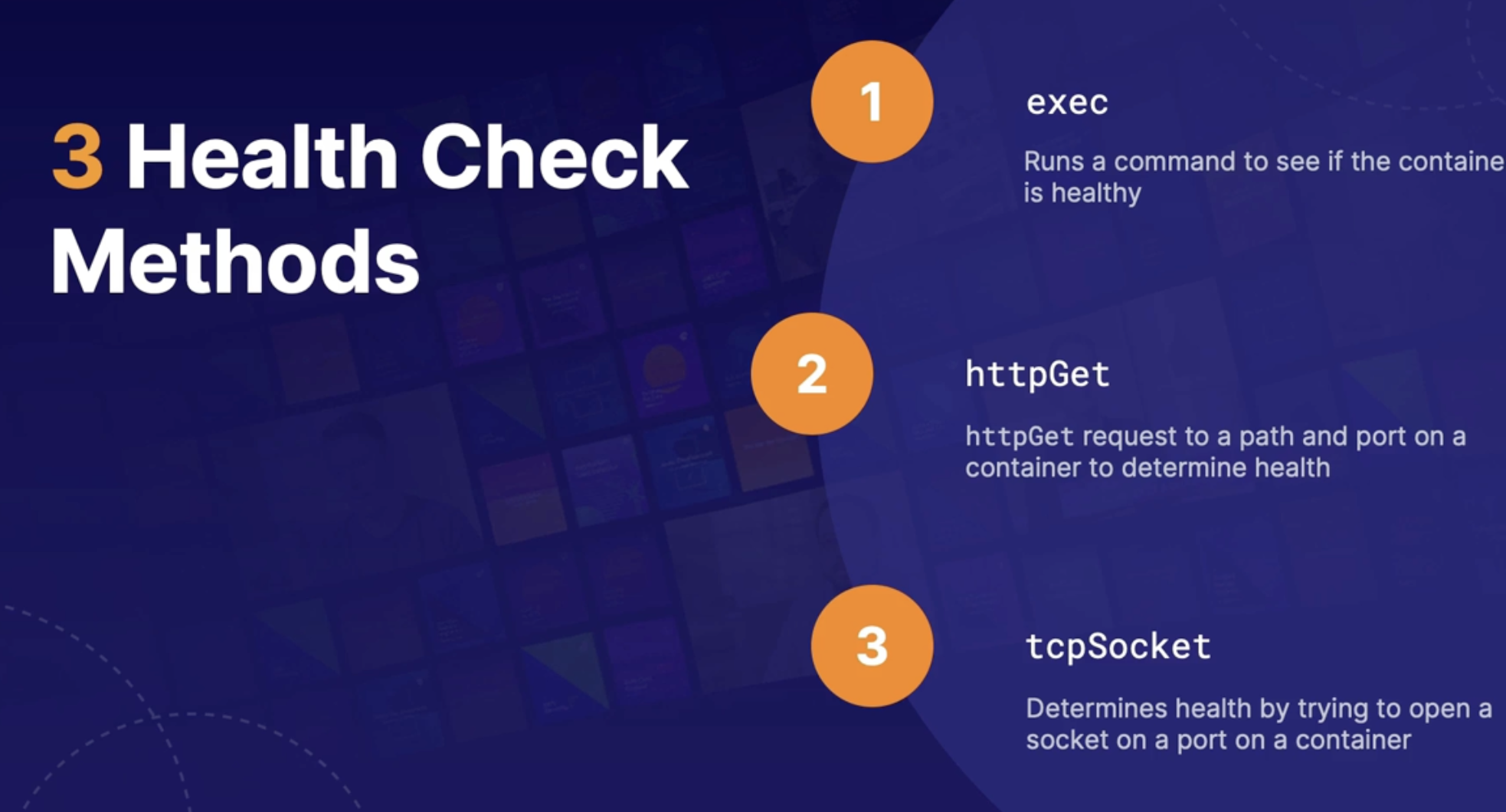

Container health check types

Kubernetes can automatically restart unhealthy containers, but by default, Kubernetes will only consider the container to be unhealthy if the container process stops.

And this is where liveness probes come in.

- Liveness

- customize how to determine if the container is healthy

- runs continuously on a schedule

- Startup

- checks held in container is starting up

- Use case : legacy app that takes a long time to startup

- no support by an ACI. only in AKS

- Readiness

- checks when a container is ready to accept request as it starts up

- prevent traffic to pods that are not yet ready

Liveness Check Example

https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/

Type: LIVENESS

Method: exec

livenessProbe:

exec:

command:

- cat

- /tmp/healthy

initialDelaySeconds: 5

periodSeconds: 5 |

Startup Check Example

Type: startup

Method: HTTPGET

startupProbe:

httpGet:

path: /healthz

port: liveness-port

failureThreshold: 30

periodSeconds: 10 |

Readiness Check example

readinessProbe:

tcpSocket:

port: 8080

initialDelaySeconds: 5

periodSeconds: 10 |

Summary

- App service Web Apps have a built in health check that can be configured to check a specified in point for health status

- web apps are healthy if the response returns eight 200 response code with 60 seconds

- an application health extension can be deployed to check the health of VMs in a virtual machine scale set

- You can determine container health by executing a command, sending an http request, and attempting to open a TCP socket

- container liveness probes allow you to configure custom health checks that run continuously

- container startup probs provide health checks only when the container start up

- container readiness probes will let you know when the container is ready to receive requests

Summary

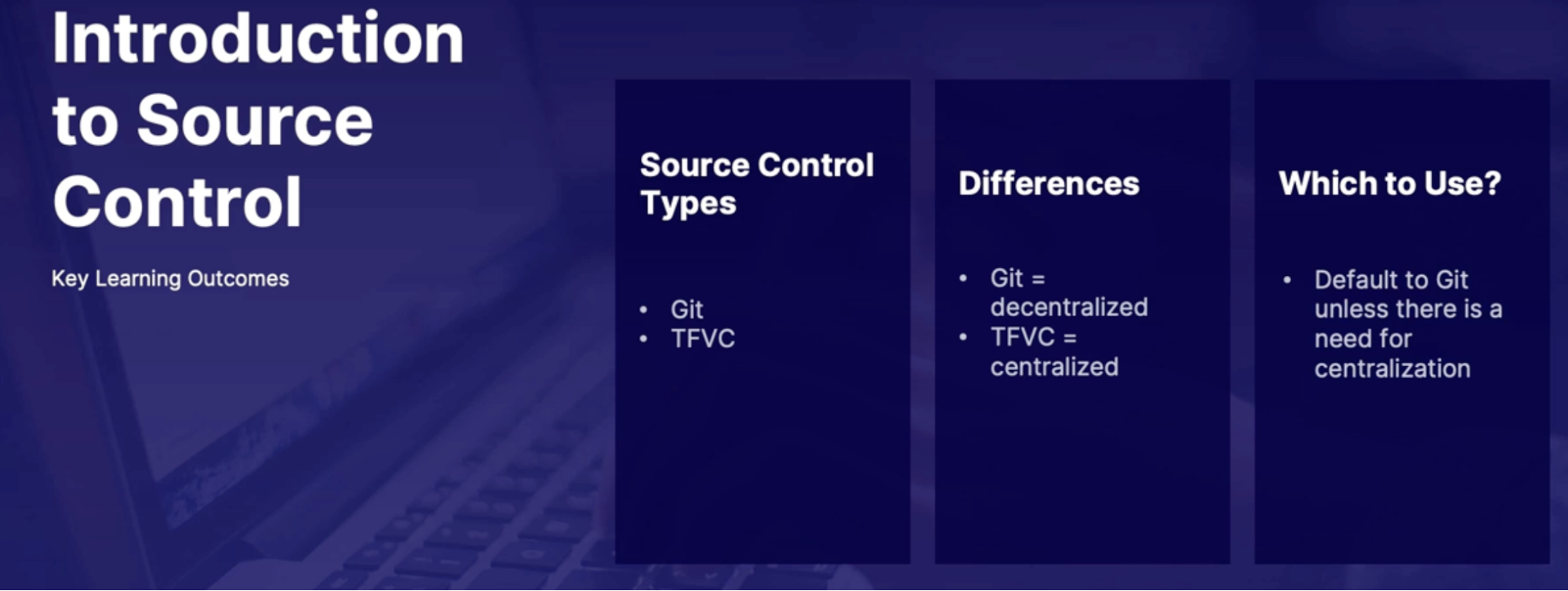

Chap - 4: Developing a modern Source Control Strategy

First step of CI/CD process of source control

Introduction to source control

What is source control

- Also known as source repository/version control (Central source of truth for group code base)

- allows multiple developers to collaborate on code and track changes (critical for any multi developer project)

- example Azure repos, GitHub, BitBucket

Source control types

- Git

- distributed/decentralized

- the deafult preferred option

- Each developer has a copy of the repo on their local machine

- includes all branch and history information

- Each developer checks in the local portion of the code and changes are merged in a central repository

- Team Foundation version control - TFVC

- Centralized /Client-Server

- the non-default option

- developers checkout the only version of each file on local machines(instead of an entire copy of code base)

- checked in code is then pushed to everyone else

Which one to use?

- Git is preferred unless there is a specialized need for centralized version control in TFVC

Summary

- What is source control? the central source of truth for group development

- source control types: Git and TFVC

- primary focus on GIT both inside and outside of Azure repos

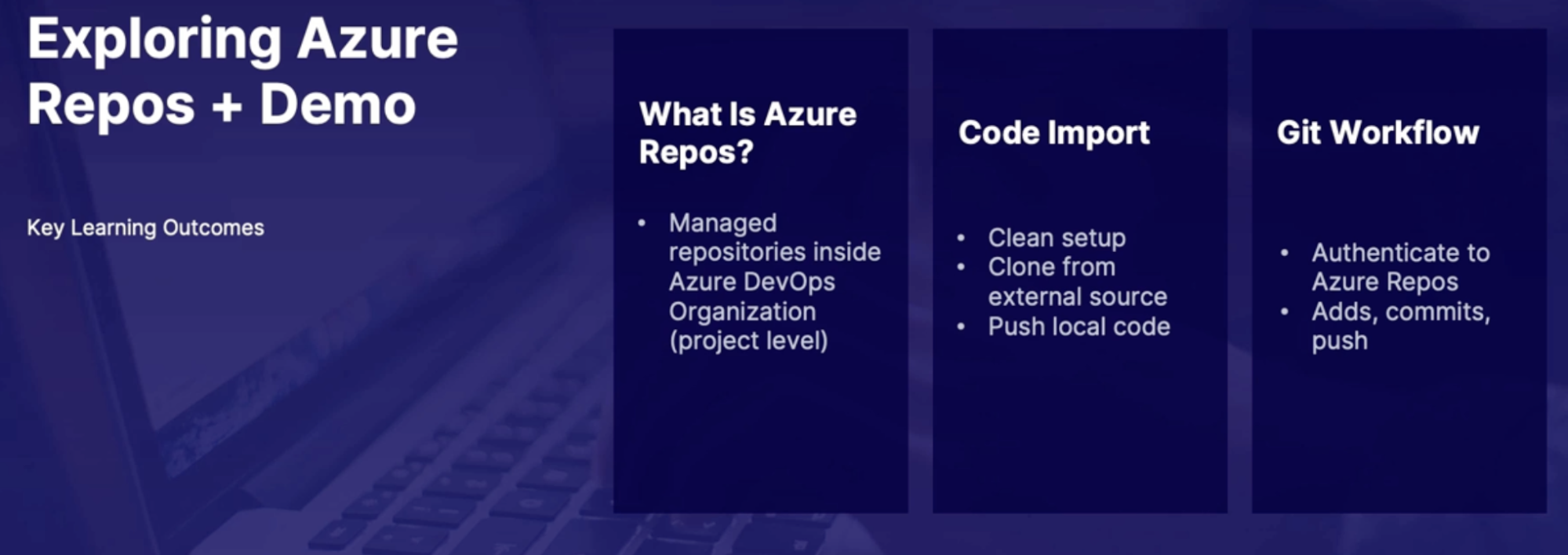

Exploring Azure repos

Azure repos at a glance

- Exist inside of Azure DevOps organization

- Project level

- Supports Git and TFVS

- An optional component for Azure pipelines

- can use external referrals in pipelines

Setting up Azure Repos

- all options involve getting code from somewhere else into Azure repos(Azure repo then becomes the source of truth)

Import options

- set up empty repo from scratch

- clone existing repo into Azure repo(GitHub, another Azure repo)

- push local code base into Azure repo

Supported GIT features

- branching methods

- history

- tagging

- pull request

- and much more // if it works in Git, it works with Azure repo

Summary

- Azure repo overview: managed repositories inside Azure DevOps organization

- import options: start from scratch, import external repo, push local code base

Azure Repos demo and Git workflow

- clone GitHub repo to Azure repo

- Import any public Git repo into Azure repo

- clone Azure repo to local environment and authenticate to Azure repos

- Clone repo

- Generate Git credentials // copy the password

- Clone in VS Code

- Enter password from step

- Update local code copy, and push/pull new changes to/from Azure repos

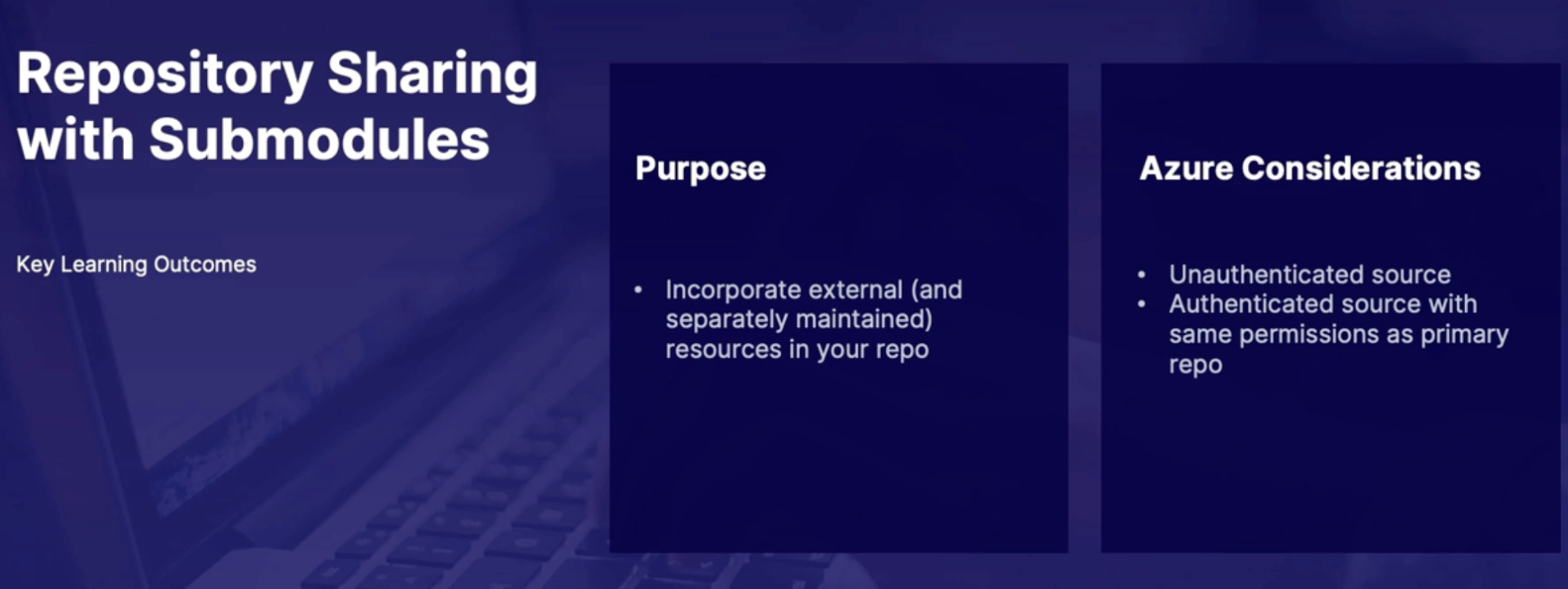

Repository sharing with submodule

Share repository using submodules

What are submodules

scenario

- challenge: Need to incorporate resources from a different GIT project in your current repo

- Examples: third-party library used in your projects

- need to treat external resources as separate entities yet seamlessly included within your project

- Solution: submodules

- not limited to Azure repos, core GIT feature - with an Azure twist

Callout

- contents of the code is maintained by another party, you are simply embedding that code into your own depository, however the updates are being handled by another party

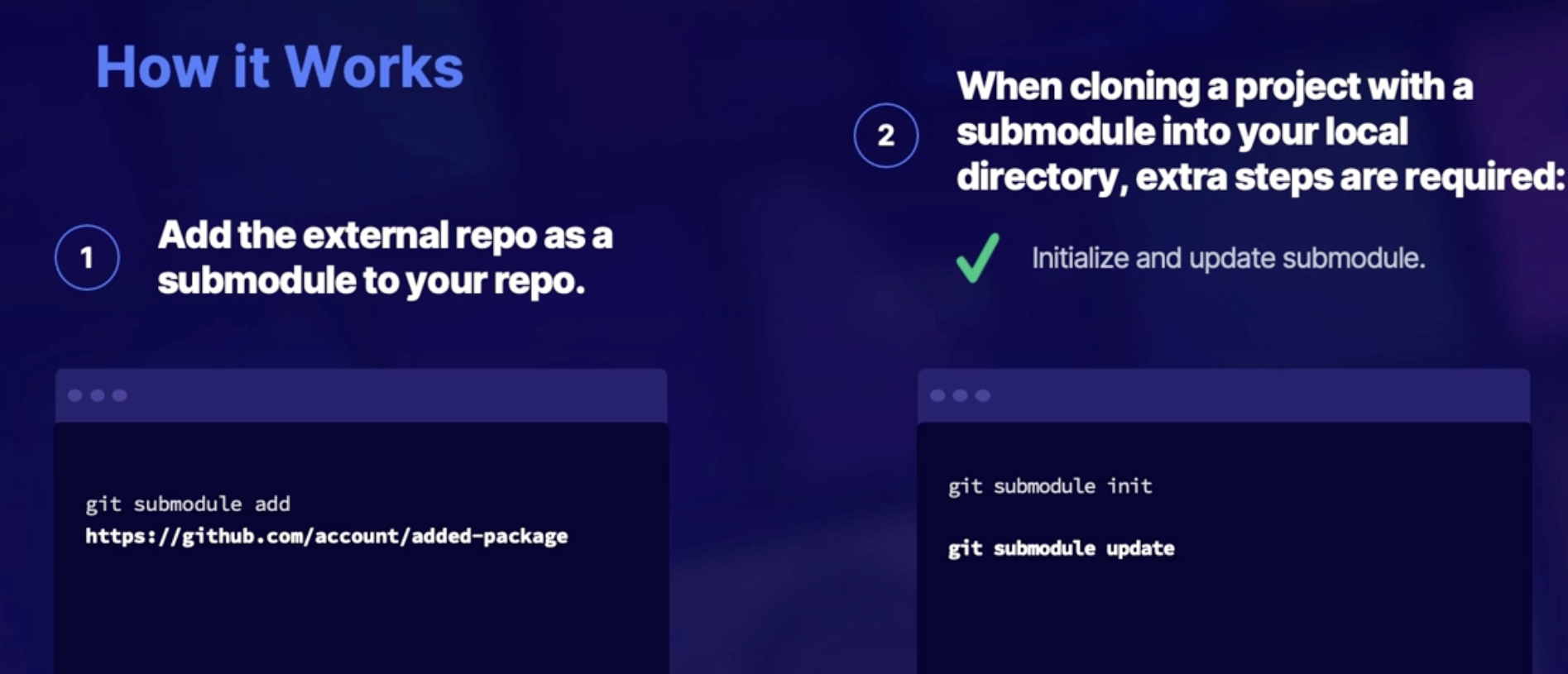

How submodule works

- add the external repo as a submodule to your repo

- when cloning a project with a submodule into your local directories extra steps are required

- initialize an update submodule

Submodules in Azure DevOps

- requirements to include in build pipelines

- Unauthenticated - i.e, publicly available

- Authenticated within your main repository account

- same GitHub organization, Azure organization, etc

- same account to access the primary repo must also be the same one to access the submodule repository

- submodules must be registered via http - not Ssh

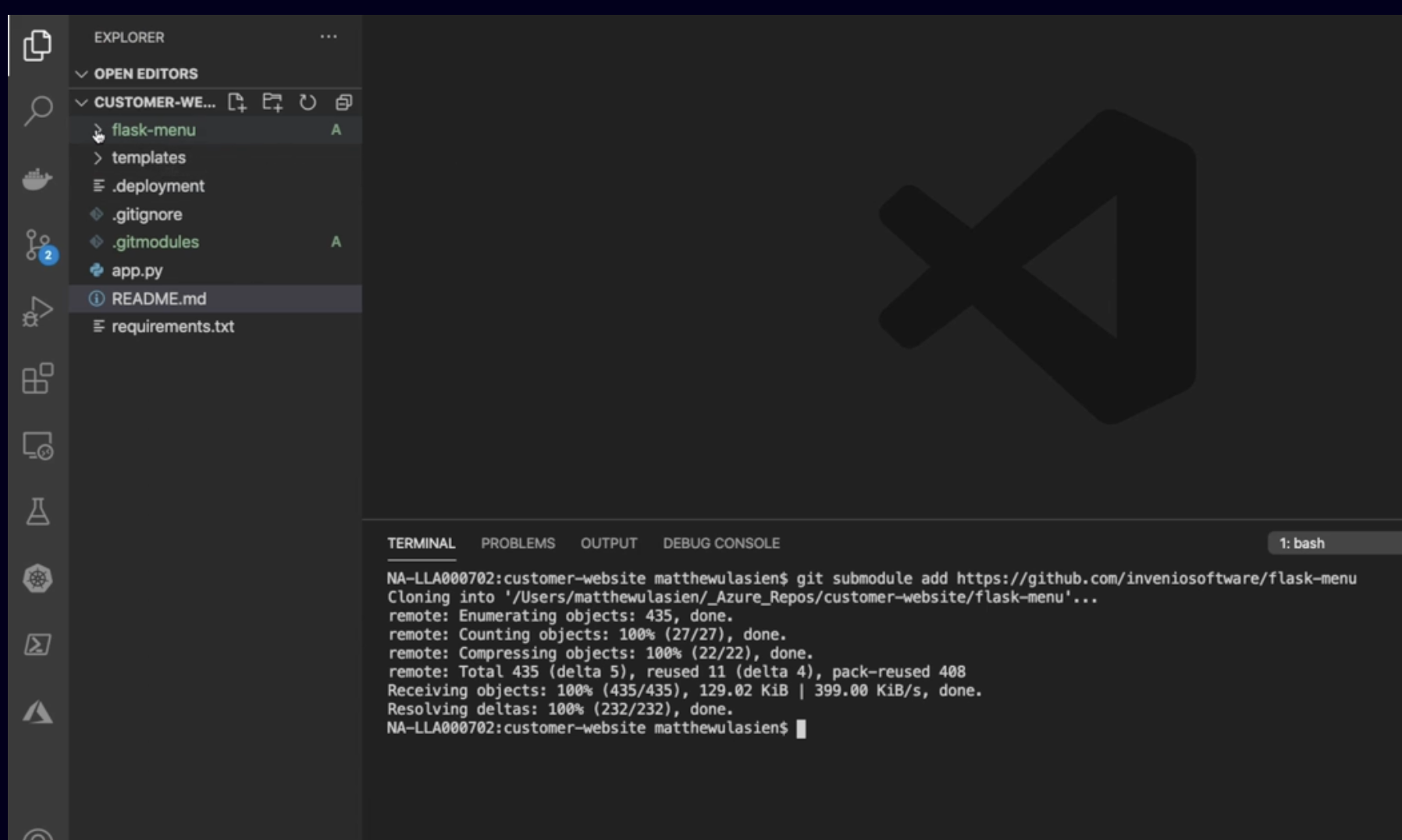

Demo: adding submodule to repo

- Add a submodule to our locally cloned repo

- push updates to Azure repos and view the results

- Once you push changes the file turns to blue and we have S next to it, notifying that its submodule

- if we were to clone and work with this repository onto a new machine, or even to our existing environment,

- we would need to manually update and initialize those submodules on the machine using the submodules init and update commands that we talked about earlier in this lesson.

Summary

- what are submodules? - nested resources posted from external repos

- how do you use submodules - adding new | initializing clone

- authentication with Azure pipelines: unauthenticated or authenticated with the same organization and rates as primary repository

Summary

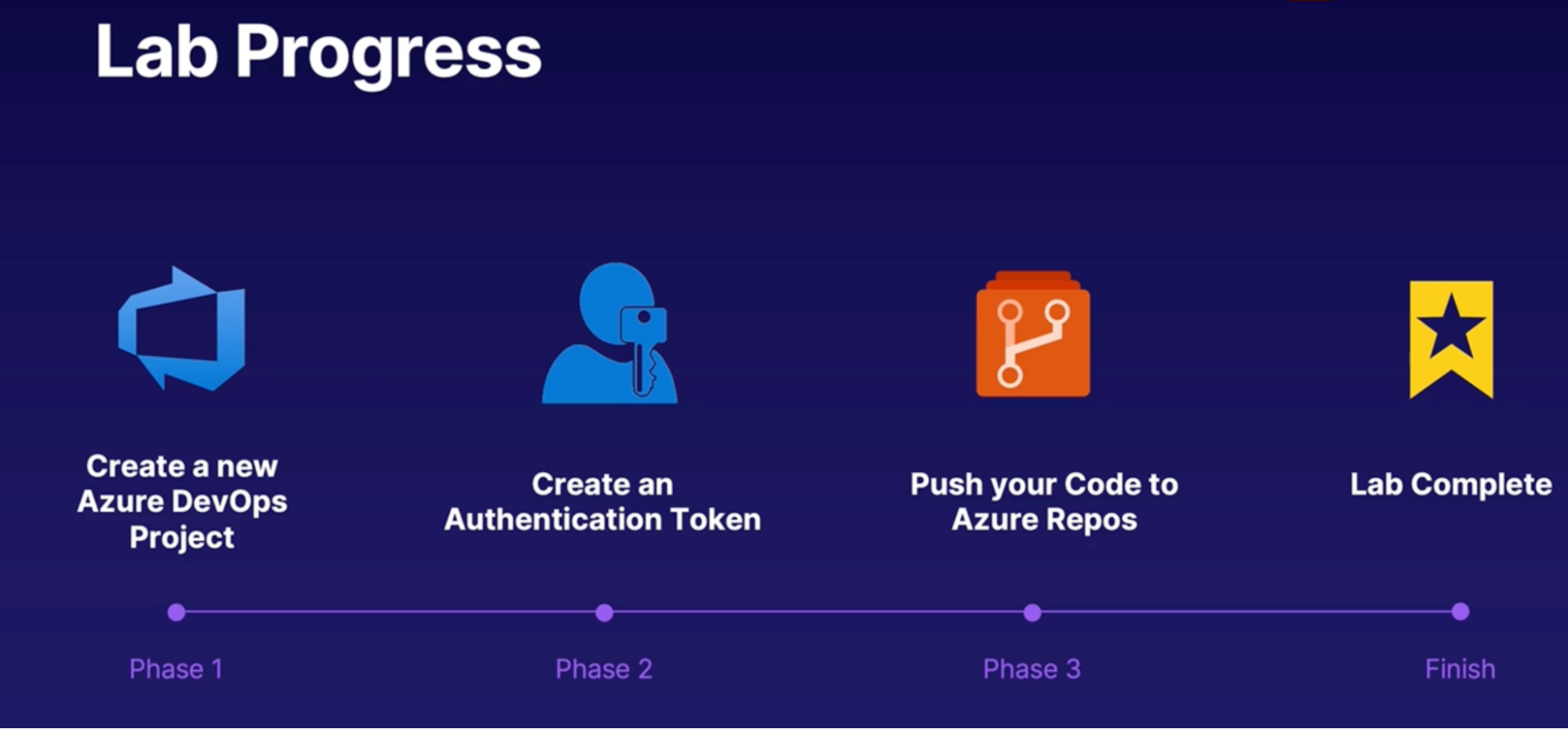

lab: authenticate to Azure repose using an authentication token

Objectives:

- You have just created your first Git repo in Azure DevOps and need to clone that repo down to your local machine.

- You decide the easiest and most secure way to clone the repo is by using an authentication token. After you've created the token, push your code to Azure Repos.

Steps

- Create a new repo from Azure DevOps

- From Repos → Files → Initialize master ranch with README and gitignore

- Add a gitignore: visual studio // will create new repo

- Create personal access tokens

- Create new token

- Set expiration

- Select scope

- Copy token

- Clone repo from Azure repo

- In your local env: use the cloned URL

- Enter password/PAT

Chap - 5: Planning and implementing branching strategies for the source code

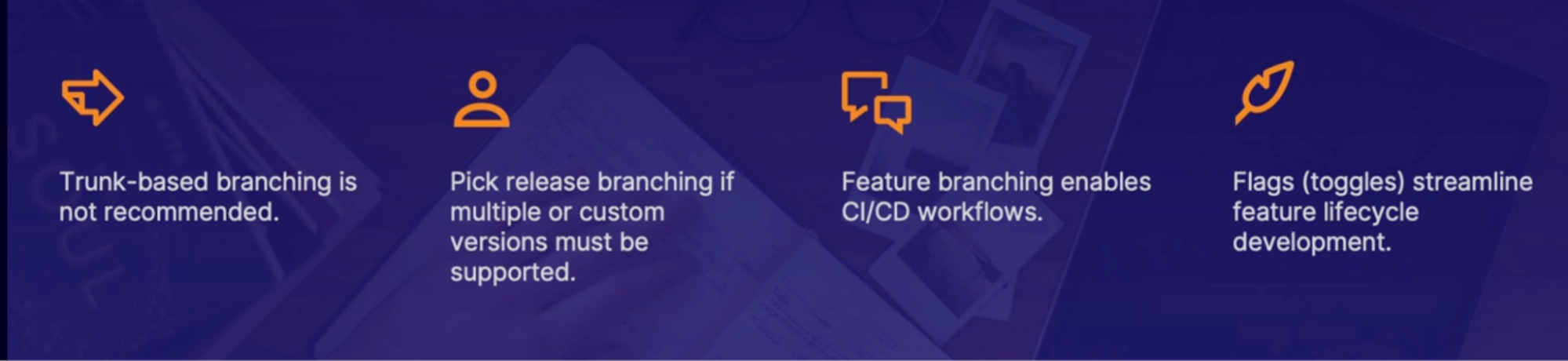

Configure branches

What is a branch

branch is a way in order for you to isolate code, work on it, and bring it back to the main source code.

Branch management

- Branch policies

- Initial safeguard

- Require a minimum number of reviewers: require approval from a specified number of viewers on a pull requests

- Check for linked work items: encourage traceability by checking for linked work items on pull requests

- Check for comment resolution: check to see that all comments have resolved on pull requests